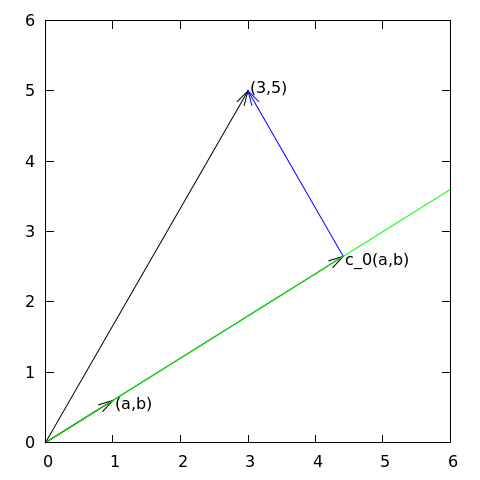

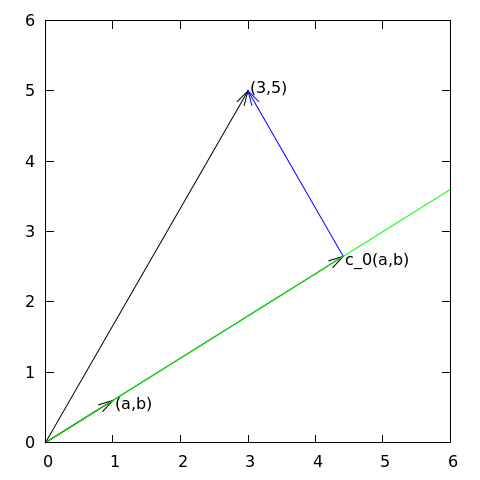

Figure 2: Approximation of a two-dimensional vector by a one-dimensional vector.

We start by introducing two fundamental methods for determining the coefficients \( c_i \) in (1). The methods are first illustrated on approximation of vectors, because vectors in vector spaces give a more intuitive understanding than starting directly with approximation of functions in function spaces. The extension from vectors to functions will be trivial as soon as the fundamental ideas are understood.

The first method of approximation is called the least squares method and consists in finding \( c_i \) such that the difference \( u-f \), measured in some norm, is minimized. That is, we aim at finding the best approximation \( u \) to \( f \) (in some norm). The second method is not as intuitive: we find \( u \) such that the error \( u-f \) is orthogonal to the space where we seek \( u \). This is known as projection, or we may also call it a Galerkin method. When approximating vectors and functions, the two methods are equivalent, but this is no longer the case when applying the principles to differential equations.

Suppose we are given a vector \( \f = (3,5) \) in the \( xy \) plane and that we want to approximate this vector by a vector aligned in the direction of the vector \( (a,b) \). Figure 2 depicts the situation.

We introduce the vector space \( V \) spanned by the vector \( \psib_0=(a,b) \):

$$ \begin{equation} V = \mbox{span}\,\{ \psib_0\}\tp \end{equation} $$ We say that \( \psib_0 \) is a basis vector in the space \( V \). Our aim is to find the vector \( \u = c_0\psib_0\in V \) which best approximates the given vector \( \f = (3,5) \). A reasonable criterion for a best approximation could be to minimize the length of the difference between the approximate \( \u \) and the given \( \f \). The difference, or error \( \e = \f -\u \), has its length given by the norm

$$ \begin{equation*} ||\e|| = (\e,\e)^{\half},\end{equation*} $$ where \( (\e,\e) \) is the inner product of \( \e \) and itself. The inner product, also called scalar product or dot product, of two vectors \( \u=(u_0,u_1) \) and \( \v =(v_0,v_1) \) is defined as

$$ \begin{equation} (\u, \v) = u_0v_0 + u_1v_1\tp \end{equation} $$

Remark 1. We should point out that we use the notation \( (\cdot,\cdot) \) for two different things: \( (a,b) \) for scalar quantities \( a \) and \( b \) means the vector starting at the origin and ending at the point \( (a,b) \), while \( (\u,\v) \) with vectors \( \u \) and \( \v \) means the inner product of these vectors. Since vectors are written in boldface font here, there should be no confusion. We may add that the norm associated with this inner product is the usual Euclidean length of a vector.

Remark 2. It might be wise to refresh some basic linear algebra by consulting a textbook. Exercise 1: Linear algebra refresher I and Exercise 2: Linear algebra refresher II suggest specific tasks to regain familiarity with fundamental operations on inner product vector spaces.

We now want to find \( c_0 \) such that it minimizes \( ||\e|| \). The algebra is simplified if we minimize the square of the norm, \( ||\e||^2 = (\e, \e) \), instead of the norm itself. Define the function

$$ \begin{equation} E(c_0) = (\e,\e) = (\f - c_0\psib_0, \f - c_0\psib_0) \tp \end{equation} $$ We can rewrite the expressions of the right-hand side in a more convenient form for further work:

$$ \begin{equation} E(c_0) = (\f,\f) - 2c_0(\f,\psib_0) + c_0^2(\psib_0,\psib_0)\tp \tag{2} \end{equation} $$ The rewrite results from using the following fundamental rules for inner product spaces:

$$ \begin{equation} (\alpha\u,\v)=\alpha(\u,\v),\quad \alpha\in\Real, \tag{3} \end{equation} $$

$$ \begin{equation} (\u +\v,\w) = (\u,\w) + (\v, \w), \tag{4} \end{equation} $$

$$ \begin{equation} (\u, \v) = (\v, \u)\tp \tag{5} \end{equation} $$
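For readers who want the intermediate steps, the expansion of \( E(c_0) \) can be sketched as follows. Rules (3) and (4) express linearity in the first argument; linearity in the second argument is assumed to follow by combining them with the symmetry rule (5), which also merges the two cross terms in the last step:

$$ \begin{align*} (\f - c_0\psib_0, \f - c_0\psib_0) &= (\f, \f - c_0\psib_0) - c_0(\psib_0, \f - c_0\psib_0)\\ &= (\f,\f) - c_0(\f,\psib_0) - c_0(\psib_0,\f) + c_0^2(\psib_0,\psib_0)\\ &= (\f,\f) - 2c_0(\f,\psib_0) + c_0^2(\psib_0,\psib_0)\tp \end{align*} $$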

Minimizing \( E(c_0) \) implies finding \( c_0 \) such that

$$ \begin{equation*} \frac{\partial E}{\partial c_0} = 0\tp \end{equation*} $$ Differentiating (2) with respect to \( c_0 \) gives

$$ \begin{equation} \frac{\partial E}{\partial c_0} = -2(\f,\psib_0) + 2c_0 (\psib_0,\psib_0) \tp \tag{6} \end{equation} $$ Setting the above expression equal to zero and solving for \( c_0 \) gives

$$ \begin{equation} c_0 = \frac{(\f,\psib_0)}{(\psib_0,\psib_0)}, \tag{7} \end{equation} $$ which in the present case with \( \psib_0=(a,b) \) results in

$$ \begin{equation} c_0 = \frac{3a + 5b}{a^2 + b^2}\tp \end{equation} $$
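As a concrete illustration, the formula can be evaluated for one particular direction. The sketch below assumes \( (a,b)=(2,1) \), a value chosen only for this example, and uses exact rational arithmetic from the Python standard library; the helper names `inner` and `E` are hypothetical and not part of the text.

```python
from fractions import Fraction

def inner(u, v):
    """Euclidean inner product (u, v) = u_0*v_0 + u_1*v_1 + ..."""
    return sum(ui * vi for ui, vi in zip(u, v))

def E(c0, f, psi0):
    """Squared error E(c_0) = (f - c_0*psi_0, f - c_0*psi_0)."""
    e = [fi - c0 * pi for fi, pi in zip(f, psi0)]
    return inner(e, e)

f = (Fraction(3), Fraction(5))
psi0 = (Fraction(2), Fraction(1))  # assumed direction (a, b) = (2, 1)

# Formula (7): c_0 = (f, psi_0) / (psi_0, psi_0)
c0 = inner(f, psi0) / inner(psi0, psi0)
u = tuple(c0 * p for p in psi0)    # the approximation u = c_0*psi_0

print("c0 =", c0)
print("u =", u)
print("E(c0) =", E(c0, f, psi0))

# Perturbing c_0 in either direction should increase the squared error
delta = Fraction(1, 10)
print("E(c0 + delta) =", E(c0 + delta, f, psi0))
print("E(c0 - delta) =", E(c0 - delta, f, psi0))
```

With these assumed numbers, \( c_0 = (3\cdot 2 + 5\cdot 1)/(2^2 + 1^2) = 11/5 \), and the two perturbed values of \( E \) are both larger than \( E(c_0) \), as expected at a minimum.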

For later use, note that setting the expression (6) equal to zero can be rewritten as

$$ (\f-c_0\psib_0,\psib_0) = 0, $$ or

$$ \begin{equation} (\e, \psib_0) = 0 \tp \tag{8} \end{equation} $$

We shall now show that minimizing \( ||\e||^2 \) implies that \( \e \) is orthogonal to any vector \( \v \) in the space \( V \). This result is visually quite clear from Figure 2 (think of other vectors along the line \( (a,b) \): all of them will lead to a larger distance between the approximation and \( \f \)). To see this result mathematically, we express any \( \v\in V \) as \( \v=s\psib_0 \) for some scalar parameter \( s \), recall that two vectors are orthogonal when their inner product vanishes, and calculate the inner product, inserting \( c_0 \) from (7): $$ \begin{align*} (\e, s\psib_0) &= (\f - c_0\psib_0, s\psib_0)\\ &= (\f,s\psib_0) - (c_0\psib_0, s\psib_0)\\ &= s(\f,\psib_0) - sc_0(\psib_0, \psib_0)\\ &= s(\f,\psib_0) - s\frac{(\f,\psib_0)}{(\psib_0,\psib_0)}(\psib_0,\psib_0)\\ &= s\left( (\f,\psib_0) - (\f,\psib_0)\right)\\ &=0\tp \end{align*} $$ Therefore, instead of minimizing the square of the norm, we could demand that \( \e \) is orthogonal to any vector in \( V \). This method is known as projection, because it is the same as projecting the vector \( \f \) onto the subspace \( V \). (The approach can also be referred to as a Galerkin method, as explained at the end of the section Approximation of general vectors.)

Mathematically the projection method is stated by the equation

$$ \begin{equation} (\e, \v) = 0,\quad\forall\v\in V\tp \tag{9} \end{equation} $$ An arbitrary \( \v\in V \) can be expressed as \( s\psib_0 \), \( s\in\Real \), and therefore (9) implies

$$ \begin{equation*} (\e,s\psib_0) = s(\e, \psib_0) = 0,\end{equation*} $$ which means that the error must be orthogonal to the basis vector in the space \( V \):

$$ \begin{equation*} (\e, \psib_0)=0\quad\hbox{or}\quad (\f - c_0\psib_0, \psib_0)=0 \tp \end{equation*} $$ The latter equation gives (7) and it also arose from least squares computations in (8).
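The orthogonality condition can be checked numerically. The following minimal sketch again assumes \( (a,b)=(2,1) \) purely for illustration, and the helper `inner` and the trial value `c_bad` are hypothetical names introduced here. It computes \( c_0 \) from (7), forms the error, and evaluates \( (\e, s\psib_0) \) for a few arbitrary scalars \( s \).

```python
from fractions import Fraction

def inner(u, v):
    """Euclidean inner product of two vectors given as sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))

f = (Fraction(3), Fraction(5))
psi0 = (Fraction(2), Fraction(1))  # assumed direction (a, b) = (2, 1)

# c_0 from (7), and the corresponding error e = f - c_0*psi_0
c0 = inner(f, psi0) / inner(psi0, psi0)
e = tuple(fi - c0 * pi for fi, pi in zip(f, psi0))

# (e, s*psi_0) for a few arbitrarily chosen scalars s
scalars = (-3, Fraction(1, 2), 1, 7)
products = [inner(e, tuple(s * p for p in psi0)) for s in scalars]
print("(e, s*psi0) for the optimal c0:", products)

# For comparison: another coefficient gives an error that is not orthogonal
c_bad = Fraction(2)
e_bad = tuple(fi - c_bad * pi for fi, pi in zip(f, psi0))
print("(e_bad, psi0) =", inner(e_bad, psi0))
```

All inner products in `products` vanish for the optimal coefficient, while the error belonging to the trial value `c_bad` has a nonzero inner product with \( \psib_0 \).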

Let us generalize the vector approximation from the previous section to vectors in spaces with arbitrary dimension. Given some vector \( \f \), we want to find the best approximation to this vector in the space

$$ \begin{equation*} V = \hbox{span}\,\{\psib_0,\ldots,\psib_N\} \tp \end{equation*} $$ We assume that the basis vectors \( \psib_0,\ldots,\psib_N \) are linearly independent so that none of them are redundant and the space has dimension \( N+1 \). Any vector \( \u\in V \) can be written as a linear combination of the basis vectors,

$$ \begin{equation*} \u = \sum_{j=0}^N c_j\psib_j,\end{equation*} $$ where \( c_j\in\Real \) are scalar coefficients to be determined.

Now we want to find \( c_0,\ldots,c_N \), such that \( \u \) is the best approximation to \( \f \) in the sense that the distance (error) \( \e = \f - \u \) is minimized. Again, we define the squared distance as a function of the free parameters \( c_0,\ldots,c_N \),

$$ \begin{align} E(c_0,\ldots,c_N) &= (\e,\e) = (\f -\sum_jc_j\psib_j,\f -\sum_jc_j\psib_j) \nonumber\\ &= (\f,\f) - 2\sum_{j=0}^N c_j(\f,\psib_j) + \sum_{p=0}^N\sum_{q=0}^N c_pc_q(\psib_p,\psib_q)\tp \tag{10} \end{align} $$ Minimizing this \( E \) with respect to the independent variables \( c_0,\ldots,c_N \) is obtained by requiring

$$ \begin{equation*} \frac{\partial E}{\partial c_i} = 0,\quad i=0,\ldots,N \tp \end{equation*} $$ The second term in (10) is differentiated as follows:

$$ \begin{equation} \frac{\partial}{\partial c_i} \sum_{j=0}^N c_j(\f,\psib_j) = (\f,\psib_i), \end{equation} $$ since the expression to be differentiated is a sum and only one term, \( c_i(\f,\psib_i) \), contains \( c_i \) and this term is linear in \( c_i \). To understand this differentiation in detail, write out the sum specifically for, e.g., \( N=3 \) and \( i=1 \).
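Written out for \( N=3 \) and \( i=1 \), the sum has four terms, and only the second one depends on \( c_1 \):

$$ \begin{equation*} \frac{\partial}{\partial c_1}\left( c_0(\f,\psib_0) + c_1(\f,\psib_1) + c_2(\f,\psib_2) + c_3(\f,\psib_3)\right) = (\f,\psib_1)\tp \end{equation*} $$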

The last term in (10) is more tedious to differentiate. We start with

$$ \begin{align} \frac{\partial}{\partial c_i} c_pc_q = \left\lbrace\begin{array}{ll} 0, & \hbox{ if } p\neq i\hbox{ and } q\neq i,\\ c_q, & \hbox{ if } p=i\hbox{ and } q\neq i,\\ c_p, & \hbox{ if } p\neq i\hbox{ and } q=i,\\ 2c_i, & \hbox{ if } p=q= i,\\ \end{array}\right. \end{align} $$ Then

$$ \begin{equation*} \frac{\partial}{\partial c_i} \sum_{p=0}^N\sum_{q=0}^N c_pc_q(\psib_p,\psib_q) = \sum_{p=0, p\neq i}^N c_p(\psib_p,\psib_i) + \sum_{q=0, q\neq i}^N c_q(\psib_q,\psib_i) +2c_i(\psib_i,\psib_i)\tp \end{equation*} $$ The last term can be included in the other two sums, resulting in

$$ \begin{equation} \frac{\partial}{\partial c_i} \sum_{p=0}^N\sum_{q=0}^N c_pc_q(\psib_p,\psib_q) = 2\sum_{j=0}^N c_j(\psib_j,\psib_i)\tp \end{equation} $$ It then follows that setting

$$ \begin{equation*} \frac{\partial E}{\partial c_i} = 0,\quad i=0,\ldots,N,\end{equation*} $$ leads to a linear system for \( c_0,\ldots,c_N \):

$$ \begin{equation} \sum_{j=0}^N A_{i,j} c_j = b_i, \quad i=0,\ldots,N, \tag{11} \end{equation} $$ where

$$ \begin{align} A_{i,j} &= (\psib_i,\psib_j),\\ b_i &= (\psib_i, \f)\tp \end{align} $$ We have changed the order of the two vectors in the inner product according to (5):

$$ A_{i,j} = (\psib_j,\psib_i) = (\psib_i,\psib_j),$$ simply because the sequence \( i \)-\( j \) looks more aesthetic.
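The linear system (11) is straightforward to set up and solve in code. The sketch below is one possible way to do it in pure Python with exact fractions; the function names `inner`, `solve`, and `least_squares`, as well as the vector \( \f \) and the two basis vectors in three-dimensional space, are assumptions made only for this example. The small Gauss-Jordan solver stands in for any linear solver and relies on the basis vectors being linearly independent.

```python
from fractions import Fraction

def inner(u, v):
    """Euclidean inner product of two vectors given as sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))

def solve(A, b):
    """Solve A c = b by Gauss-Jordan elimination (A assumed nonsingular)."""
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for k in range(n):
        # Swap in a row with a nonzero pivot in column k
        p = next(r for r in range(k, n) if M[r][k] != 0)
        M[k], M[p] = M[p], M[k]
        pivot = M[k][k]
        M[k] = [x / pivot for x in M[k]]
        # Eliminate column k from all other rows
        for r in range(n):
            if r != k:
                factor = M[r][k]
                M[r] = [x - factor * y for x, y in zip(M[r], M[k])]
    return [M[i][n] for i in range(n)]

def least_squares(f, psi):
    """Coefficients c_j such that sum_j c_j*psi_j best approximates f."""
    A = [[inner(pi, pj) for pj in psi] for pi in psi]  # A_ij = (psi_i, psi_j)
    b = [inner(pi, f) for pi in psi]                   # b_i = (psi_i, f)
    return solve(A, b)

# Illustrative data (assumed): approximate f in the span of two vectors
f = [Fraction(3), Fraction(5), Fraction(2)]
psi = [[Fraction(1), Fraction(0), Fraction(0)],
       [Fraction(1), Fraction(1), Fraction(0)]]

c = least_squares(f, psi)
u = [sum(cj * pj[k] for cj, pj in zip(c, psi)) for k in range(len(f))]
print("c =", c)
print("u =", u)
```

For this data the two basis vectors span the plane of vectors with vanishing third component, so the best approximation is \( \f \) with its third component removed, \( \u = (3,5,0) \), obtained with \( c_0=-2 \) and \( c_1=5 \).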

In analogy with the "one-dimensional" example in the section Approximation of planar vectors, it holds also here in the general case that minimizing the distance (error) \( \e \) is equivalent to demanding that \( \e \) is orthogonal to all \( \v\in V \):

$$ \begin{equation} (\e,\v)=0,\quad \forall\v\in V\tp \tag{12} \end{equation} $$ Since any \( \v\in V \) can be written as \( \v =\sum_{i=0}^N c_i\psib_i \), the statement (12) is equivalent to saying that

$$ \begin{equation*} (\e, \sum_{i=0}^N c_i\psib_i) = 0,\end{equation*} $$ for any choice of coefficients \( c_0,\ldots,c_N \). The latter equation can be rewritten as

$$ \begin{equation*} \sum_{i=0}^N c_i (\e,\psib_i) =0\tp \end{equation*} $$ If this is to hold for arbitrary values of \( c_0,\ldots,c_N \) we must require that each term in the sum vanishes,

$$ \begin{equation} (\e,\psib_i)=0,\quad i=0,\ldots,N\tp \tag{13} \end{equation} $$ These \( N+1 \) equations result in the same linear system as (11):

$$ (\f - \sum_{j=0}^N c_j\psib_j, \psib_i) = (\f, \psib_i) - \sum_{j=0}^N (\psib_i,\psib_j)c_j = 0,$$ and hence

$$ \sum_{j=0}^N (\psib_i,\psib_j)c_j = (\f, \psib_i),\quad i=0,\ldots, N \tp $$ So, instead of differentiating the \( E(c_0,\ldots,c_N) \) function, we could simply use (12) as the principle for determining \( c_0,\ldots,c_N \), resulting in the \( N+1 \) equations (13).
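The projection principle can be verified on a small example. The sketch below assumes a vector \( \f \) in three-dimensional space and two basis vectors chosen only for illustration; the \( 2\times 2 \) system is solved with Cramer's rule to keep the code short, and the helper name `inner` is hypothetical. It then checks (13) and the orthogonality to one arbitrarily chosen \( \v\in V \).

```python
from fractions import Fraction

def inner(u, v):
    """Euclidean inner product of two vectors given as sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))

# Illustrative data (assumed)
f = [Fraction(3), Fraction(5), Fraction(2)]
psi = [[Fraction(1), Fraction(0), Fraction(0)],
       [Fraction(1), Fraction(1), Fraction(0)]]

# The 2x2 linear system sum_j (psi_i, psi_j) c_j = (f, psi_i)
A = [[inner(pi, pj) for pj in psi] for pi in psi]
b = [inner(f, pi) for pi in psi]

# Cramer's rule for a 2x2 system
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
c = [(b[0] * A[1][1] - A[0][1] * b[1]) / det,
     (A[0][0] * b[1] - b[0] * A[1][0]) / det]

# Approximation u and error e = f - u
u = [sum(cj * pj[k] for cj, pj in zip(c, psi)) for k in range(len(f))]
e = [fk - uk for fk, uk in zip(f, u)]

# Condition (13): (e, psi_i) = 0 for every basis vector
residuals = [inner(e, pi) for pi in psi]
print("(e, psi_i) =", residuals)

# Condition (12) for one arbitrarily chosen v = 4*psi_0 - 9*psi_1 in V
v = [4 * p0 - 9 * p1 for p0, p1 in zip(*psi)]
print("(e, v) =", inner(e, v))
```

Both checks give zero here: the error \( \e=(0,0,2) \) points out of the plane spanned by the two basis vectors and is therefore orthogonal to every vector in \( V \).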

The names least squares method or least squares approximation are natural since the calculations consist of minimizing \( ||\e||^2 \), and \( ||\e||^2 \) is a sum of squares of differences between the components in \( \f \) and \( \u \). We find \( \u \) such that this sum of squares is minimized.

The principle (12), or the equivalent form (13), is known as projection. Almost the same mathematical idea was used by the Russian mathematician Boris Galerkin to solve differential equations, resulting in what is widely known as Galerkin's method.