Solving ordinary differential equations

Differential equations constitute one of the most powerful mathematical tools to understand and predict the behavior of dynamical systems in nature, engineering, and society. A dynamical system is a system with some state, usually expressed by a set of variables, that evolves in time. An oscillating pendulum, the spreading of a disease, and the weather are all examples of dynamical systems. We can use basic laws of physics, or plain intuition, to express mathematical rules that govern the evolution of the system in time. These rules take the form of differential equations. You are probably well acquainted with equations, at least equations like \(ax+b=0\) or \(ax^2 + bx + c=0\). Such equations are known as algebraic equations, and the unknown is a number. The unknown in a differential equation is a function, and a differential equation will almost always involve this function and one or more derivatives of the function. For example, \(f'(x)=f(x)\) is a simple differential equation (it asks whether there is any function \(f\) that equals its own derivative; you might remember that \(e^x\) is a candidate).

The present chapter starts by explaining how easy it is to solve both single (scalar) first-order ordinary differential equations and systems of first-order differential equations by the Forward Euler method. We demonstrate all the mathematical and programming details through two specific applications: population growth and spreading of diseases.

Then we turn to a physical application: oscillating mechanical systems, which arise in a wide range of engineering situations. The differential equation is now of second order, and the Forward Euler method does not perform well. This observation motivates the need for other solution methods, and we derive the Euler-Cromer scheme [1], the 2nd- and 4th-order Runge-Kutta schemes, as well as a finite difference scheme (the latter to handle the second-order differential equation directly without reformulating it as a first-order system). The presentation starts with undamped free oscillations and then treats general oscillatory systems with possibly nonlinear damping, nonlinear spring forces, and arbitrary external excitation. Besides developing programs from scratch, we also demonstrate how to access ready-made implementations of more advanced differential equation solvers in Matlab.

| [1] | The term scheme is used as a synonym for method or computational recipe, especially in the context of numerical methods for differential equations. |

As we progress with more advanced methods, we develop more sophisticated and reusable programs. In particular, we incorporate good testing strategies so that we can provide solid evidence that the computations are correct. Consequently, the learning curve is very gentle at the beginning, with the population growth and disease modeling examples, and becomes significantly steeper towards the end of the treatment of differential equations for oscillatory systems.

Population growth

Our first taste of differential equations concerns modeling the growth of some population, such as a cell culture, an animal population, or a human population. The ideas even extend trivially to growth of money in a bank. Let \(N(t)\) be the number of individuals in the population at time \(t\). How can we predict the evolution of \(N(t)\) in time? Below we shall derive a differential equation whose solution is \(N(t)\). The equation reads

\[ N'(t) = rN(t), \tag{29} \]

where \(r\) is a number. Note that although \(N\) is an integer in real life, we model \(N\) as a real-valued function. We are forced to do this because the solutions of differential equations are (normally continuous) real-valued functions. An integer-valued \(N(t)\) in the model would lead to a lot of mathematical difficulties.

With a bit of guessing, you may realize that \(N(t)=Ce^{rt}\) is a solution, where \(C\) is any number. To make this solution unique, we need to fix \(C\), which is done by prescribing the value of \(N\) at some time, usually \(t=0\). Say \(N(0)\) is given as \(N_0\). Then \(N(t)=N_0e^{rt}\).
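This claim can be checked numerically with a short Matlab sketch. It approximates \(N'(t)\) by a centered difference at a few times and checks that \(N'(t)-rN(t)\) is negligible relative to \(N(t)\). The values \(N_0=100\) and \(r=0.1\), the sample times, and the step h are illustrative assumptions. They do not come from a program in this chapter.

```matlab
% Sanity check (sketch): does N(t) = N_0*exp(r*t) satisfy N' = r*N?
N_0 = 100;            % illustrative initial population (assumed value)
r = 0.1;              % illustrative growth rate (assumed value)
N = @(t) N_0*exp(r*t);

h = 1e-4;             % step for the centered difference approximation of N'
t = [0 5 10 20];      % a few sample times (assumed)
dNdt = (N(t + h) - N(t - h))/(2*h);   % approximates N'(t)
residual = dNdt - r*N(t);             % should be (nearly) zero
rel_residual = max(abs(residual)./N(t));
fprintf('max relative residual: %g\n', rel_residual);
```

The centered difference is used here only because it is more accurate than the forward difference introduced later. The small residual that remains comes from the difference approximation and from rounding errors.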

In general, a differential equation model consists of a differential equation, such as (29) and an initial condition, such as \(N(0)=N_0\). With a known initial condition, the differential equation can be solved for the unknown function and the solution is unique.

It is, of course, very seldom that we can find the solution of a differential equation as easily as in this example. Normally, one has to apply certain mathematical methods, but these can only handle some of the simplest differential equations. However, we can easily deal with almost any differential equation by applying numerical methods and a bit of programming. This is exactly the topic of the present chapter.

Derivation of the model

It can be instructive to show how an equation like (29) arises. Consider some population of (say) an animal species and let \(N(t)\) be the number of individuals in a certain spatial region, e.g. an island. We are not concerned with the spatial distribution of the animals, just the number of them in some spatial area where there is no exchange of individuals with other spatial areas. During a time interval \(\Delta t\), some animals will die and some new ones will be born. The numbers of deaths and births are expected to be proportional to \(N\). For example, if there are twice as many individuals, we expect them to get twice as many newborns. In a time interval \(\Delta t\), the net growth of the population will be

\[ N(t+\Delta t) - N(t) = \bar bN(t) - \bar dN(t), \]

where \(\bar bN(t)\) is the number of newborns and \(\bar d N(t)\) is the number of deaths. If we double \(\Delta t\), we expect the proportionality constants \(\bar b\) and \(\bar d\) to double too, so it makes sense to think of \(\bar b\) and \(\bar d\) as proportional to \(\Delta t\) and “factor out” \(\Delta t\). That is, we introduce \(b=\bar b/\Delta t\) and \(d=\bar d/\Delta t\) to be proportionality constants for newborns and deaths independent of \(\Delta t\). Also, we introduce \(r=b-d\), which is the net rate of growth of the population per time unit. Our model then becomes

\[ N(t+\Delta t) - N(t) = \Delta t\, rN(t). \tag{30} \]

Equation (30) is actually a computational model. Given \(N(t)\), we can advance the population size by

\[ N(t+\Delta t) = N(t) + \Delta t\, rN(t). \]

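This is called a difference equation. If we know \(N(t)\) for some \(t\), e.g., \(N(0)=N_0\), we can compute

\[ \begin{aligned} N(\Delta t) &= N_0 + \Delta t\, rN_0,\\ N(2\Delta t) &= N(\Delta t) + \Delta t\, rN(\Delta t),\\ N(3\Delta t) &= N(2\Delta t) + \Delta t\, rN(2\Delta t),\\ &\;\;\vdots\\ N((k+1)\Delta t) &= N(k\Delta t) + \Delta t\, rN(k\Delta t), \end{aligned} \]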

where \(k\) is some arbitrary integer. A computer program can easily compute \(N((k+1)\Delta t)\) for us with the aid of a little loop.

Warning

Observe that the computational formula cannot be started unless we have an initial condition!

The solution of \(N'=rN\) is \(N=Ce^{rt}\) for any constant \(C\), and the initial condition is needed to fix \(C\) so the solution becomes unique. From a mathematical point of view, however, knowing \(N(t)\) at any single point \(t\) is sufficient as an initial condition. Numerically, we need an initial condition in a more literal sense: a starting value at the left end of the interval to get the computational formula going.

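In fact, we do not need a computer, since we see a repetitive pattern when doing hand calculations, which leads us to a mathematical formula for \(N((k+1)\Delta t)\):

\[ \begin{aligned} N((k+1)\Delta t) &= N(k\Delta t) + \Delta t\, rN(k\Delta t) = N(k\Delta t)(1+\Delta t\, r)\\ &= N((k-1)\Delta t)(1+\Delta t\, r)^2\\ &\;\;\vdots\\ &= N_0(1+\Delta t\, r)^{k+1}. \end{aligned} \]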
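The closed-form pattern can be checked against the loop it summarizes. The sketch below iterates the difference equation and compares every value with \(N_0(1+\Delta t\, r)^{k+1}\). It uses the assumed illustrative values \(N_0=100\), \(r=0.1\), \(\Delta t=0.5\), and 40 steps. The two results should agree up to rounding errors.

```matlab
% Sketch: iterate the difference equation N((k+1)dt) = N(k dt) + dt*r*N(k dt)
% and compare with the closed-form pattern N((k+1)dt) = N_0*(1 + dt*r)^(k+1).
N_0 = 100; r = 0.1; dt = 0.5;   % illustrative values (assumed)
K = 40;                          % number of steps

N = zeros(K+1, 1);
N(1) = N_0;                      % Matlab indexing starts at 1: N(k+1) holds N(k*dt)
for k = 1:K
    N(k+1) = N(k) + dt*r*N(k);   % one step of the difference equation
end

steps = (0:K)';
N_formula = N_0*(1 + dt*r).^steps;             % closed-form expression
max_rel_diff = max(abs(N - N_formula)./N_formula);
fprintf('N(%g) = %g, max relative difference: %g\n', K*dt, N(end), max_rel_diff);
```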

Rather than using (30) as a computational model directly, there is a strong tradition of deriving a differential equation from this difference equation. The idea is to consider a very small time interval \(\Delta t\) and look at the instantaneous growth as this time interval is shrunk to an infinitesimally small size. In mathematical terms, it means that we let \(\Delta t\rightarrow 0\). As (30) stands, letting \(\Delta t\rightarrow 0\) will just produce an equation \(0=0\), so we have to divide by \(\Delta t\) and then take the limit:

\[ \lim_{\Delta t\rightarrow 0}\frac{N(t+\Delta t)-N(t)}{\Delta t} = rN(t). \]

The term on the left-hand side is actually the definition of the derivative \(N'(t)\), so we have

\[ N'(t) = rN(t), \]

which is the corresponding differential equation.

There is nothing in our derivation that forces the parameter \(r\) to be constant; it can change with time due to, e.g., seasonal changes or more permanent environmental changes.

Detour: Exact mathematical solution

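If you have taken a course on mathematical solution methods for differential equations, you may want to recall how an equation like \(N'=rN\) or \(N'=r(t)N\) is solved. The method of separation of variables is the most convenient solution strategy in this case:

\[ \begin{aligned} \frac{dN}{dt} = r(t)N \quad&\Rightarrow\quad \int_{N_0}^{N}\frac{dn}{n} = \int_0^t r(\tau)\,d\tau\\ &\Rightarrow\quad \ln N - \ln N_0 = \int_0^t r(\tau)\,d\tau\\ &\Rightarrow\quad N = N_0\exp{\left(\int_0^t r(\tau)\,d\tau\right)}, \end{aligned} \]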

which for constant \(r\) results in \(N=N_0e^{rt}\). Note that \(\exp{(t)}\) is the same as \(e^t\).

As will be described later, more realistic models require \(r\) to depend on \(N\). Separating the variables in \(N'=r(N)N\) then requires computing the integral \(\int_{N_0}^{N} \frac{dn}{n\,r(n)}\), which quickly becomes non-trivial for many choices of \(r(N)\). The only generally applicable solution approach is therefore a numerical method.

Numerical solution

There is a huge collection of numerical methods for problems like (29), and in general for any equation of the form \(u'=f(u,t)\), where \(u(t)\) is the unknown function in the problem, and \(f\) is some known formula of \(u\) and optionally \(t\). For example, \(f(u,t)=ru\) in (29). We will first present a simple finite difference method for solving \(u'=f(u,t)\). The idea is four-fold:

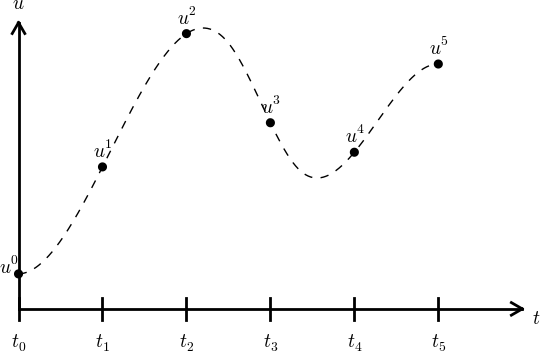

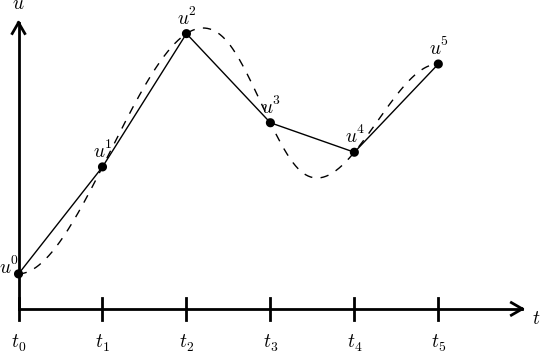

- Introduce a mesh in time with \(N_t+1\) points \(t_0,t_1,\ldots,t_{N_t}\). We seek the unknown \(u\) at the mesh points \(t_n\), and introduce \(u^n\) as the numerical approximation to \(u(t_n)\), see Figure Mesh in time with corresponding discrete values (unknowns).

- Assume that the differential equation is valid at the mesh points.

- Approximate derivatives by finite differences, see Figure Illustration of a forward difference approximation to the derivative.

- Formulate a computational algorithm that can compute a new value \(u^n\) based on previously computed values \(u^i\), \(i<n\).

An example will illustrate the steps. First, we introduce the mesh, and very often the mesh is uniform, meaning that the spacing between points \(t_n\) and \(t_{n+1}\) is constant. This property implies that

\[ t_n = n\Delta t,\quad n=0,1,\ldots,N_t. \]

Second, the differential equation is supposed to hold at the mesh points. Note that this is an approximation, because the differential equation is originally valid at all real values of \(t\). We can express this property mathematically as

\[ u'(t_n) = f(u(t_n), t_n),\quad n=0,1,\ldots,N_t. \]

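For example, with our model equation \(u'=ru\), we have the special case

\[ u'(t_n) = ru(t_n),\quad n=0,1,\ldots,N_t, \]

or

\[ u'(t_n) = r(t_n)u(t_n),\quad n=0,1,\ldots,N_t, \]

if \(r\) depends explicitly on \(t\).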

Third, derivatives are to be replaced by finite differences. To this end, we need to know specific formulas for how derivatives can be approximated by finite differences. One simple possibility is to use the definition of the derivative from any calculus book,

\[ u'(t) = \lim_{\Delta t\rightarrow 0}\frac{u(t+\Delta t)-u(t)}{\Delta t}. \]

At an arbitrary mesh point \(t_n\) this definition can be written as

\[ u'(t_n) = \lim_{\Delta t\rightarrow 0}\frac{u(t_n+\Delta t)-u(t_n)}{\Delta t}. \]

Instead of going to the limit \(\Delta t\rightarrow 0\) we can use a small \(\Delta t\), which yields a computable approximation to \(u'(t_n)\):

\[ u'(t_n) \approx \frac{u^{n+1}-u^n}{\Delta t}. \]

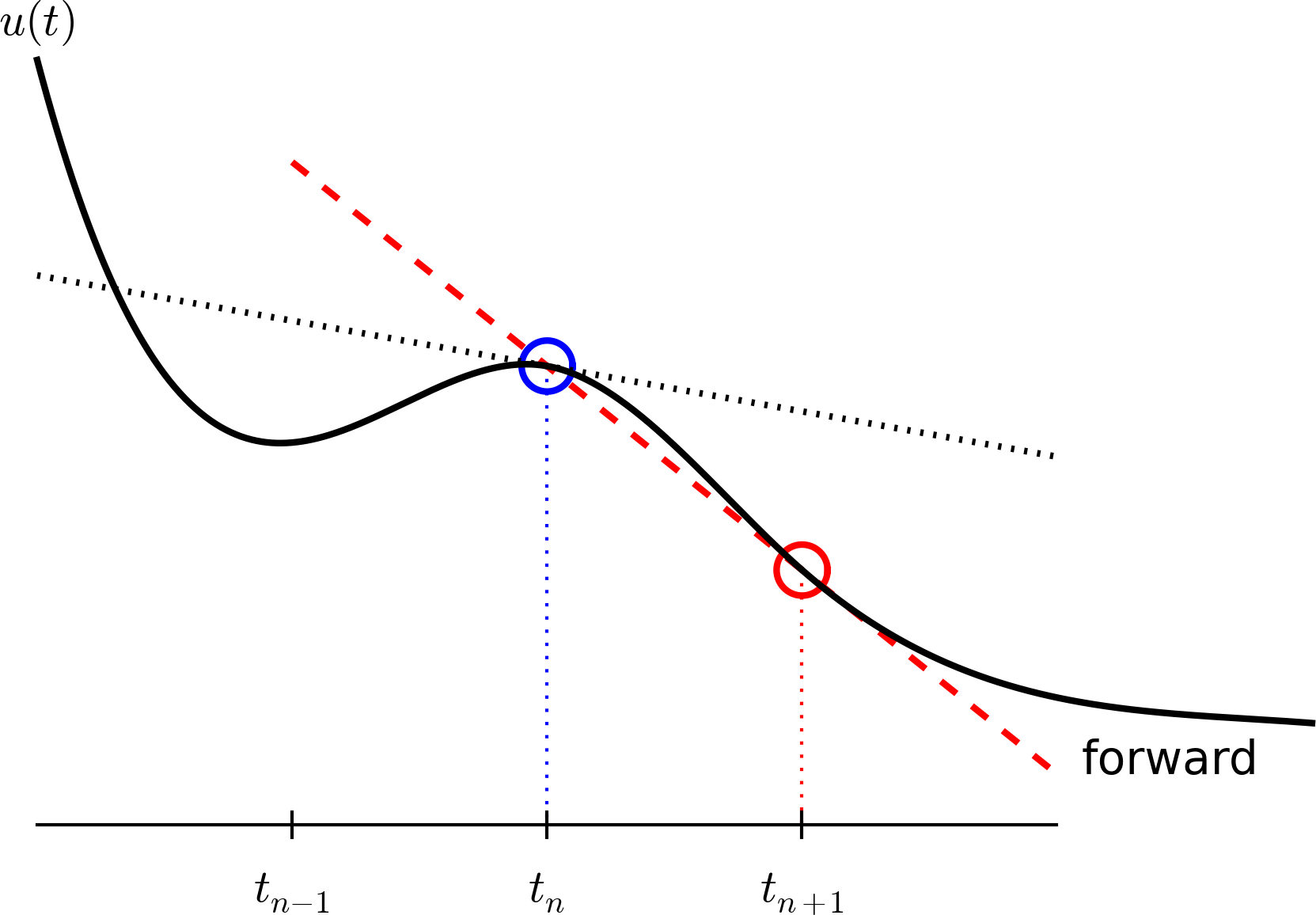

This is known as a forward difference since we go forward in time (\(u^{n+1}\)) to collect information in \(u\) to estimate the derivative. Figure Illustration of a forward difference approximation to the derivative illustrates the idea. The error of the forward difference is proportional to \(\Delta t\) (often written as \({\mathcal{O}(\Delta t)}\), but we will not use this notation in the present book).
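The claim that the error of the forward difference is proportional to \(\Delta t\) can be observed numerically. This sketch uses its own test case: it differentiates \(u(t)=e^t\) at \(t=0\), where the exact derivative is 1, and halves \(\Delta t\) repeatedly. If the error is proportional to \(\Delta t\), each halving should roughly halve the error, so the printed ratios should be close to 2.

```matlab
% Sketch: the forward difference (u(t+dt) - u(t))/dt approximates u'(t)
% with an error roughly proportional to dt. Test function u = exp(t)
% (our own choice), so the exact derivative at t = 0 is 1.
u = @(t) exp(t);
t_n = 0;
exact = 1;
dts = [0.1 0.05 0.025 0.0125];
errors = zeros(size(dts));
for i = 1:numel(dts)
    dt = dts(i);
    approx = (u(t_n + dt) - u(t_n))/dt;   % forward difference
    errors(i) = abs(approx - exact);
end
ratios = errors(1:end-1)./errors(2:end);  % about 2 if the error is ~ dt
disp([dts' errors']);
disp(ratios);
```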

We can now plug in the forward difference in our differential equation sampled at the arbitrary mesh point \(t_n\):

\[ \frac{u^{n+1}-u^n}{\Delta t} = f(u^n, t_n), \tag{31} \]

or with \(f(u,t)=ru\) in our special model problem for population growth,

\[ \frac{u^{n+1}-u^n}{\Delta t} = ru^n. \tag{32} \]

If \(r\) depends on time, we insert \(r(t_n)=r^n\) for \(r\) in this latter equation.

The fourth step is to derive a computational algorithm. Looking at (31), we realize that if \(u^n\) is known, we can easily solve with respect to \(u^{n+1}\) to get a formula for \(u\) at the next time level \(t_{n+1}\):

\[ u^{n+1} = u^n + \Delta t\, f(u^n, t_n). \tag{33} \]

Provided we have a known starting value, \(u^0=U_0\), we can use (33) to advance the solution by first computing \(u^1\) from \(u^0\), then \(u^2\) from \(u^1\), \(u^3\) from \(u^2\), and so forth.

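Such an algorithm is called a numerical scheme for the differential equation and is often written compactly as

\[ u^{n+1} = u^n + \Delta t\, f(u^n, t_n),\quad u^0 = U_0,\quad n = 0,1,\ldots,N_t-1. \tag{34} \]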

This scheme is known as the Forward Euler scheme, also called Euler’s method.

In our special population growth model, we have

\[ u^{n+1} = u^n + \Delta t\, ru^n,\quad u^0 = U_0,\quad n = 0,1,\ldots,N_t-1. \tag{35} \]

We may also write this model using the problem-specific symbol \(N\) instead of the generic \(u\) function:

\[ N^{n+1} = N^n + \Delta t\, rN^n,\quad N^0 = N_0,\quad n = 0,1,\ldots,N_t-1. \tag{36} \]

The observant reader will realize that (36) is nothing but the computational model (30) arising directly in the model derivation. The formula (36) arises, however, from a detour via a differential equation and a numerical method for the differential equation. This looks rather unnecessary! The reason why we bother to derive the differential equation model and then discretize it by a numerical method is simply that the discretization can be done in many ways, and we can create (much) more accurate and more computationally efficient methods than (36) or (34). This can be useful in many problems! Nevertheless, the Forward Euler scheme is intuitive and widely applicable, at least when \(\Delta t\) is chosen to be small.

The numerical solution between the mesh points

Our numerical method computes the unknown function \(u\) at discrete mesh points \(t_1,t_2,\ldots,t_{N_t}\). What if we want to evaluate the numerical solution between the mesh points? The most natural choice is to assume a linear variation between the mesh points, see Figure The numerical solution at points can be extended by linear segments between the mesh points. This is compatible with the fact that when we plot the array \(u^0,u^1,\ldots\) versus \(t_0,t_1,\ldots\), a straight line is drawn between the discrete points.
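Matlab's built-in interp1 performs exactly this kind of linear interpolation; its default method is 'linear'. In the minimal sketch below, the mesh and the values are made-up stand-ins for a computed numerical solution.

```matlab
% Sketch: evaluate a mesh function between mesh points by linear
% interpolation, using Matlab's built-in interp1 (default method 'linear').
t = linspace(0, 2, 5);      % mesh points t_0, ..., t_4 (illustrative)
u = exp(0.1*t);             % stand-in for computed values u^0, ..., u^4
t_between = 0.25;           % halfway between t_0 = 0 and t_1 = 0.5
u_between = interp1(t, u, t_between);
% For a linear variation the value is the average of the two neighbors:
fprintf('%g %g\n', u_between, (u(1) + u(2))/2);
```

Midway between two mesh points, the linearly interpolated value is simply the average of the two neighboring values. The last line prints both numbers so they can be compared.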

Programming the Forward Euler scheme; the special case

Let us compute (36) in a program. The input variables are \(N_0\), \(\Delta t\), \(r\), and \(N_t\). Note that we need to compute \(N_t+1\) new values \(N^1,\ldots,N^{N_t+1}\). A total of \(N_t+2\) values are needed in an array representation of \(N^n\), \(n=0,\ldots,N_t+1\).

Our first version of this program is as simple as possible:

N_0 = input('Give initial population size N_0: ');
r = input('Give net growth rate r: ');
dt = input('Give time step size: ');
N_t = input('Give number of steps: ');
t = linspace(0, (N_t+1)*dt, N_t+2);
N = zeros(N_t+2, 1);
N(1) = N_0;
% Forward Euler: compute the N_t+1 new values N(2), ..., N(N_t+2)
for n = 1:N_t+1
    N(n+1) = N(n) + r*dt*N(n);
end
% Plot circles for few points, a solid line for many points
if N_t < 70
    numerical_sol = 'bo';
else
    numerical_sol = 'b-';
end
plot(t, N, numerical_sol, t, N_0*exp(r.*t), 'r-');
xlabel('t'); ylabel('N(t)');
legend('numerical', 'exact', 'location', 'northwest');
filestem = strcat('growth1_', num2str(N_t), 'steps');
print(filestem, '-dpng'); print(filestem, '-dpdf');

The complete code above resides in the file growth1.m.

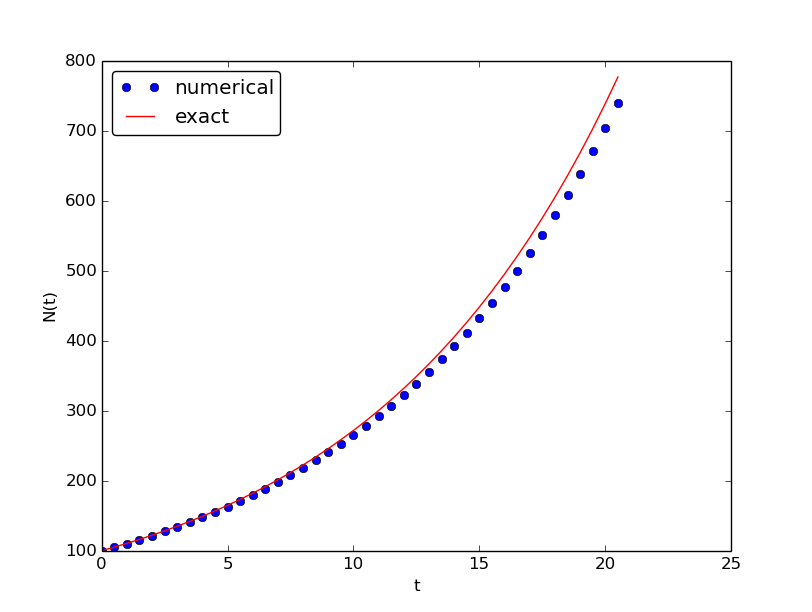

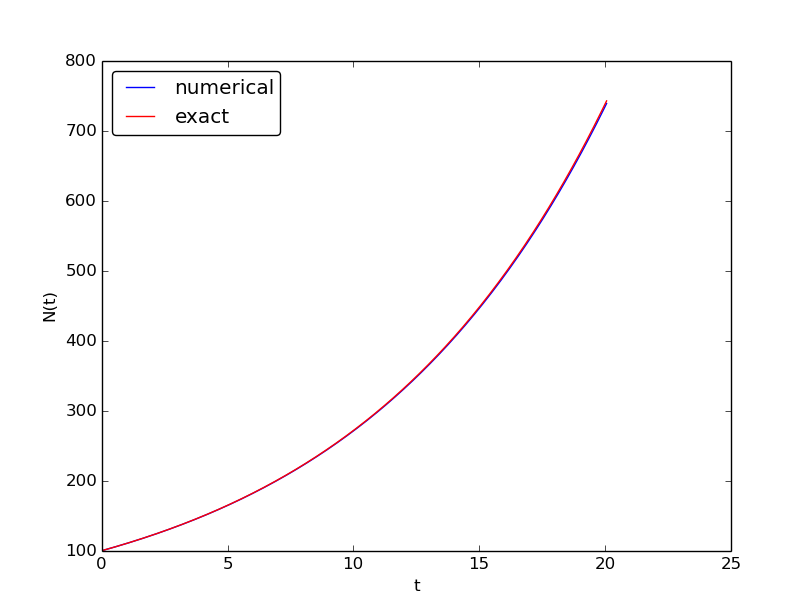

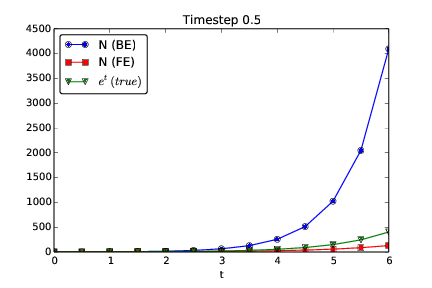

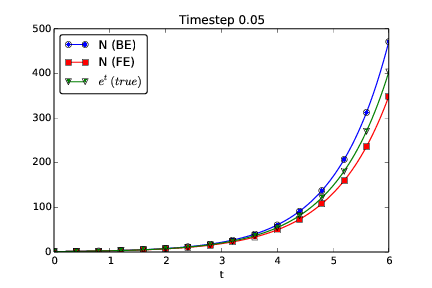

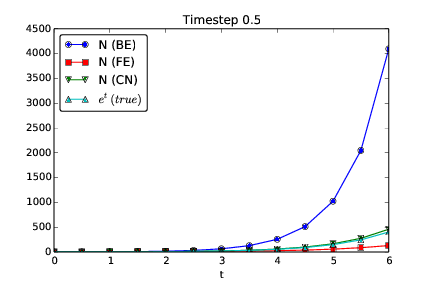

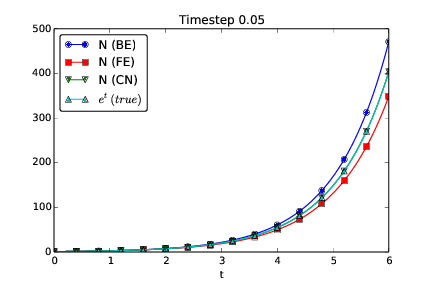

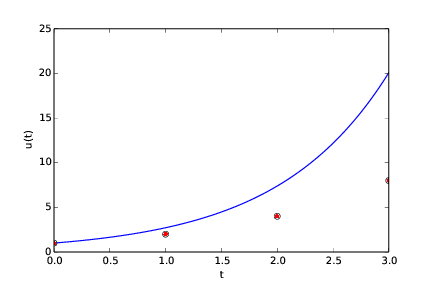

Let us demonstrate a simulation where we start with 100 animals, a net growth rate of 10 percent (0.1) per time unit, which can be one month, and \(t\in [0,20]\) months. We may first try \(\Delta t\) of half a month (0.5), which implies \(N_t=40\) (or to be absolutely precise, the last time point to be computed according to our set-up above is \(t_{N_t+1}=20.5\)). Figure Evolution of a population computed with time step 0.5 month shows the results. The solid line is the exact solution, while the circles are the computed numerical solution. The discrepancy is clearly visible. What if we make \(\Delta t\) 10 times smaller? The result is displayed in Figure Evolution of a population computed with time step 0.05 month, where we now use a solid line also for the numerical solution (otherwise, 400 circles would look very cluttered, so the program has a test on how to display the numerical solution, either as circles or a solid line). We can hardly distinguish the exact and the numerical solution. The computing time is also a fraction of a second on a laptop, so it appears that the Forward Euler method is sufficiently accurate for practical purposes. (This is not always true for large, complicated simulation models in engineering, so more sophisticated methods may be needed.)

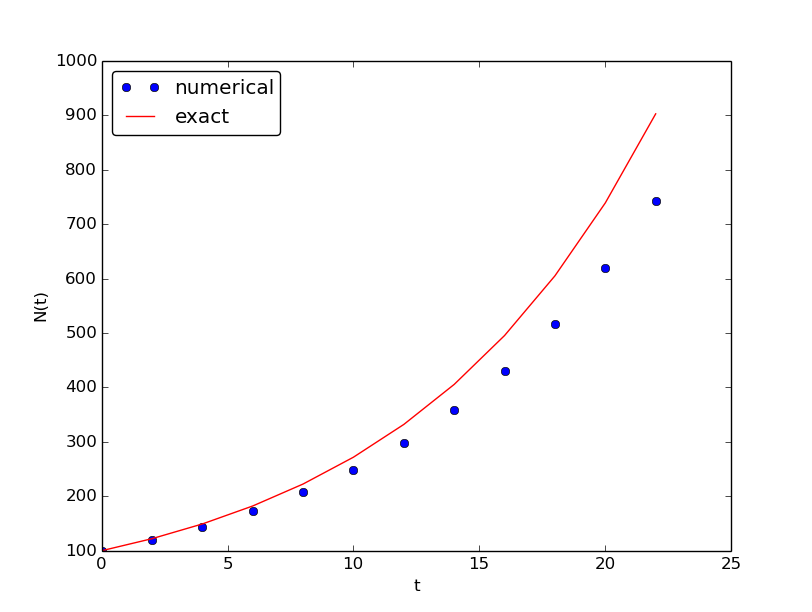

It is also of interest to see what happens if we increase \(\Delta t\) to 2 months. The results in Figure Evolution of a population computed with time step 2 months indicate that this is an inaccurate computation.
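The observations from the three figures can also be quantified. The sketch below is not part of growth1.m. It integrates \(N'=rN\) with \(N_0=100\) and \(r=0.1\) to exactly \(t=20\), rather than to \(t_{N_t+1}\) as growth1.m does, and prints the error against \(N_0e^{rT}\) for \(\Delta t=2\), 0.5, and 0.05. Reducing \(\Delta t\) by a factor of 10 should reduce the error by roughly a factor of 10, which is consistent with an error proportional to \(\Delta t\).

```matlab
% Sketch: how the Forward Euler error at t = 20 depends on dt for
% N' = r*N, N(0) = N_0 (same parameters as in the text: N_0 = 100, r = 0.1).
N_0 = 100; r = 0.1; T = 20;
dts = [2 0.5 0.05];
errors = zeros(size(dts));
for i = 1:numel(dts)
    dt = dts(i);
    N_t = round(T/dt);           % number of steps so that N_t*dt = T
    N = N_0;
    for n = 1:N_t
        N = N + r*dt*N;          % Forward Euler step
    end
    errors(i) = abs(N - N_0*exp(r*T));
end
disp([dts' errors']);
```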

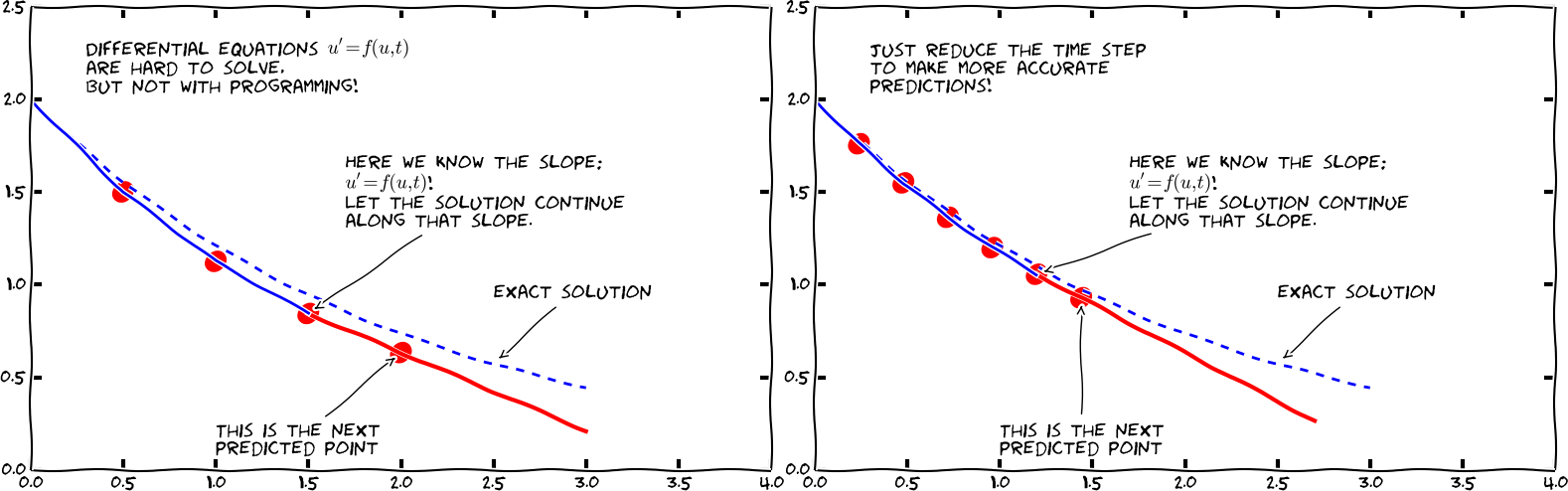

Understanding the Forward Euler method

The good thing about the Forward Euler method is that it gives an understanding of what a differential equation is and a geometrical picture of how to construct the solution. The first idea is that we have already computed the solution up to some time point \(t_n\). The second idea is that we want to progress the solution from \(t_n\) to \(t_{n+1}\) as a straight line.

We know that the line must go through the solution at \(t_n\), i.e., the point \((t_n, u^n)\). The differential equation tells us the slope of the line: \(u'(t_n) = f(u^n,t_n)=ru^n\). That is, the differential equation gives a direct formula for the further direction of the solution curve. We can say that the differential equation expresses how the system (\(u\)) undergoes changes at a point.

There is a general formula for a straight line \(y=ax+b\) with slope \(a\) that goes through the point \((x_0,y_0)\): \(y=a(x-x_0)+y_0\). Using this formula adapted to the present case, and evaluating the formula for \(t_{n+1}\), results in

\[ u^{n+1} = f(u^n, t_n)(t_{n+1}-t_n) + u^n = u^n + \Delta t\, f(u^n, t_n), \]

which is nothing but the Forward Euler formula. You are now encouraged to do Exercise 43: Geometric construction of the Forward Euler method to become more familiar with the geometric interpretation of the Forward Euler method.

Programming the Forward Euler scheme; the general case

Our previous program was just a flat main program tailored to a special differential equation. When programming mathematics, it is always good to consider a (large) class of problems and make a Matlab function to solve any problem that fits into the class. More specifically, we will make software for the class of differential equation problems of the form

\[ u'(t) = f(u(t), t),\quad t\in [0,T],\quad u(0) = U_0, \]

for some given function \(f\), and numbers \(U_0\) and \(T\). We also take the opportunity to illustrate what is commonly called a demo function. As the name implies, the purpose of such a function is solely to demonstrate how the solver works (not to be confused with a test function, which does verification by use of assert). The Matlab function calculating the solution must take \(f\), \(U_0\), \(\Delta t\), and \(T\) as input, find the corresponding \(N_t\), compute the solution, and return an array with \(u^0,u^1,\ldots,u^{N_t}\) and an array with \(t_0,t_1,\ldots,t_{N_t}\). The Forward Euler scheme reads

\[ u^{n+1} = u^n + \Delta t\, f(u^n, t_n),\quad n = 0,1,\ldots,N_t-1. \]

The corresponding program ode_FE.m may now take the form

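function [sol, time] = ode_FE(f, U_0, dt, T)
    % Forward Euler solver for u' = f(u, t), u(0) = U_0, on [0, T]
    N_t = floor(T/dt);                 % number of time steps
    u = zeros(N_t+1, 1);
    t = linspace(0, N_t*dt, length(u));
    u(1) = U_0;                        % initial condition
    for n = 1:N_t
        u(n+1) = u(n) + dt*f(u(n), t(n));
    end
    sol = u;
    time = t;
end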

Note that the function ode_FE is general, i.e., it can solve any differential equation of the form \(u'=f(u,t)\).

A proper demo function for this solver might be written as (file demo_population_growth.m):

function demo_population_growth()
    % Demo case: u' = 0.1*u, u(0) = 100
    function r = f(u, t)
        r = 0.1*u;
    end
    [u, t] = ode_FE(@f, 100, 0.5, 20);
    plot(t, u, t, 100*exp(0.1*t));
end

The solution should be identical to what the growth1.m program produces with the same parameter settings (\(r=0.1\), \(N_0=100\)). This feature can easily be tested by inserting a print statement, but a much better, automated verification is suggested in Exercise 43: Geometric construction of the Forward Euler method. You are strongly encouraged to take a “break” and do that exercise now.

Remark on the use of u as variable

In the ode_FE program, the variable u is used in different contexts. Inside the ode_FE function, u is an array, but in the f(u,t) function, as exemplified in the demo_population_growth function, the argument u is a number. Typically, we call f (in ode_FE) with the u argument as one element of the array u in the ode_FE function: u(n).

Making the population growth model more realistic

Exponential growth of a population according to the model \(N'=rN\), with exponential solution \(N=N_0e^{rt}\), is unrealistic in the long run because the resources needed to feed the population are finite. At some point there will not be enough resources and the growth will decline. A common model taking this effect into account assumes that \(r\) depends on the size of the population, \(N\):

\[ N(t+\Delta t) - N(t) = \Delta t\, r(N(t))N(t). \]

The corresponding differential equation becomes

\[ N' = r(N)N. \]

The reader is strongly encouraged to repeat the steps in the derivation of the Forward Euler scheme and establish that we get

\[ N^{n+1} = N^n + \Delta t\, r(N^n)N^n, \]

which is as easy to compute as for a constant \(r\), since \(r(N^n)\) is known when computing \(N^{n+1}\). Alternatively, one can use the Forward Euler formula for the general problem \(u'=f(u,t)\) with \(f(u,t)=r(u)u\) and replace \(u\) by \(N\).

The simplest choice of \(r(N)\) is a linear function, starting with some growth value \(\bar r\) and declining until the population has reached its maximum, \(M\), according to the available resources:

\[ r(N) = \bar r\left(1 - \frac{N}{M}\right). \]

In the beginning, \(N\ll M\) and we will have exponential growth \(e^{\bar rt}\). As \(N\) increases, \(r(N)\) decreases, and when \(N\) reaches \(M\), \(r(N)=0\), so there is no more growth and the population remains at \(N(t)=M\). This linear choice of \(r(N)\) gives rise to a model that is called the logistic model. The parameter \(M\) is known as the carrying capacity of the population.
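The logistic equation \(N'=\bar r(1-N/M)N\) has a known closed-form solution, \(N(t)=M/(1+(M/N_0-1)e^{-\bar rt})\), which makes it convenient for checking a Forward Euler computation. The following sketch uses the parameter values of the logistic.m run (\(N_0=100\), \(\bar r=0.1\), \(M=500\), \(\Delta t=0.5\), \(T=60\)). It writes the loop out directly instead of calling ode_FE, so it runs on its own.

```matlab
% Sketch: Forward Euler for the logistic model N' = rbar*(1 - N/M)*N,
% compared with its known closed-form solution
% N(t) = M/(1 + (M/N_0 - 1)*exp(-rbar*t)).
rbar = 0.1; M = 500; N_0 = 100;      % values used in logistic.m
dt = 0.5; T = 60;
N_t = round(T/dt);
t = linspace(0, N_t*dt, N_t+1);
N = zeros(N_t+1, 1);
N(1) = N_0;
for n = 1:N_t
    N(n+1) = N(n) + dt*rbar*(1 - N(n)/M)*N(n);   % Forward Euler step
end
N_exact = M./(1 + (M/N_0 - 1)*exp(-rbar*t'));    % column, like N
max_error = max(abs(N - N_exact));
fprintf('N(%g) = %.2f (exact %.2f), max error %.2f\n', ...
        T, N(end), N_exact(end), max_error);
```

With this time step, the Forward Euler solution stays below \(M\) and increases monotonically, just like the exact solution.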

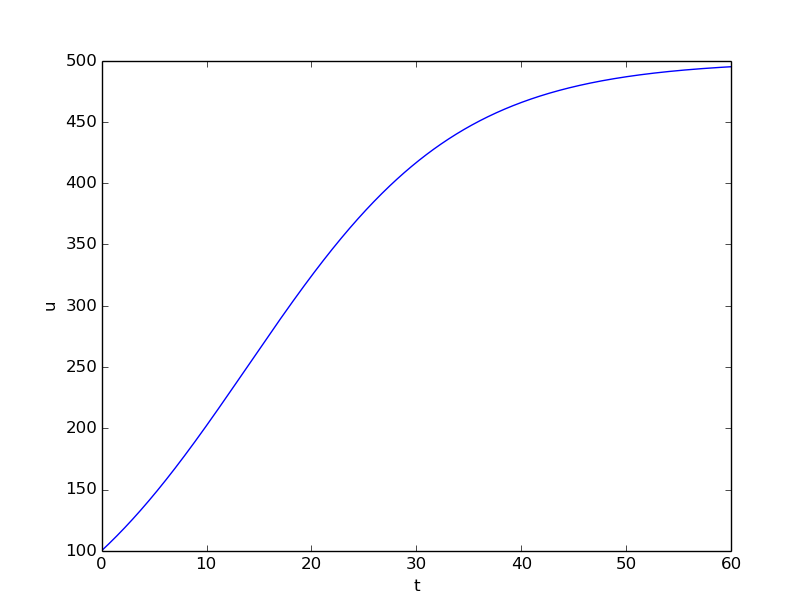

Let us run the logistic model with the aid of the ode_FE function in the file ode_FE.m. We choose \(N(0)=100\), \(\Delta t=0.5\) month, \(T=60\) months, \(\bar r=0.1\), and \(M=500\). The complete program, called logistic.m, is basically a call to ode_FE:

% Logistic growth N' = 0.1*(1 - N/500)*N, solved with ode_FE
f = @(u, t) 0.1*(1 - u/500)*u;
U_0 = 100;
dt = 0.5; T = 60;
[u, t] = ode_FE(f, U_0, dt, T);
plot(t, u, 'b-');
xlabel('t'); ylabel('N(t)');
filestem = strcat('tmp_', num2str(dt));
% Note: this print statement has a problem with the decimal point in
% 'tmp_0.5', which can be mistaken for a file extension,
%print(filestem, '-dpng'); print(filestem, '-dpdf');
% so we rather add the extension explicitly:
filename = strcat(filestem, '.png'); print(filename, '-dpng');
filename = strcat(filestem, '.pdf'); print(filename, '-dpdf');
% Same computation with a much larger time step
dt = 20; T = 100;
[u, t] = ode_FE(f, U_0, dt, T);
plot(t, u, 'b-');
xlabel('t'); ylabel('N(t)');
filestem = strcat('tmp_', num2str(dt));
print(filestem, '-dpng'); print(filestem, '-dpdf');

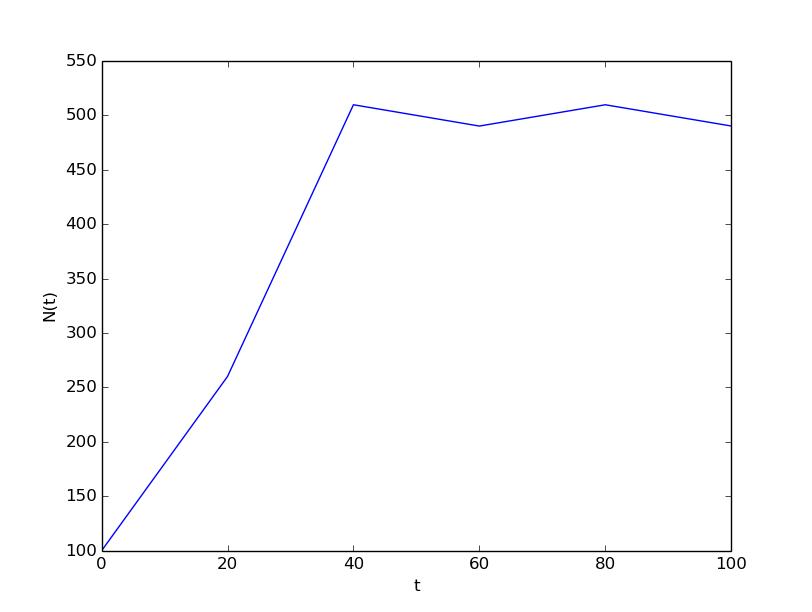

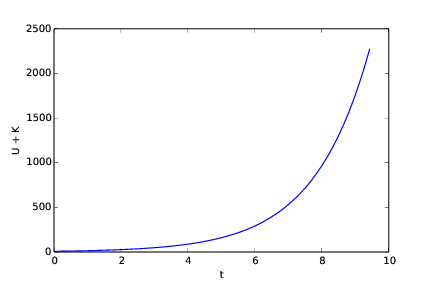

Figure Logistic growth of a population shows the resulting curve. We see that the population stabilizes around \(M=500\) individuals. A corresponding exponential growth would reach \(N_0e^{rt}=100e^{0.1\cdot 60}\approx 40,300\) individuals!

It is always interesting to see what happens with large \(\Delta t\) values. We may set \(\Delta t=20\) and \(T=100\). Now the solution, seen in Figure Logistic growth with large time step, oscillates and is hence qualitatively wrong, because one can prove that the exact solution of the differential equation is monotone. (However, there is a corresponding difference equation model, \(N_{n+1}=rN_n(1-N_n/M)\), which allows oscillatory solutions, and such oscillations are observed in animal populations. The problem with large \(\Delta t\) is that it just leads to wrong mathematics, and two wrongs don’t make a right in terms of a relevant model.)
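The oscillation can be reproduced in a few lines. This sketch applies the Forward Euler step for the logistic model by hand, using the values of the large-step run in logistic.m: \(\Delta t=20\), \(T=100\), \(N_0=100\), \(\bar r=0.1\), and \(M=500\). It then checks two properties that the exact solution has and the numerical one lacks: staying below \(M\) and being monotone.

```matlab
% Sketch: Forward Euler for the logistic model with the large time step
% dt = 20 (same parameters as logistic.m: N_0 = 100, rbar = 0.1, M = 500).
rbar = 0.1; M = 500; N_0 = 100;
dt = 20; T = 100;
N_t = round(T/dt);
N = zeros(N_t+1, 1);
N(1) = N_0;
for n = 1:N_t
    N(n+1) = N(n) + dt*rbar*(1 - N(n)/M)*N(n);
end
disp(N');
overshoots_M = any(N > M);          % the exact solution never exceeds M
monotone = all(N(2:end) >= N(1:end-1));
fprintf('overshoots M: %d, monotone: %d\n', overshoots_M, monotone);
```

The computed values jump from 100 to 260 and then to about 509.6, after which they alternate around 500. The exact solution, by contrast, approaches 500 from below.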

Remark on the world population

The number of people on the planet can be described by the model \(N'=r(t)N\), where the net reproduction \(r(t)\) varies with time and has decreased since its peak in 1990. At the time of writing, the world value of \(r\) is 1.2%, and it is difficult to predict future values. At the moment, the predictions of the world population point to a growth to 9.6 billion before declining.

This example shows the limitation of a differential equation model: we need to know all input parameters, including \(r(t)\), in order to predict the future. It is seldom the case that we know all input parameters. Sometimes knowledge of the solution from measurements can help estimate missing input parameters.

Verification: exact linear solution of the discrete equations

How can we verify that the programming of an ODE model is correct? The best method is to find a problem where there are no unknown numerical approximation errors, because we can then compare the exact solution of the problem with the result produced by our implementation and expect the difference to be within a very small tolerance. We shall base a unit test on this idea and implement a corresponding test function (see the section Constructing unit tests and writing test functions) for automatic verification of our implementation.

It appears that most numerical methods for ODEs will exactly reproduce a solution \(u\) that is linear in \(t\). We may therefore set \(u=at+b\) and, since \(u'=a\), choose any \(f\) that equals \(a\) along this solution. The choice \(f(u,t)=a\) is very simple, but we may add anything that is zero, e.g.,

\[ f(u,t) = a + (u-(at+b))^m. \]

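This is a valid \(f(u,t)\) for any \(a\), \(b\), and \(m>0\). The corresponding ODE looks highly non-trivial, however:

\[ u' = a + (u-(at+b))^m. \]

For the Forward Euler scheme, exact reproduction follows by induction. If \(u^n = at_n+b\), the extra term vanishes, and \(u^{n+1} = at_n + b + \Delta t\, a = at_{n+1}+b\).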

Using the general ode_FE function in ode_FE.m, we may write a proper test function as follows (in file test_ode_FE_exact_linear.m):

function test_ode_FE_exact_linear()
    % Test if a linear function u(t) = a*t + b is exactly reproduced.
    a = 4; b = -1; m = 6;
    % Transpose: ode_FE returns t as a row vector but u as a column vector
    exact_solution = @(t) (a*t + b)';
    f = @(u, t) a + (u - exact_solution(t))^m;
    dt = 0.5; T = 20.0;
    [u, t] = ode_FE(f, exact_solution(0), dt, T);
    diff = max(abs(exact_solution(t) - u));
    tol = 1E-15;  % Tolerance for float comparison
    assert(diff < tol);
end

Observe that we cannot compare diff to zero, which is what we mathematically expect, because diff is a floating-point variable that most likely contains small rounding errors. Therefore, we must compare diff to zero with a tolerance, here \(10^{-15}\).
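A classic example, not specific to ODEs, shows why the tolerance is needed. The decimal numbers 0.1, 0.2, and 0.3 have no exact binary floating-point representation, so 0.1 + 0.2 == 0.3 evaluates to false in Matlab. The difference, however, is far below the tolerance \(10^{-15}\) used in the test function.

```matlab
% Sketch: why floating-point results are compared with a tolerance.
% 0.1, 0.2 and 0.3 cannot be represented exactly in binary floating point.
exact_equal = (0.1 + 0.2 == 0.3);          % false (0) due to rounding
difference = abs((0.1 + 0.2) - 0.3);        % a tiny rounding error, about 5.6e-17
tol = 1E-15;
close_enough = difference < tol;            % true (1)
fprintf('exact: %d, diff: %g, within tol: %d\n', exact_equal, difference, close_enough);
```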

You are encouraged to do Exercise 44: Make test functions for the Forward Euler method where the goal is to make a test function for a verification based on comparison with hand-calculated results for a few time steps.
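One way to see why a linear solution is special is to try the same idea with a quadratic solution. This sketch uses its own example: \(u(t)=t^2\), so \(f(u,t)=2t\) and \(u(0)=0\). Summing the Forward Euler updates gives \(u^n = \Delta t^2 n(n-1) = t_n^2 - \Delta t\, t_n\). The error \(\Delta t\, t_n\) therefore does not vanish, and a test like test_ode_FE_exact_linear would fail. With \(\Delta t=0.5\) and \(T=20\), the largest error is \(\Delta t\,T = 10\).

```matlab
% Sketch: Forward Euler reproduces linear solutions exactly, but not
% quadratic ones. Here u(t) = t^2, so u' = 2t, i.e. f(u,t) = 2t (our choice).
dt = 0.5; T = 20.0;
N_t = round(T/dt);
t = linspace(0, N_t*dt, N_t+1)';
u = zeros(N_t+1, 1);
u(1) = 0;                               % u(0) = 0
for n = 1:N_t
    u(n+1) = u(n) + dt*2*t(n);          % Forward Euler with f(u,t) = 2t
end
err = max(abs(t.^2 - u));
% The discrete solution is u^n = t_n^2 - dt*t_n, so the error grows like dt*t_n:
fprintf('max error: %g (dt*T = %g)\n', err, dt*T);
```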

Spreading of diseases

Our aim with this section is to show in detail how one can apply mathematics and programming to investigate spreading of diseases. The mathematical model is now a system of three differential equations with three unknown functions. To derive such a model, we can rely mainly on intuition, so no specific background knowledge of diseases is required.

Spreading of a flu

Imagine a boarding school out in the countryside. This school is a small and closed society. Suddenly, one or more of the pupils get a flu. We expect that the flu may either spread quite effectively or die out. The question is how many of the pupils and the school’s staff will be affected. Some quite simple mathematics can help us gain insight into the dynamics of how the disease spreads.

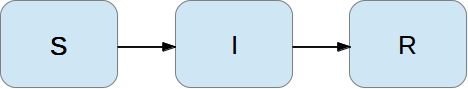

Let the mathematical function \(S(t)\) count how many individuals, at time \(t\), have the possibility to get infected. Here, \(t\) may count hours or days, for instance. These individuals make up a category called susceptibles, labeled as S. Another category, I, consists of the individuals that are infected. Let \(I(t)\) count how many there are in category I at time \(t\). An individual having recovered from the disease is assumed to gain immunity. There is also a small possibility that an infected individual will die. In either case, the individual is moved from the I category to a category we call the removed category, labeled with R. We let \(R(t)\) count the number of individuals in the \(R\) category at time \(t\). Those who enter the \(R\) category cannot leave this category.

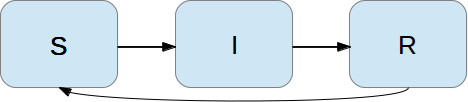

To summarize, the spreading of this disease is essentially the dynamics of moving individuals from the S to the I and then to the R category:

\[ S \longrightarrow I \longrightarrow R \]

We can use mathematics to more precisely describe the exchange between the categories. The fundamental idea is to describe the changes that take place during a small time interval, denoted by \(\Delta t\).

Our disease model is often referred to as a compartment model, where quantities are shuffled between compartments (here a synonym for categories) according to some rules. The rules express changes in a small time interval \(\Delta t\), and from these changes we can let \(\Delta t\) go to zero and obtain derivatives. The resulting equations then go from difference equations (with finite \(\Delta t\)) to differential equations (\(\Delta t\rightarrow 0\)).

We introduce a uniform mesh in time, \(t_n=n\Delta t\), \(n=0,\ldots,N_t\), and seek \(S\) at the mesh points. The numerical approximation to \(S\) at time \(t_n\) is denoted by \(S^n\). Similarly, we seek the unknown values of \(I(t)\) and \(R(t)\) at the mesh points and introduce a similar notation \(I^n\) and \(R^n\) for the approximations to the exact values \(I(t_n)\) and \(R(t_n)\).

In the time interval \(\Delta t\) we know that some people will be infected, so \(S\) will decrease. We shall soon argue by mathematics that there will be \(\beta\Delta tSI\) new infected individuals in this time interval, where \(\beta\) is a parameter reflecting how easily people get infected during a time interval of unit length. If the loss in \(S\) is \(\beta\Delta tSI\), we have that the change in \(S\) is

\[ S^{n+1} - S^n = -\beta\Delta t\, S^nI^n. \tag{37} \]

Dividing by \(\Delta t\) and letting \(\Delta t\rightarrow 0\) makes the left-hand side approach \(S'(t_n)\), such that we obtain a differential equation

\[ S' = -\beta SI. \tag{38} \]

The reasoning in going from the difference equation (37) to the differential equation (38) follows exactly the steps explained in the section Derivation of the model.

Before proceeding with how \(I\) and \(R\) develop in time, let us explain the formula \(\beta\Delta tSI\). We have \(S\) susceptibles and \(I\) infected people. These can make up \(SI\) pairs. Now, suppose that during a time interval \(T\) we measure that \(m\) actual pairwise meetings do occur among \(n\) theoretically possible pairings of people from the S and I categories. The probability that people meet in pairs during a time \(T\) is (by the empirical frequency definition of probability) equal to \(m/n\), i.e., the number of successes divided by the number of possible outcomes. From such statistics we normally derive quantities expressed per unit time, i.e., here we want the probability per unit time, \(\mu\), which is found from dividing by \(T\): \(\mu = m/(nT)\).

Given the probability \(\mu\), the expected number of meetings per time interval of \(SI\) possible pairs of people is (from basic statistics) \(\mu SI\). During a time interval \(\Delta t\), there will be \(\mu SI\Delta t\) expected number of meetings between susceptibles and infected people such that the virus may spread. Only a fraction of the \(\mu\Delta t SI\) meetings are effective in the sense that the susceptible actually becomes infected. Counting that \(m\) people get infected in \(n\) such pairwise meetings (say 5 are infected from 1000 meetings), we can estimate the probability of being infected as \(p=m/n\). The expected number of individuals in the S category that in a time interval \(\Delta t\) catch the virus and get infected is then \(p\mu\Delta t SI\). Introducing a new constant \(\beta =p\mu\) to save some writing, we arrive at the formula \(\beta\Delta tSI\).

The value of \(\beta\) must be known in order to predict the future with the disease model. One possibility is to estimate \(p\) and \(\mu\) from their meanings in the derivation above. Alternatively, we can observe an “experiment” where there are \(S_0\) susceptibles and \(I_0\) infected at some point in time. During a time interval \(T\) we count that \(N\) susceptibles have become infected. Using (37) as a rough approximation of how \(S\) has developed during time \(T\) (and now \(T\) is not necessarily small, but we use (37) anyway), we get

\[ N = \beta T S_0 I_0, \tag{39} \]

which gives the estimate \(\beta = N/(TS_0I_0)\).

We need an additional equation to describe the evolution of \(I(t)\). Such an equation is easy to establish by noting that the loss in the S category is a corresponding gain in the I category. More precisely,

\[ I^{n+1} = I^n + \beta\Delta t S^n I^n \tag{40} \]

However, there is also a loss in the I category because people recover from the disease. Suppose that we can measure that \(m\) out of \(n\) individuals recover in a time period \(T\) (say 10 of 40 sick people recover during a day: \(m=10\), \(n=40\), \(T=24\) h). Now, \(\gamma =m/(nT)\) is the probability that one individual recovers in a unit time interval. Then (on average) \(\gamma\Delta t I\) infected will recover in a time interval \(\Delta t\). This quantity represents a loss in the I category and a gain in the R category. We can therefore write the total change in the I category as

\[ I^{n+1} = I^n + \beta\Delta t S^n I^n - \gamma\Delta t I^n \tag{41} \]

The change in the R category is simple: there is always an increase from the I category:

\[ R^{n+1} = R^n + \gamma\Delta t I^n \tag{42} \]

Since there is no loss in the R category (people are either recovered and immune, or dead), we are done with the modeling of this category. In fact, we do not strictly need the equation (42) for \(R\), but extensions of the model later will need an equation for \(R\).

Dividing by \(\Delta t\) in (41) and (42) and letting \(\Delta t\rightarrow 0\) results in the corresponding differential equations

\[ I'(t) = \beta S I - \gamma I \tag{43} \]

and

\[ R'(t) = \gamma I \tag{44} \]

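To summarize, we have derived difference equations (37)-(42), and alternative differential equations (43)-(44). For reference, we list the complete set of the three difference equations:

\begin{align}
S^{n+1} &= S^n - \beta\Delta t S^n I^n, \tag{45}\\
I^{n+1} &= I^n + \beta\Delta t S^n I^n - \gamma\Delta t I^n, \tag{46}\\
R^{n+1} &= R^n + \gamma\Delta t I^n. \tag{47}
\end{align}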

Note that we have isolated the new unknown quantities \(S^{n+1}\), \(I^{n+1}\), and \(R^{n+1}\) on the left-hand side, such that these can readily be computed if \(S^n\), \(I^n\), and \(R^n\) are known. To get such a procedure started, we need to know \(S^0\), \(I^0\), \(R^0\). Obviously, we also need to have values for the parameters \(\beta\) and \(\gamma\).
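The stepping procedure just described can be sketched in a few lines of Python (a hypothetical helper sir_step, not one of the chapter's files). Every right-hand side uses only the old values \(S^n\), \(I^n\), \(R^n\), so the three new values can be computed in any order; the parameters and initial state are those used in SIR1.m below.

```python
def sir_step(S, I, R, dt, beta, gamma):
    """Advance the SIR difference equations one step of length dt.

    Every right-hand side uses only the old values S^n, I^n, R^n.
    """
    infections = beta * dt * S * I   # leaves S, enters I
    recoveries = gamma * dt * I      # leaves I, enters R
    return S - infections, I + infections - recoveries, R + recoveries


# Parameters and initial state from SIR1.m (time unit: 1 h)
beta = 10 / (40 * 8 * 24)
gamma = 3 / (15 * 24)
S, I, R = 50.0, 1.0, 0.0
for n in range(10):              # ten steps of 6 minutes = 1 hour
    S, I, R = sir_step(S, I, R, dt=0.1, beta=beta, gamma=gamma)
print(S, I, R, S + I + R)
```

Because what leaves one category enters another, \(S+I+R\) stays at 51 up to rounding errors.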

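We also list the system of three differential equations:

\begin{align}
S'(t) &= -\beta S I, \tag{48}\\
I'(t) &= \beta S I - \gamma I, \tag{49}\\
R'(t) &= \gamma I. \tag{50}
\end{align}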

This differential equation model (and also its discrete counterpart above) is known as an SIR model. The input data to the differential equation model consist of the parameters \(\beta\) and \(\gamma\) as well as the initial conditions \(S(0)=S_0\), \(I(0)=I_0\), and \(R(0)=R_0\).

A Forward Euler method for the differential equation system¶

Let us apply the same principles as we did in the section Numerical solution to discretize the differential equation system by the Forward Euler method. We already have a time mesh and time-discrete quantities \(S^n\), \(I^n\), \(R^n\), \(n=0,\ldots,N_t\). The three differential equations are assumed to be valid at the mesh points. At the point \(t_n\) we then have

\begin{align}
S'(t_n) &= -\beta S(t_n) I(t_n), \tag{51}\\
I'(t_n) &= \beta S(t_n) I(t_n) - \gamma I(t_n), \tag{52}\\
R'(t_n) &= \gamma I(t_n), \tag{53}
\end{align}

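for \(n=0,1,\ldots,N_t\). This is an approximation since the differential equations are originally valid at all times \(t\) (usually in some finite interval \([0,T]\)). Using forward finite differences for the derivatives results in an additional approximation,

\begin{align}
\frac{S^{n+1}-S^n}{\Delta t} &= -\beta S^n I^n, \tag{54}\\
\frac{I^{n+1}-I^n}{\Delta t} &= \beta S^n I^n - \gamma I^n, \tag{55}\\
\frac{R^{n+1}-R^n}{\Delta t} &= \gamma I^n. \tag{56}
\end{align}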

As we see, once the new values are isolated on the left-hand side, these equations are identical to the difference equations that naturally arise in the derivation of the model. However, numerical methods other than the Forward Euler scheme will result in slightly different difference equations.

Programming the numerical method; the special case¶

The computation of (54)-(56) can be readily made in a computer program SIR1.m:

```matlab
% Time unit: 1 h
beta = 10/(40*8*24);
gamma = 3/(15*24);
dt = 0.1;               % 6 min
D = 30;                 % Simulate for D days
N_t = floor(D*24/dt);   % Corresponding number of time steps
t = linspace(0, N_t*dt, N_t+1);
S = zeros(N_t+1, 1);
I = zeros(N_t+1, 1);
R = zeros(N_t+1, 1);

% Initial condition
S(1) = 50;
I(1) = 1;
R(1) = 0;

% Step equations forward in time
for n = 1:N_t
    S(n+1) = S(n) - dt*beta*S(n)*I(n);
    I(n+1) = I(n) + dt*beta*S(n)*I(n) - dt*gamma*I(n);
    R(n+1) = R(n) + dt*gamma*I(n);
end

plot(t, S, t, I, t, R);
legend('S', 'I', 'R', 'Location', 'northwest');
xlabel('hours');
print('tmp', '-dpdf'); print('tmp', '-dpng');
```

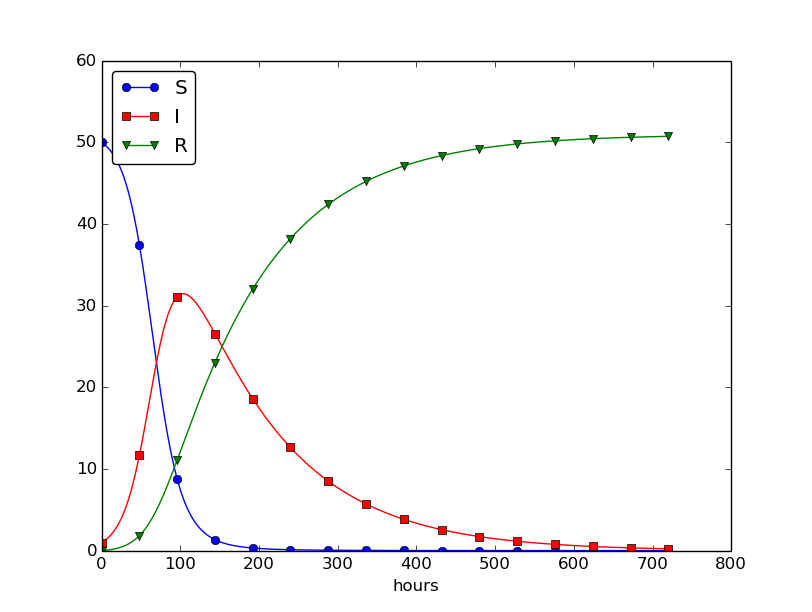

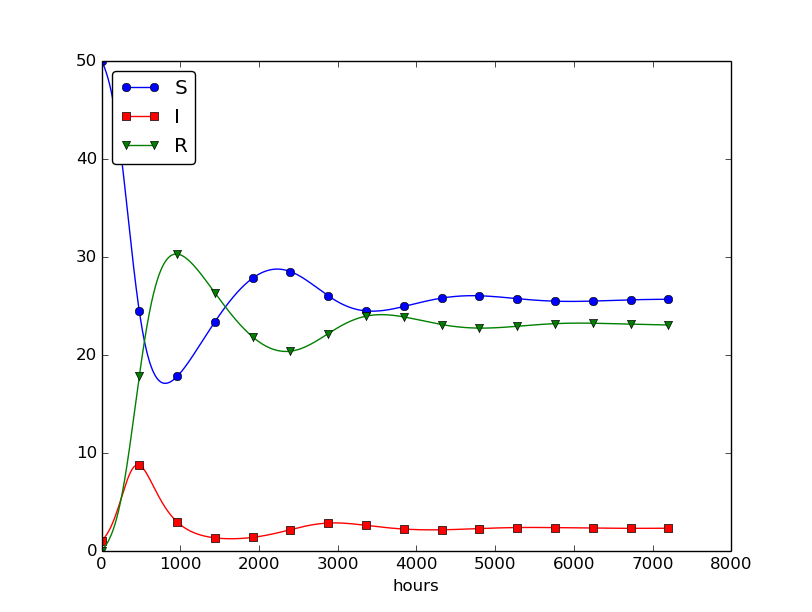

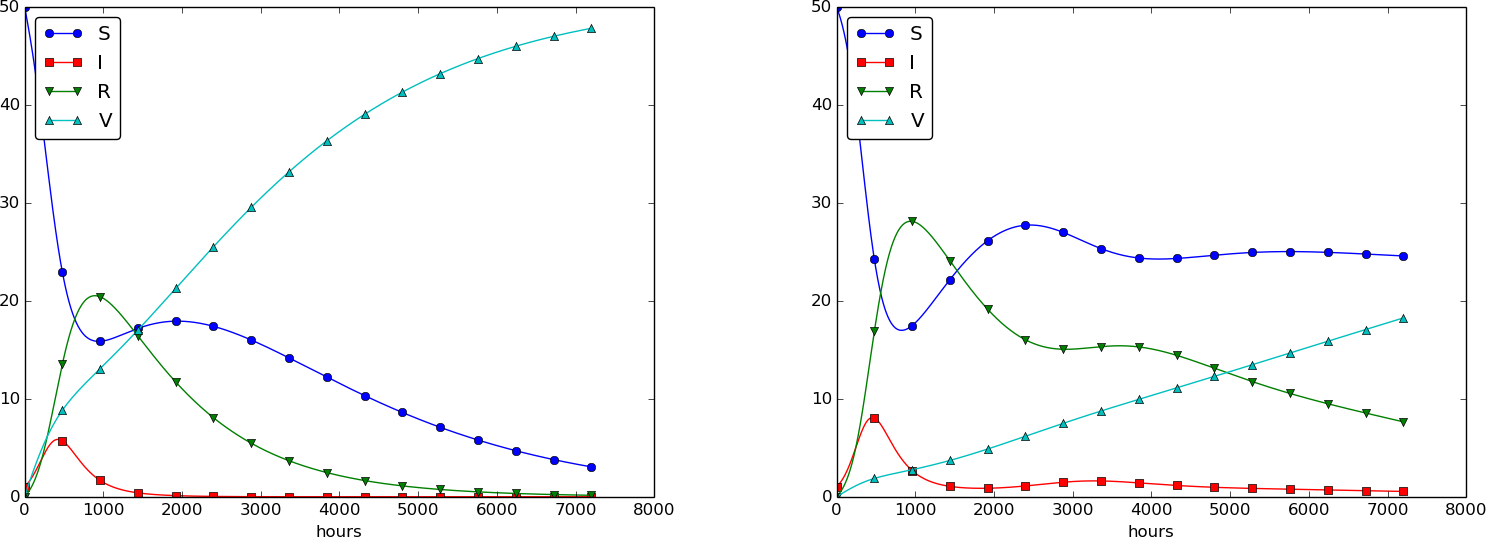

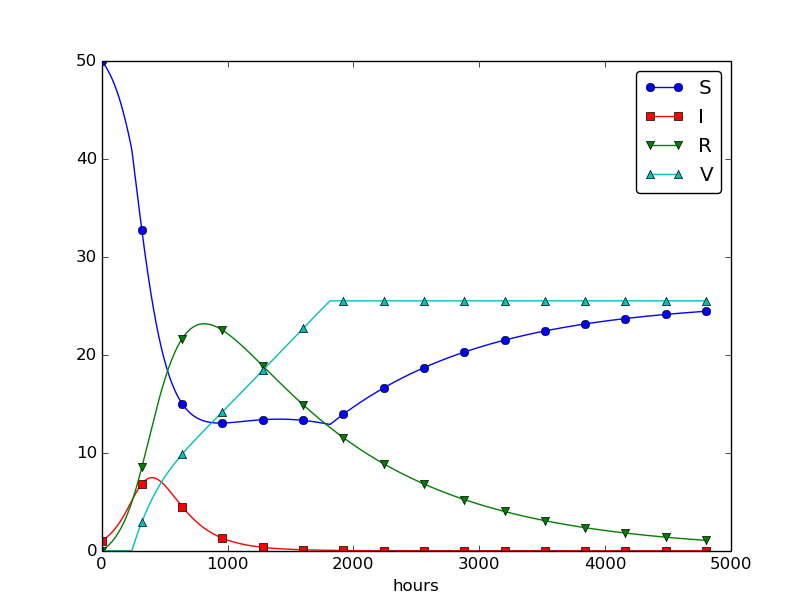

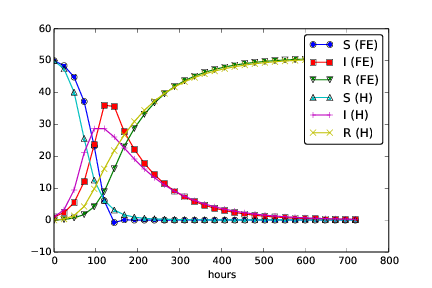

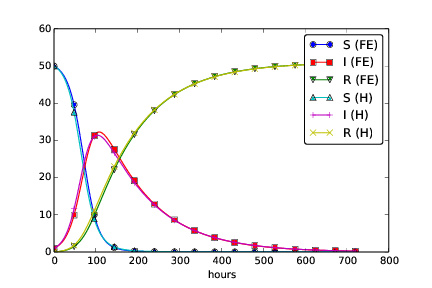

This program was written to investigate the spreading of a flu at the mentioned boarding school, and the reasoning behind the specific choices of \(\beta\) and \(\gamma\) goes as follows. At some other school where the disease has already spread, it was observed that in the beginning of a day there were 40 susceptibles and 8 infected, while the numbers were 30 and 18, respectively, 24 hours later. Using 1 h as time unit, we then have from (39) that \(\beta = 10/(40\cdot 8\cdot 24)\). Among 15 infected, it was observed that 3 recovered during a day, giving \(\gamma = 3/(15\cdot 24)\). Applying these parameters to a new case where there is one infected initially and 50 susceptibles gives the graphs in Figure Natural evolution of a flu at a boarding school. These graphs are just straight lines between the values at times \(t_i=i\Delta t\) as computed by the program. We observe that \(S\) decreases as \(I\) and \(R\) grow. After about 30 days everyone has become ill and recovered again.

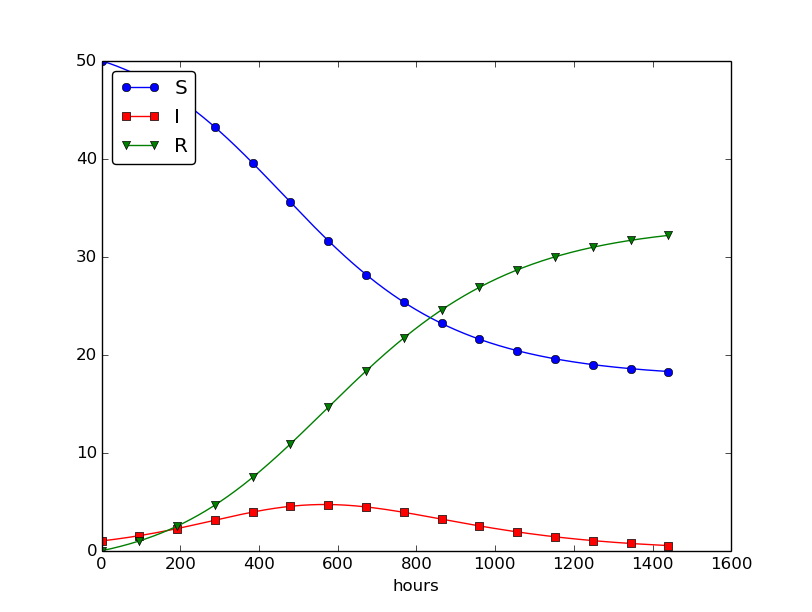

We can experiment with \(\beta\) and \(\gamma\) to see whether we get an outbreak of the disease or not. Imagine that a “wash your hands” campaign was successful and that the other school in this case experienced a reduction of \(\beta\) by a factor of 5. With this lower \(\beta\) the disease spreads very slowly, so we simulate for 60 days. The curves appear in Figure Small outbreak of a flu at a boarding school (\(\beta\) is much smaller than in Figure Natural evolution of a flu at a boarding school).

Outbreak or not¶

Looking at the equation for \(I\), it is clear that we must have \(\beta SI - \gamma I>0\) for \(I\) to increase. When we start the simulation it means that

\[ \beta S(0)I(0) - \gamma I(0) > 0, \]

or simpler

\[ \frac{\beta S(0)}{\gamma} > 1, \]

to increase the number of infected people and accelerate the spreading of the disease. You can run the SIR1.m program with a smaller \(\beta\) such that (57) is violated and observe that there is no outbreak.
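The condition can be evaluated before running any simulation. The Python sketch below (outbreak_ratio is a hypothetical helper name) computes \(\beta S(0)/\gamma\) for the parameters of SIR1.m and for \(\beta\) divided by 5 and by 10.

```python
def outbreak_ratio(beta, gamma, S0):
    """Return beta*S0/gamma. I increases initially if the ratio exceeds 1."""
    return beta * S0 / gamma


beta = 10 / (40 * 8 * 24)   # values from SIR1.m (time unit: 1 h)
gamma = 3 / (15 * 24)
S0 = 50

for factor in (1, 5, 10):   # divide beta by these factors
    ratio = outbreak_ratio(beta / factor, gamma, S0)
    print(f"beta/{factor}: ratio = {ratio:.4f}, I grows initially: {ratio > 1}")
```

With these numbers the ratio is about 7.8 for the original \(\beta\) and about 1.6 after dividing \(\beta\) by 5, so \(I\) still grows at first in both cases, while dividing \(\beta\) by 10 brings the ratio below 1.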

The power of mathematical modeling

The reader should notice our careful use of words in the previous paragraphs. We started out with modeling a very specific case, namely the spreading of a flu among pupils and staff at a boarding school. We deliberately replaced words like pupils and flu by more neutral and general words like individuals and disease, respectively. Phrased equivalently, we raised the abstraction level by moving from a specific case (flu at a boarding school) to a more general case (disease in a closed society). Very often, when developing mathematical models, we start with a specific example and see, through the modeling, that the essential mechanisms in this example will also be present in many similar problem settings. We try to incorporate this generalization in the model so that the model has a much wider application area than what we aimed at in the beginning. This is the very power of mathematical modeling: by solving one specific case we have often developed more generic tools that can readily be applied to solve seemingly different problems. The next sections will give substance to this assertion.

Abstract problem and notation¶

When we had a specific differential equation with one unknown, we quickly turned to an abstract differential equation written in the generic form \(u'=f(u,t)\). We refer to such a problem as a scalar ODE. A specific equation corresponds to a specific choice of the formula \(f(u,t)\) involving \(u\) and (optionally) \(t\).

It is advantageous to also write a system of differential equations in the same abstract notation,

\[ u' = f(u,t), \]

but this time it is understood that \(u\) is a vector of functions and \(f\) is also a vector. We say that \(u'=f(u,t)\) is a vector ODE or system of ODEs in this case. For the SIR model we introduce the two 3-vectors, one for the unknowns,

\[ u = (S(t), I(t), R(t)), \]

and one for the right-hand side functions,

\[ f(u,t) = (-\beta SI,\ \beta SI - \gamma I,\ \gamma I). \]

The equation \(u'=f(u,t)\) means setting the two vectors equal, i.e., each component must be equal. Since \(u'=(S', I', R')\), we get that \(u'=f\) implies

\begin{align*}
S' &= -\beta SI,\\
I' &= \beta SI - \gamma I,\\
R' &= \gamma I.
\end{align*}

The generalized short notation \(u'=f(u,t)\) is very handy: we can derive numerical methods and implement software once for this abstract system, and in a particular application we just identify the formulas in the \(f\) vector, implement these, and call functionality that solves the differential equation system.
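For the SIR model, "identifying the formulas in the \(f\) vector" amounts to one small function. A Python sketch follows; make_sir_rhs is a hypothetical name, and the closure is our design choice for attaching \(\beta\) and \(\gamma\) to \(f\) without global variables.

```python
def make_sir_rhs(beta, gamma):
    """Return f(u, t) for the SIR model, where u = [S, I, R]."""
    def f(u, t):
        S, I, R = u
        return [-beta * S * I,             # S'
                beta * S * I - gamma * I,  # I'
                gamma * I]                 # R'
    return f


f = make_sir_rhs(beta=10 / (40 * 8 * 24), gamma=3 / (15 * 24))
rhs = f([50.0, 1.0, 0.0], 0.0)
print(rhs, sum(rhs))
```

The components of \(f\) sum to zero, which mirrors \(S'+I'+R'=0\).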

Programming the numerical method; the general case¶

In Matlab code, the Forward Euler step

\[ u^{n+1} = u^n + \Delta t\, f(u^n, t_n), \]

being a scalar or a vector equation, can be coded as

```matlab
u(n+1,:) = u(n,:) + dt*f(u(n,:), t(n));
```

both in the scalar and vector case. In the vector case, u(n,:) is a one-dimensional array of length \(m+1\) holding the mathematical quantity \(u^n\), and the Matlab function f must return an array of length \(m+1\). Then the expression u(n,:) + dt*f(u(n,:), t(n)) is an array plus a scalar times an array.

For all this to work, the complete numerical solution must be represented by a two-dimensional array, created by u = zeros(N_t+1, m+1). The first index counts the time points and the second the components of the solution vector at one time point. That is, u(n,i) corresponds to the mathematical quantity \(u^n_i\) (up to Matlab's 1-based indexing, where u(1,:) holds \(u^0\)). Writing u(n,:) picks out all the components in the solution at the time point with index n.

The nice feature of these facts is that the same piece of Matlab code works for both a scalar ODE and a system of ODEs!

The ode_FE function for the vector ODE is placed in the file ode_system_FE.m and was written as follows:

```matlab
function [u, t] = ode_FE(f, U_0, dt, T)
    N_t = floor(T/dt);
    u = zeros(N_t+1, length(U_0));
    t = linspace(0, N_t*dt, N_t+1);   % N_t+1 time points, t(1) = 0
    u(1,:) = U_0;                     % Initial values
    for n = 1:N_t
        u(n+1,:) = u(n,:) + dt*f(u(n,:), t(n));
    end
end
```
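To illustrate that one Forward Euler loop serves both a scalar ODE and a system, here is a rough Python counterpart of ode_FE (our own sketch, storing the solution as a list of component lists instead of a 2D array). It is applied to \(u'=u\), \(u(0)=1\), treated as a one-component system, and to the SIR model.

```python
import math


def ode_FE(f, U_0, dt, T):
    """Forward Euler for u' = f(u, t) with u a list of components.

    Returns (u, t) where u[n] is the solution vector at time t[n].
    A scalar ODE is simply a system with one component.
    """
    N_t = int(round(T / dt))   # assumes T is (close to) a multiple of dt
    t = [n * dt for n in range(N_t + 1)]
    u = [list(U_0)]
    for n in range(N_t):
        fn = f(u[n], t[n])
        u.append([u_i + dt * f_i for u_i, f_i in zip(u[n], fn)])
    return u, t


# Scalar case: u' = u, u(0) = 1, compared with exp(1) at t = 1
u_s, t_s = ode_FE(lambda u, t: [u[0]], [1.0], dt=0.001, T=1.0)
print(u_s[-1][0], math.exp(1))

# Vector case: the SIR model with the parameters of SIR1.m
beta, gamma = 10 / (40 * 8 * 24), 3 / (15 * 24)


def f_sir(u, t):
    S, I, R = u
    return [-beta * S * I, beta * S * I - gamma * I, gamma * I]


u_sir, t_sir = ode_FE(f_sir, [50.0, 1.0, 0.0], dt=0.1, T=30 * 24)
print(u_sir[-1])
```

Using round instead of floor for the number of steps is our choice, to avoid losing a step when T/dt comes out marginally below an integer.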

Let us show how the previous SIR model can be solved using the new general ode_FE that can solve any vector ODE. The user’s f(u, t) function takes a vector u, with three components corresponding to \(S\), \(I\), and \(R\), as argument, along with the current time point t(n), and must return the values of the formulas of the right-hand sides in the vector ODE. An appropriate implementation is

```matlab
function result = f(u, t)
    S = u(1); I = u(2); R = u(3);
    result = [-beta*S*I, beta*S*I - gamma*I, gamma*I];
end
```

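where beta and gamma are problem-specific parameters. An ordinary Matlab function does not see variables defined in the script or function that calls it, so beta and gamma must be made available inside f, for instance by assigning them in f itself, as done in demo_SIR.m below.

Note that the S, I, and R values correspond to \(S^n\), \(I^n\), and \(R^n\). These values are then just inserted in the various formulas in the vector ODE.

We can now show a function (in file demo_SIR.m) that runs the previous SIR example, but which applies the generic ode_FE function: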

```matlab
function demo_SIR()
    % Test case using an SIR model
    dt = 0.1;               % 6 min
    D = 30;                 % Simulate for D days
    N_t = floor(D*24/dt);   % Corresponding number of time steps
    T = dt*N_t;             % End time
    U_0 = [50 1 0];
    f_handle = @f;
    [u, t] = ode_FE(f_handle, U_0, dt, T);

    S = u(:,1);
    I = u(:,2);
    R = u(:,3);
    plot(t, S, 'b-', t, I, 'r-', t, R, 'g-');
    legend('S', 'I', 'R');
    xlabel('hours');

    % Consistency check:
    N = S(1) + I(1) + R(1);
    tol = 1E-12;   % Tolerance for comparing real numbers (avoids shadowing built-in eps)
    for n = 1:length(S)
        err = abs(S(n) + I(n) + R(n) - N);
        if err > tol
            error('demo_SIR: error=%g', err);
        end
    end
end

function result = f(u, t)
    beta = 10/(40*8*24);
    gamma = 3/(15*24);
    S = u(1); I = u(2); R = u(3);
    result = [-beta*S*I, beta*S*I - gamma*I, gamma*I];
end
```

Recall that the u returned from ode_FE contains all components (\(S\), \(I\), \(R\)) in the solution vector at all time points. We therefore need to extract the \(S\), \(I\), and \(R\) values in separate arrays for further analysis and easy plotting.

Another key feature of this higher-quality code is the consistency check. By adding the three differential equations in the SIR model, we realize that \(S' + I' + R'=0\), which means that \(S+I+R=\mbox{const}\). We can check that this relation holds by comparing \(S^n+I^n+R^n\) to the sum of the initial conditions. (The Forward Euler updates add and subtract the same terms, so the discrete sum is conserved up to rounding errors, which is why a small tolerance is appropriate.) The check is not a full-fledged verification, but it is much better than doing nothing and hoping that the computation is correct. Exercise 47 (Find an appropriate time step; SIR model) suggests another method for controlling the quality of the numerical solution.
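To see that such a check can actually catch a programming error, the Python sketch below runs the SIR scheme twice: once correctly and once with a deliberately introduced (hypothetical) bug where the I update uses the new value \(S^{n+1}\) instead of \(S^n\). The function names and the tolerance 1e-10 are our own choices; the tolerance is looser than the 1E-12 in demo_SIR.m because rounding errors accumulate over thousands of steps.

```python
def simulate_sir(S0, I0, R0, beta, gamma, dt, N_t, buggy=False):
    """Forward Euler for the SIR model, returning lists S, I, R.

    With buggy=True the I update deliberately uses the new S value
    S[n+1] instead of S[n] (a hypothetical programming error).
    """
    S, I, R = [S0], [I0], [R0]
    for n in range(N_t):
        S.append(S[n] - dt * beta * S[n] * I[n])
        S_used = S[n + 1] if buggy else S[n]
        I.append(I[n] + dt * beta * S_used * I[n] - dt * gamma * I[n])
        R.append(R[n] + dt * gamma * I[n])
    return S, I, R


def max_conservation_error(S, I, R):
    """Largest deviation of S+I+R from its initial value."""
    N = S[0] + I[0] + R[0]
    return max(abs(s + i + r - N) for s, i, r in zip(S, I, R))


params = dict(S0=50.0, I0=1.0, R0=0.0, beta=10 / (40 * 8 * 24),
              gamma=3 / (15 * 24), dt=0.1, N_t=7200)
err_ok = max_conservation_error(*simulate_sir(**params))
err_bug = max_conservation_error(*simulate_sir(**params, buggy=True))
print(err_ok, err_bug)
```

The correct scheme stays at rounding-error level, while the buggy one drifts far beyond any reasonable tolerance.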

Time-restricted immunity¶

Let us now assume that immunity after the disease only lasts for a certain time period. This means that there is transport from the R state to the S state:

Modeling the loss of immunity is very similar to modeling recovery from the disease: the number of people losing immunity is proportional to the number of recovered individuals and the length of the time interval \(\Delta t\). We can therefore write the loss in the R category as \(-\nu\Delta t R\) in time \(\Delta t\), where \(\nu^{-1}\) is the typical time it takes to lose immunity. The loss in \(R(t)\) is a gain in \(S(t)\). The “budgets” for the categories therefore become

\begin{align*}
S^{n+1} &= S^n - \beta\Delta t S^n I^n + \nu\Delta t R^n,\\
I^{n+1} &= I^n + \beta\Delta t S^n I^n - \gamma\Delta t I^n,\\
R^{n+1} &= R^n + \gamma\Delta t I^n - \nu\Delta t R^n.
\end{align*}

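Dividing by \(\Delta t\) and letting \(\Delta t\rightarrow 0\) gives the differential equation system

\begin{align*}
S' &= -\beta SI + \nu R,\\
I' &= \beta SI - \gamma I,\\
R' &= \gamma I - \nu R.
\end{align*}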

This system can be solved by the same methods as we demonstrated for the original SIR model. Only one modification in the program is necessary: adding dt*nu*R(n) to the S(n+1) update and subtracting the same quantity in the R(n+1) update:

```matlab
for n = 1:N_t
    S(n+1) = S(n) - dt*beta*S(n)*I(n) + dt*nu*R(n);
    I(n+1) = I(n) + dt*beta*S(n)*I(n) - dt*gamma*I(n);
    R(n+1) = R(n) + dt*gamma*I(n) - dt*nu*R(n);
end
```

The modified code is found in the file SIR2.m.
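A Python sketch of the same loop (sir_immunity_loss is a hypothetical name) with the parameter values quoted in the next paragraph: \(\nu^{-1}=50\) days, \(\beta=0.00033\), \(\gamma = 3/(15\cdot 24)\), and 300 days with \(\Delta t = 0.1\) h. The term \(\nu\Delta t R^n\) leaves R and enters S, so \(S+I+R\) should still be conserved.

```python
def sir_immunity_loss(S0, I0, R0, beta, gamma, nu, dt, N_t):
    """Forward Euler for the SIR model with loss of immunity (R -> S)."""
    S, I, R = [S0], [I0], [R0]
    for n in range(N_t):
        S.append(S[n] - dt * beta * S[n] * I[n] + dt * nu * R[n])
        I.append(I[n] + dt * beta * S[n] * I[n] - dt * gamma * I[n])
        R.append(R[n] + dt * gamma * I[n] - dt * nu * R[n])
    return S, I, R


dt = 0.1                                  # time unit: 1 h
S, I, R = sir_immunity_loss(
    S0=50.0, I0=1.0, R0=0.0,
    beta=0.00033,                         # value quoted in the text
    gamma=3 / (15 * 24),
    nu=1 / (50 * 24),                     # immunity lasts about 50 days
    dt=dt, N_t=int(round(300 * 24 / dt)))  # 300 days

total = [s + i + r for s, i, r in zip(S, I, R)]
print(min(total), max(total), min(S), min(I))
```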

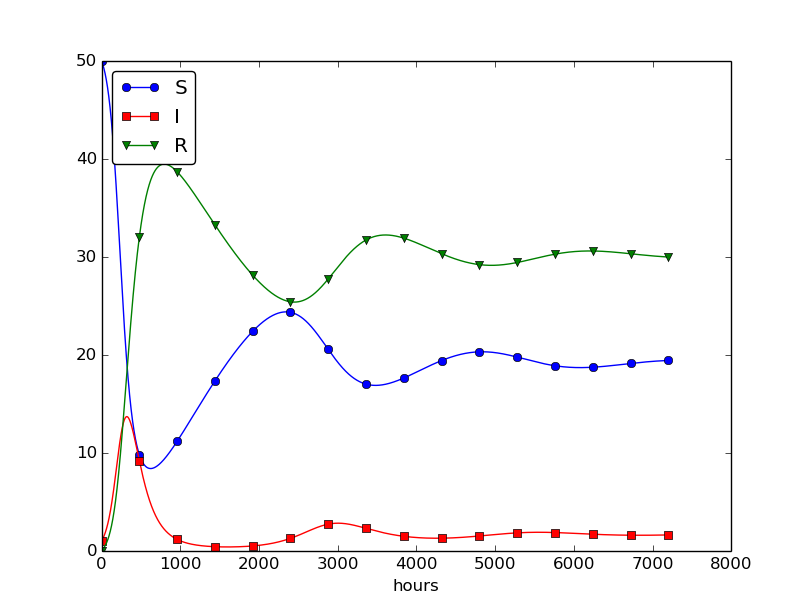

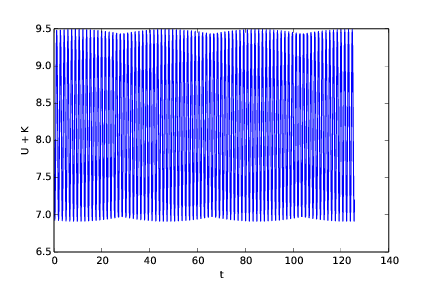

Setting \(\nu^{-1}\) to 50 days, reducing \(\beta\) by a factor of 4 compared to the previous example (\(\beta=0.00033\)), and simulating for 300 days gives an oscillatory behavior in the categories, as depicted in Figure Including loss of immunity. It is now easy to play around and study how the parameters affect the spreading of the disease. For example, making the disease slightly more effective (increasing \(\beta\) to 0.00043) and increasing the average time to loss of immunity to 90 days (i.e., reducing \(\nu\)) lead to other oscillations, shown in Figure Increasing \(\beta\) and reducing \(\nu\) compared to Figure Including loss of immunity.

Incorporating vaccination¶

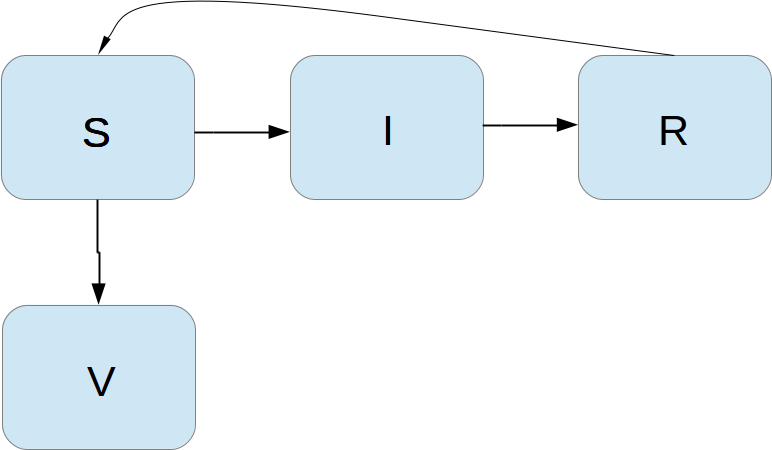

We can extend the model to also include vaccination. To this end, it can be useful to track those who are vaccinated and those who are not. So, we introduce a fourth category, V, for those who have taken a successful vaccination. Furthermore, we assume that in a time interval \(\Delta t\), a fraction \(p\Delta t\) of the S category is subject to a successful vaccination. This means that in the time \(\Delta t\), \(p\Delta t S\) people leave from the S to the V category. Since the vaccinated ones cannot get the disease, there is no impact on the I or R categories. We can visualize the categories, and the movement between them, as

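The new, extended differential equations with the \(V\) quantity become

\begin{align*}
S' &= -\beta SI + \nu R - pS,\\
V' &= pS,\\
I' &= \beta SI - \gamma I,\\
R' &= \gamma I - \nu R.
\end{align*}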

We shall refer to this model as the SIRV model.

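The new equation for \(V'\) poses no difficulties when it comes to the numerical method. In a Forward Euler scheme we simply add an update

\[ V^{n+1} = V^n + p\Delta t S^n, \]

and subtract the same term, \(p\Delta t S^n\), in the update of \(S^{n+1}\).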

The program needs to store \(V(t)\) in an additional array V, and the plotting command must be extended with more arguments to plot V versus t as well. The complete code is found in the file SIRV1.m.
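A Python sketch of the SIRV Forward Euler loop (sirv is a hypothetical name), using \(p=0.0005\) and the other parameters of the loss-of-immunity example above. Since vaccination only moves people from S to V, the total \(S+I+R+V\) should stay constant and \(V\) should never decrease.

```python
def sirv(S0, I0, R0, V0, beta, gamma, nu, p, dt, N_t):
    """Forward Euler for the SIRV model (vaccination moves people S -> V)."""
    S, I, R, V = [S0], [I0], [R0], [V0]
    for n in range(N_t):
        S.append(S[n] - dt*beta*S[n]*I[n] + dt*nu*R[n] - dt*p*S[n])
        V.append(V[n] + dt*p*S[n])
        I.append(I[n] + dt*beta*S[n]*I[n] - dt*gamma*I[n])
        R.append(R[n] + dt*gamma*I[n] - dt*nu*R[n])
    return S, I, R, V


dt = 0.1   # time unit: 1 h
S, I, R, V = sirv(S0=50.0, I0=1.0, R0=0.0, V0=0.0,
                  beta=0.00033, gamma=3/(15*24), nu=1/(50*24),
                  p=0.0005, dt=dt, N_t=int(round(300*24/dt)))
total = [s + i + r + v for s, i, r, v in zip(S, I, R, V)]
print(S[-1], I[-1], R[-1], V[-1])
```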

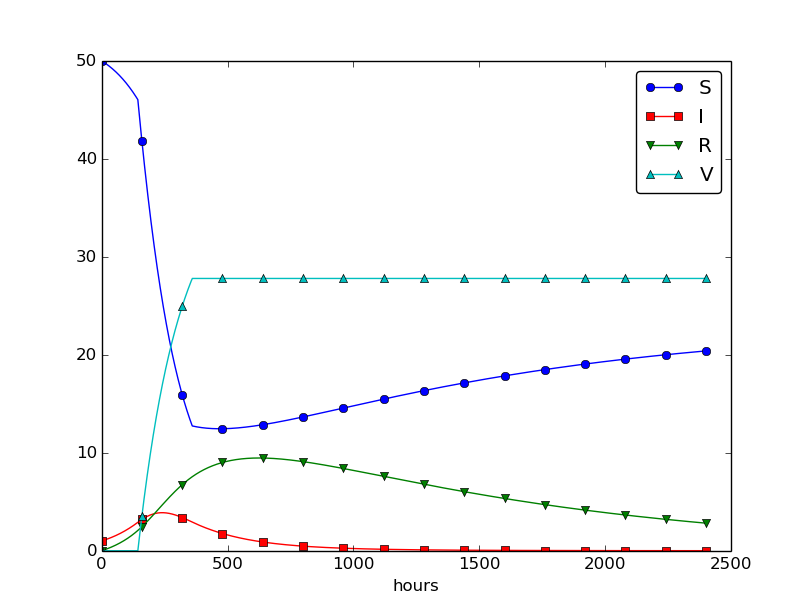

Using \(p=0.0005\) and \(p=0.0001\) as values for the vaccine efficiency parameter, the effect of vaccination is seen in Figure The effect of vaccination: \(p=0.0005\) (left) and \(p=0.0001\) (right) (other parameters are as in Figure Including loss of immunity).

Discontinuous coefficients: a vaccination campaign¶

What about modeling a vaccination campaign? Imagine that six days after the outbreak of the disease, the local health station launches a vaccination campaign. They reach out to many people, say 10 times as efficiently as in the previous (constant vaccination) case. If the campaign lasts for 10 days we can write

\[ p(t) = \begin{cases} 0.005, & 6\cdot 24 \le t \le 15\cdot 24,\\ 0, & \text{otherwise.} \end{cases} \]

Note that we must multiply the \(t\) value by 24 because \(t\) is measured in hours, not days. In the differential equation system, \(pS(t)\) must be replaced by \(p(t)S(t)\), and in this case we get a differential equation system with a term that is discontinuous. This is usually quite a challenge in mathematics, but as long as we solve the equations numerically in a program, a discontinuous coefficient is easy to treat.

There are two ways to implement the discontinuous coefficient \(p(t)\): through a function and through an array. The function approach is perhaps the easiest:

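```matlab
function value = p(t)
    % Test both bounds with &&: the chained form 6*24 <= t <= 15*24 is
    % evaluated as (6*24 <= t) <= 15*24, which is always true in Matlab.
    if 6*24 <= t && t <= 15*24
        value = 0.005;
    else
        value = 0;
    end
end
```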

In the code for updating the arrays S and V we get a term p(t(n))*S(n).
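For comparison, a Python sketch of the same piecewise coefficient. Python, unlike Matlab, evaluates a chained comparison such as 6*24 <= t <= 15*24 as (6*24 <= t) and (t <= 15*24), so the compact form works there. The second (hypothetical) function mimics what Matlab computes for the chained form, to show why it is always true.

```python
def p(t):
    """Vaccination rate during a campaign from day 6 to day 15 (t in hours)."""
    if 6 * 24 <= t <= 15 * 24:   # Python: (6*24 <= t) and (t <= 15*24)
        return 0.005
    return 0.0


def p_matlab_like(t):
    """Mimic Matlab's left-to-right evaluation of 6*24 <= t <= 15*24.

    The first comparison yields 0 or 1, and both are <= 360,
    so the condition is always true.
    """
    return (6 * 24 <= t) <= 15 * 24


print([p(t) for t in (0, 143.9, 144, 360, 360.1)])
print([p_matlab_like(t) for t in (0, 200, 1000)])
```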

We can also let \(p(t)\) be an array filled with correct values prior to the simulation. Then we need to allocate an array p of length N_t+1 and find the indices corresponding to the time period between 6 and 15 days. These indices are found by dividing the time points by \(\Delta t\) and adding 1, since Matlab arrays start at index 1. That is,

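```matlab
p = zeros(N_t+1, 1);
% round() guards against tiny floating-point errors in 6*24/dt and 15*24/dt
start_index = round(6*24/dt) + 1;
stop_index = round(15*24/dt) + 1;
p(start_index:stop_index) = 0.005;
```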

The \(p(t)S(t)\) term in the updating formulas for \(S\) and \(V\) simply becomes p(n)*S(n). The file SIRV2.m contains a program based on filling an array p.
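The index arithmetic can be checked with a short Python sketch. Python lists start at 0, so index n corresponds to \(t_n = n\Delta t\) (in Matlab the same time point has index n+1). The simulation length of 30 days is an assumption for illustration only; any length beyond 15 days works.

```python
dt = 0.1          # time step in hours, as in SIR1.m
D = 30            # simulation length in days (assumed for illustration)
N_t = int(round(D * 24 / dt))
p = [0.0] * (N_t + 1)

# 0-based indices: index n <-> t = n*dt. round() protects against
# small floating-point errors in the division.
start_index = int(round(6 * 24 / dt))
stop_index = int(round(15 * 24 / dt))
for n in range(start_index, stop_index + 1):
    p[n] = 0.005

print(start_index, stop_index, p[start_index - 1], p[start_index])
```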

The effect of a vaccination campaign is illustrated in Figure The effect of a vaccination campaign. All the data are as in Figure The effect of vaccination: \(p=0.0005\) (left) and \(p=0.0001\) (right) (left), except that \(p\) is ten times stronger for a period of 10 days and \(p=0\) elsewhere.

Oscillating one-dimensional systems¶

Numerous engineering constructions and devices contain materials that act like springs. Such springs give rise to oscillations, and controlling oscillations is a key engineering task. We shall now learn to simulate oscillating systems.

As always, we start with the simplest meaningful mathematical model, which for oscillations is a second-order differential equation:

\[ u''(t) = -\omega^2 u(t), \tag{68} \]

where \(\omega\) is a given physical parameter. Equation (68) models a one-dimensional system oscillating without damping (i.e., with negligible damping). One-dimensional here means that some motion takes place along one dimension only in some coordinate system. Along with (68) we need the two initial conditions \(u(0)\) and \(u'(0)\).

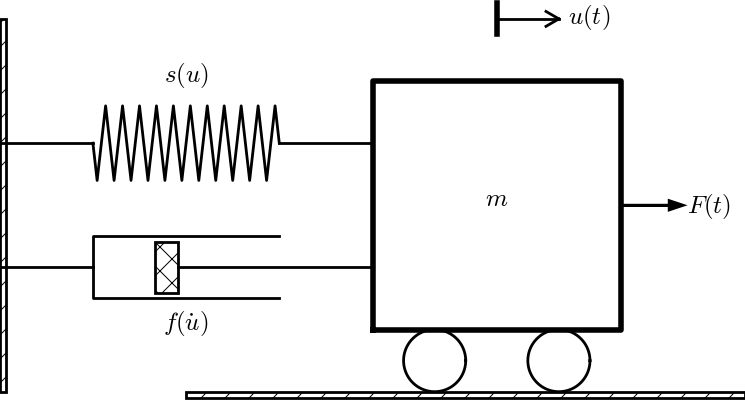

Derivation of a simple model¶

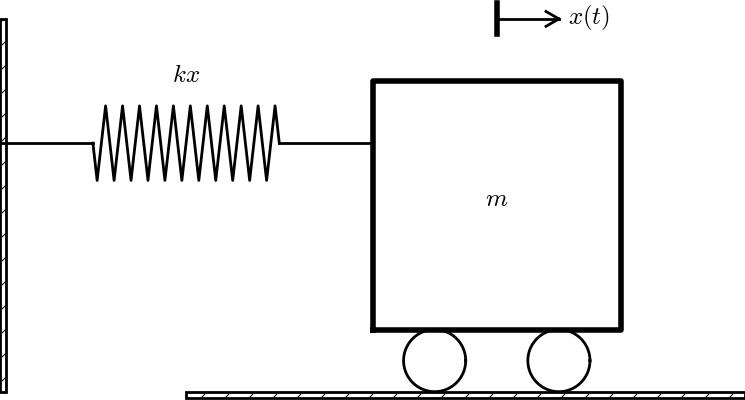

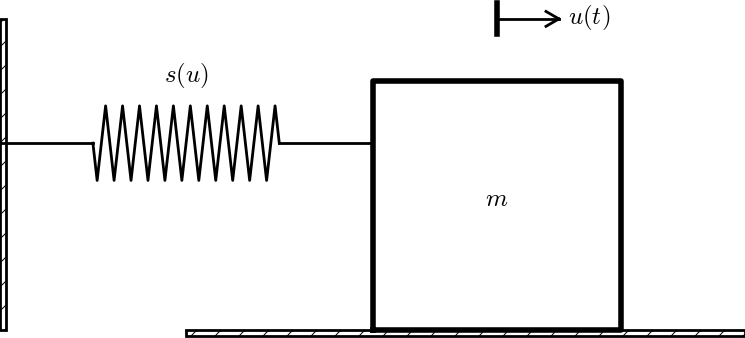

Many engineering systems undergo oscillations, and differential equations constitute the key tool to understand, predict, and control the oscillations. We start with the simplest possible model that captures the essential dynamics of an oscillating system. Some body with mass \(m\) is attached to a spring and moves along a line without friction, see Figure Sketch of a one-dimensional, oscillating dynamic system (without friction) for a sketch (rolling wheels indicate “no friction”). When the spring is stretched (or compressed), the spring force pulls (or pushes) the body back and works “against” the motion. More precisely, let \(x(t)\) be the position of the body on the \(x\) axis, along which the body moves. The spring is not stretched when \(x=0\), so the force is zero, and \(x=0\) is hence the equilibrium position of the body. The spring force is \(-kx\), where \(k\) is a constant to be measured. We assume that there are no other forces (e.g., no friction). Newton’s 2nd law of motion \(F=ma\) then has \(F=-kx\) and \(a=\ddot x\),

\[ m\ddot x = -kx, \tag{69} \]

which can be rewritten as

\[ \ddot x + \omega^2 x = 0, \tag{70} \]

by introducing \(\omega = \sqrt{k/m}\) (which is very common).

Equation (70) is a second-order differential equation, and therefore we need two initial conditions, one on the position \(x(0)\) and one on the velocity \(x'(0)\). Here we choose the body to be at rest, but moved away from its equilibrium position:

\[ x(0) = X_0,\quad x'(0) = 0. \]

The exact solution of (70) with these initial conditions is \(x(t)=X_0\cos\omega t\). This can easily be verified by substituting into (70) and checking the initial conditions. The solution tells that such a spring-mass system oscillates back and forth as described by a cosine curve.
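The substitution can also be checked numerically. The Python sketch below (residual is a hypothetical helper) approximates \(x''\) by the standard second-order finite difference and evaluates \(x''+\omega^2x\) for \(x(t)=X_0\cos\omega t\) at many time points; it should be close to zero, while a wrong \(\omega\) gives a clearly nonzero result.

```python
import math


def residual(x, t, omega, h=1e-4):
    """Finite-difference estimate of x''(t) + omega**2 * x(t)."""
    x_dd = (x(t + h) - 2 * x(t) + x(t - h)) / h**2
    return x_dd + omega**2 * x(t)


X_0, omega = 2.0, 2.0


def x(t):
    return X_0 * math.cos(omega * t)   # the claimed exact solution


worst = max(abs(residual(x, 0.05 * k, omega)) for k in range(200))
v0 = (x(1e-6) - x(-1e-6)) / 2e-6      # approximates x'(0)
wrong = residual(x, 0.3, omega=3.0)   # same x, but the wrong omega
print(worst, x(0.0), v0, wrong)
```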

The differential equation (70) appears in numerous other contexts. A classical example is a simple pendulum that oscillates back and forth. Physics books derive, from Newton’s second law of motion, that

\[ mL\ddot\theta + mg\sin\theta = 0, \]

where \(m\) is the mass of the body at the end of a pendulum with length \(L\), \(g\) is the acceleration of gravity, and \(\theta\) is the angle the pendulum makes with the vertical. For small angles \(\theta\) we have \(\sin \theta\approx \theta\), and after dividing by \(mL\) we get (70) with \(x=\theta\), \(\omega = \sqrt{g/L}\), \(x(0)=\Theta\), and \(x'(0)=0\), if \(\Theta\) is the initial angle and the pendulum is at rest at \(t=0\).

Numerical solution¶

We have not yet looked at numerical methods for handling second-order derivatives directly (such methods are an option), but we know how to solve first-order differential equations and even systems of first-order equations. With a little, yet very common, trick we can rewrite (70) as a first-order system of two differential equations. We introduce \(u=x\) and \(v=x'=u'\) as two new unknown functions. The two corresponding equations arise from the definition \(v=u'\) and the original equation (70):

\begin{align}
u' &= v, \tag{71}\\
v' &= -\omega^2 u. \tag{72}
\end{align}

(Notice that we can use \(u''=v'\) to remove the second-order derivative from Newton’s 2nd law.)

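We can now apply the Forward Euler method to (71)-(72), exactly as we did in the section A Forward Euler method for the differential equation system:

\begin{align}
\frac{u^{n+1}-u^n}{\Delta t} &= v^n, \tag{73}\\
\frac{v^{n+1}-v^n}{\Delta t} &= -\omega^2 u^n, \tag{74}
\end{align}

resulting in the computational scheme

\begin{align}
u^{n+1} &= u^n + \Delta t\, v^n, \tag{75}\\
v^{n+1} &= v^n - \Delta t\,\omega^2 u^n. \tag{76}
\end{align}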

Programming the numerical method; the special case¶

A simple program for (75)-(76) follows the same ideas as in the section Programming the numerical method; the special case:

```matlab
omega = 2;
P = 2*pi/omega;        % period
dt = P/20;             % 20 time steps per period
T = 3*P;               % simulate three periods
N_t = floor(T/dt);
t = linspace(0, N_t*dt, N_t+1);
u = zeros(N_t+1, 1);
v = zeros(N_t+1, 1);

% Initial condition
X_0 = 2;
u(1) = X_0;
v(1) = 0;

% Step equations forward in time
for n = 1:N_t
    u(n+1) = u(n) + dt*v(n);
    v(n+1) = v(n) - dt*omega^2*u(n);
end

plot(t, u, 'b-', t, X_0*cos(omega*t), 'r--');
legend('numerical', 'exact', 'Location', 'northwest');
xlabel('t');
print('tmp', '-dpdf'); print('tmp', '-dpng');
```

(See file osc_FE_special_case.m.)

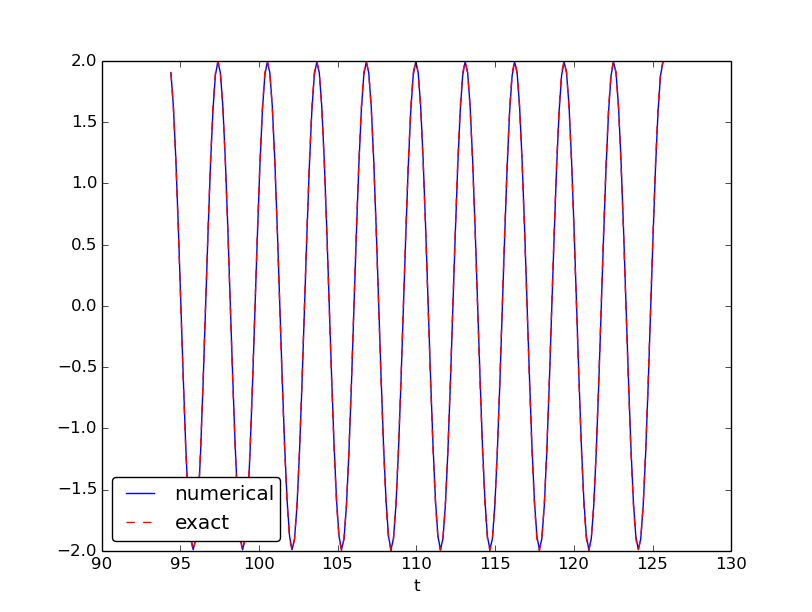

Since we already know the exact solution as \(u(t)=X_0\cos\omega t\), we have reasoned as follows to find an appropriate simulation interval \([0,T]\) and also how many points we should choose. The solution has a period \(P=2\pi/\omega\). (The period \(P\) is the time difference between two peaks of the \(u(t)\sim\cos\omega t\) curve.) Simulating for three periods of the cosine function, \(T=3P\), and choosing \(\Delta t\) such that there are 20 intervals per period gives \(\Delta t=P/20\) and a total of \(N_t=T/\Delta t\) intervals. The rest of the program is a straightforward coding of the Forward Euler scheme.

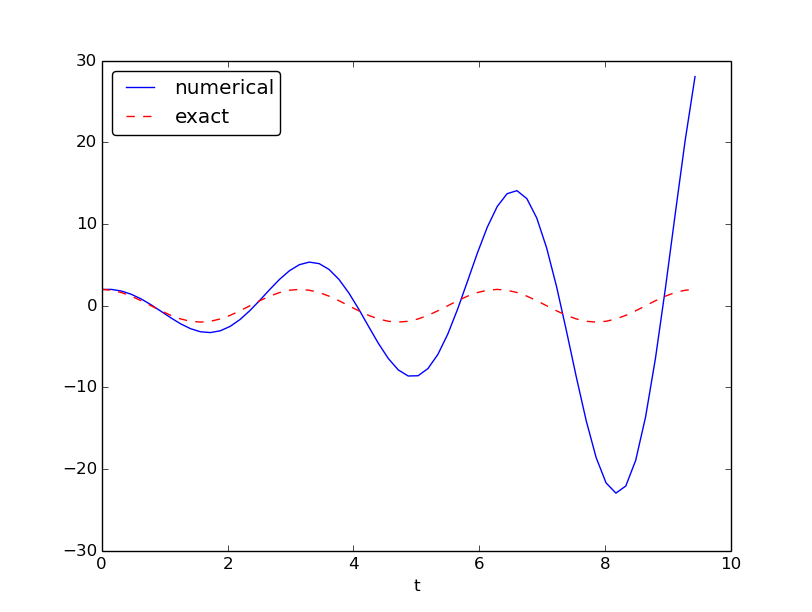

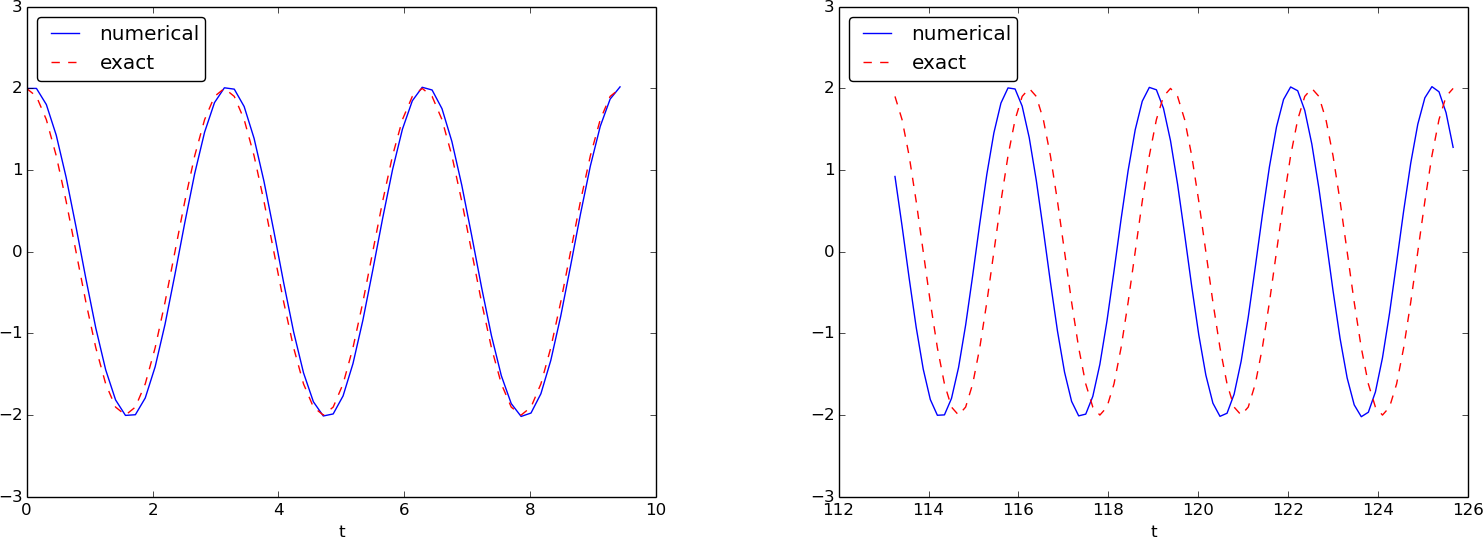

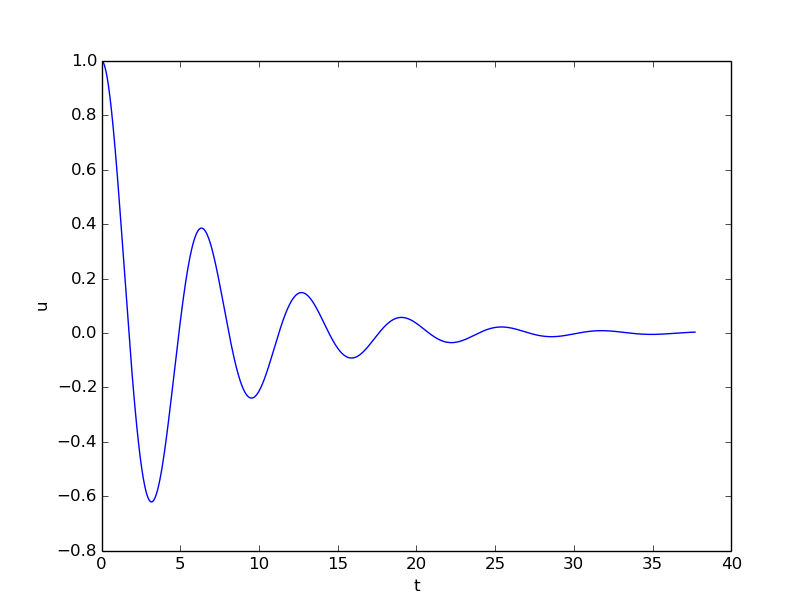

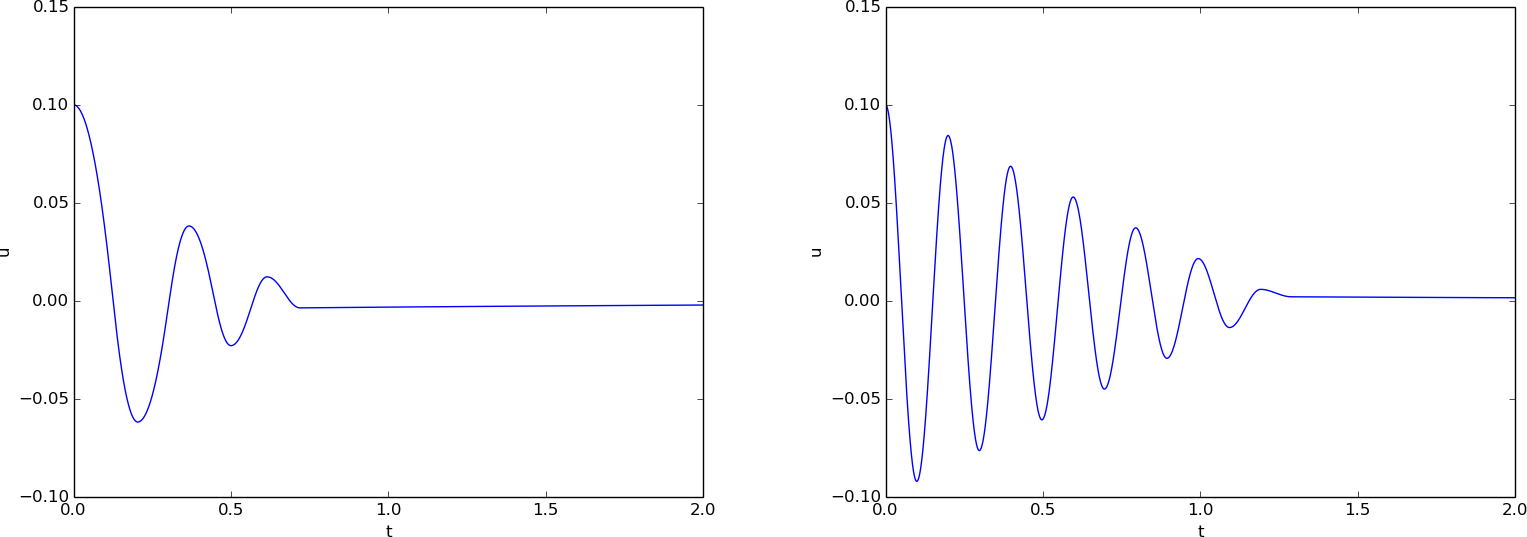

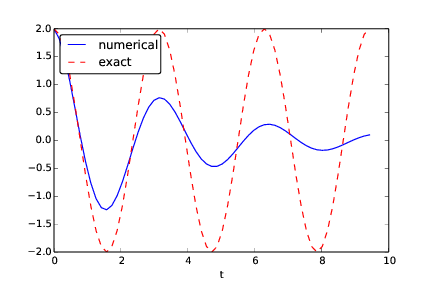

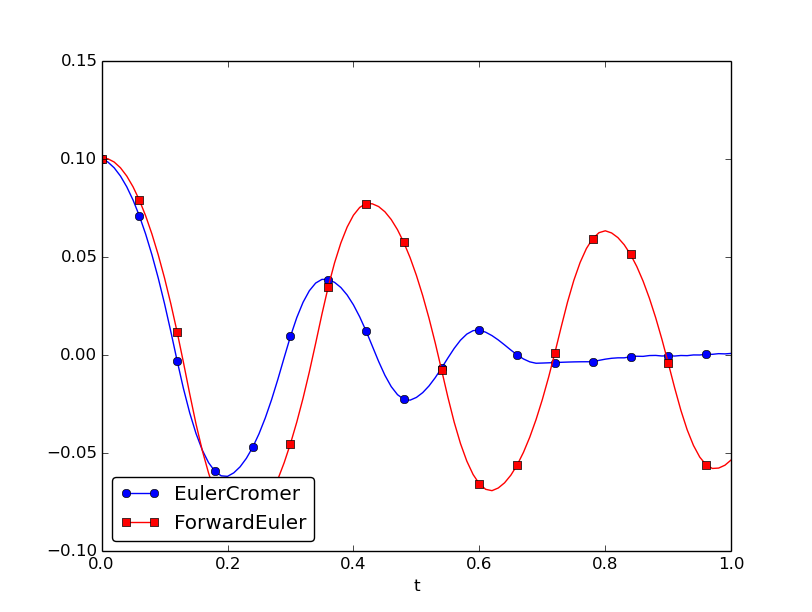

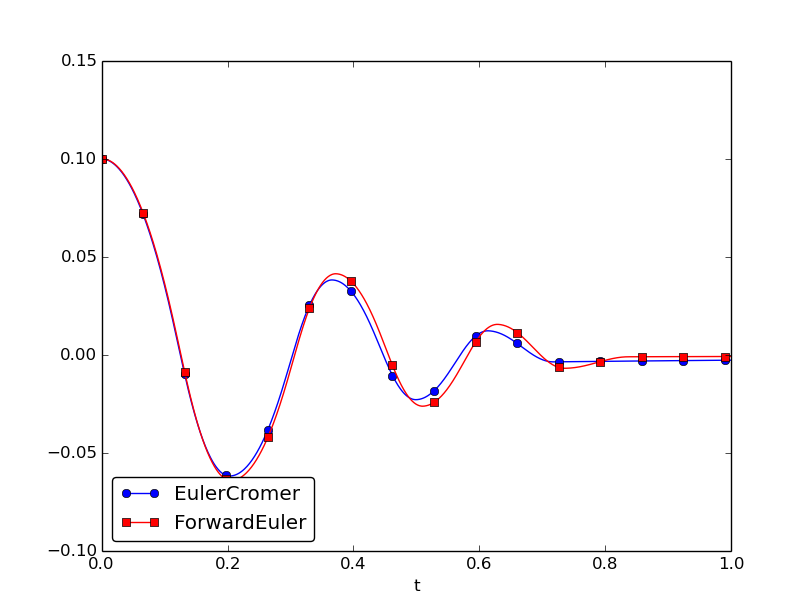

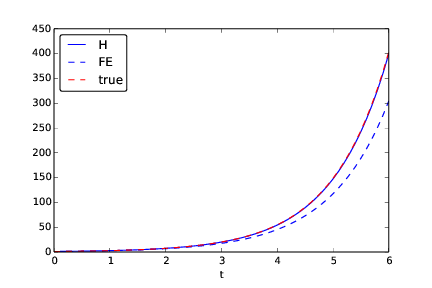

Figure Simulation of an oscillating system shows a comparison between the numerical solution and the exact solution of the differential equation. To our surprise, the numerical solution looks wrong. Is this discrepancy due to a programming error or a problem with the Forward Euler method?

First of all, even before trying to run the program, you should sit down and compute two steps in the time loop with a calculator so you have some intermediate results to compare with. Using \(X_0=2\), \(\Delta t=0.157079632679\), and \(\omega=2\), we get \(u^1=2\), \(v^1=-1.25663706\), \(u^2=1.80260791\), and \(v^2=-2.51327412\). Such calculations show that the program is seemingly correct. (Later, we can use such values to construct a unit test and a corresponding test function.)
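The hand calculation can be reproduced in a few lines of Python, which is also a natural starting point for the unit test mentioned above. This is a sketch with our own variable names; \(\Delta t = P/20\) with \(P=2\pi/\omega\) gives the value 0.157079632679 used in the text.

```python
import math

omega = 2.0
P = 2 * math.pi / omega      # period
dt = P / 20                  # 20 steps per period
X_0 = 2.0

u, v = [X_0], [0.0]
for n in range(2):           # two Forward Euler steps
    u.append(u[n] + dt * v[n])
    v.append(v[n] - dt * omega**2 * u[n])
print(dt, u, v)
```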

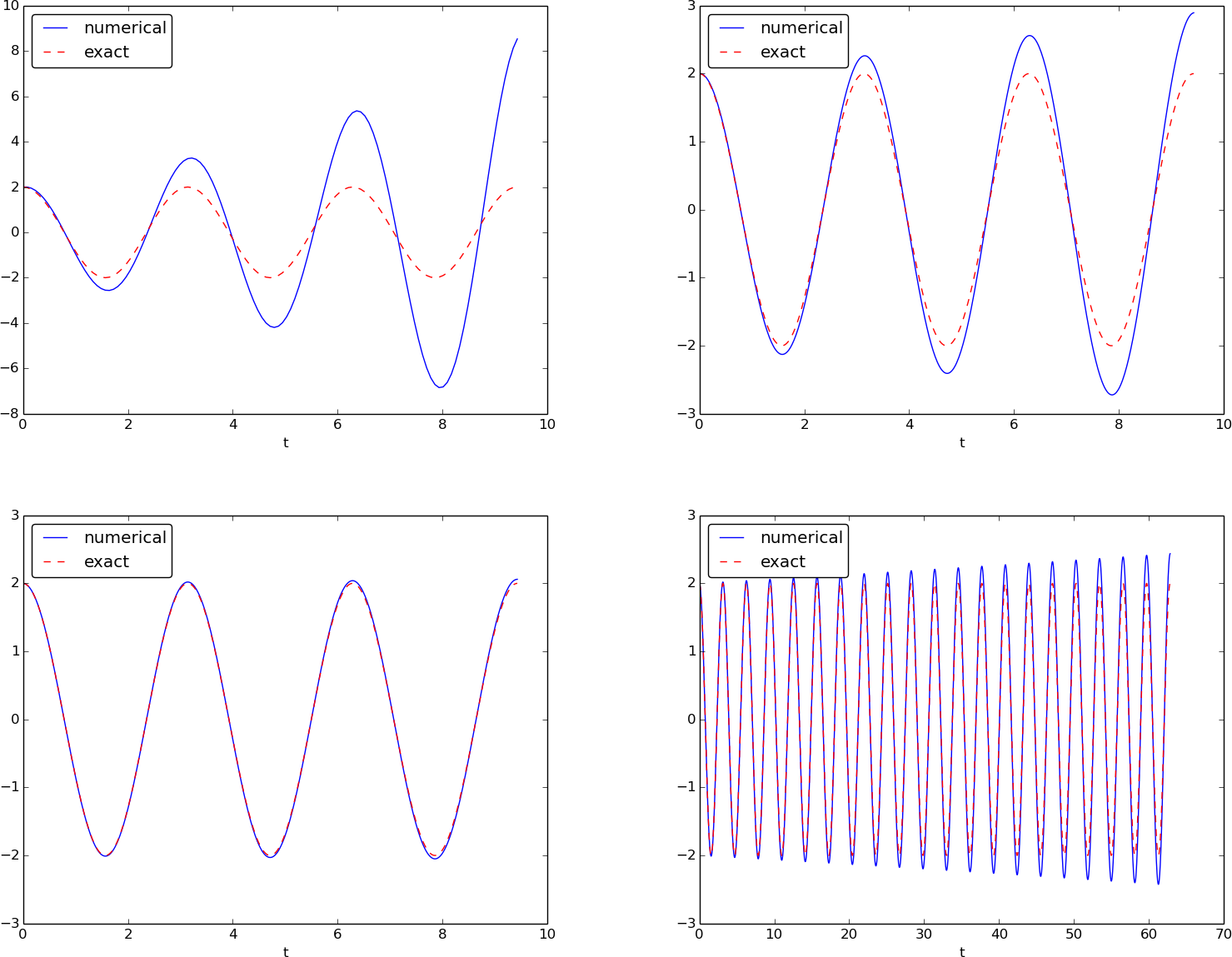

The next step is to reduce the discretization parameter \(\Delta t\) and see if the results become more accurate. Figure Simulation of an oscillating system with different time steps. Upper left: 40 steps per oscillation period. Upper right: 160 steps per period. Lower left: 2000 steps per period. Lower right: 2000 steps per period, but longer simulation shows the numerical and exact solution for the cases \(\Delta t = P/40, P/160, P/2000\). The results clearly become better, and the finest resolution gives graphs that cannot be visually distinguished. Nevertheless, the finest resolution involves 6000 computational intervals in total, which is quite a lot. This is no problem on a modern laptop, however, as the computations take just a fraction of a second.

Simulation of an oscillating system with different time steps. Upper left: 40 steps per oscillation period. Upper right: 160 steps per period. Lower left: 2000 steps per period. Lower right: 2000 steps per period, but longer simulation

Although 2000 intervals per oscillation period seem sufficient for an accurate numerical solution, the lower right graph in Figure Simulation of an oscillating system with different time steps. Upper left: 40 steps per oscillation period. Upper right: 160 steps per period. Lower left: 2000 steps per period. Lower right: 2000 steps per period, but longer simulation shows that if we increase the simulation time, here to 20 periods, there is a little growth of the amplitude, which becomes significant over time. The conclusion is that the Forward Euler method has a fundamental problem with its growing amplitudes, and that a very small \(\Delta t\) is required to achieve satisfactory results. The longer the simulation is, the smaller \(\Delta t\) has to be. It is certainly time to look for more effective numerical methods!
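The growing amplitude can be quantified. Expanding the squares shows that one Forward Euler step (75)-(76) multiplies \(E=\omega^2u^2+v^2\) by exactly \(1+(\omega\Delta t)^2\), so the amplitude grows by \(\sqrt{1+(\omega\Delta t)^2}\) per step, and since \(\omega\Delta t = 2\pi/(\text{steps per period})\) the growth per period depends only on the resolution. The Python sketch below (helper names are ours) checks the per-step ratio and evaluates the growth after 20 periods with 2000 steps per period.

```python
import math


def fe_energy_ratios(omega, dt, n_steps, X_0=2.0):
    """Ratios E^{n+1}/E^n for Forward Euler on u' = v, v' = -omega^2 u,
    where E = omega^2 u^2 + v^2."""
    u, v = X_0, 0.0
    E = omega**2 * u**2 + v**2
    ratios = []
    for _ in range(n_steps):
        u, v = u + dt * v, v - dt * omega**2 * u   # right side uses old u, v
        E_new = omega**2 * u**2 + v**2
        ratios.append(E_new / E)
        E = E_new
    return ratios


def amplitude_growth(steps_per_period, periods):
    """Amplitude growth factor of Forward Euler after the given periods."""
    a = 2 * math.pi / steps_per_period      # omega*dt
    return (1 + a**2) ** (0.5 * steps_per_period * periods)


omega = 2.0
dt = (2 * math.pi / omega) / 20
ratios = fe_energy_ratios(omega, dt, n_steps=5)
print(ratios, 1 + (omega * dt)**2)
print(amplitude_growth(2000, 20))   # 2000 steps per period, 20 periods
```

With 2000 steps per period the amplitude has grown by roughly 20% after 20 periods, in line with the lower right graph.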

A magic fix of the numerical method¶

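In the Forward Euler scheme,

\begin{align*}
u^{n+1} &= u^n + \Delta t\, v^n,\\
v^{n+1} &= v^n - \Delta t\,\omega^2 u^n,
\end{align*}

we can replace \(u^n\) in the last equation by the recently computed value \(u^{n+1}\) from the first equation:

\begin{align}
u^{n+1} &= u^n + \Delta t\, v^n, \tag{77}\\
v^{n+1} &= v^n - \Delta t\,\omega^2 u^{n+1}. \tag{78}
\end{align}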

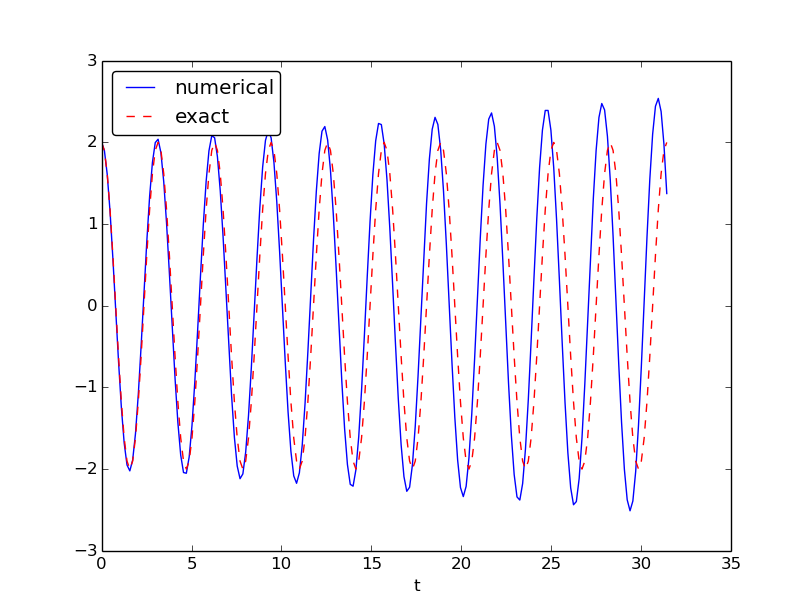

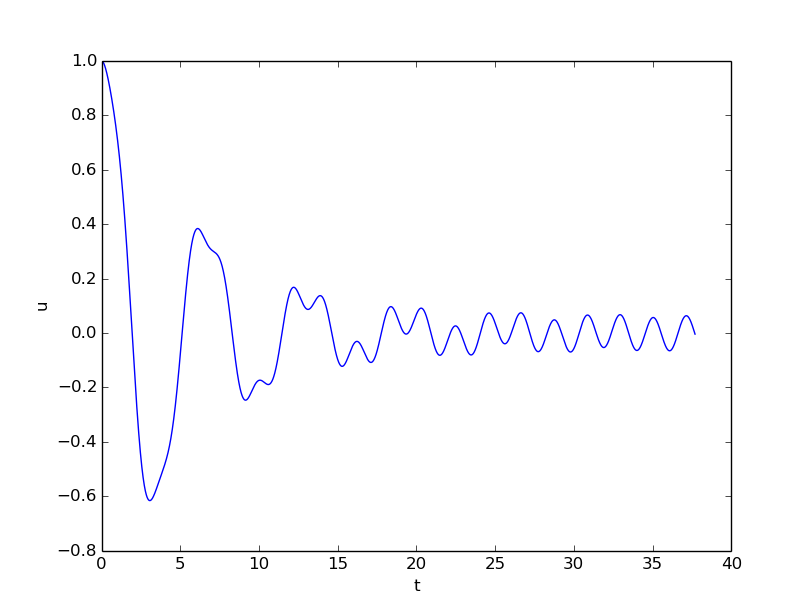

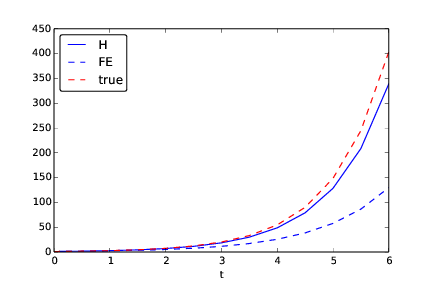

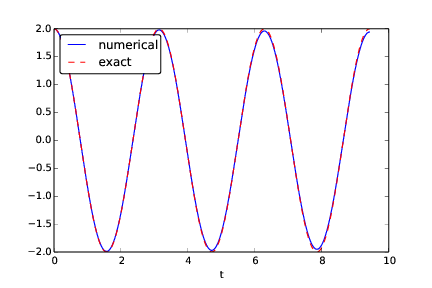

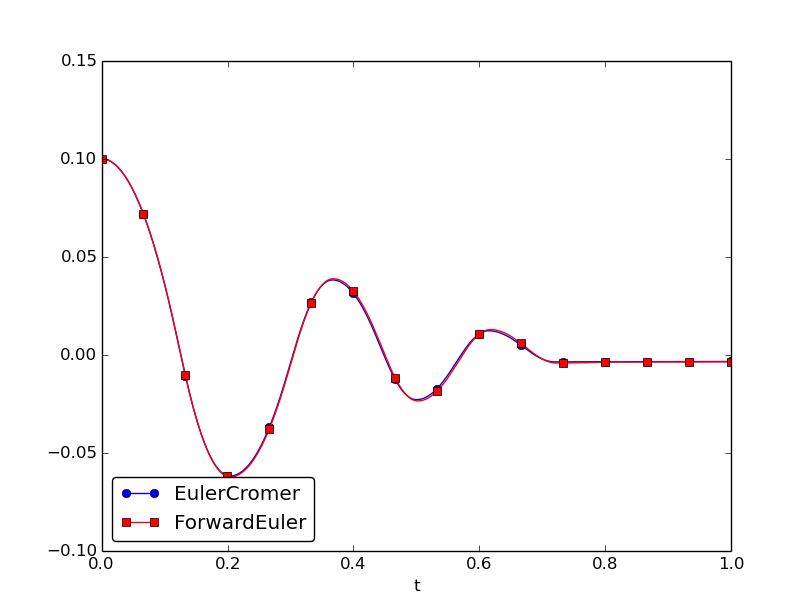

Before justifying this fix more mathematically, let us try it on the previous example. The results appear in Figure Adjusted method: first three periods (left) and period 36-40 (right). We see that the amplitude does not grow, but the phase is not entirely correct. After 40 periods (Figure Adjusted method: first three periods (left) and period 36-40 (right) right) we see a significant difference between the numerical and the exact solution. Decreasing \(\Delta t\) decreases the error. For example, with 2000 intervals per period, we only see a small phase error even after 50,000 periods (!). We can safely conclude that the fix results in an excellent numerical method!

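Let us interpret the adjusted scheme mathematically. First we order (77)-(78) such that the difference approximations to derivatives become transparent:

\begin{align}
\frac{u^{n+1}-u^n}{\Delta t} &= v^n, \tag{79}\\
\frac{v^{n+1}-v^n}{\Delta t} &= -\omega^2 u^{n+1}. \tag{80}
\end{align}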

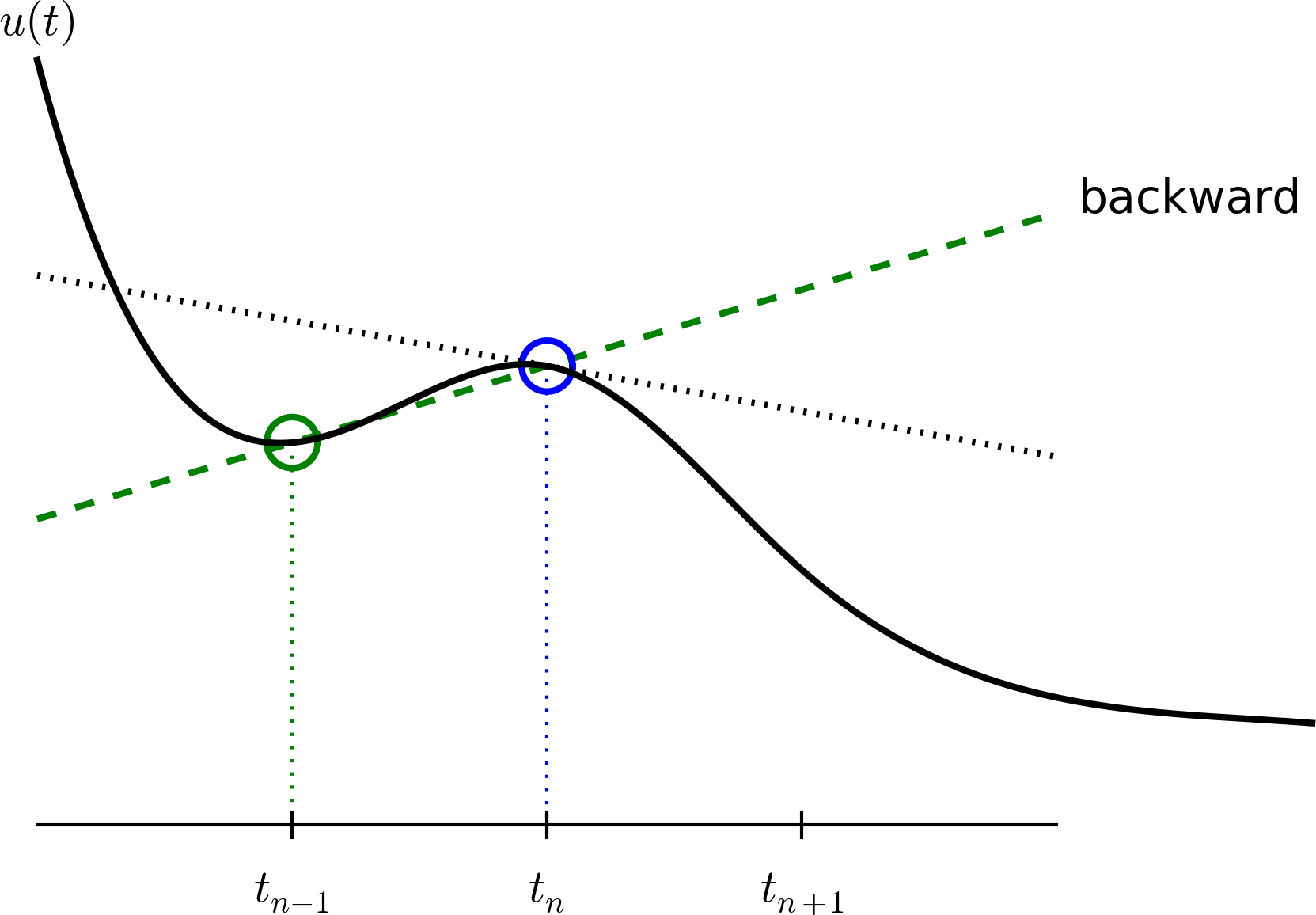

We interpret (79) as the differential equation sampled at mesh point \(t_n\), because we have \(v^n\) on the right-hand side. The left-hand side is then a forward difference or Forward Euler approximation to the derivative \(u'\), see Figure Illustration of a forward difference approximation to the derivative. On the other hand, we interpret (80) as the differential equation sampled at mesh point \(t_{n+1}\), since we have \(u^{n+1}\) on the right-hand side. In this case, the difference approximation on the left-hand side is a backward difference,

\[ v'(t_{n+1}) \approx \frac{v^{n+1}-v^n}{\Delta t}. \]

Figure Illustration of a backward difference approximation to the derivative illustrates the backward difference. The error in the backward difference is proportional to \(\Delta t\), the same as for the forward difference (but the proportionality constant in the error term has the opposite sign). The resulting discretization method for (80) is often referred to as a Backward Euler scheme.

To summarize, using a forward difference for the first equation and a backward difference for the second equation results in a much better method than just using forward differences in both equations.

The standard way of expressing this scheme in physics is to change the order of the equations,

\begin{align}
v' &= -\omega^2 u, \tag{81}\\
u' &= v, \tag{82}
\end{align}

and apply a forward difference to (81) and a backward difference to (82):

\begin{align}
v^{n+1} &= v^n - \Delta t\,\omega^2 u^n, \tag{83}\\
u^{n+1} &= u^n + \Delta t\, v^{n+1}. \tag{84}
\end{align}

That is, first the velocity \(v\) is updated and then the position \(u\), using the most recently computed velocity. There is no difference between (83)-(84) and (77)-(78) with respect to accuracy, so the order of the original differential equations does not matter. The scheme (83)-(84) goes under the names Semi-implicit Euler or Euler-Cromer.

The implementation of (83)-(84) is found in the file osc_EC.m. The core of the code goes like

```matlab
u = zeros(N_t+1, 1);
v = zeros(N_t+1, 1);

% Initial condition
u(1) = 2;
v(1) = 0;

% Step equations forward in time
for n = 1:N_t
    v(n+1) = v(n) - dt*omega^2*u(n);
    u(n+1) = u(n) + dt*v(n+1);
end
```
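A Python sketch contrasting the two loops over 40 periods with only 20 steps per period (max_abs_u is a hypothetical helper). Forward Euler's amplitude explodes, while Euler-Cromer keeps \(|u|\) close to \(X_0\); the only difference is whether the position update uses the old or the new velocity.

```python
import math


def max_abs_u(scheme, omega, dt, n_steps, X_0=2.0):
    """Largest |u| over n_steps of Forward Euler ('FE') or Euler-Cromer ('EC')."""
    u, v = X_0, 0.0
    largest = abs(u)
    for _ in range(n_steps):
        if scheme == "FE":
            u, v = u + dt * v, v - dt * omega**2 * u
        elif scheme == "EC":
            v = v - dt * omega**2 * u   # velocity first, as in osc_EC.m ...
            u = u + dt * v              # ... then position with the new v
        else:
            raise ValueError(f"unknown scheme {scheme!r}")
        largest = max(largest, abs(u))
    return largest


omega, X_0 = 2.0, 2.0
dt = (2 * math.pi / omega) / 20   # 20 steps per period
n_steps = 40 * 20                 # 40 periods
fe = max_abs_u("FE", omega, dt, n_steps, X_0)
ec = max_abs_u("EC", omega, dt, n_steps, X_0)
print(f"max |u|: Forward Euler {fe:.3g}, Euler-Cromer {ec:.4f}")
```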

The 2nd-order Runge-Kutta method (or Heun’s method)¶

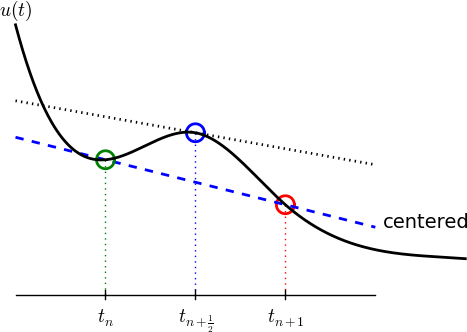

A very popular method for solving scalar and vector ODEs of first order is the 2nd-order Runge-Kutta method (RK2), also known as Heun’s method. The idea, first thinking of a scalar ODE, is to form a centered difference approximation to the derivative between two time points:

\[ u'(t_{n+\frac{1}{2}}) \approx \frac{u^{n+1}-u^n}{\Delta t}. \]

The centered difference formula is visualized in Figure Illustration of a centered difference approximation to the derivative. The error in the centered difference is proportional to \(\Delta t^2\), one order higher than the forward and backward differences, which means that if we halve \(\Delta t\), the error is more effectively reduced in the centered difference since it is reduced by a factor of four rather than two.
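The different error behaviors are easy to observe. The Python sketch below differentiates \(e^t\) at \(t=1\) (exact value \(e\)) with forward, backward, and centered differences for \(h=0.1\) and \(h=0.05\). Halving \(h\) should roughly halve the first two errors, which also have opposite signs, and roughly quarter the centered one. The function names are ours.

```python
import math


def forward(g, t, h):
    return (g(t + h) - g(t)) / h               # error proportional to h


def backward(g, t, h):
    return (g(t) - g(t - h)) / h               # error proportional to h


def centered(g, t, h):
    return (g(t + h / 2) - g(t - h / 2)) / h   # error proportional to h**2


t0, exact = 1.0, math.exp(1.0)   # g = exp, so g'(1) = e
errors = {}
for name, approx in (("forward", forward), ("backward", backward),
                     ("centered", centered)):
    e1 = approx(math.exp, t0, 0.1) - exact
    e2 = approx(math.exp, t0, 0.05) - exact
    errors[name] = (e1, e2)
    print(f"{name:8s} error(h)={e1:+.2e} error(h/2)={e2:+.2e} ratio={e1/e2:.2f}")
```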

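The problem with such a centered scheme for the general ODE \(u'=f(u,t)\) is that we get

\[ \frac{u^{n+1}-u^n}{\Delta t} = f(u^{n+\frac{1}{2}}, t_{n+\frac{1}{2}}), \]

which leads to difficulties since we do not know what \(u^{n+\frac{1}{2}}\) is. However, we can approximate the value of \(f\) between two time levels by the arithmetic average of the values at \(t_n\) and \(t_{n+1}\):

\[ f(u^{n+\frac{1}{2}}, t_{n+\frac{1}{2}}) \approx \frac{1}{2}\left[f(u^n, t_n) + f(u^{n+1}, t_{n+1})\right]. \]

This results in

\[ u^{n+1} = u^n + \frac{\Delta t}{2}\left[f(u^n, t_n) + f(u^{n+1}, t_{n+1})\right], \]

which in general is a nonlinear algebraic equation for \(u^{n+1}\) if \(f(u,t)\) is not a linear function of \(u\). To deal with the unknown term \(f(u^{n+1}, t_{n+1})\), without solving nonlinear equations, we can approximate or predict \(u^{n+1}\) using a Forward Euler step:

\[ u^* = u^n + \Delta t\, f(u^n, t_n). \]

This reasoning gives rise to the method

\begin{align*}
u^* &= u^n + \Delta t\, f(u^n, t_n),\\
u^{n+1} &= u^n + \frac{\Delta t}{2}\left[f(u^n, t_n) + f(u^*, t_{n+1})\right].
\end{align*}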

The scheme applies to both scalar and vector ODEs.
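A Python sketch of the predictor-corrector steps above for a general \(u'=f(u,t)\), with u stored as a list of components so the same function handles scalar and vector ODEs. The name heun and the list-based storage are our own choices, not the interface of osc_Heun.m.

```python
import math


def heun(f, U_0, dt, T):
    """Heun's method (RK2) for u' = f(u, t), u a list of components."""
    N_t = int(round(T / dt))   # assumes T is (close to) a multiple of dt
    t = [n * dt for n in range(N_t + 1)]
    u = [list(U_0)]
    for n in range(N_t):
        f_n = f(u[n], t[n])
        u_star = [ui + dt * fi for ui, fi in zip(u[n], f_n)]   # Forward Euler predictor
        f_star = f(u_star, t[n + 1])
        u.append([ui + 0.5 * dt * (a + b)                      # average of the two slopes
                  for ui, a, b in zip(u[n], f_n, f_star)])
    return u, t


# Scalar ODE u' = u, u(0) = 1 on [0, 1]
u_s, _ = heun(lambda u, t: [u[0]], [1.0], dt=0.01, T=1.0)
print(u_s[-1][0], math.exp(1))

# Vector ODE for the oscillator: u = [position, velocity], f = (v, -omega^2 u)
omega = 2.0
u_osc, t_osc = heun(lambda u, t: [u[1], -omega**2 * u[0]], [2.0, 0.0],
                    dt=math.pi / 20, T=math.pi)   # one period, 20 steps
print(u_osc[-1])
```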

For an oscillating system with \(f=(v,-\omega^2u)\) the file osc_Heun.m implements this method. The demo script demo_osc_Heun.m runs the simulation for 10 periods with 20 time steps per period. The corresponding numerical and exact solutions are shown in Figure Simulation of 10 periods of oscillations by Heun’s method. We see that the amplitude grows, but not as much as for the Forward Euler method. However, the Euler-Cromer method is much better!
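The ranking can be reproduced with a small Python experiment using the same setup (10 periods, 20 steps per period, \(\omega=2\), \(X_0=2\)). The helper below is our own sketch that implements all three schemes for the oscillator and records the largest \(|u|\) seen.

```python
import math


def max_abs_u(scheme, omega, dt, n_steps, X_0=2.0):
    """Largest |u| for Forward Euler, Heun, or Euler-Cromer on the oscillator."""
    u, v = X_0, 0.0
    largest = abs(u)
    for _ in range(n_steps):
        if scheme == "FE":
            u, v = u + dt * v, v - dt * omega**2 * u
        elif scheme == "Heun":
            du1, dv1 = v, -omega**2 * u              # slope at t_n
            u_s, v_s = u + dt * du1, v + dt * dv1    # Forward Euler predictor
            du2, dv2 = v_s, -omega**2 * u_s          # slope at predicted point
            u, v = u + 0.5 * dt * (du1 + du2), v + 0.5 * dt * (dv1 + dv2)
        elif scheme == "EC":
            v = v - dt * omega**2 * u
            u = u + dt * v
        else:
            raise ValueError(f"unknown scheme {scheme!r}")
        largest = max(largest, abs(u))
    return largest


omega, X_0 = 2.0, 2.0
dt = (2 * math.pi / omega) / 20   # 20 steps per period
n_steps = 10 * 20                 # 10 periods
results = {s: max_abs_u(s, omega, dt, n_steps, X_0) for s in ("FE", "Heun", "EC")}
print(results)
```

Heun's amplitude grows by some tens of percent here, Forward Euler's by orders of magnitude, while Euler-Cromer stays within a few percent of \(X_0\).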

We should add that in problems where the Forward Euler method gives satisfactory approximations, such as growth/decay problems or the SIR model, the 2nd-order Runge-Kutta method, or Heun’s method, usually works considerably better and produces greater accuracy for the same computational cost. It is therefore a very valuable method to be aware of, although it cannot compete with the Euler-Cromer scheme for oscillation problems. The derivation of the RK2/Heun scheme is also good general training in “numerical thinking”.
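One way to make "the same computational cost" concrete is to count evaluations of \(f\): Heun needs two per step, so we give it twice the time step of Forward Euler. The Python sketch below (our own helper names) solves \(u'=u\), \(u(0)=1\), up to \(t=1\), with 100 evaluations of \(f\) for each method.

```python
import math


def forward_euler_growth(dt, T):
    """Forward Euler for u' = u, u(0) = 1: one f evaluation per step."""
    u = 1.0
    for _ in range(int(round(T / dt))):
        u = u + dt * u
    return u


def heun_growth(dt, T):
    """Heun's method for u' = u, u(0) = 1: two f evaluations per step."""
    u = 1.0
    for _ in range(int(round(T / dt))):
        u_star = u + dt * u
        u = u + 0.5 * dt * (u + u_star)
    return u


exact = math.exp(1.0)
err_fe = abs(forward_euler_growth(0.01, 1.0) - exact)   # 100 f evaluations
err_heun = abs(heun_growth(0.02, 1.0) - exact)          # also 100 f evaluations
print(err_fe, err_heun)
```

At equal cost, Heun's error is here roughly two orders of magnitude smaller.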

Software for solving ODEs¶

Matlab and Octave users have a handful of functions for solving ODEs, e.g., the popular methods ode45 and ode23s. To illustrate, we may use ode45 to solve the simple problem \(u'=u\), \(u(0)=2\), on \([0,4]\), with the solution reported at 101 equally spaced time points (100 intervals):

```matlab
u0 = 2;                               % initial condition
time_points = linspace(0, 4, 101);
[t, u] = ode45(@exp_dudt, time_points, u0);
plot(t, u);
xlabel('t'); ylabel('u');
```

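Here, ode45 is called with three parameters. The first one, @exp_dudt, is a handle to a function that specifies the right-hand side of the ODE, i.e., f(u, t). Note that Matlab's ODE solvers call this function with the arguments in the order (t, u), not (u, t). In the present example, it reads

```matlab
function dudt = exp_dudt(t, u)
    dudt = u;
end
```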

The second parameter, time_points, is an array that gives the time points on the interval where we want the solution to be reported. Alternatively, this second parameter could have been given as [0 4], which just specifies the interval and leaves it to Matlab to decide where on the interval the solution is reported. The third parameter, u0, just states the initial condition.

Other ODE solvers in Matlab work in a similar fashion. Systems of ODEs can also be solved with one function call, and problem parameters can be passed on to the right-hand side function.

There is a jungle of methods for solving ODEs, and it would be nice to have easy access to implementations of a wide range of methods, especially the sophisticated and complicated adaptive methods (like ode45 and ode23s above) that adjust \(\Delta t\) automatically to obtain a prescribed accuracy. The Python package Odespy gives easy access to a lot of numerical methods for ODEs.

The simplest possible example of using Odespy is to solve the same problem that we just looked at, i.e., \(u'=u\), \(u(0)=2\), with the solution computed at 101 equally spaced time points (100 time steps) in \([0,4]\):

    import numpy as np
    import odespy

    def f(u, t):
        return u

    method = odespy.Heun   # or, e.g., odespy.ForwardEuler
    solver = method(f)
    solver.set_initial_condition(2)
    time_points = np.linspace(0, 4, 101)
    u, t = solver.solve(time_points)

In other words, you define your right-hand side function f(u, t), initialize an Odespy solver object, set the initial condition, compute a collection of time points where you want the solution, and ask for the solution. The returned arrays u and t can be plotted directly: plot(t, u).
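The workflow just described (define f, create a solver, set the initial condition, solve at given time points) can be mimicked without Odespy. The class below, MiniHeun, is a hypothetical stand-in written only to show the pattern. It is not Odespy's Heun class, it handles scalar ODEs only, and plain lists replace NumPy arrays so that the sketch has no dependencies.

```python
import math


class MiniHeun:
    """Hypothetical stand-in mimicking the solver workflow (not Odespy)."""

    def __init__(self, f):
        self.f = f

    def set_initial_condition(self, U0):
        self.U0 = U0

    def solve(self, time_points):
        t = list(time_points)
        u = [self.U0]
        for n in range(len(t) - 1):
            dt = t[n+1] - t[n]
            f_n = self.f(u[n], t[n])
            u_star = u[n] + dt*f_n                                  # predictor
            u.append(u[n] + 0.5*dt*(f_n + self.f(u_star, t[n+1])))  # corrector
        return u, t


def f(u, t):
    return u


solver = MiniHeun(f)
solver.set_initial_condition(2)
time_points = [4*i/100 for i in range(101)]   # like linspace(0, 4, 101)
u, t = solver.solve(time_points)
```

Because solve simply walks through the given time points, the user fully controls where the solution is computed, which is also how the non-adaptive Odespy methods treat time_points.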

Warning

Note that Odespy must be operated from Python, so you need to learn some basic Python to make use of this software. The type of Python programming you need to learn has a syntax very close to that of Matlab.

A nice feature of Odespy is that problem parameters can be arguments to the user’s f(u, t) function. For example, if our ODE problem is \(u'=-au+b\), with two problem parameters \(a\) and \(b\), we may write our f function as

    def f(u, t, a, b):
        return -a*u + b

The extra, problem-dependent arguments a and b can be transferred to this function if we collect their values in a list or tuple when creating the Odespy solver and use the f_args argument:

    a = 2
    b = 1
    solver = method(f, f_args=[a, b])

This is a good feature because problem parameters would otherwise have to be global variables; now they can be arguments in our right-hand side function in a natural way. Exercise 58: Use Odespy to solve a simple ODE asks you to make a complete implementation of this problem and plot the solution.
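One plausible way an f_args mechanism can work is simply to forward the extra values as f(u, t, *f_args). The sketch below does this in a hypothetical forward_euler function (not Odespy's code) for \(u'=-au+b\) with \(a=2\), \(b=1\), and the assumed initial condition \(u(0)=0\). It also shows that binding a and b in a lambda (a closure) gives identical numbers. The exact solution \(u(t)=b/a+(u(0)-b/a)e^{-at}\) serves as a check.

```python
import math


def forward_euler(f, U0, time_points, f_args=()):
    """Forward Euler; extra problem parameters are passed as f(u, t, *f_args)."""
    u = [U0]
    for n in range(len(time_points) - 1):
        dt = time_points[n+1] - time_points[n]
        u.append(u[n] + dt*f(u[n], time_points[n], *f_args))
    return u


def f(u, t, a, b):
    return -a*u + b


a, b = 2, 1
time_points = [0.01*i for i in range(501)]   # t in [0, 5]
u = forward_euler(f, 0.0, time_points, f_args=[a, b])

# The same result without an f_args mechanism: bind a and b in a closure
u_closure = forward_euler(lambda u, t: f(u, t, a, b), 0.0, time_points)

exact = b/a + (0.0 - b/a)*math.exp(-a*time_points[-1])
```

Both variants avoid global variables; f_args keeps the parameters visible at the solver call, while the closure keeps the solver interface minimal.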

Using Odespy to solve oscillation ODEs like \(u''+\omega^2u=0\), reformulated as a system \(u'=v\) and \(v'=-\omega^2u\), is done as follows. We specify a given number of time steps per period and compute the associated time step and the end time of the simulation (T), given a number of periods to simulate:

    import odespy
    from numpy import pi, linspace

    # Define the ODE system
    # u' = v
    # v' = -omega**2*u
    def f(sol, t, omega=2):
        u, v = sol
        return [v, -omega**2*u]

    # Set and compute problem dependent parameters
    omega = 2
    X_0 = 1
    number_of_periods = 40
    time_intervals_per_period = 20
    P = 2*pi/omega                      # length of one period
    dt = P/time_intervals_per_period    # time step
    T = number_of_periods*P             # final simulation time

    # Create Odespy solver object
    odespy_method = odespy.RK2
    solver = odespy_method(f, f_args=[omega])

    # The initial condition for the system is collected in a list
    solver.set_initial_condition([X_0, 0])

    # Compute the desired time points where we want the solution
    N_t = int(round(T/dt))              # no of time intervals
    time_points = linspace(0, T, N_t+1)

    # Solve the ODE problem
    sol, t = solver.solve(time_points)

    # Note: sol contains both displacement and velocity
    # Extract original variables
    u = sol[:,0]
    v = sol[:,1]

The last two statements are important since our two functions \(u\) and \(v\) in the ODE system are packed together in one array inside the Odespy solver. The solution of the ODE system is returned as a two-dimensional array where the first column (sol[:,0]) stores \(u\) and the second (sol[:,1]) stores \(v\). Plotting \(u\) and \(v\) is a matter of running plot(t, u, t, v).
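The arithmetic for P, dt, T, and N_t, and the packing of \(u\) and \(v\) into rows, can be checked with the dependency-free sketch below. The heun_system function is a hypothetical stand-in for odespy.RK2, taken here to be Heun's method since the text treats the two as equivalent. It returns a list of rows [u, v], so the column extraction is written as list comprehensions instead of NumPy's sol[:,0] and sol[:,1].

```python
import math


def f(sol, t, omega=2):
    u, v = sol
    return [v, -omega**2*u]


def heun_system(f, U0, time_points, f_args=()):
    """Heun's method for a system; returns one row [u, v] per time point."""
    rows = [list(U0)]
    for n in range(len(time_points) - 1):
        t, dt = time_points[n], time_points[n+1] - time_points[n]
        y = rows[-1]
        f_n = f(y, t, *f_args)
        y_star = [yi + dt*fi for yi, fi in zip(y, f_n)]          # predictor
        f_star = f(y_star, t + dt, *f_args)
        rows.append([yi + 0.5*dt*(a + b) for yi, a, b in zip(y, f_n, f_star)])
    return rows


omega, X_0 = 2, 1
number_of_periods, time_intervals_per_period = 40, 20
P = 2*math.pi/omega                    # length of one period
dt = P/time_intervals_per_period       # time step
T = number_of_periods*P                # final simulation time
N_t = int(round(T/dt))                 # number of time intervals
time_points = [n*dt for n in range(N_t + 1)]

sol = heun_system(f, [X_0, 0], time_points, f_args=[omega])
# Column extraction: the pure-Python counterpart of sol[:,0] and sol[:,1]
u = [row[0] for row in sol]
v = [row[1] for row in sol]
```

With 40 periods and 20 intervals per period, N_t is 800, and by the end of the run Heun's amplitude has grown well beyond X_0.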

Remark

In the right-hand side function we write f(sol, t, omega) instead of f(u, t, omega) to indicate that the solution sent to f is a solution at time t where the values of \(u\) and \(v\) are packed together: sol = [u, v]. We might well use u as argument:

    def f(u, t, omega=2):
        u, v = u
        return [v, -omega**2*u]

This just means that we redefine the name u inside the function to mean the solution at time t for the first component of the ODE system.

To switch to another numerical method, just replace RK2 with the proper name of the desired method. Typing pydoc odespy in the terminal window brings up a list of all the implemented methods.

This very simple way of choosing a method suggests an obvious extension of the code above: we can define a list of methods, run all methods, and compare their \(u\) curves in a plot.

As Odespy also contains the Euler-Cromer scheme, we rewrite the system with \(v'=-\omega^2u\) as the first ODE and \(u'=v\) as the second ODE, because this is the standard choice when using the Euler-Cromer method (also in Odespy):

    def f(u, t, omega=2):
        v, u = u
        return [-omega**2*u, v]

This change of equations also affects the initial condition: the first component is zero and the second is X_0, so we need to pass the list [0, X_0] to solver.set_initial_condition.
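Why the ordering [v, u] matters can be seen in a small sketch of the Euler-Cromer update for a two-component system: the first component (v) is advanced with old values, and the second (u) is then advanced using the new first component. The euler_cromer function below is our own illustration, not Odespy's EulerCromer implementation, and it assumes exactly two components in that order.

```python
import math


def f(sol, t, omega=2):
    v, u = sol                  # Euler-Cromer ordering: [v, u]
    return [-omega**2*u, v]


def euler_cromer(f, U0, dt, N_t, f_args=()):
    """Euler-Cromer for a two-component system ordered as [v, u]."""
    v, u = U0
    sol = [[v, u]]
    for n in range(N_t):
        t = n*dt
        v = v + dt*f([v, u], t, *f_args)[0]   # new v from the old u
        u = u + dt*f([v, u], t, *f_args)[1]   # new u from the new v
        sol.append([v, u])
    return sol


omega, X_0 = 2, 1.0
steps_per_period, periods = 20, 40
dt = (2*math.pi/omega)/steps_per_period
sol = euler_cromer(f, [0, X_0], dt, periods*steps_per_period, f_args=[omega])
u = [row[1] for row in sol]
amp_last = max(abs(x) for x in u[-steps_per_period:])   # exact value: X_0
```

Over 40 periods with 20 steps per period the computed amplitude stays close to X_0 (at most slightly above it), in contrast to the steady growth of Heun's method.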

The code osc_odespy.py contains the details:

    import odespy
    from numpy import pi, linspace, cos
    from matplotlib.pyplot import (plot, xlabel, legend, axis,
                                   title, savefig, show)

    def f(u, t, omega=2):
        # Euler-Cromer ordering: first component v, second component u
        v, u = u
        return [-omega**2*u, v]

    def compare(odespy_methods,
                omega,
                X_0,
                number_of_periods,
                time_intervals_per_period=20):

        P = 2*pi/omega                    # length of one period
        dt = P/time_intervals_per_period
        T = number_of_periods*P

        # If odespy_methods is not a list, but just the name of
        # a single Odespy solver, we wrap that name in a list
        # so we always have odespy_methods as a list
        if not isinstance(odespy_methods, list):
            odespy_methods = [odespy_methods]

        # Make a list of solver objects
        solvers = [method(f, f_args=[omega]) for method in
                   odespy_methods]
        for solver in solvers:
            solver.set_initial_condition([0, X_0])

        # Compute the time points where we want the solution
        dt = float(dt)                    # avoid integer division
        N_t = int(round(T/dt))
        time_points = linspace(0, N_t*dt, N_t+1)

        legends = []
        for solver in solvers:
            sol, t = solver.solve(time_points)
            v = sol[:,0]
            u = sol[:,1]

            # Plot only the last p periods
            p = 6
            m = p*time_intervals_per_period   # no time steps to plot
            # matplotlib adds each curve to the current axes (no hold needed)
            plot(t[-m:], u[-m:])
            legends.append(solver.name())
        xlabel('t')

        # Plot exact solution too
        plot(t[-m:], X_0*cos(omega*t)[-m:], 'k--')
        legends.append('exact')
        legend(legends, loc='lower left')
        axis([t[-m], t[-1], -2*X_0, 2*X_0])
        title('Simulation of %d periods with %d intervals per period'
              % (number_of_periods, time_intervals_per_period))
        savefig('tmp.pdf'); savefig('tmp.png')
        show()

A new feature in this code is the ability to plot only the last p periods, which allows us to perform long time simulations and watch the end results without a cluttered plot with too many periods. The syntax t[-m:] extracts the last m elements of t (a negative index in Python arrays/lists counts from the end).
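A tiny example of the negative-index slicing used in compare, shown with a plain Python list (NumPy arrays slice the same way):

```python
t = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
m = 2
last_m = t[-m:]   # the last m elements: [2.0, 2.5]
last = t[-1]      # the last element: 2.5
```

One pitfall: t[-0:] is the same as t[0:], i.e., the whole list, so m must be positive for t[-m:] to select only the tail.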

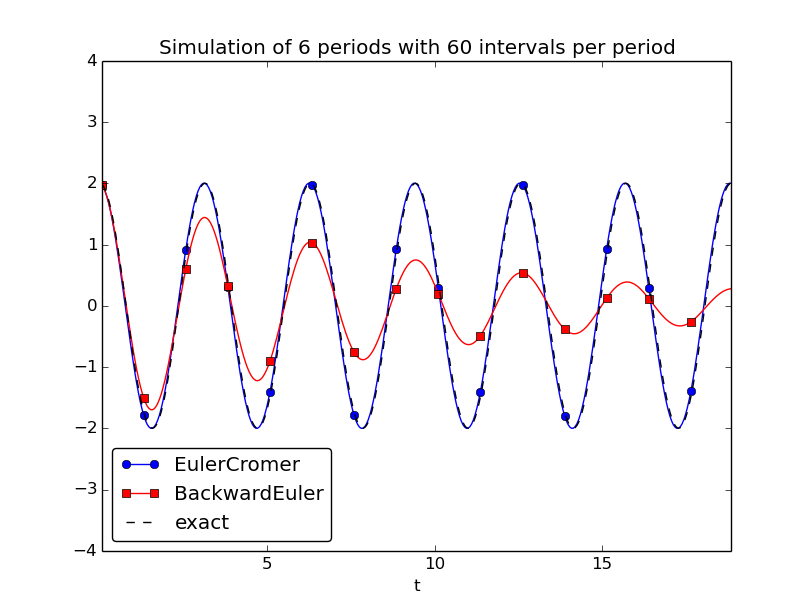

We may compare Heun’s method (or equivalently the RK2 method) with the Euler-Cromer scheme:

    compare(odespy_methods=[odespy.Heun, odespy.EulerCromer],
            omega=2, X_0=2, number_of_periods=20,
            time_intervals_per_period=20)

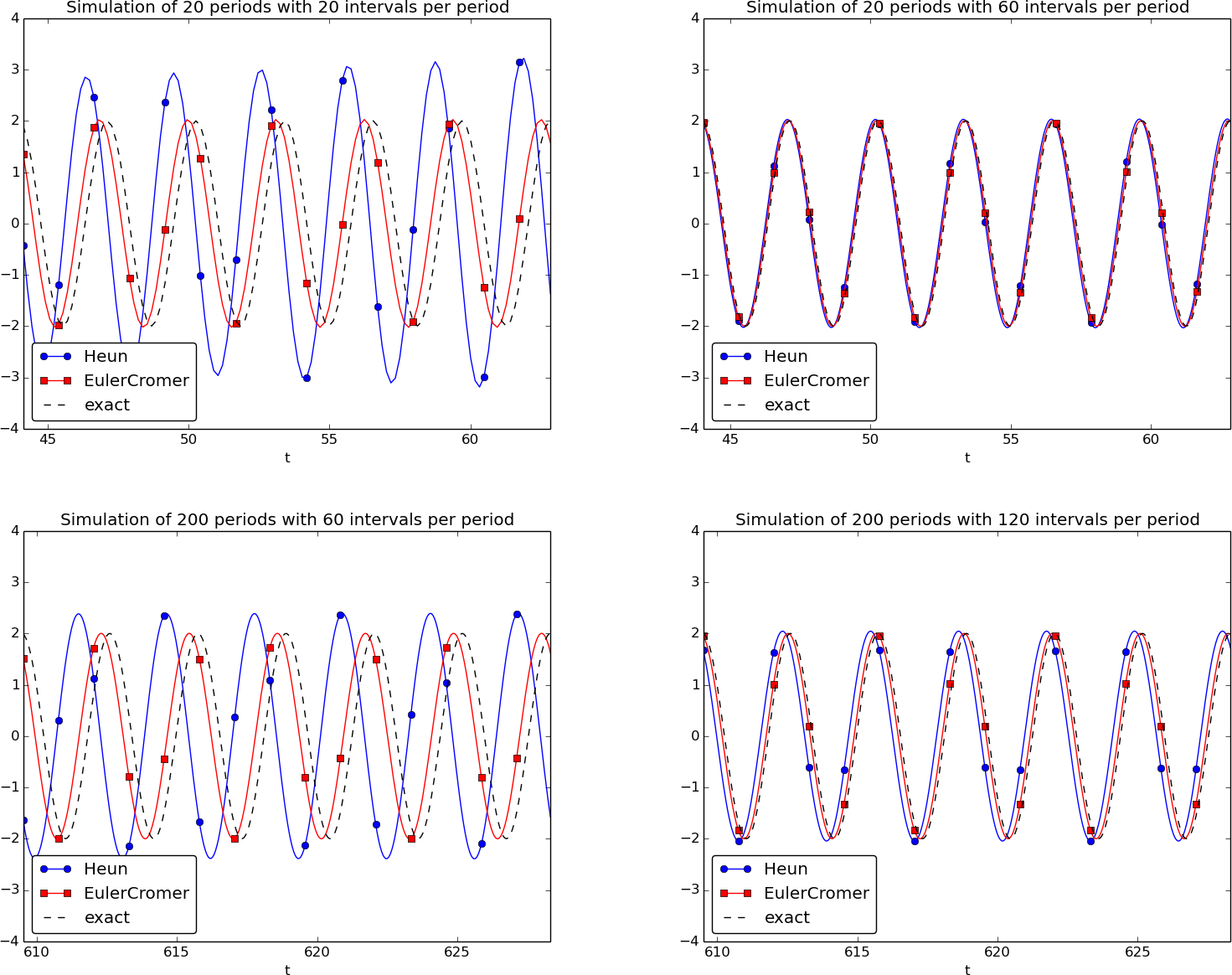

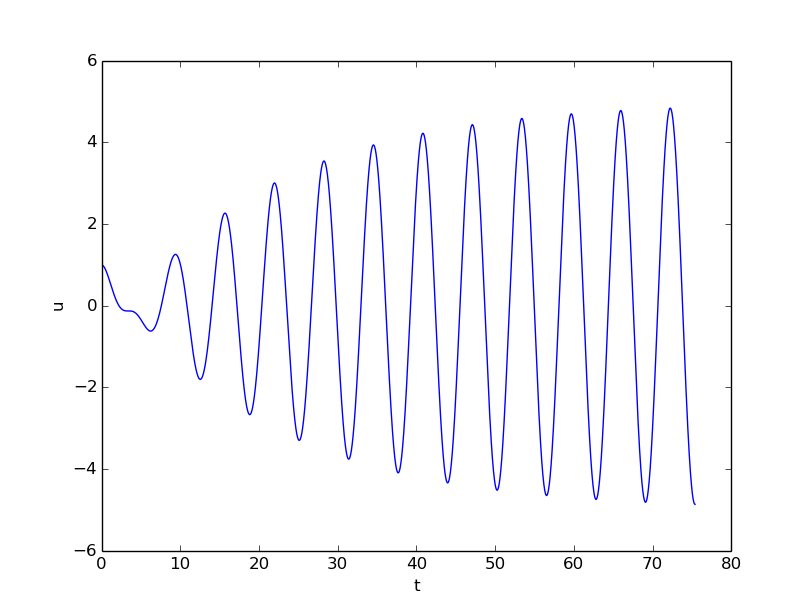

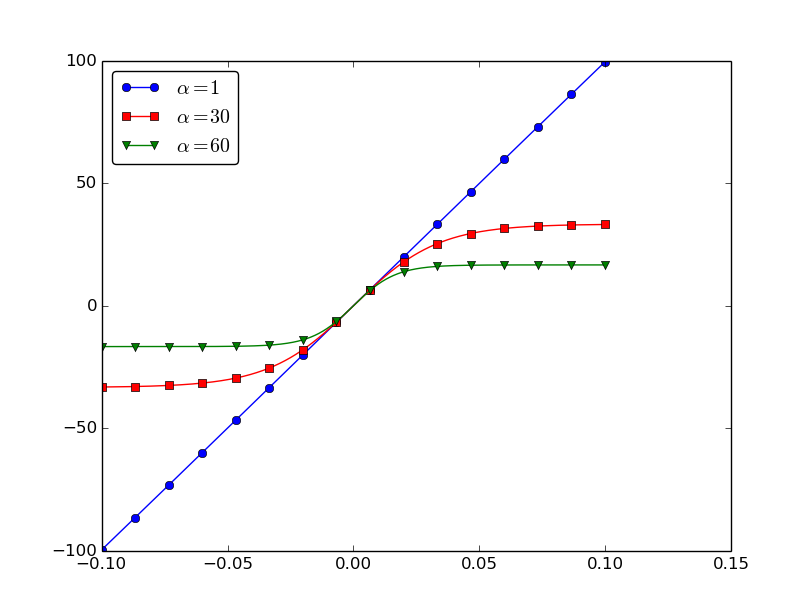

Figure Illustration of the impact of resolution (time steps per period) and length of simulation shows how Heun’s method (the blue line with small disks) has considerable error in both amplitude and phase already after 14-20 periods (upper left), but using three times as many time steps makes the curves almost equal (upper right). However, after 194-200 periods the errors have grown (lower left), but can be sufficiently reduced by halving the time step (lower right).

With all the methods in Odespy at hand, it is now easy to start exploring other methods, such as backward differences instead of the forward differences used in the Forward Euler scheme. Exercise 59: Set up a Backward Euler scheme for oscillations addresses that problem.

Odespy contains quite sophisticated adaptive methods where the user is “guaranteed” to get a solution with prescribed accuracy. There is no mathematical guarantee, but in most cases the error will not deviate significantly from the user’s tolerance that reflects the accuracy. A very popular method of this type is the Runge-Kutta-Fehlberg method, which runs a 4th-order Runge-Kutta method and uses a 5th-order Runge-Kutta method to estimate the error so that \(\Delta t\) can be adjusted to keep the error below a tolerance. Methods of this type are widely known as ode45, after the Matlab function of that name, although Matlab's ode45 actually uses a closely related 4th/5th-order pair (the Dormand-Prince pair) rather than Fehlberg's coefficients.
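The error-control idea can be sketched in a few dozen lines. The code below uses the classical Fehlberg 4(5) coefficients, advances with the 4th-order value, and takes the difference to the 5th-order value as the error estimate. The step-size rule (safety factor 0.9, change limited to a factor between 0.1 and 4, maximum-norm absolute error) and the name rkf45 are our own assumptions; Odespy's RKFehlberg and Matlab's ode45 use their own, more refined controllers.

```python
import math

# Fehlberg 4(5) coefficients: nodes C, stage coefficients A,
# weights B4 (4th-order solution) and B5 (5th-order solution)
C = [0, 1/4, 3/8, 12/13, 1, 1/2]
A = [[],
     [1/4],
     [3/32, 9/32],
     [1932/2197, -7200/2197, 7296/2197],
     [439/216, -8, 3680/513, -845/4104],
     [-8/27, 2, -3544/2565, 1859/4104, -11/40]]
B4 = [25/216, 0, 1408/2565, 2197/4104, -1/5, 0]
B5 = [16/135, 0, 6656/12825, 28561/56430, -9/50, 2/55]


def rkf45(f, U0, T, tol=1e-8, dt=0.1):
    """Adaptive Runge-Kutta-Fehlberg sketch for u' = f(u, t), u a list.

    Returns the accepted time points t_all and the solutions u_all.
    """
    t, u = 0.0, list(U0)
    t_all, u_all = [t], [u]
    d = len(u)
    while t < T:
        dt = min(dt, T - t)                     # never step past T
        k = []                                  # the six stage slopes
        for i in range(6):
            ui = [u[j] + dt*sum(A[i][m]*k[m][j] for m in range(i))
                  for j in range(d)]
            k.append(f(ui, t + C[i]*dt))
        u4 = [u[j] + dt*sum(B4[i]*k[i][j] for i in range(6)) for j in range(d)]
        u5 = [u[j] + dt*sum(B5[i]*k[i][j] for i in range(6)) for j in range(d)]
        err = max(abs(a - b) for a, b in zip(u4, u5))   # error estimate
        if err <= tol:                          # accept; advance with 4th order
            t, u = t + dt, u4
            t_all.append(t)
            u_all.append(u)
        # Rescale dt after both accepted and rejected steps
        factor = 0.9*(tol/max(err, 1e-16))**0.2
        dt *= min(4.0, max(0.1, factor))
    return t_all, u_all


# Usage: u' = u, u(0) = 1 on [0, 1]; the exact value is u(1) = e
t_all, u_all = rkf45(lambda u, t: [u[0]], [1.0], 1.0)
```

The accepted time points are returned in t_all; they are chosen by the method, not by the user, which is the same situation as with the solver.t_all array of Odespy's RKFehlberg discussed in this section.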

We can easily test the Runge-Kutta-Fehlberg method as soon as we know the corresponding Odespy name, which is RKFehlberg:

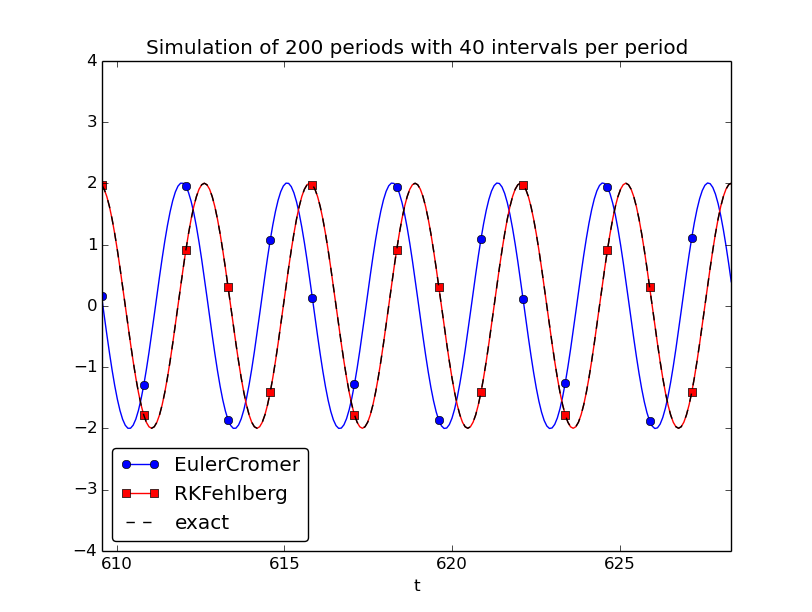

    compare(odespy_methods=[odespy.EulerCromer, odespy.RKFehlberg],
            omega=2, X_0=2, number_of_periods=200,
            time_intervals_per_period=40)

Note that the time_intervals_per_period argument refers to the time points where we want the solution. These points are also the ones used for numerical computations in the odespy.EulerCromer solver, while the odespy.RKFehlberg solver will use an unknown set of time points since the time intervals are adjusted as the method runs. One can easily look at the points actually used by the method, as these are available as an array solver.t_all (but plotting or examining the points requires modifications inside the compare function).

Figure Comparison of the Runge-Kutta-Fehlberg adaptive method against the Euler-Cromer scheme for a long time simulation (200 periods) shows a computational example where the Runge-Kutta-Fehlberg method is clearly superior to the Euler-Cromer scheme in long time simulations, but the comparison is not really fair because the Runge-Kutta-Fehlberg method applies about twice as many time steps in this computation and performs much more work per time step. It is quite a complicated task to compare two such different methods in a fair way, so that the computational work versus accuracy is reported in a scientifically sound way.

The 4th-order Runge-Kutta method

The 4th-order Runge-Kutta method (RK4) is probably the most widely used method to solve ODEs. Its power comes from high accuracy even with fairly large time steps.
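The claim of high accuracy can be quantified with a short sketch: one RK4 step evaluates f four times, and halving \(\Delta t\) should cut the error by roughly \(2^4=16\). The stage names f_hat, f_tilde, and f_bar follow the \(\hat{f}\), \(\tilde{f}\), \(\bar{f}\) notation of the derivation later in this section; the function rk4 is our own illustrative code for scalar ODEs.

```python
import math


def rk4(f, u0, T, N):
    """Classical 4th-order Runge-Kutta for a scalar ODE u' = f(u, t)."""
    dt, u = T/N, u0
    for n in range(N):
        t = n*dt
        f_n = f(u, t)
        f_hat = f(u + 0.5*dt*f_n, t + 0.5*dt)
        f_tilde = f(u + 0.5*dt*f_hat, t + 0.5*dt)
        f_bar = f(u + dt*f_tilde, t + dt)
        u = u + dt/6*(f_n + 2*f_hat + 2*f_tilde + f_bar)
    return u


def f(u, t):
    return u          # u' = u, u(0) = 1, exact u(1) = e


errors = [abs(rk4(f, 1.0, 1.0, N) - math.e) for N in (10, 20)]
ratio = errors[0]/errors[1]   # roughly 2**4 = 16 for a 4th-order method
```

Already with only 10 steps on \([0,1]\) the error is of order \(10^{-6}\), which illustrates why fairly large time steps are acceptable.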

The algorithm

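We first just state the four-stage algorithm:

\[ u^{n+1} = u^n + \frac{\Delta t}{6}\left(f^n + 2\hat{f}^{n+\frac{1}{2}} + 2\tilde{f}^{n+\frac{1}{2}} + \bar{f}^{n+1}\right), \]

where \(f^n = f(u^n, t_n)\) and

\[ \hat{f}^{n+\frac{1}{2}} = f\left(u^n + \tfrac{1}{2}\Delta t\, f^n,\ t_{n+\frac{1}{2}}\right), \]

\[ \tilde{f}^{n+\frac{1}{2}} = f\left(u^n + \tfrac{1}{2}\Delta t\, \hat{f}^{n+\frac{1}{2}},\ t_{n+\frac{1}{2}}\right), \]

\[ \bar{f}^{n+1} = f\left(u^n + \Delta t\, \tilde{f}^{n+\frac{1}{2}},\ t_{n+1}\right). \]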

Application

We can run the same simulation as in Figures Simulation of an oscillating system, Adjusted method: first three periods (left) and period 36-40 (right), and Simulation of 10 periods of oscillations by Heun’s method, for 40 periods. The 10 last periods are shown in Figure The last 10 of 40 periods of oscillations by the 4th-order Runge-Kutta method. The results look as impressive as those of the Euler-Cromer method.
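The oscillator experiment can be repeated with the sketch below, a vector version of RK4 in plain Python. It assumes \(\omega=2\), \(X_0=1\), 40 periods, and 20 steps per period (these values are our assumption, not confirmed to match the figures), and measures the largest deviation from \(X_0\cos\omega t\) over the last 10 periods. The names rk4_system and axpy are hypothetical helpers.

```python
import math


def rk4_system(f, U0, dt, N_t):
    """4th-order Runge-Kutta for u' = f(u, t), u a list of floats."""
    def axpy(u, a, k):                      # componentwise u + a*k
        return [ui + a*ki for ui, ki in zip(u, k)]

    u = list(U0)
    sol = [u]
    for n in range(N_t):
        t = n*dt
        f_n = f(u, t)
        f_hat = f(axpy(u, 0.5*dt, f_n), t + 0.5*dt)
        f_tilde = f(axpy(u, 0.5*dt, f_hat), t + 0.5*dt)
        f_bar = f(axpy(u, dt, f_tilde), t + dt)
        u = [ui + dt/6*(a + 2*b + 2*c + d)
             for ui, a, b, c, d in zip(u, f_n, f_hat, f_tilde, f_bar)]
        sol.append(u)
    return sol


omega, X_0 = 2.0, 1.0
steps_per_period, periods = 20, 40
dt = (2*math.pi/omega)/steps_per_period


def f(s, t):
    u, v = s                                # s = [u, v]
    return [v, -omega**2*u]


sol = rk4_system(f, [X_0, 0.0], dt, periods*steps_per_period)
u = [row[0] for row in sol]
t = [n*dt for n in range(len(u))]
m = 10*steps_per_period                     # last 10 periods
max_err = max(abs(ui - X_0*math.cos(omega*ti))
              for ui, ti in zip(u[-m:], t[-m:]))
```

The deviation stays small, a few percent of the amplitude at most, and comes mostly from a slight phase lag accumulated over the 800 steps.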

Implementation

The stages in the 4th-order Runge-Kutta method can easily be implemented as a modification of the osc_Heun.m code. Alternatively, one can use the osc_odespy.py code by just providing the argument odespy_methods=[odespy.RK4] to the compare function.

Derivation

The derivation of the 4th-order Runge-Kutta method can be presented in a pedagogical way that brings many fundamental elements of numerical discretization techniques together and that illustrates many aspects of “numerical thinking” when constructing approximate solution methods.

We start with integrating the general ODE \(u'=f(u,t)\) over a time step, from \(t_n\) to \(t_{n+1}\),

\[ u(t_{n+1}) - u(t_n) = \int_{t_n}^{t_{n+1}} f(u(t),t)\,dt. \]

The goal of the computation is \(u(t_{n+1})\) (\(u^{n+1}\)), while \(u(t_n)\) (\(u^n\)) is the most recently known value of \(u\). The challenge with the integral is that the integrand involves the unknown \(u\) between \(t_n\) and \(t_{n+1}\).

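The integral can be approximated by the famous Simpson’s rule:

\[ \int_{t_n}^{t_{n+1}} f(u(t),t)\,dt \approx \frac{\Delta t}{6}\left(f^n + 4f^{n+\frac{1}{2}} + f^{n+1}\right). \]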

The problem with this formula is that we do not know \(f^{n+\frac{1}{2}}=f(u^{n+\frac{1}{2}},t_{n+\frac{1}{2}})\) and \(f^{n+1}=f(u^{n+1},t_{n+1})\), as only \(u^n\) is available and only \(f^n\) can then readily be computed.
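When f depends on t only, all the values in Simpson's rule are computable, and the rule's 4th-order accuracy is easy to observe. The sketch below is our own composite-rule code; it integrates \(e^t\) over \([0,1]\), and doubling the number of intervals should reduce the error by about 16. The difficulty discussed in the text arises only because, in an ODE, f also depends on the unknown u.

```python
import math


def simpson(g, a, b, N):
    """Composite Simpson's rule: N intervals, each using its end points
    and its midpoint with weights h/6, 4h/6, h/6."""
    h = (b - a)/N
    total = 0.0
    for n in range(N):
        t_n = a + n*h
        total += h/6*(g(t_n) + 4*g(t_n + 0.5*h) + g(t_n + h))
    return total


exact = math.e - 1                       # integral of exp(t) over [0, 1]
errors = [abs(simpson(math.exp, 0.0, 1.0, N) - exact) for N in (4, 8)]
ratio = errors[0]/errors[1]              # about 2**4 = 16
```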

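To proceed, the idea is to use various approximations for \(f^{n+\frac{1}{2}}\) and \(f^{n+1}\) based on using well-known schemes for the ODE in the intervals \([t_n,t_{n+\frac{1}{2}}]\) and \([t_n, t_{n+1}]\). Let us split the integral into four terms:

\[ u^{n+1} = u^n + \frac{\Delta t}{6}\left(f^n + 2\hat{f}^{n+\frac{1}{2}} + 2\tilde{f}^{n+\frac{1}{2}} + \bar{f}^{n+1}\right), \]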

where \(\hat{f}^{n+\frac{1}{2}}\), \(\tilde{f}^{n+\frac{1}{2}}\), and \(\bar{f}^{n+1}\) are approximations to \(f^{n+\frac{1}{2}}\) and \(f^{n+1}\) that can utilize already computed quantities. For \(\hat{f}^{n+\frac{1}{2}}\) we can simply apply an approximation to \(u^{n+\frac{1}{2}}\) based on a Forward Euler step of size \(\frac{1}{2}\Delta t\):

\[ \hat{f}^{n+\frac{1}{2}} = f\left(u^n + \tfrac{1}{2}\Delta t\, f^n,\ t_{n+\frac{1}{2}}\right). \]

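This formula provides a prediction of \(f^{n+\frac{1}{2}}\), so for \(\tilde{f}^{n+\frac{1}{2}}\) we can try a Backward Euler method to approximate \(u^{n+\frac{1}{2}}\):

\[ \tilde{f}^{n+\frac{1}{2}} = f\left(u^n + \tfrac{1}{2}\Delta t\, \hat{f}^{n+\frac{1}{2}},\ t_{n+\frac{1}{2}}\right). \]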

With \(\tilde{f}^{n+\frac{1}{2}}\) as an approximation to \(f^{n+\frac{1}{2}}\), we can for the final term \(\bar{f}^{n+1}\) use a midpoint method (or central difference, also called a Crank-Nicolson method) to approximate \(u^{n+1}\):

\[ \bar{f}^{n+1} = f\left(u^n + \Delta t\, \tilde{f}^{n+\frac{1}{2}},\ t_{n+1}\right). \]