Analysis of the difference equations

Properties of the solution of the wave equation

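The wave equation

\[ \frac{\partial^2 u}{\partial t^2} = c^2\frac{\partial^2 u}{\partial x^2} \]

has solutions of the form

\[ u(x,t) = g_R(x-ct) + g_L(x+ct), \tag{75} \]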

for any functions \(g_R\) and \(g_L\) sufficiently smooth to be differentiated twice. The result follows from inserting (75) in the wave equation. A function of the form \(g_R(x-ct)\) represents a signal moving to the right in time with constant velocity \(c\). This feature can be explained as follows. At time \(t=0\) the signal looks like \(g_R(x)\). Introducing a moving \(x\) axis with coordinates \(\xi = x-ct\), we see the function \(g_R(\xi)\) is “at rest” in the \(\xi\) coordinate system, and the shape is always the same. Say the \(g_R(\xi)\) function has a peak at \(\xi=0\). This peak is located at \(x=ct\), which means that it moves with the velocity \(dx/dt=c\) in the \(x\) coordinate system. Similarly, \(g_L(x+ct)\) is a function initially with shape \(g_L(x)\) that moves in the negative \(x\) direction with constant velocity \(c\) (introduce \(\xi=x+ct\), look at the point \(\xi=0\), \(x=-ct\), which has velocity \(dx/dt=-c\)).

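With the particular initial conditions

\[ u(x,0) = I(x),\quad \frac{\partial}{\partial t}u(x,0) = 0, \]

we get, with \(u\) as in (75),

\[ g_R(x) + g_L(x) = I(x),\quad -cg_R'(x) + cg_L'(x) = 0, \]

which have the solution \(g_R=g_L=I/2\) (up to an additive constant that cancels in \(u\)), and consequently

\[ u(x,t) = \frac{1}{2} I(x-ct) + \frac{1}{2} I(x+ct). \tag{76} \]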

The interpretation of (76) is that the initial shape of \(u\) is split into two parts, each with the same shape as \(I\) but half of the initial amplitude. One part is traveling to the left and the other one to the right.

Splitting of the initial profile into two waves.

The solution has two important physical features: constant amplitude of the left and right wave, and constant velocity of these two waves. It turns out that the numerical solution will also preserve the constant amplitude, but the velocity depends on the mesh parameters \(\Delta t\) and \(\Delta x\).

The solution (76) will be influenced by boundary conditions when the parts \(\frac{1}{2} I(x-ct)\) and \(\frac{1}{2} I(x+ct)\) hit the boundaries and get, e.g., reflected back into the domain. However, when \(I(x)\) is nonzero only in a small part in the middle of the spatial domain \([0,L]\), which means that the boundaries are placed far away from the initial disturbance of \(u\), the solution (76) is very clearly observed in a simulation.
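As a small illustration of (76) on an effectively unbounded domain, the sketch below evaluates the exact solution for a narrow Gaussian initial profile centered at \(x_0=5\) in \([0,10]\) and locates the two peaks. The profile, its width, \(c\), and \(t\) are arbitrary choices made here for illustration. If (76) holds, the peaks should sit at \(x_0 \mp ct\) with half the initial height.

```python
import numpy as np

def I(x, x0=5.0, sigma=0.2):
    """Narrow Gaussian initial profile (illustrative choice)."""
    return np.exp(-0.5*((x - x0)/sigma)**2)

def u_exact(x, t, c=1.0):
    """Exact solution (76) for u(x,0) = I(x), u_t(x,0) = 0."""
    return 0.5*I(x - c*t) + 0.5*I(x + c*t)

x = np.linspace(0, 10, 10001)        # fine grid for locating the peaks
c, t = 1.0, 2.0
u = u_exact(x, t, c)

# One peak in each half of the domain
left, right = x < 5.0, x >= 5.0
x_peak_left = x[left][np.argmax(u[left])]
x_peak_right = x[right][np.argmax(u[right])]
print(x_peak_left, x_peak_right)     # near x0 - c*t = 3 and x0 + c*t = 7
print(u.max())                       # near 0.5, half the initial amplitude
```

The boundaries play no role here, since the profile is far from \(x=0\) and \(x=10\) at the chosen time.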

A useful representation of solutions of wave equations is a linear combination of sine and/or cosine waves. Such a sum of waves is a solution if the governing PDE is linear and each sine or cosine wave fulfills the equation. To ease analytical calculations by hand, we shall work with complex exponential functions instead of real-valued sine or cosine functions. The real part of complex expressions will typically be taken as the physically relevant quantity (whenever a physically relevant quantity is strictly needed). The idea now is to build \(I(x)\) of complex wave components \(e^{ikx}\):

\[ I(x) \approx \sum_{k\in K} b_k e^{ikx}. \tag{77} \]

Here, \(k\) is the spatial frequency (wave number) of a component, \(K\) is the set of discrete \(k\) values needed to approximate \(I(x)\) well, and \(b_k\) are constants that must be determined. We will very seldom need to compute the \(b_k\) coefficients: most of the insight we look for, and the understanding of the numerical methods we want to establish, come from investigating how the PDE and the scheme treat a single wave component \(e^{ikx}\).

Letting the number of \(k\) values in \(K\) tend to infinity makes the sum (77) converge to \(I(x)\). This sum is known as a Fourier series representation of \(I(x)\). Looking at (76), we see that the solution \(u(x,t)\), when \(I(x)\) is represented as in (77), is also built of basic complex exponential wave components of the form \(e^{ik(x\pm ct)}\) according to

\[ u(x,t) = \frac{1}{2}\sum_{k\in K} b_k e^{ik(x-ct)} + \frac{1}{2}\sum_{k\in K} b_k e^{ik(x+ct)}. \]

It is common to introduce the frequency in time \(\omega = kc\) and assume that \(u(x,t)\) is a sum of basic wave components written as \(e^{i(kx -\omega t)}\). (Observe that inserting such a wave component in the governing PDE reveals that \(\omega^2 = k^2c^2\), or \(\omega =\pm kc\), reflecting the two solutions: one (\(+kc\)) traveling to the right and the other (\(-kc\)) traveling to the left.)
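The statement that \(u\) is built of components \(e^{ik(x\pm ct)}\) can be checked numerically. The sketch below assumes a periodic initial profile on \([0, 2\pi)\) made of a few sine and cosine modes (an illustrative choice, so that a finite set \(K\) is exact), computes the coefficients \(b_k\) with NumPy's FFT, moves each component as \(e^{ik(x-ct)}\) and \(e^{ik(x+ct)}\), and compares the sum with (76).

```python
import numpy as np

L, N = 2*np.pi, 64                 # periodic domain [0, L), N points
x = np.arange(N)*L/N
c, t = 1.5, 0.7                    # illustrative velocity and time

def I(x):
    """Illustrative periodic initial profile (a few Fourier modes)."""
    return np.sin(x) + 0.5*np.cos(3*x) + 0.2*np.sin(5*x)

# b_k such that I(x_q) = sum_k b_k exp(i k x_q)
b = np.fft.fft(I(x))/N
k = 2*np.pi*np.fft.fftfreq(N, d=L/N)   # wave numbers of the components

# Half of each component moves right, half moves left
u = np.zeros(N, dtype=complex)
for b_k, k_val in zip(b, k):
    u += 0.5*b_k*(np.exp(1j*k_val*(x - c*t)) + np.exp(1j*k_val*(x + c*t)))

u_exact = 0.5*I(x - c*t) + 0.5*I(x + c*t)
print(np.max(np.abs(u.real - u_exact)))   # round-off level
print(np.max(np.abs(u.imag)))             # imaginary parts cancel
```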

More precise definition of Fourier representations

The above introduction to function representation by sine and cosine waves was quick and intuitive, but will suffice as background knowledge for the following material of single wave component analysis. However, to understand all details of how different wave components sum up to the analytical and numerical solutions, a more precise mathematical treatment is helpful and therefore summarized below.

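It is well known that periodic functions can be represented by Fourier series. A generalization of the Fourier series idea to non-periodic functions defined on the real line is the Fourier transform:

\[ I(x) = \frac{1}{2\pi}\int_{-\infty}^{\infty} A(k)e^{ikx}\,dk,\quad A(k) = \int_{-\infty}^{\infty} I(x)e^{-ikx}\,dx. \]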

The function \(A(k)\) reflects the weight of each wave component \(e^{ikx}\) in an infinite sum of such wave components. That is, \(A(k)\) reflects the frequency content in the function \(I(x)\). Fourier transforms are particularly fundamental for analyzing and understanding time-varying signals.

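The solution of the linear 1D wave PDE with the initial conditions above can be expressed as

\[ u(x,t) = \frac{1}{4\pi}\int_{-\infty}^{\infty} A(k)\left(e^{i(kx-\omega t)} + e^{i(kx+\omega t)}\right)dk,\quad \omega = kc, \]

which follows from inserting the Fourier representation of \(I\) in (76).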

In a finite difference method, we represent \(u\) by a mesh function \(u^n_q\), where \(n\) counts temporal mesh points and \(q\) counts the spatial ones (the usual counter for spatial points, \(i\), is here already used as the imaginary unit). Similarly, \(I(x)\) is approximated by the mesh function \(I_q\), \(q=0,\ldots,N_x\). On a mesh, it does not make sense to work with wave components \(e^{ikx}\) for very large \(k\), because the shortest possible sine or cosine wave that can be represented uniquely on a mesh with spacing \(\Delta x\) is the wave with wavelength \(2\Delta x\). This wave has its peaks and troughs at every other mesh point. That is, the wave jumps up and down between neighboring mesh points.
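The reason shorter waves cannot be represented is aliasing: on the mesh \(x_q = q\Delta x\), a component with wave number \(k\) is indistinguishable from one with \(k + 2\pi/\Delta x\), because their phases differ by exactly \(2\pi q\). The sketch below, with an arbitrarily chosen \(\Delta x\) and \(k\), checks this and also shows the alternating pattern of the shortest wave \(k=\pi/\Delta x\).

```python
import numpy as np

dx = 0.1                              # illustrative mesh spacing
x = np.arange(51)*dx                  # mesh points x_q = q*dx
k_nyquist = np.pi/dx                  # wavelength 2*dx

# The shortest wave alternates +1, -1: peaks and troughs at every other point
nyquist_wave = np.cos(k_nyquist*x)

# A wave shorter than 2*dx looks identical on the mesh to a longer one
k1 = 0.6*k_nyquist                    # resolved wave
k2 = k1 + 2*np.pi/dx                  # beyond the Nyquist wave number
w1 = np.exp(1j*k1*x)
w2 = np.exp(1j*k2*x)
print(nyquist_wave[:4])
print(np.max(np.abs(w1 - w2)))        # round-off level
```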

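The corresponding \(k\) value for the shortest possible wave in the mesh is \(k=2\pi /(2\Delta x) = \pi/\Delta x\). This maximum frequency is known as the Nyquist frequency. Within the range of relevant frequencies \((0,\pi/\Delta x]\), one defines the discrete Fourier transform using \(N_x+1\) discrete frequencies (assuming \(N_x\) even):

\[ A_k = \frac{1}{N_x+1}\sum_{q=0}^{N_x} I_q e^{-ik2\pi q/(N_x+1)},\quad k=-\frac{N_x}{2},\ldots,\frac{N_x}{2}, \]

\[ I_q = \sum_{k=-N_x/2}^{N_x/2} A_k e^{ik2\pi q/(N_x+1)},\quad q=0,\ldots,N_x. \]

Here the integer \(k\) labels the wave number \(2\pi k/((N_x+1)\Delta x)\), whose magnitude stays below \(\pi/\Delta x\).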

The \(A_k\) values represent the discrete Fourier transform of the \(I_q\) values, which themselves are the inverse discrete Fourier transform of the \(A_k\) values.

The discrete Fourier transform is efficiently computed by the Fast Fourier transform algorithm. For a real function \(I(x)\), the relevant Python code for computing and plotting the discrete Fourier transform appears in the example below.

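```python
import numpy as np
from numpy import sin, pi
import matplotlib.pyplot as plt

def I(x):
    return sin(2*pi*x) + 0.5*sin(4*pi*x) + 0.1*sin(6*pi*x)

# Mesh
L = 10; Nx = 100
x = np.linspace(0, L, Nx+1)
dx = L/float(Nx)

# Discrete Fourier transform (rfft returns only the nonnegative
# frequencies, which suffice for a real-valued I)
A = np.fft.rfft(I(x))
A_amplitude = np.abs(A)

# Compute the corresponding frequencies (wave numbers) k, up to about pi/dx;
# rfftfreq returns cycles per unit length, so multiply by 2*pi
freqs = 2*pi*np.fft.rfftfreq(x.size, d=dx)

plt.plot(freqs, A_amplitude)
plt.xlabel('k')
plt.ylabel('|A_k|')
plt.show()
```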
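NumPy's `np.fft.fft` computes the sums in the discrete Fourier transform defined above, but without the factor \(1/(N_x+1)\), and with the frequency index ordered as \(0,1,\ldots\) followed by the negative indices at the end of the array. The sketch below, using an arbitrary random mesh function as an assumption for testing, compares a direct \(\mathcal{O}(N^2)\) evaluation of the \(A_k\) formula with the FFT and checks that the inverse formula recovers \(I_q\).

```python
import numpy as np

Nx = 10                               # even, so k = -Nx/2, ..., Nx/2
N = Nx + 1                            # number of mesh points
q = np.arange(N)
rng = np.random.default_rng(1)
I_q = rng.standard_normal(N)          # arbitrary real mesh function

# Direct O(N^2) evaluation of the A_k formula
k = np.arange(-Nx//2, Nx//2 + 1)
A_direct = np.array([np.sum(I_q*np.exp(-1j*kk*2*np.pi*q/N)) for kk in k])/N

# FFT: divide by N and reorder so that entry 0 corresponds to k = -Nx/2
A_fft = np.fft.fftshift(np.fft.fft(I_q))/N

# Inverse transform, I_q = sum_k A_k exp(i k 2 pi q/(Nx+1))
I_back = np.array([np.sum(A_direct*np.exp(1j*k*2*np.pi*qq/N)) for qq in q])

print(np.max(np.abs(A_direct - A_fft)))
print(np.max(np.abs(I_back - I_q)))
```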

Stability

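The scheme

\[ [D_tD_t u = c^2 D_xD_x u]^n_q \tag{83} \]

for the wave equation \(u_{tt} = c^2u_{xx}\), where \([D_tD_t u]^n_q = (u^{n+1}_q - 2u^n_q + u^{n-1}_q)/\Delta t^2\) and \([D_xD_x u]^n_q = (u^n_{q+1} - 2u^n_q + u^n_{q-1})/\Delta x^2\), allows basic wave components

\[ u^n_q = e^{i(kx_q - \tilde\omega t_n)} \]

as solution, but it turns out that the frequency in time, \(\tilde\omega\), is not equal to the exact frequency \(\omega = kc\). The goal now is to find exactly what \(\tilde \omega\) is. We ask two key questions: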

- How accurate is \(\tilde\omega\) compared to \(\omega\)?

- Does such a wave component preserve its (unit) amplitude, as it should, or does it get amplified or damped in time (because of a complex \(\tilde\omega\))?

The following analysis will answer these questions. We shall continue using \(q\) as counter for the mesh point in \(x\) direction.

Preliminary results

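A key result needed in the investigations is the finite difference approximation of a second-order derivative acting on a complex wave component:

\[ [D_tD_t e^{i\omega t}]^n = \frac{e^{i\omega(t_n+\Delta t)} - 2e^{i\omega t_n} + e^{i\omega(t_n-\Delta t)}}{\Delta t^2} = e^{i\omega t_n}\frac{2}{\Delta t^2}\left(\cos(\omega\Delta t) - 1\right) = -e^{i\omega t_n}\frac{4}{\Delta t^2}\sin^2\left(\frac{\omega\Delta t}{2}\right). \]

By just changing symbols (\(\omega\rightarrow k\), \(t\rightarrow x\), \(n\rightarrow q\)) it follows that

\[ [D_xD_x e^{ikx}]_q = -e^{ikx_q}\frac{4}{\Delta x^2}\sin^2\left(\frac{k\Delta x}{2}\right). \]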

Numerical wave propagation

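Inserting a basic wave component \(u^n_q=e^{i(kx_q-\tilde\omega t_n)}\) in (83) results in the need to evaluate two expressions:

\[ [D_tD_t e^{ikx}e^{-i\tilde\omega t}]^n_q = -e^{ikx_q}e^{-i\tilde\omega t_n}\frac{4}{\Delta t^2}\sin^2\left(\frac{\tilde\omega\Delta t}{2}\right), \]

\[ [D_xD_x e^{ikx}e^{-i\tilde\omega t}]^n_q = -e^{ikx_q}e^{-i\tilde\omega t_n}\frac{4}{\Delta x^2}\sin^2\left(\frac{k\Delta x}{2}\right). \]

Then the complete scheme,

\[ [D_tD_t e^{ikx}e^{-i\tilde\omega t} = c^2D_xD_x e^{ikx}e^{-i\tilde\omega t}]^n_q, \]

leads to the following equation for the unknown numerical frequency \(\tilde\omega\) (after dividing by \(-e^{ikx_q}e^{-i\tilde\omega t_n}\)):

\[ \frac{4}{\Delta t^2}\sin^2\left(\frac{\tilde\omega\Delta t}{2}\right) = c^2\frac{4}{\Delta x^2}\sin^2\left(\frac{k\Delta x}{2}\right), \]

or

\[ \sin^2\left(\frac{\tilde\omega\Delta t}{2}\right) = C^2\sin^2\left(\frac{k\Delta x}{2}\right), \tag{86} \]

where

\[ C = \frac{c\Delta t}{\Delta x} \]

is the Courant number. Taking the square root of (86) yields

\[ \sin\left(\frac{\tilde\omega\Delta t}{2}\right) = C\sin\left(\frac{k\Delta x}{2}\right). \tag{88} \]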

Since the exact \(\omega\) is real, it is reasonable to look for a real solution \(\tilde\omega\) of (88). The right-hand side of (88) must then be in \([-1,1]\), because the sine function on the left-hand side has values in \([-1,1]\) for real \(\tilde\omega\). The sine function on the right-hand side attains its maximum magnitude 1 when

\[ \frac{k\Delta x}{2} = m\frac{\pi}{2},\quad m = \pm 1, \pm 3, \pm 5, \ldots \]

With \(m=1\) we have \(k\Delta x = \pi\), which means that the wavelength \(\lambda = 2\pi/k\) becomes \(2\Delta x\). This is the absolutely shortest wavelength that can be represented on the mesh: the wave jumps up and down between neighboring mesh points. Larger values of \(|m|\) are irrelevant, since these correspond to \(k\) values whose waves are too short to be represented on a mesh with spacing \(\Delta x\). For the shortest possible wave in the mesh, \(\sin\left(k\Delta x/2\right)=1\), and we must require

\[ C\leq 1. \tag{89} \]

Consider a right-hand side in (88) of magnitude larger than unity. The solution \(\tilde\omega\) of (88) must then be a complex number \(\tilde\omega = \tilde\omega_r + i\tilde\omega_i\), because the sine function can exceed unity in magnitude only for a complex argument. One can show that for any \(\tilde\omega_i\) there will also be a corresponding solution with \(-\tilde\omega_i\). Since \(e^{-i\tilde\omega t} = e^{-i\tilde\omega_r t}e^{\tilde\omega_i t}\), the component with \(\tilde\omega_i>0\) gives an amplification factor \(e^{\tilde\omega_i t}\) that grows exponentially in time. We cannot allow this and must therefore require \(C\leq 1\) as a stability criterion.
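The same conclusion can be reached through the amplification factor \(\lambda = e^{-i\tilde\omega\Delta t}\) of one time step. Inserting \(u^n_q = \lambda^n e^{ikx_q}\) in the scheme (83) and using the preliminary result for \(D_xD_x\) gives \(\lambda^2 - 2(1 - 2C^2\sin^2 p)\lambda + 1 = 0\) with \(p = k\Delta x/2\) (this rearrangement is ours, but it follows directly from the formulas above). The two roots multiply to 1, so either both have modulus 1 or one has modulus above 1. A minimal sketch for the shortest wave, \(p=\pi/2\):

```python
import numpy as np

def max_amplification(C, p):
    """Largest |lambda| among the roots of
    lambda**2 - 2*(1 - 2*C**2*sin(p)**2)*lambda + 1 = 0.

    lambda = exp(-i*omega_tilde*dt) multiplies a single Fourier
    component exp(i*k*x) in each time step; p = k*dx/2.
    """
    b = 1 - 2*C**2*np.sin(p)**2
    disc = np.sqrt(b*b - 1 + 0j)          # complex sqrt handles |b| < 1
    roots = np.array([b + disc, b - disc])
    return np.max(np.abs(roots))

p = np.pi/2                               # shortest wave: k*dx = pi
for C in [0.5, 0.9, 1.0, 1.01, 1.1]:
    print(C, max_amplification(C, p))
```

For \(C\leq 1\) we have \(|1 - 2C^2\sin^2 p|\leq 1\), the roots are complex conjugates on the unit circle, and the largest modulus is 1; as soon as \(C>1\), the shortest wave gets a root with modulus above 1 and grows in every step.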

Remark on the stability requirement

For smoother wave components, with wavelengths much longer than \(\Delta x\), (89) can in theory be relaxed. However, small round-off errors are always present in a numerical solution. These vary arbitrarily from mesh point to mesh point and can be viewed as unavoidable noise with wavelength \(2\Delta x\). As explained, \(C>1\) leads to exponential growth of exactly this shortest possible wave component in the mesh, so the noise will grow with time and destroy the whole solution.

Numerical dispersion relation

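Equation (88) can be solved with respect to \(\tilde\omega\):

\[ \tilde\omega = \frac{2}{\Delta t}\sin^{-1}\left(C\sin\left(\frac{k\Delta x}{2}\right)\right). \tag{90} \]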

The relation between the numerical frequency \(\tilde\omega\) and the other parameters \(k\), \(c\), \(\Delta x\), and \(\Delta t\) is called a numerical dispersion relation. Correspondingly, \(\omega =kc\) is the analytical dispersion relation. In general, dispersion refers to the phenomenon where the wave velocity depends on the spatial frequency (\(k\), or the wave length \(\lambda = 2\pi/k\)) of the wave. Since the wave velocity is \(\omega/k =c\), we realize that the analytical dispersion relation reflects the fact that there is no dispersion. However, in a numerical scheme we have dispersive waves where the wave velocity depends on \(k\).
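The dispersion relation (90) can be checked directly against the scheme. With \(\tilde\omega\) from (90), the mesh function \(e^{i(kx_q - \tilde\omega t_n)}\) should make the residual of (83) vanish to round-off, while the exact \(\omega = kc\) should leave a nonzero residual. The sketch below uses arbitrary parameter values and evaluates the residual at one arbitrary mesh point; the helper name `residual` is ours.

```python
import numpy as np

c, dx, C = 1.0, 0.1, 0.8                # illustrative parameters
dt = C*dx/c
k = 2*np.pi/(10*dx)                     # 10 mesh cells per wavelength

def residual(omega, q=3, n=4):
    """|[DtDt u - c^2 DxDx u]^n_q| for u^n_q = exp(i(k q dx - omega n dt))."""
    def u(q, n):
        return np.exp(1j*(k*q*dx - omega*n*dt))
    DtDt = (u(q, n+1) - 2*u(q, n) + u(q, n-1))/dt**2
    DxDx = (u(q+1, n) - 2*u(q, n) + u(q-1, n))/dx**2
    return abs(DtDt - c**2*DxDx)

omega_tilde = 2/dt*np.arcsin(C*np.sin(k*dx/2))   # numerical, from (90)
omega_exact = k*c                                 # analytical
print(residual(omega_tilde), residual(omega_exact))
```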

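The special case \(C=1\) deserves attention, since then the right-hand side of (90) reduces to

\[ \frac{2}{\Delta t}\sin^{-1}\left(\sin\left(\frac{k\Delta x}{2}\right)\right) = \frac{2}{\Delta t}\frac{k\Delta x}{2} = \frac{2}{\Delta t}\frac{kc\Delta t}{2} = kc = \omega, \]

where we have used \(\Delta x = c\Delta t\) (which is what \(C=1\) means) and \(k\Delta x/2\leq\pi/2\).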

That is, \(\tilde\omega = \omega\) and the numerical solution is exact at all mesh points regardless of \(\Delta x\) and \(\Delta t\)! This implies that the numerical solution method is also an analytical solution method, at least for computing \(u\) at discrete points (the numerical method says nothing about the variation of \(u\) between the mesh points, and employing the common linear interpolation for extending the discrete solution gives a curve that in general deviates from the exact one).
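The exactness for \(C=1\) is easy to observe in a small experiment. The sketch below runs the scheme with \(C=1\) on a periodic mesh (periodicity is an assumption made here to avoid boundary conditions) and compares with (76) at the mesh points. With \(\Delta x = c\Delta t\), the exact solution at \(t_n\) is \(\frac{1}{2}(I_{q-n} + I_{q+n})\), i.e., shifted copies of the initial mesh function, which `np.roll` produces.

```python
import numpy as np

N = 200                                  # number of mesh points (periodic)
x = np.arange(N)/N                       # mesh on [0, 1), dx = 1/N
I = np.exp(-0.5*((x - 0.5)/0.05)**2)     # initial profile (illustrative)

# Scheme with C = 1: u^{n+1}_q = u^n_{q+1} + u^n_{q-1} - u^{n-1}_q.
# The first step uses u_t(x,0) = 0: u^1_q = (u^0_{q+1} + u^0_{q-1})/2
u_prev = I.copy()
u = 0.5*(np.roll(I, -1) + np.roll(I, 1))
n_steps = 150
for n in range(1, n_steps):
    u_next = np.roll(u, -1) + np.roll(u, 1) - u_prev
    u_prev, u = u, u_next

# After n_steps steps, (76) at the mesh points is a sum of shifted copies of I
u_exact = 0.5*(np.roll(I, n_steps) + np.roll(I, -n_steps))
print(np.max(np.abs(u - u_exact)))       # round-off level
```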

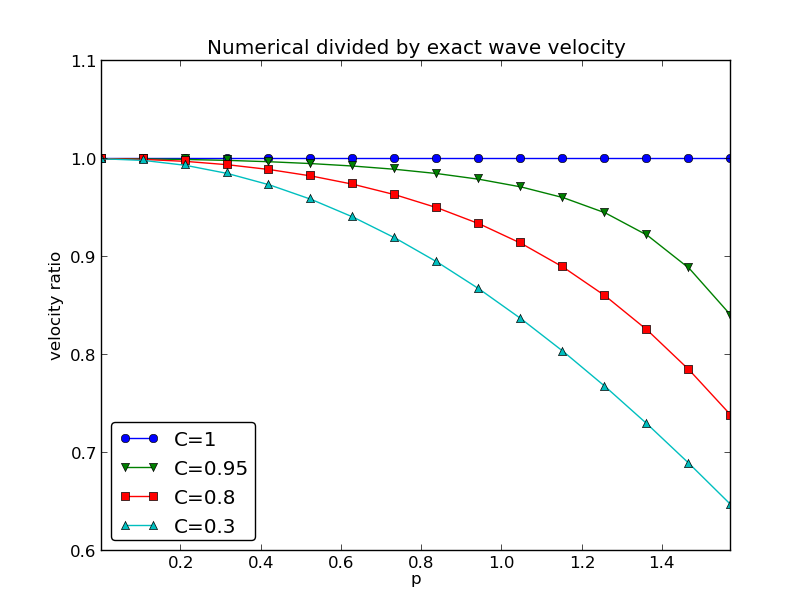

For a closer examination of the error in the numerical dispersion relation when \(C<1\), we can study \(\tilde\omega -\omega\), \(\tilde\omega/\omega\), or the similar error measures in wave velocity: \(\tilde c - c\) and \(\tilde c/c\), where \(c=\omega /k\) and \(\tilde c = \tilde\omega /k\). It turns out that the most convenient expression to work with is \(\tilde c/c\), since it can be written as a function of just two parameters (using \(kc\Delta t = kC\Delta x = 2Cp\)):

\[ \frac{\tilde c}{c} = \frac{\tilde\omega}{kc} = \frac{2}{kc\Delta t}\sin^{-1}\left(C\sin p\right) = \frac{1}{Cp}\sin^{-1}\left(C\sin p\right), \]

with \(p=k\Delta x/2\) as a non-dimensional measure of the spatial frequency. In essence, \(p\) tells how well the wave component with frequency \(k\) is resolved in the mesh: since the wavelength is \(2\pi/k\), the number of mesh cells per wavelength is \(2\pi/(k\Delta x) = \pi/p\), so a small \(p\) means many cells per wavelength. Wave components with wavelength less than \(2\Delta x\) (\(2\pi/k < 2\Delta x\)) are not visible in the mesh, so it does not make sense to have \(p>\pi/2\).

We may introduce the function \(r(C, p)=\tilde c/c\) for further investigation of numerical errors in the wave velocity:

\[ r(C, p) = \frac{1}{Cp}\sin^{-1}\left(C\sin p\right). \]

This function is very well suited for plotting since it combines several parameters in the problem into a dependence on two dimensionless numbers, \(C\) and \(p\).

Defining

```python
from sympy import asin, sin   # SymPy versions, so r also works with symbols

def r(C, p):
    return 1/(C*p)*asin(C*sin(p))
```

we can plot \(r(C,p)\) as a function of \(p\) for various values of \(C\); see the figure "The fractional error in the wave velocity for different Courant numbers". Note that the shortest waves have the most erroneous velocity, and that short waves move more slowly than they should.
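For plotting, \(r\) must accept NumPy arrays, which the SymPy-based definition does not do elementwise. The sketch below uses a NumPy version (the name `r_numpy` and the chosen \(C\) values are ours) and evaluates it on a \(p\) grid; the values make it possible to check that \(r=1\) for \(C=1\) and that \(r\) decreases with \(p\) when \(C<1\), so the shortest waves are the slowest.

```python
import numpy as np

def r_numpy(C, p):
    """NumPy version of r(C, p) = arcsin(C*sin(p))/(C*p), elementwise."""
    return np.arcsin(C*np.sin(p))/(C*p)

p = np.linspace(0.001, np.pi/2, 101)   # p = k*dx/2, from long to shortest waves
results = {}
for C in [1.0, 0.95, 0.8, 0.3]:
    rp = r_numpy(C, p)
    results[C] = rp
    print('C=%.2f: r(p=0.001)=%.4f, r(p=pi/2)=%.4f' % (C, rp[0], rp[-1]))
```

Passing `p` and each `results[C]` to `plt.plot` gives curves like those in the figure.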

We can also easily make a Taylor series expansion in the discretization parameter \(p\):

```python
>>> import sympy as sym
>>> C, p = sym.symbols('C p')
>>> # Expand r around p=0 through the p**6 term and drop the O(p**7) term
>>> rs = r(C, p).series(p, 0, 7).removeO()
>>> rs
p**6*(5*C**6/112 - C**4/16 + 13*C**2/720 - 1/5040) +
p**4*(3*C**4/40 - C**2/12 + 1/120) +
p**2*(C**2/6 - 1/6) + 1
>>> # Pick out the leading-order error term (the p**2 term of rs - 1)
>>> rs_error_leading_order = sym.expand(rs - 1).coeff(p, 2)*p**2
>>> rs_error_leading_order
p**2*(C**2/6 - 1/6)
>>> # Turn the series expansion into a Python function
>>> rs_pyfunc = sym.lambdify([C, p], rs, modules='numpy')
>>> # Check: rs_pyfunc is exact (=1) for C=1
>>> rs_pyfunc(1, 0.1)
1.0
```

Note that without the `.removeO()` call the series gets an `O(p**7)` term that makes it impossible to convert the series to a Python function (for, e.g., plotting).

From the `rs_error_leading_order` expression above, we see that the leading order term in the error of this series expansion is

\[ \frac{1}{6}\left(\frac{k\Delta x}{2}\right)^2(C^2-1) = \frac{k^2}{24}\left(c^2\Delta t^2 - \Delta x^2\right), \]

pointing to an error \({\mathcal{O}(\Delta t^2, \Delta x^2)}\), which is compatible with the errors in the difference approximations (\(D_tD_tu\) and \(D_xD_xu\)).
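The leading-order term can also be checked numerically: for small \(p\), \(1 - r(C,p)\) should behave like \((1-C^2)p^2/6\), so the ratio of the two should approach 1 as \(p\rightarrow 0\). A short sketch with an arbitrary \(C=0.5\), using a NumPy helper `r_numpy` defined here for illustration:

```python
import numpy as np

def r_numpy(C, p):
    """NumPy version of r(C, p) = arcsin(C*sin(p))/(C*p)."""
    return np.arcsin(C*np.sin(p))/(C*p)

C = 0.5                                  # illustrative Courant number
ratios = []
for p in [0.4, 0.2, 0.1, 0.05]:
    error = 1 - r_numpy(C, p)            # relative velocity error
    leading = (1 - C**2)*p**2/6          # minus the leading term of r - 1
    ratios.append(error/leading)
    print(p, error/leading)              # approaches 1 as p decreases
```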

We can do more with a series expansion, e.g., factor it to see how the factor \(C-1\) plays a significant role. To this end, we make a list of the terms (one per power of \(p\)), factor each coefficient, and then sum the terms:

```python
>>> rs = sym.expand(r(C, p).series(p, 0, 7).removeO())
>>> # One term per power of p, each with a factored coefficient
>>> rs = [sym.factor(rs.coeff(p, n))*p**n for n in range(0, 7, 2)]
>>> rs
[1, p**2*(C - 1)*(C + 1)/6,
p**4*(C - 1)*(C + 1)*(3*C - 1)*(3*C + 1)/120,
p**6*(C - 1)*(C + 1)*(225*C**4 - 90*C**2 + 1)/5040]
>>> rs = sum(rs)  # Python's sum function sums the list
>>> rs
p**6*(C - 1)*(C + 1)*(225*C**4 - 90*C**2 + 1)/5040 +
p**4*(C - 1)*(C + 1)*(3*C - 1)*(3*C + 1)/120 +
p**2*(C - 1)*(C + 1)/6 + 1
```

We see from the last expression that \(C=1\) makes all the terms in `rs` except the constant 1 vanish. Since we already know that the numerical solution is exact for \(C=1\), the remaining terms in the Taylor series expansion will also contain factors of \(C-1\) and cancel for \(C=1\).

Extending the analysis to 2D and 3D

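The typical analytical solution of a 2D wave equation

\[ \frac{\partial^2 u}{\partial t^2} = c^2\left(\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2}\right) \]

is a wave traveling in the direction of \(\boldsymbol{k} = k_x\boldsymbol{i} + k_y\boldsymbol{j}\), where \(\boldsymbol{i}\) and \(\boldsymbol{j}\) are unit vectors in the \(x\) and \(y\) directions, respectively. Such a wave can be expressed by

\[ u(x,y,t) = g(k_xx + k_yy - kct) \]

for some twice differentiable function \(g\), or with \(\omega =kc\), \(k=|\boldsymbol{k}|\):

\[ u(x,y,t) = g(k_xx + k_yy - \omega t). \]

We can, in particular, build a solution by adding complex Fourier components of the form

\[ e^{i(k_xx + k_yy - \omega t)}. \]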

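A discrete 2D wave equation can be written as

\[ [D_tD_t u = c^2(D_xD_x u + D_yD_y u)]^n_{q,r}. \tag{93} \]

This equation admits a Fourier component

\[ u^n_{q,r} = e^{i(k_x q\Delta x + k_y r\Delta y - \tilde\omega n\Delta t)} \tag{94} \]

as solution. Letting the operators \(D_tD_t\), \(D_xD_x\), and \(D_yD_y\) act on \(u^n_{q,r}\) from (94) transforms (93), after dividing by the common factor \(-u^n_{q,r}\), to

\[ \frac{4}{\Delta t^2}\sin^2\left(\frac{\tilde\omega\Delta t}{2}\right) = c^2\frac{4}{\Delta x^2}\sin^2\left(\frac{k_x\Delta x}{2}\right) + c^2\frac{4}{\Delta y^2}\sin^2\left(\frac{k_y\Delta y}{2}\right), \]

or

\[ \sin^2\left(\frac{\tilde\omega\Delta t}{2}\right) = C_x^2\sin^2 p_x + C_y^2\sin^2 p_y, \]

where we have eliminated the factor 4 and introduced the symbols

\[ C_x = \frac{c\Delta t}{\Delta x},\quad C_y = \frac{c\Delta t}{\Delta y},\quad p_x = \frac{k_x\Delta x}{2},\quad p_y = \frac{k_y\Delta y}{2}. \]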

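For a real-valued \(\tilde\omega\) the right-hand side must be less than or equal to unity in absolute value. Since \(\sin^2 p_x\) and \(\sin^2 p_y\) can both equal 1 for the shortest waves in the mesh, this requires in general that

\[ C_x^2 + C_y^2 \leq 1. \]

This gives the stability criterion, more commonly expressed directly as an inequality for the time step:

\[ \Delta t \leq \frac{1}{c}\left(\frac{1}{\Delta x^2} + \frac{1}{\Delta y^2}\right)^{-1/2}. \tag{97} \]

A similar, straightforward analysis for the 3D case leads to

\[ \Delta t \leq \frac{1}{c}\left(\frac{1}{\Delta x^2} + \frac{1}{\Delta y^2} + \frac{1}{\Delta z^2}\right)^{-1/2}. \]

In the case of a variable coefficient \(c^2=c^2(\boldsymbol{x})\), we must use the worst-case value over the spatial domain \(\Omega\),

\[ \bar c = \sqrt{\max_{\boldsymbol{x}\in\Omega} c^2(\boldsymbol{x})}, \]

in the stability criteria. Often, especially in the variable wave velocity case, it is wise to introduce a safety factor \(\beta\in (0,1]\) too:

\[ \Delta t \leq \beta\frac{1}{\bar c}\left(\frac{1}{\Delta x^2} + \frac{1}{\Delta y^2} + \frac{1}{\Delta z^2}\right)^{-1/2}. \]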

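The exact numerical dispersion relations in 2D and 3D become, for constant \(c\),

\[ \tilde\omega = \frac{2}{\Delta t}\sin^{-1}\left(\left(C_x^2\sin^2 p_x + C_y^2\sin^2 p_y\right)^{1/2}\right), \]

\[ \tilde\omega = \frac{2}{\Delta t}\sin^{-1}\left(\left(C_x^2\sin^2 p_x + C_y^2\sin^2 p_y + C_z^2\sin^2 p_z\right)^{1/2}\right), \]

with \(C_z = c\Delta t/\Delta z\) and \(p_z = k_z\Delta z/2\) in 3D.

We can visualize the numerical dispersion error in 2D much like we did in 1D. To this end, we need to reduce the number of parameters in \(\tilde\omega\). The direction of the wave is parameterized by the polar angle \(\theta\), which means that

\[ k_x = k\cos\theta,\quad k_y = k\sin\theta. \]

A simplification is to set \(\Delta x=\Delta y=h\). Then \(C_x=C_y=c\Delta t/h\), which we call \(C\). Also,

\[ p_x = \frac{1}{2}kh\cos\theta,\quad p_y = \frac{1}{2}kh\sin\theta. \]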

The numerical frequency \(\tilde\omega\) is now a function of three parameters:

- \(C\), reflecting the number of cells a wave is displaced during a time step,

- \(p=\frac{1}{2} kh\), reflecting the spatial resolution of the wave: there are \(\pi/p\) cells per wavelength,

- \(\theta\), expressing the direction of the wave.

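We want to visualize the error in the numerical frequency. To avoid having \(\Delta t\) as a free parameter in \(\tilde\omega\), we work with \(\tilde c/c = \tilde\omega/(kc)\). The coefficient in front of the \(\sin^{-1}\) factor is then

\[ \frac{2}{kc\Delta t} = \frac{2}{kh\,C} = \frac{1}{Cp}, \]

and

\[ \frac{\tilde c}{c} = \frac{1}{Cp}\sin^{-1}\left(C\sqrt{\sin^2(p\cos\theta) + \sin^2(p\sin\theta)}\right). \]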

We want to visualize this quantity as a function of \(p\) and \(\theta\) for some values of \(C\leq 1\). It is instructive to make color contour plots of \(1-\tilde c/c\) in polar coordinates with \(\theta\) as the angular coordinate and \(p\) as the radial coordinate.
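Before making contour plots, the formula for \(\tilde c/c\) can be spot-checked in two directions. The sketch below (the helper name `c_ratio_2D` is ours) shows that for \(C = C_{\max} = 1/\sqrt{2}\) the 45-degree direction is exact, since the argument of \(\sin^{-1}\) then reduces to \(\sin(p/\sqrt{2})\), while propagation along an axis reduces to the 1D ratio \(r(C,p)\).

```python
import numpy as np

def c_ratio_2D(p, theta, C):
    """c~/c = arcsin(C*sqrt(sin(p cos th)^2 + sin(p sin th)^2))/(C*p)."""
    arg = C*np.sqrt(np.sin(p*np.cos(theta))**2 + np.sin(p*np.sin(theta))**2)
    return np.arcsin(arg)/(C*p)

C_max = 1/np.sqrt(2)
p = np.linspace(0.01, np.pi/2, 50)

diag = c_ratio_2D(p, np.pi/4, C_max)          # 45 degrees
axis = c_ratio_2D(p, 0.0, C_max)              # along the x axis
r_1D = np.arcsin(C_max*np.sin(p))/(C_max*p)   # 1D ratio r(C, p)

print(np.max(np.abs(diag - 1)))      # round-off level: exact at 45 degrees
print(np.max(np.abs(axis - r_1D)))   # axis direction reduces to 1D
```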

The stability criterion (97) becomes \(C\leq C_{\max} = 1/\sqrt{2}\) in the present 2D case with the \(C\) defined above. Let us plot \(1-\tilde c/c\) in polar coordinates for \(C_{\max}, 0.9C_{\max}, 0.5C_{\max}, 0.2C_{\max}\). The program below does the somewhat tricky work in Matplotlib, and the result appears in the figure "Error in numerical dispersion in 2D". From the figure we clearly see that the maximum \(C\) value gives the best results, and that waves whose propagation direction makes an angle of 45 degrees with an axis are the most accurate.

```python
import numpy as np
from numpy import cos, sin, arcsin, sqrt, pi   # for nicer math formulas
import matplotlib.pyplot as plt

def dispersion_relation_2D(p, theta, C):
    """Return c~/c for the 2D scheme with dx = dy = h."""
    arg = C*sqrt(sin(p*cos(theta))**2 +
                 sin(p*sin(theta))**2)
    c_frac = 1./(C*p)*arcsin(arg)
    return c_frac

p = np.linspace(0.001, pi/2, 101)      # radial coordinate
theta = np.linspace(0, 2*pi, 51)       # angular coordinate
p, theta = np.meshgrid(p, theta)

# Make 2x2 filled contour plots for 4 values of C
C_max = 1/sqrt(2)
C = [[C_max, 0.9*C_max], [0.5*C_max, 0.2*C_max]]

# Compute all errors first so that the four plots share one color scale
errors = [[1 - dispersion_relation_2D(p, theta, C[row][column])
           for column in range(2)] for row in range(2)]
error_min = min(e.min() for row_errors in errors for e in row_errors)
error_max = max(e.max() for row_errors in errors for e in row_errors)
levels = np.linspace(error_min, error_max, 51)

fig, axes = plt.subplots(2, 2, subplot_kw=dict(polar=True))
for row in range(2):
    for column in range(2):
        error = errors[row][column]
        print(error.min(), error.max())
        cs = axes[row][column].contourf(theta, p, error, levels)
        axes[row][column].set_xticks([])
        axes[row][column].set_yticks([])

# Add colorbar to the last plot (valid for all plots: same levels)
cbar = plt.colorbar(cs)
cbar.ax.set_ylabel('error in wave velocity')
plt.savefig('disprel2D.png'); plt.savefig('disprel2D.pdf')
plt.show()
```
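The stability limits derived above (the 2D criterion (97), its 3D counterpart, and the version with a safety factor) can be collected in one small helper. This is a sketch: the function name `stable_time_step` and its arguments are our own choices, and `c_max` plays the role of the worst-case value \(\bar c\).

```python
import numpy as np

def stable_time_step(c_max, spacings, beta=1.0):
    """Largest stable dt for the explicit scheme in 1D, 2D, or 3D.

    c_max    : worst-case wave velocity, max of c over the domain
    spacings : sequence of mesh spacings, e.g. (dx,), (dx, dy), (dx, dy, dz)
    beta     : safety factor in (0, 1]
    """
    if not 0 < beta <= 1:
        raise ValueError('beta must be in (0, 1]')
    inv_sq = sum(1.0/h**2 for h in spacings)
    return beta/(c_max*np.sqrt(inv_sq))

h = 0.01
dt_1D = stable_time_step(1.0, (h,))
dt_2D = stable_time_step(1.0, (h, h))
dt_3D = stable_time_step(2.0, (h, h, h), beta=0.9)
print(dt_1D, 1.0*dt_2D/h, dt_3D)   # C = c*dt/h is 1 in 1D and 1/sqrt(2) in 2D
```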