Analysis of finite difference equations

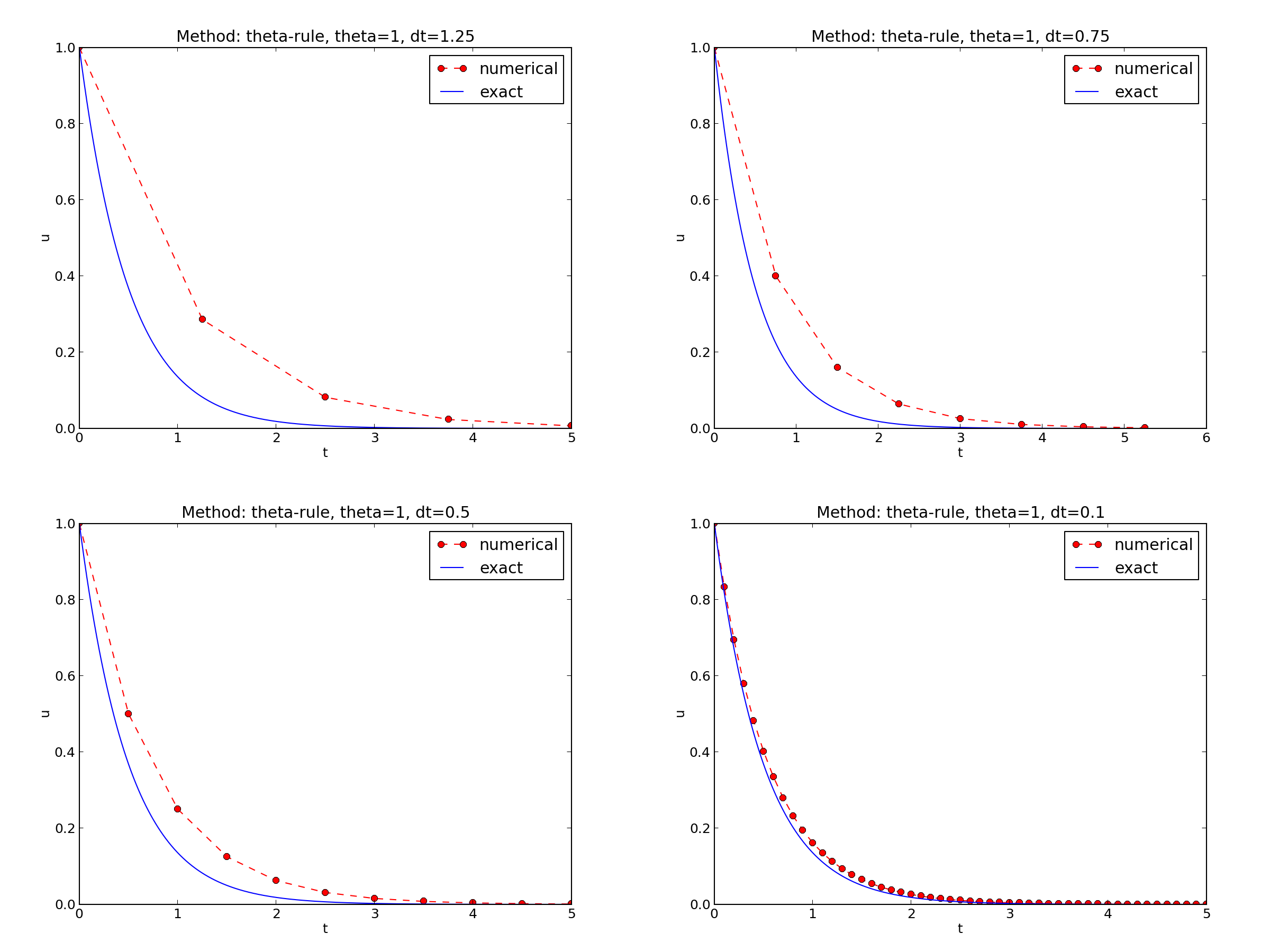

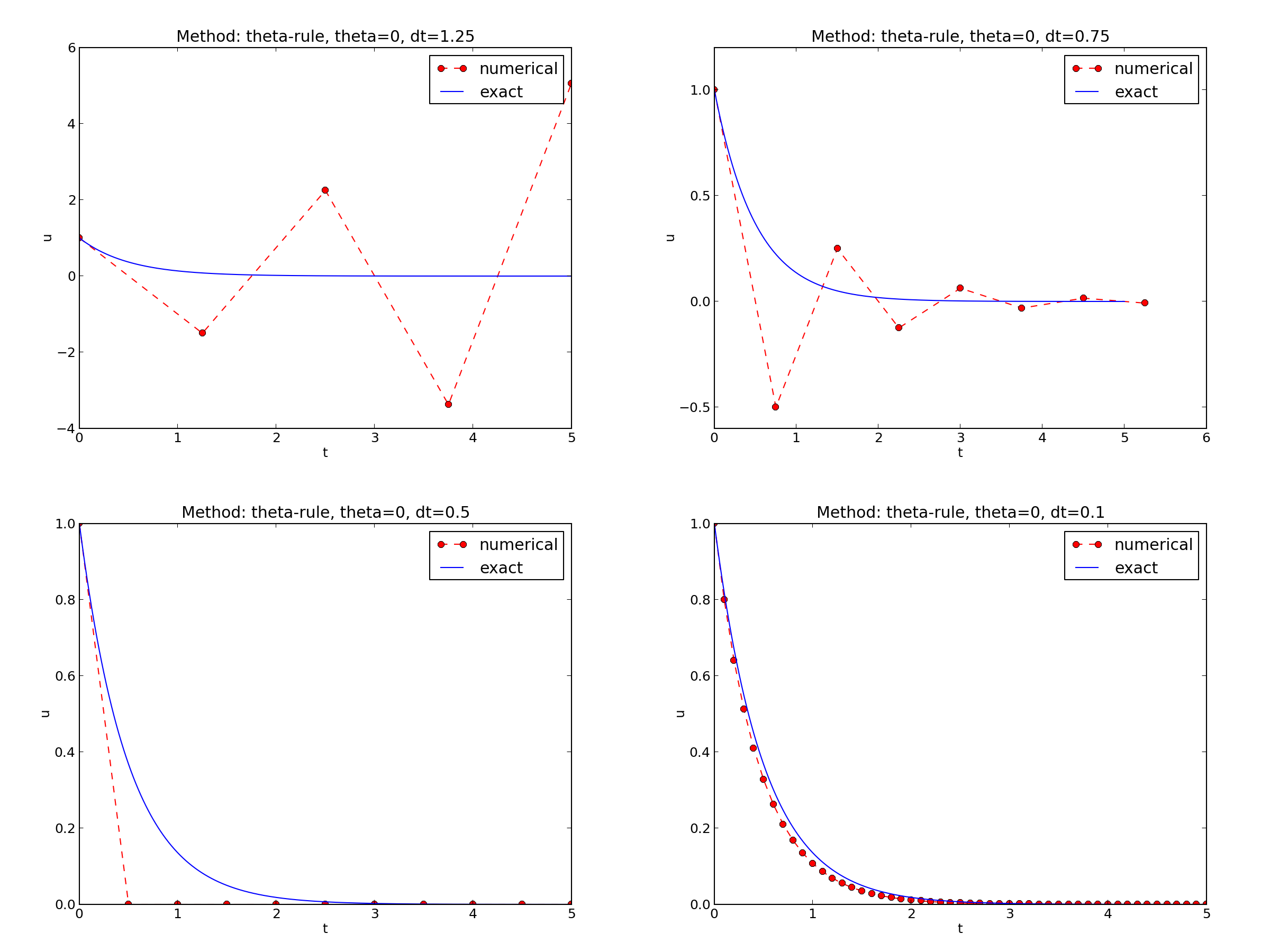

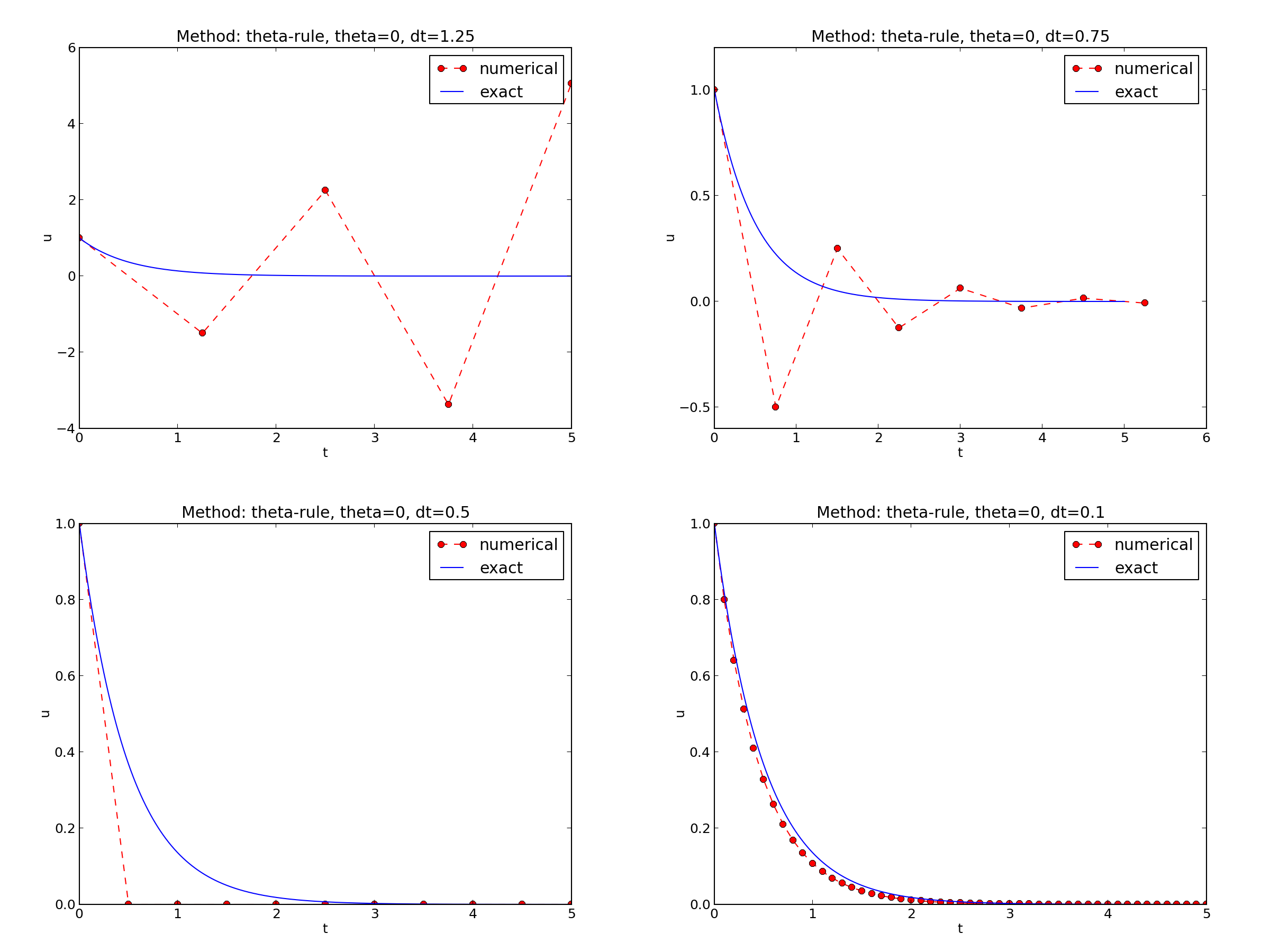

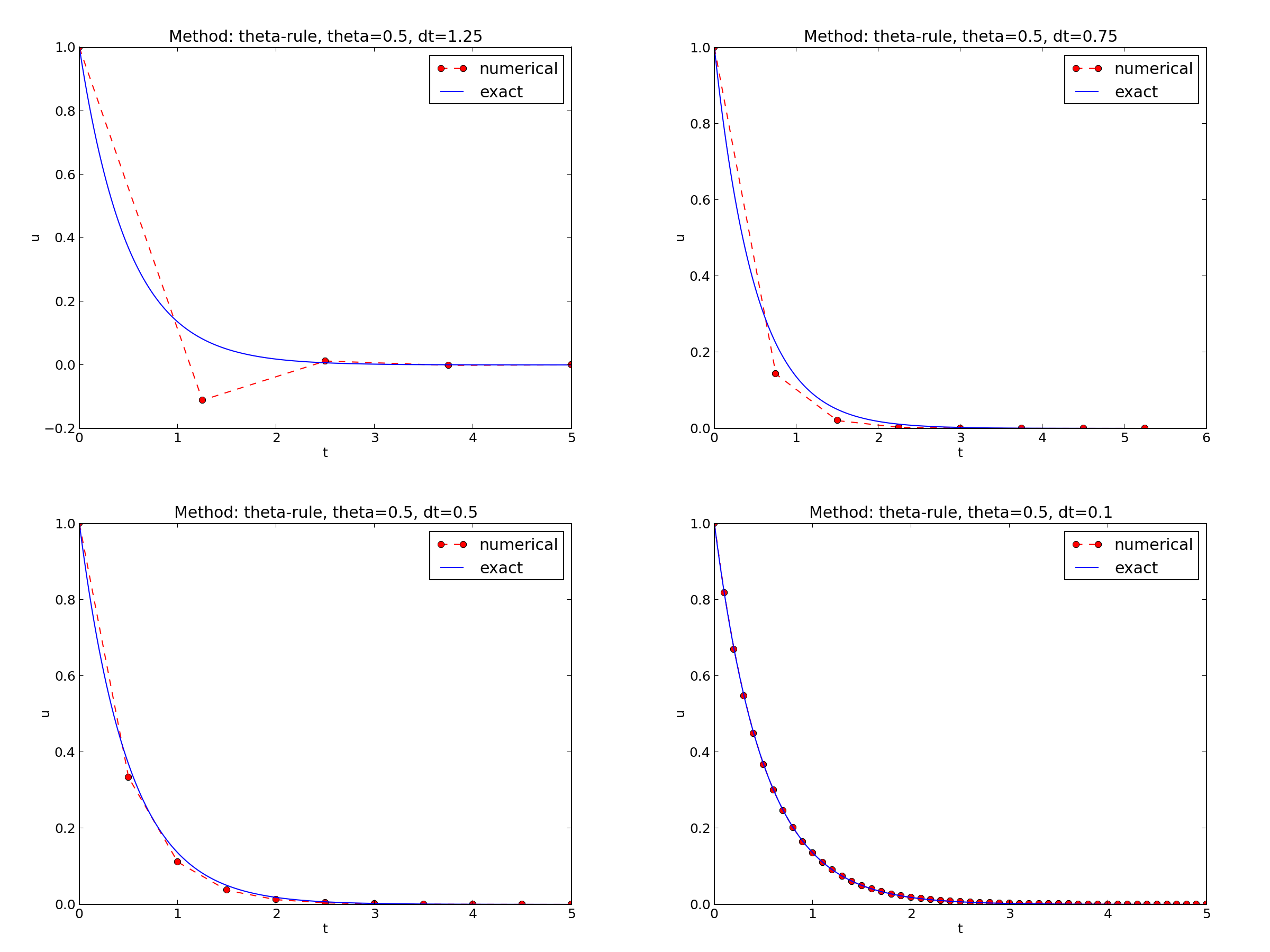

Encouraging numerical solutions

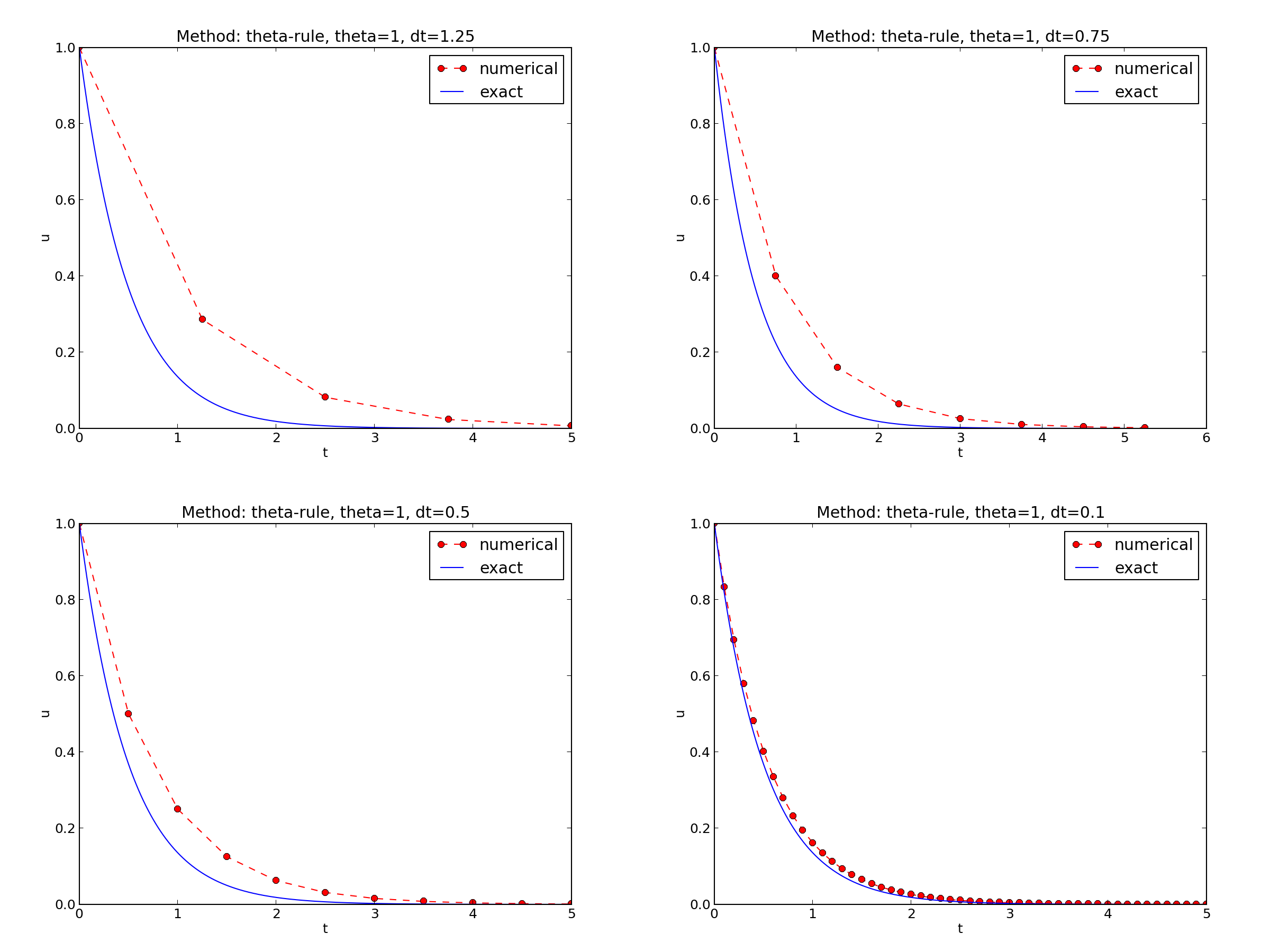

Discouraging numerical solutions; Crank-Nicolson

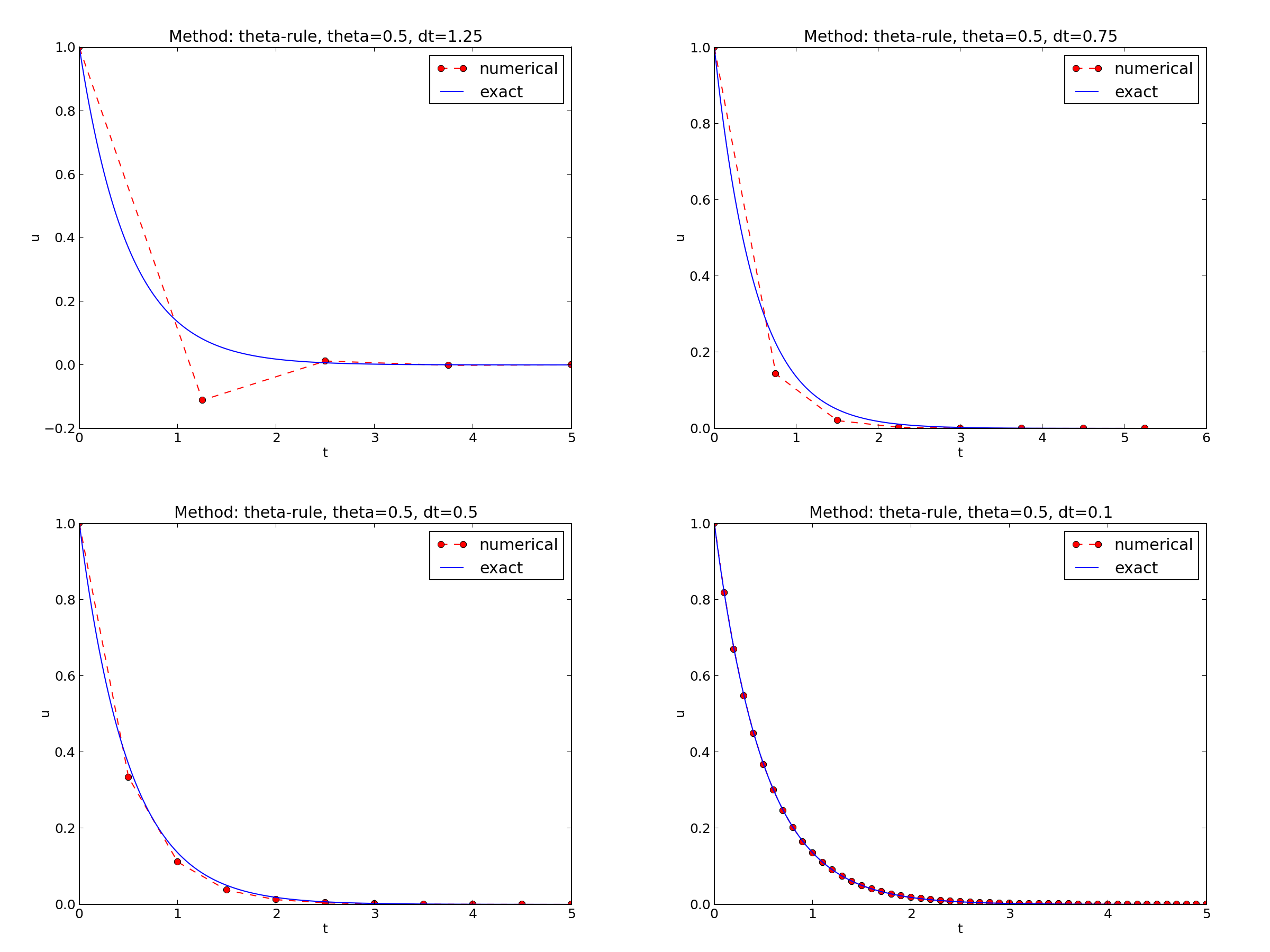

Discouraging numerical solutions; Forward Euler

Summary of observations

Problem setting

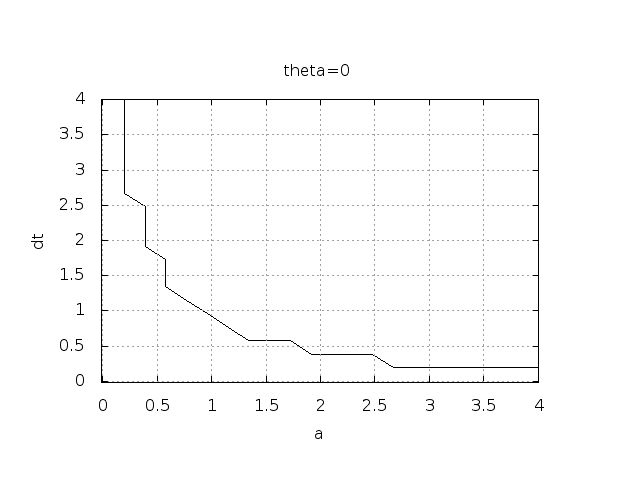

Experimental investigation of oscillatory solutions

Exact numerical solution

Stability

Computation of stability in this problem

Computation of stability in this problem

Explanation of problems with Forward Euler

Explanation of problems with Crank-Nicolson

Summary of stability

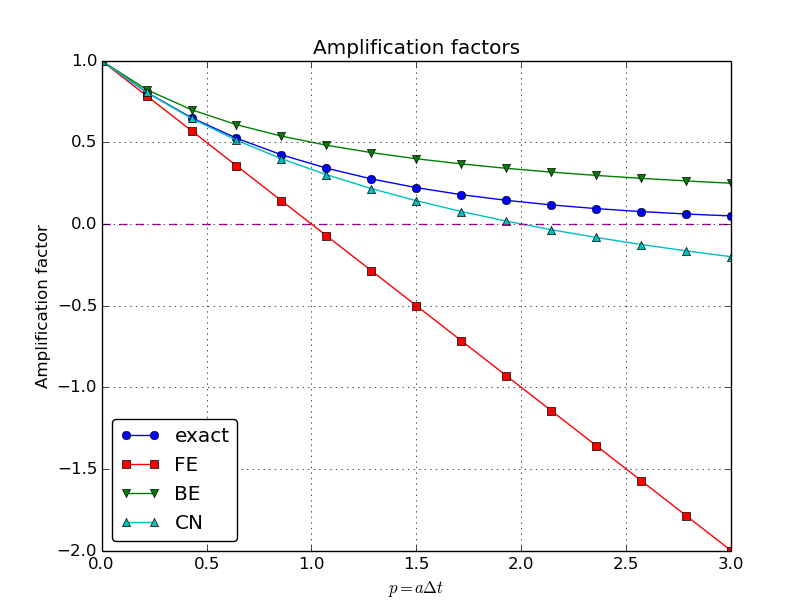

Comparing amplification factors

Plot of amplification factors

\( p=a\Delta t \) is the important parameter for numerical performance

Series expansion of amplification factors

Error in amplification factors

The fraction of numerical and exact amplification factors

The true/global error at a point

Computing the global error at a point

Convergence

Integrated errors

Truncation error

Computation of the truncation error

The truncation error for other schemes

Consistency, stability, and convergence

Model: $$ \begin{equation} u'(t) = -au(t),\quad u(0)=I \label{_auto1} \end{equation} $$

Method: $$ \begin{equation} u^{n+1} = \frac{1 - (1-\theta) a\Delta t}{1 + \theta a\Delta t}u^n \label{decay:analysis:scheme} \end{equation} $$

How good is this method? Is it safe to use it?

\( I=1 \), \( a=2 \), \( \theta =1,0.5, 0 \), \( \Delta t=1.25, 0.75, 0.5, 0.1 \).

The characteristics of the displayed curves can be summarized as follows:

We ask the question

The solution is oscillatory if $$ u^{n} > u^{n-1}$$ ("Safe choices" of \( \Delta t \) lie under the following curve as a function of \( a \).)

Seems that \( a\Delta t < 1 \) for FE and 2 for CN.

Starting with \( u^0=I \), the simple recursion \eqref{decay:analysis:scheme} can be applied repeatedly \( n \) times, with the result that $$ \begin{equation} u^{n} = IA^n,\quad A = \frac{1 - (1-\theta) a\Delta t}{1 + \theta a\Delta t} \label{decay:analysis:unex} \end{equation} $$

Such a formula for the exact discrete solution is unusual to obtain in practice, but very handy for our analysis here.

Note: An exact dicrete solution fulfills a discrete equation (without round-off errors), whereas an exact solution fulfills the original mathematical equation.

Since \( u^n=I A^n \),

\( A < 0 \) if $$ \frac{1 - (1-\theta) a\Delta t}{1 + \theta a\Delta t} < 0 $$ To avoid oscillatory solutions we must have \( A> 0 \) and $$ \begin{equation} \Delta t < \frac{1}{(1-\theta)a}\ \label{_auto2} \end{equation} $$

\( |A|\leq 1 \) means \( -1\leq A\leq 1 \) $$ \begin{equation} -1\leq\frac{1 - (1-\theta) a\Delta t}{1 + \theta a\Delta t} \leq 1 \label{decay:th:stability} \end{equation} $$ \( -1 \) is the critical limit (because \( A\le 1 \) is always satisfied): $$ \begin{align*} \Delta t &\leq \frac{2}{(1-2\theta)a},\quad \mbox{when }\theta < \half \end{align*} $$

\( u^{n+1} \) is an amplification \( A \) of \( u^n \): $$ u^{n+1} = Au^n,\quad A = \frac{1 - (1-\theta) a\Delta t}{1 + \theta a\Delta t} $$

The exact solution is also an amplification: $$ u(t_{n+1}) = \Aex u(t_n), \quad \Aex = e^{-a\Delta t}$$

A possible measure of accuracy: \( \Aex - A \)

If we scale the model by \( \bar t=at \), \( \bar u=u/I \), we get \( d\bar u/d\bar t = -\bar u \), \( \bar u(0)=1 \) (no physical parameters!). The analysis show that \( \Delta \bar t \) is key, corresponding to \( a\Delta t \) in the unscaled model.

To investigate \( \Aex - A \) mathematically, we can Taylor expand the expression, using \( p=a\Delta t \) as variable.

>>> from sympy import *

>>> # Create p as a mathematical symbol with name 'p'

>>> p = Symbol('p')

>>> # Create a mathematical expression with p

>>> A_e = exp(-p)

>>>

>>> # Find the first 6 terms of the Taylor series of A_e

>>> A_e.series(p, 0, 6)

1 + (1/2)*p**2 - p - 1/6*p**3 - 1/120*p**5 + (1/24)*p**4 + O(p**6)

>>> theta = Symbol('theta')

>>> A = (1-(1-theta)*p)/(1+theta*p)

>>> FE = A_e.series(p, 0, 4) - A.subs(theta, 0).series(p, 0, 4)

>>> BE = A_e.series(p, 0, 4) - A.subs(theta, 1).series(p, 0, 4)

>>> half = Rational(1,2) # exact fraction 1/2

>>> CN = A_e.series(p, 0, 4) - A.subs(theta, half).series(p, 0, 4)

>>> FE

(1/2)*p**2 - 1/6*p**3 + O(p**4)

>>> BE

-1/2*p**2 + (5/6)*p**3 + O(p**4)

>>> CN

(1/12)*p**3 + O(p**4)

Focus: the error measure \( A-\Aex \) as function of \( \Delta t \) (recall that \( p=a\Delta t \)): $$ \begin{equation} A-\Aex = \left\lbrace\begin{array}{ll} \Oof{\Delta t^2}, & \hbox{Forward and Backward Euler},\\ \Oof{\Delta t^3}, & \hbox{Crank-Nicolson} \end{array}\right. \label{_auto3} \end{equation} $$

Focus: the error measure \( 1-A/\Aex \) as function of \( p=a\Delta t \):

>>> FE = 1 - (A.subs(theta, 0)/A_e).series(p, 0, 4)

>>> BE = 1 - (A.subs(theta, 1)/A_e).series(p, 0, 4)

>>> CN = 1 - (A.subs(theta, half)/A_e).series(p, 0, 4)

>>> FE

(1/2)*p**2 + (1/3)*p**3 + O(p**4)

>>> BE

-1/2*p**2 + (1/3)*p**3 + O(p**4)

>>> CN

(1/12)*p**3 + O(p**4)

Same leading-order terms as for the error measure \( A-\Aex \).

>>> n = Symbol('n')

>>> u_e = exp(-p*n) # I=1

>>> u_n = A**n # I=1

>>> FE = u_e.series(p, 0, 4) - u_n.subs(theta, 0).series(p, 0, 4)

>>> BE = u_e.series(p, 0, 4) - u_n.subs(theta, 1).series(p, 0, 4)

>>> CN = u_e.series(p, 0, 4) - u_n.subs(theta, half).series(p, 0, 4)

>>> FE

(1/2)*n*p**2 - 1/2*n**2*p**3 + (1/3)*n*p**3 + O(p**4)

>>> BE

(1/2)*n**2*p**3 - 1/2*n*p**2 + (1/3)*n*p**3 + O(p**4)

>>> CN

(1/12)*n*p**3 + O(p**4)

Substitute \( n \) by \( t/\Delta t \):

The numerical scheme is convergent if the global error \( e^n\rightarrow 0 \) as \( \Delta t\rightarrow 0 \). If the error has a leading order term \( \Delta t^r \), the convergence rate is of order \( r \).

Focus: norm of the numerical error $$ ||e^n||_{\ell^2} = \sqrt{\Delta t\sum_{n=0}^{N_t} ({\uex}(t_n) - u^n)^2}$$

Forward and Backward Euler: $$ ||e^n||_{\ell^2} = \frac{1}{4}\sqrt{\frac{T^3}{3}} a^2\Delta t$$

Crank-Nicolson: $$ ||e^n||_{\ell^2} = \frac{1}{12}\sqrt{\frac{T^3}{3}}a^3\Delta t^2$$

Analysis of both the pointwise and the time-integrated true errors:

Backward Euler: $$ R^n \approx -\half\uex''(t_n)\Delta t $$

Crank-Nicolson: $$ R^{n+\half} \approx \frac{1}{24}\uex'''(t_{n+\half})\Delta t^2$$

(Consistency and stability is in most problems much easier to establish than convergence.)