Study Guide: Introduction to Finite Element Methods

Dec 16, 2013

Why finite elements?

- Can with ease solve PDEs in domains with complex geometry

- Can with ease provide higher-order approximations

- Has (in simpler stationary problems) a rigorous mathematical analysis framework (not much considered here)

Domain for flow around a dolphin

The flow

Basic ingredients of the finite element method

- Transform the PDE problem to a variational form

- Define function approximation over finite elements

- Use a machinery to derive linear systems

- Solve linear systems

Our learning strategy

- Start with approximation of functions, not PDEs

- Introduce finite element approximations

- See later how this is applied to PDEs

Reason: the finite element method has many concepts and a jungle of details. This strategy minimizes the mixing of ideas, concepts, and technical details.

Approximation in vector spaces

Approximation set-up

General idea of finding an approximation \( u(x) \) to some given \( f(x) \):

$$

\begin{equation}

u(x) = \sum_{i=0}^N c_i\baspsi_i(x)

\tag{1}

\end{equation}

$$

where

- \( \baspsi_i(x) \) are prescribed functions

- \( c_i \), \( i=0,\ldots,N \) are unknown coefficients to be determined

How to determine the coefficients?

We shall address three approaches:

- The least squares method

- The projection (or Galerkin) method

- The interpolation (or collocation) method

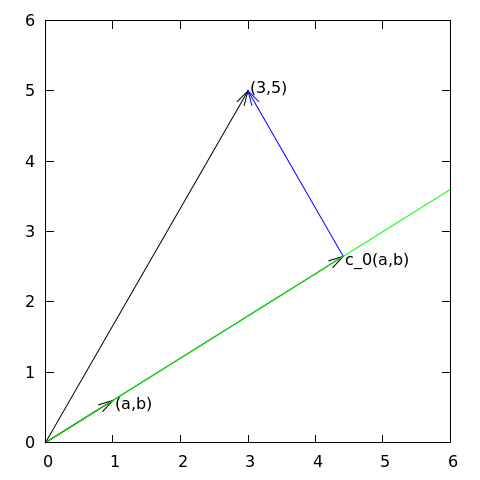

Approximation of planar vectors; problem

Given a vector \( \f = (3,5) \), find an approximation to \( \f \) directed along a given line.

Approximation of planar vectors; vector space terminology

$$

\begin{equation}

V = \mbox{span}\,\{ \psib_0\}

\end{equation}

$$

- \( \psib_0 \) is a basis vector in the space \( V \)

- Seek \( \u = c_0\psib_0\in V \)

- Determine \( c_0 \) such that \( \u \) is the "best" approximation to \( \f \)

- Visually, "best" is obvious

Define

- the error \( \e = \f - \u \)

- the (Euclidean) scalar product of two vectors: \( (\u,\v) \)

- the norm of \( \e \): \( ||\e|| = \sqrt{(\e, \e)} \)

The least squares method; principle

- Idea: find \( c_0 \) such that \( ||\e|| \) is minimized

- Actually, we always minimize \( E=||\e||^2 \)

$$

\begin{equation*}

\frac{\partial E}{\partial c_0} = 0

\end{equation*}

$$

The least squares method; calculations

$$

\begin{equation}

E(c_0) = (\e,\e) = (\f,\f) - 2c_0(\f,\psib_0) + c_0^2(\psib_0,\psib_0)

\end{equation}

$$

$$

\begin{equation}

\frac{\partial E}{\partial c_0} = -2(\f,\psib_0) + 2c_0 (\psib_0,\psib_0) = 0

\tag{2}

\end{equation}

$$

$$

\begin{equation}

c_0 = \frac{(\f,\psib_0)}{(\psib_0,\psib_0)}

\tag{3}

\end{equation}

$$

$$

\begin{equation}

c_0 = \frac{3a + 5b}{a^2 + b^2}

\end{equation}

$$

Observation for later: the vanishing derivative (2) can be alternatively written as

$$

\begin{equation}

(\e, \psib_0) = 0

\tag{4}

\end{equation}

$$

The projection (or Galerkin) method

- Background: minimizing \( ||\e||^2 \) implies that \( \e \) is orthogonal to any vector \( \v \) in the space \( V \) (visually clear, but can easily be computed too)

- Alternative idea: demand \( (\e, \v) = 0,\quad\forall\v\in V \)

- Equivalent statement: \( (\e, \psib_0)=0 \) (see notes for why)

- Insert \( \e = \f - c_0\psib_0 \) and solve for \( c_0 \)

- Same equation for \( c_0 \) and hence same solution as in the least squares method
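Formula (3) and the orthogonality condition (4) can be checked numerically. The following is a minimal sketch, assuming a hypothetical direction vector \( \psib_0 = (a,b) = (2,1) \); that value is chosen only for illustration and does not come from the text.

```python
import numpy as np

f = np.array([3.0, 5.0])      # the vector to approximate
psi0 = np.array([2.0, 1.0])   # assumed direction (a, b) = (2, 1)

# Formula (3): c_0 = (f, psi_0) / (psi_0, psi_0) = (3a + 5b)/(a^2 + b^2)
c0 = np.dot(f, psi0) / np.dot(psi0, psi0)

u = c0 * psi0                 # best approximation in V = span{psi_0}
e = f - u                     # error vector

print("c0 =", c0)
print("(e, psi0) =", np.dot(e, psi0))   # should vanish up to round-off, cf. (4)
```

The least squares and Galerkin principles give the same `c0` here, so one computation illustrates both.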

Approximation of general vectors

Given a vector \( \f \), find an approximation \( \u\in V \):

$$

\begin{equation*}

V = \hbox{span}\,\{\psib_0,\ldots,\psib_N\}

\end{equation*}

$$

- We have a set of linearly independent basis vectors \( \psib_0,\ldots,\psib_N \)

- Any \( \u\in V \) can then be written as \( \u = \sum_{j=0}^Nc_j\psib_j \)

The least squares method

Idea: find \( c_0,\ldots,c_N \) such that \( E= ||\e||^2 \) is minimized, \( \e=\f-\u \).

$$

\begin{align*}

E(c_0,\ldots,c_N) &= (\e,\e) = (\f -\sum_jc_j\psib_j,\f -\sum_jc_j\psib_j)

\nonumber\\

&= (\f,\f) - 2\sum_{j=0}^Nc_j(\f,\psib_j) +

\sum_{p=0}^N\sum_{q=0}^N c_pc_q(\psib_p,\psib_q)

\end{align*}

$$

$$

\begin{equation*}

\frac{\partial E}{\partial c_i} = 0,\quad i=0,\ldots,N

\end{equation*}

$$

After some work we end up with a linear system

$$

\begin{align}

\sum_{j=0}^N A_{i,j}c_j &= b_i,\quad i=0,\ldots,N\\

A_{i,j} &= (\psib_i,\psib_j)\\

b_i &= (\psib_i, \f)

\end{align}

$$

The projection (or Galerkin) method

Can be shown that minimizing \( ||\e|| \) implies that \( \e \) is orthogonal to all \( \v\in V \):

$$

(\e,\v)=0,\quad \forall\v\in V

$$

which implies that \( \e \) must be orthogonal to each basis vector:

$$

\begin{equation}

(\e,\psib_i)=0,\quad i=0,\ldots,N

\tag{5}

\end{equation}

$$

This orthogonality condition is the principle of the projection (or Galerkin) method. Leads to the same linear system as in the least squares method.
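As an illustration of the linear system \( \sum_j A_{i,j}c_j = b_i \), here is a small sketch with made-up data: a vector in three dimensions approximated in the plane spanned by two basis vectors. The particular vectors are assumptions for the example only.

```python
import numpy as np

# Hypothetical data: approximate f in R^3 within span{psi_0, psi_1}
f = np.array([1.0, 2.0, 4.0])
psi = [np.array([1.0, 0.0, 1.0]),
       np.array([0.0, 1.0, 1.0])]
n = len(psi)

# A[i,j] = (psi_i, psi_j),  b[i] = (psi_i, f)
A = np.array([[np.dot(psi[i], psi[j]) for j in range(n)] for i in range(n)])
b = np.array([np.dot(psi[i], f) for i in range(n)])
c = np.linalg.solve(A, b)

u = sum(c[j] * psi[j] for j in range(n))
e = f - u

# Galerkin condition (5): the error is orthogonal to every basis vector
residuals = [np.dot(e, p) for p in psi]
print(c, residuals)
```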

Approximation of functions

Let \( V \) be a function space spanned by a set of basis functions \( \baspsi_0,\ldots,\baspsi_N \),

$$

\begin{equation*}

V = \hbox{span}\,\{\baspsi_0,\ldots,\baspsi_N\}

\end{equation*}

$$

Find \( u\in V \) as a linear combination of the basis functions:

$$

\begin{equation}

u = \sum_{j\in\If} c_j\baspsi_j,\quad\If = \{0,1,\ldots,N\}

\tag{6}

\end{equation}

$$

The least squares method

- Extend the ideas from the vector case: minimize the (square) norm of the error.

- What norm? The one induced by the inner product \( (f,g) = \int_\Omega f(x)g(x)\, dx \)

$$

\begin{equation}

E = (e,e) = (f-u,f-u) = (f(x)-\sum_{j\in\If} c_j\baspsi_j(x), f(x)-\sum_{j\in\If} c_j\baspsi_j(x))

\tag{7}

\end{equation}

$$

$$

\begin{equation}

E(c_0,\ldots,c_N) = (f,f) -2\sum_{j\in\If} c_j(f,\baspsi_j)

+ \sum_{p\in\If}\sum_{q\in\If} c_pc_q(\baspsi_p,\baspsi_q)

\end{equation}

$$

$$

\begin{equation*}

\frac{\partial E}{\partial c_i} = 0,\quad i\in\If

\end{equation*}

$$

After computations identical to the vector case, we get a linear system

$$

\begin{align}

\sum_{j\in\If} A_{i,j}c_j &= b_i,\quad i\in\If

\tag{8}\\

A_{i,j} &= (\baspsi_i,\baspsi_j)

\tag{9}\\

b_i &= (f,\baspsi_i)

\tag{10}

\end{align}

$$

The projection (or Galerkin) method

As before, minimizing \( (e,e) \) is equivalent to the projection (or Galerkin) method

$$

\begin{equation}

(e,v)=0,\quad\forall v\in V

\tag{11}

\end{equation}

$$

which means, as before,

$$

\begin{equation}

(e,\baspsi_i)=0,\quad i\in\If

\tag{12}

\end{equation}

$$

With the same algebra as in the multi-dimensional vector case, we get the same linear system as arose from the least squares method.

Example: linear approximation; problem

Approximate the parabola \( f(x) = 10(x-1)^2 - 1 \) on \( \Omega=[1,2] \) by a straight line, using the space

$$

\begin{equation*} V = \hbox{span}\,\{1, x\} \end{equation*}

$$

That is, \( \baspsi_0(x)=1 \), \( \baspsi_1(x)=x \), and \( N=1 \).

We seek

$$

\begin{equation*}

u=c_0\baspsi_0(x) + c_1\baspsi_1(x) = c_0 + c_1x

\end{equation*}

$$

Example: linear approximation; solution

$$

\begin{align}

A_{0,0} &= (\baspsi_0,\baspsi_0) = \int_1^21\cdot 1\, dx = 1\\

A_{0,1} &= (\baspsi_0,\baspsi_1) = \int_1^2 1\cdot x\, dx = 3/2\\

A_{1,0} &= A_{0,1} = 3/2\\

A_{1,1} &= (\baspsi_1,\baspsi_1) = \int_1^2 x\cdot x\,dx = 7/3

\end{align}

$$

$$

\begin{align}

b_0 &= (f,\baspsi_0) = \int_1^2 (10(x-1)^2 - 1)\cdot 1 \, dx = 7/3\\

b_1 &= (f,\baspsi_1) = \int_1^2 (10(x-1)^2 - 1)\cdot x\, dx = 13/3

\end{align}

$$

Solution of 2x2 linear system:

$$

\begin{equation}

c_0 = -38/3,\quad c_1 = 10,\quad u(x) = 10x - \frac{38}{3}

\end{equation}

$$

Example: linear approximation; plot

Implementation of the least squares method; ideas

Consider symbolic computation of the linear system, where

- \( f(x) \) is given as a `sympy` expression `f` (involving the symbol `x`),

- `psi` is a list of \( \sequencei{\baspsi} \),

- `Omega` is a 2-tuple/list holding the domain \( \Omega \)

Carry out the integrations, solve the linear system, and return \( u(x)=\sum_jc_j\baspsi_j(x) \)

Implementation of the least squares method; symbolic code

import sympy as sp

def least_squares(f, psi, Omega):
    """Return the least squares approximation u and the coefficients c."""
    N = len(psi) - 1
    A = sp.zeros(N+1, N+1)
    b = sp.zeros(N+1, 1)
    x = sp.Symbol('x')
    for i in range(N+1):
        # A is symmetric: compute the upper triangle and mirror it
        for j in range(i, N+1):
            A[i,j] = sp.integrate(psi[i]*psi[j],
                                  (x, Omega[0], Omega[1]))
            A[j,i] = A[i,j]
        b[i,0] = sp.integrate(psi[i]*f, (x, Omega[0], Omega[1]))
    c = A.LUsolve(b)
    u = 0
    for i in range(len(psi)):
        u += c[i,0]*psi[i]
    return u, c

Observe: symmetric coefficient matrix so we can halve the integrations.

Implementation of the least squares method; numerical code

- Symbolic integration may be impossible and/or very slow

- Turn to pure numerical computations in those cases

- Supply Python functions `f(x)`, `psi(x,i)`, and a mesh `x`

import numpy as np

def least_squares_numerical(f, psi, N, x,
                            integration_method='scipy',
                            orthogonal_basis=False):
    import scipy.integrate
    A = np.zeros((N+1, N+1))
    b = np.zeros(N+1)
    Omega = [x[0], x[-1]]
    dx = x[1] - x[0]
    for i in range(N+1):
        # Only the diagonal is needed for an orthogonal basis
        j_limit = i+1 if orthogonal_basis else N+1
        for j in range(i, j_limit):
            print('(%d,%d)' % (i, j))
            if integration_method == 'scipy':
                A_ij = scipy.integrate.quad(
                    lambda x: psi(x,i)*psi(x,j),
                    Omega[0], Omega[1], epsabs=1E-9, epsrel=1E-9)[0]
            elif ...
            A[i,j] = A[j,i] = A_ij
        if integration_method == 'scipy':
            b_i = scipy.integrate.quad(
                lambda x: f(x)*psi(x,i), Omega[0], Omega[1],
                epsabs=1E-9, epsrel=1E-9)[0]
        elif ...
        b[i] = b_i
    c = b/np.diag(A) if orthogonal_basis else np.linalg.solve(A, b)
    u = sum(c[i]*psi(x, i) for i in range(N+1))
    return u, c

Implementation of the least squares method; plotting

Compare \( f \) and \( u \) visually:

def comparison_plot(f, u, Omega, filename='tmp.pdf'):
    x = sp.Symbol('x')
    # Turn f and u to ordinary Python functions
    f = sp.lambdify([x], f, modules="numpy")
    u = sp.lambdify([x], u, modules="numpy")
    resolution = 401  # no of points in plot
    xcoor = linspace(Omega[0], Omega[1], resolution)
    exact = f(xcoor)
    approx = u(xcoor)
    plot(xcoor, approx)
    hold('on')
    plot(xcoor, exact)
    legend(['approximation', 'exact'])
    savefig(filename)

All code in module approx1D.py

Implementation of the least squares method; application

>>> from approx1D import *

>>> x = sp.Symbol('x')

>>> f = 10*(x-1)**2-1

>>> u, c = least_squares(f=f, psi=[1, x], Omega=[1, 2])

>>> comparison_plot(f, u, Omega=[1, 2])

Perfect approximation; parabola approximating parabola

- What if we add \( \baspsi_2=x^2 \) to the space \( V \)?

- That is, approximating a parabola by any parabola?

- (Hopefully we get the exact parabola!)

>>> from approx1D import *
>>> x = sp.Symbol('x')
>>> f = 10*(x-1)**2-1
>>> u, c = least_squares(f=f, psi=[1, x, x**2], Omega=[1, 2])
>>> print(u)
10*x**2 - 20*x + 9
>>> print(sp.expand(f))
10*x**2 - 20*x + 9

Perfect approximation; the general result

- What if we use \( \psi_i(x)=x^i \) for \( i=0,\ldots,N=40 \)?

- The output from `least_squares` is \( c_i=0 \) for \( i>2 \)

Perfect approximation; proof of the general result

If \( f\in V \), \( f=\sum_{j\in\If}d_j\baspsi_j \), for some \( \sequencei{d} \). Then

$$

\begin{equation*}

b_i = (f,\baspsi_i) = \sum_{j\in\If}d_j(\baspsi_j, \baspsi_i)

= \sum_{j\in\If} d_jA_{i,j}

\end{equation*}

$$

The linear system \( \sum_j A_{i,j}c_j = b_i \), \( i\in\If \), is then

$$

\begin{equation*}

\sum_{j\in\If}c_jA_{i,j} = \sum_{j\in\If}d_jA_{i,j},\quad i\in\If

\end{equation*}

$$

which implies that \( c_i=d_i \) for \( i\in\If \) and \( u \) is identical to \( f \).

Finite-precision/numerical computations

The previous computations were symbolic. What if we solve the linear system numerically with standard arrays?

| exact | sympy | numpy32 | numpy64 |
|------:|------:|--------:|--------:|
| 9 | 9.62 | 5.57 | 8.98 |
| -20 | -23.39 | -7.65 | -19.93 |
| 10 | 17.74 | -4.50 | 9.96 |
| 0 | -9.19 | 4.13 | -0.26 |
| 0 | 5.25 | 2.99 | 0.72 |
| 0 | 0.18 | -1.21 | -0.93 |
| 0 | -2.48 | -0.41 | 0.73 |
| 0 | 1.81 | -0.013 | -0.36 |
| 0 | -0.66 | 0.08 | 0.11 |
| 0 | 0.12 | 0.04 | -0.02 |
| 0 | -0.001 | -0.02 | 0.002 |

- Column 2: `sympy.mpmath.fp.matrix` and `sympy.mpmath.fp.lu_solve`

- Column 3: `numpy` arrays with `numpy.float32` entries

- Column 4: `numpy` arrays with `numpy.float64` entries

Ill-conditioning (1)

Observations:

- Significant round-off errors in the numerical computations (!)

- But if we plot the approximations they look good (!)

Problem: The basis functions \( x^i \) become almost linearly dependent for large \( N \).

Ill-conditioning (2)

- Almost linearly dependent basis functions give almost singular matrices

- Such matrices are said to be ill conditioned, and Gaussian elimination is severely affected by round-off errors

- The basis \( 1, x, x^2, x^3, x^4, \ldots \) is a bad basis

- Polynomials are fine as basis, but the more orthogonal they are, \( (\baspsi_i,\baspsi_j)\approx 0 \), the better
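The growth of the ill-conditioning can be made concrete by computing the condition number of \( A_{i,j}=(x^i,x^j) \). The sketch below assumes the domain \( \Omega=[1,2] \) from the earlier example and uses the closed-form integral \( \int_a^b x^{i+j}dx \); the function name `monomial_gram` is made up for this illustration.

```python
import numpy as np

def monomial_gram(N, a=1.0, b=2.0):
    """A[i,j] = integral of x**i * x**j over [a, b], evaluated analytically."""
    A = np.empty((N + 1, N + 1))
    for i in range(N + 1):
        for j in range(N + 1):
            k = i + j + 1
            A[i, j] = (b**k - a**k) / k
    return A

# Condition numbers for a few basis sizes (computed in float64)
conds = {N: np.linalg.cond(monomial_gram(N)) for N in (2, 5, 10)}
for N, kappa in conds.items():
    print("N=%2d  cond(A) ~ %.1e" % (N, kappa))
```

A large condition number means that round-off errors in \( A \) and \( b \) can be strongly amplified in the computed \( c_i \), which is consistent with the table of coefficients above.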

Fourier series approximation; problem and code

Consider

$$

\begin{equation*}

V = \hbox{span}\,\{ \sin \pi x, \sin 2\pi x,\ldots,\sin (N+1)\pi x\}

\end{equation*}

$$

N = 3

from sympy import sin, pi

psi = [sin(pi*(i+1)*x) for i in range(N+1)]

f = 10*(x-1)**2 - 1

Omega = [0, 1]

u, c = least_squares(f, psi, Omega)

comparison_plot(f, u, Omega)

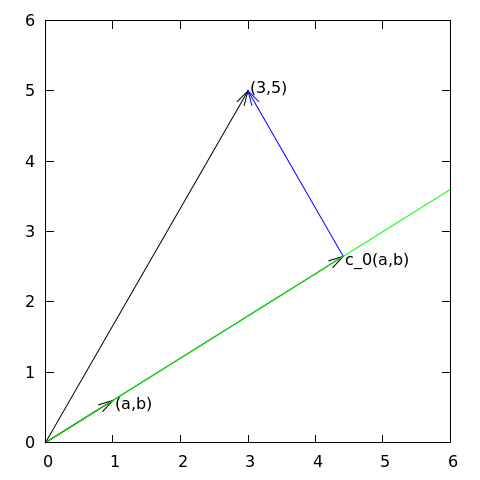

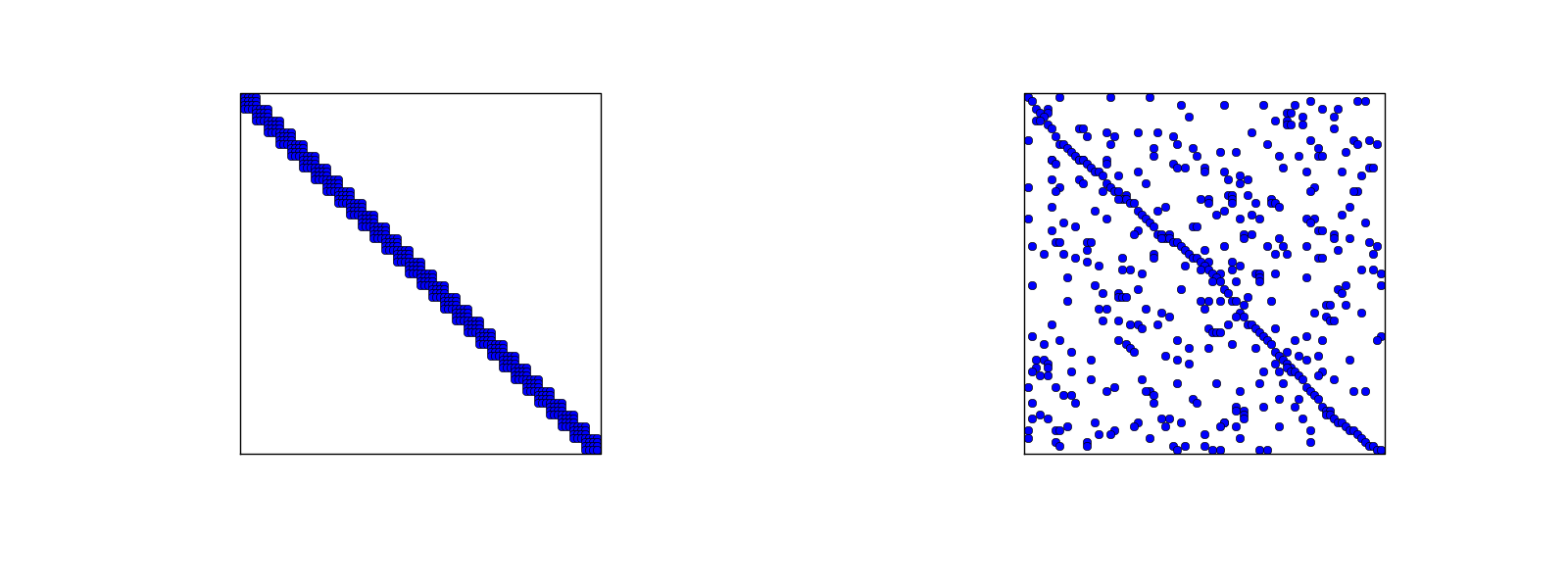

Fourier series approximation; plot

\( N=3 \) vs \( N=11 \):

Fourier series approximation; improvements

- Considerable improvement by \( N=11 \)

- But always discrepancy of \( f(0)-u(0)=9 \) at \( x=0 \), because all the \( \baspsi_i(0)=0 \) and hence \( u(0)=0 \)

- Possible remedy: add a term that leads to correct boundary values

$$

\begin{equation}

u(x) = f(0)(1-x) + xf(1) + \sum_{j\in\If} c_j\baspsi_j(x)

\end{equation}

$$

The extra term ensures \( u(0)=f(0) \) and \( u(1)=f(1) \), which improves the approximation strikingly.

Fourier series approximation; final results

\( N=3 \) vs \( N=11 \):

Orthogonal basis functions

This choice of sine functions as basis functions is popular because

- the basis functions are orthogonal: \( (\baspsi_i,\baspsi_j)=0 \) for \( i\neq j \)

- implying that \( A_{i,j} \) is a diagonal matrix

- implying that we can solve for \( c_i = 2\int_0^1 f(x)\sin ((i+1)\pi x) dx \)

In general for an orthogonal basis, \( A_{i,j} \) is diagonal and we can easily solve for \( c_i \):

$$

c_i = \frac{b_i}{A_{i,i}} = \frac{(f,\baspsi_i)}{(\baspsi_i,\baspsi_i)}

$$
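The orthogonality of the sine basis on \( [0,1] \) and the resulting decoupled formula for \( c_i \) can be verified symbolically. This is a sketch under the assumptions \( N=3 \) and the parabola \( f \) from the earlier examples.

```python
import sympy as sp

x = sp.Symbol('x')
N = 3
psi = [sp.sin((i + 1) * sp.pi * x) for i in range(N + 1)]
f = 10 * (x - 1)**2 - 1

# Coefficient matrix A[i,j] = (psi_i, psi_j) on Omega = [0, 1]
A = sp.Matrix([[sp.integrate(psi[i] * psi[j], (x, 0, 1))
                for j in range(N + 1)] for i in range(N + 1)])

# With a diagonal A each coefficient decouples: c_i = b_i / A[i,i]
c = [sp.integrate(f * psi[i], (x, 0, 1)) / A[i, i] for i in range(N + 1)]

print(A)   # expected to be diagonal with entries 1/2
print(c)
```

Since \( A_{i,i}=1/2 \), dividing by it reproduces the factor 2 in \( c_i = 2\int_0^1 f(x)\sin((i+1)\pi x)dx \).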

The collocation or interpolation method; ideas and math

Here is another idea for approximating \( f(x) \) by \( u(x)=\sum_jc_j\baspsi_j \):

- Force \( u(\xno{i}) = f(\xno{i}) \) at some selected collocation points \( \sequencei{x} \)

- Then \( u \) interpolates \( f \)

- The method is known as interpolation or collocation

$$

\begin{equation}

u(\xno{i}) = \sum_{j\in\If} c_j \baspsi_j(\xno{i}) = f(\xno{i})

\quad i\in\If

\end{equation}

$$

This is a linear system with no need for integration:

$$

\begin{align}

\sum_{j\in\If} A_{i,j}c_j &= b_i,\quad i\in\If\\

A_{i,j} &= \baspsi_j(\xno{i})\\

b_i &= f(\xno{i})

\end{align}

$$

The matrix is not symmetric: \( \baspsi_j(\xno{i})\neq \baspsi_i(\xno{j}) \) in general

The collocation or interpolation method; implementation

points holds the interpolation/collocation points

def interpolation(f, psi, points):
    N = len(psi) - 1
    A = sp.zeros(N+1, N+1)
    b = sp.zeros(N+1, 1)
    x = sp.Symbol('x')
    # Turn psi and f into Python functions
    psi = [sp.lambdify([x], psi[i]) for i in range(N+1)]
    f = sp.lambdify([x], f)
    for i in range(N+1):
        for j in range(N+1):
            A[i,j] = psi[j](points[i])   # A is not symmetric in general
        b[i,0] = f(points[i])
    c = A.LUsolve(b)
    u = 0
    for i in range(len(psi)):
        u += c[i,0]*psi[i](x)
    return u

The collocation or interpolation method; approximating a parabola by linear functions

- Potential difficulty: how to choose \( \xno{i} \)?

- The results are sensitive to the points!

\( (4/3,5/3) \) vs \( (1,2) \):
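The sensitivity to the points can be checked with a few lines of numerics. The sketch below assumes the parabola \( f(x)=10(x-1)^2-1 \) and the basis \( \{1,x\} \) from the earlier example; the helper name `interpolate_line` is hypothetical.

```python
import numpy as np

def f(x):
    return 10 * (x - 1)**2 - 1

def interpolate_line(points):
    """Solve sum_j c_j psi_j(x_i) = f(x_i) with psi_0 = 1, psi_1 = x."""
    pts = np.asarray(points, dtype=float)
    A = np.column_stack([np.ones_like(pts), pts])   # A[i,j] = psi_j(x_i)
    b = f(pts)
    return np.linalg.solve(A, b)

c_inner = interpolate_line([4.0/3, 5.0/3])   # interior points
c_ends = interpolate_line([1.0, 2.0])        # end points

print("interior points: u(x) = %.4f + %.4f x" % tuple(c_inner))
print("end points:      u(x) = %.4f + %.4f x" % tuple(c_ends))
```

Both lines have slope 10, but the constant term differs (\( -119/9 \) versus \( -11 \)), and neither equals the least squares value \( -38/3 \).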

Lagrange polynomials; motivation and ideas

Motivation:

- The interpolation/collocation method avoids integration

- With a diagonal matrix \( A_{i,j} = \baspsi_j(\xno{i}) \) we can solve the linear system by hand

The Lagrange interpolating polynomials \( \baspsi_j \) have the property that

$$ \baspsi_i(\xno{j}) =\delta_{ij},\quad \delta_{ij} =

\left\lbrace\begin{array}{ll}

1, & i=j\\

0, & i\neq j

\end{array}\right.

$$

Hence, \( c_i = f(x_i) \) and

$$

\begin{equation}

u(x) = \sum_{j\in\If} f(\xno{j})\baspsi_j(x)

\end{equation}

$$

- Lagrange polynomials and interpolation/collocation look convenient

- Lagrange polynomials are very much used in the finite element method

Lagrange polynomials; formula and code

$$

\begin{equation}

\baspsi_i(x) =

\prod_{j=0,j\neq i}^N

\frac{x-\xno{j}}{\xno{i}-\xno{j}}

= \frac{x-x_0}{\xno{i}-x_0}\cdots\frac{x-\xno{i-1}}{\xno{i}-\xno{i-1}}\frac{x-\xno{i+1}}{\xno{i}-\xno{i+1}}

\cdots\frac{x-x_N}{\xno{i}-x_N}

\tag{13}

\end{equation}

$$

def Lagrange_polynomial(x, i, points):
    p = 1
    for k in range(len(points)):
        if k != i:
            p *= (x - points[k])/(points[i] - points[k])
    return p

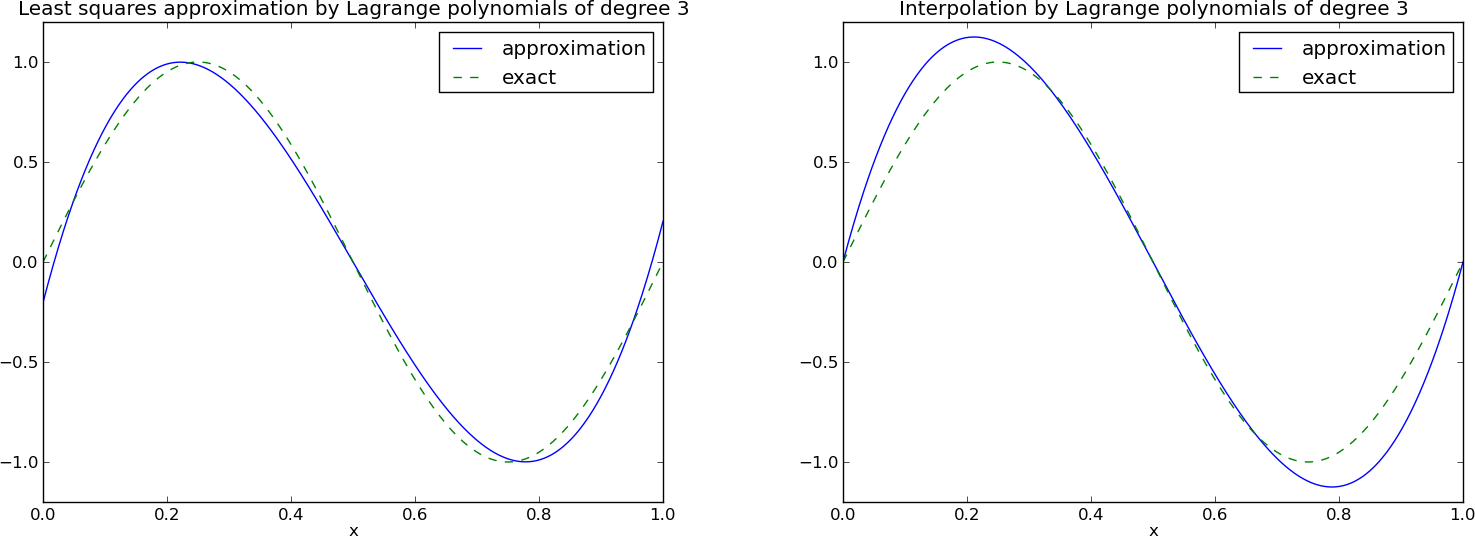

Lagrange polynomials; successful example

Lagrange polynomials; a less successful example

Lagrange polynomials; oscillatory behavior

12 points, degree 11, plot of two of the Lagrange polynomials - note that they are zero at all points except one.

Problem: strong oscillations near the boundaries for larger \( N \) values.

Lagrange polynomials; remedy for strong oscillations

The oscillations can be reduced by a more clever choice of interpolation points, called the Chebyshev nodes:

$$

\begin{equation}

\xno{i} = \half (a+b) + \half(b-a)\cos\left( \frac{2i+1}{2(N+1)}\pi\right),\quad i=0,\ldots,N

\end{equation}

$$

on an interval \( [a,b] \).
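The node formula translates directly into code. A minimal sketch, where the function name `chebyshev_nodes` is an assumption made for this example:

```python
import numpy as np

def chebyshev_nodes(a, b, N):
    """Return the N+1 Chebyshev nodes on [a, b], following the formula above."""
    i = np.arange(N + 1)
    return 0.5 * (a + b) + 0.5 * (b - a) * np.cos((2 * i + 1) * np.pi / (2 * (N + 1)))

nodes = chebyshev_nodes(-1, 1, 11)   # 12 points, as in the degree 11 plots
gaps = np.abs(np.diff(nodes))
print(nodes)
print("gap at the boundary: %.3f, gap in the middle: %.3f" % (gaps[0], gaps[5]))
```

The nodes cluster toward the ends of the interval, which is where the uniformly spaced Lagrange polynomials oscillate the most.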

Lagrange polynomials; recalculation with Chebyshev nodes

Lagrange polynomials; fewer oscillations with Chebyshev nodes

12 points, degree 11, plot of two of the Lagrange polynomials - note that they are zero at all points except one.

Finite element basis functions

The basis functions have so far been global: \( \baspsi_i(x) \neq 0 \) almost everywhere

In the finite element method we use basis functions with local support

- Local support: \( \baspsi_i(x) \neq 0 \) only for \( x \) in a small subdomain of \( \Omega \)

- Typically hat-shaped

- \( u(x) \) based on these \( \baspsi_i \) is a piecewise polynomial defined over many (small) subdomains

- We introduce \( \basphi_i \) as the name of these finite element hat functions (and for now choose \( \baspsi_i=\basphi_i \))

The linear combination of hat functions is a piecewise linear function

Elements and nodes

Split \( \Omega \) into non-overlapping subdomains called elements:

$$

\begin{equation}

\Omega = \Omega^{(0)}\cup \cdots \cup \Omega^{(N_e)}

\end{equation}

$$

On each element, introduce points called nodes: \( \xno{0},\ldots,\xno{N_n} \)

- The finite element basis functions are named \( \basphi_i(x) \)

- \( \basphi_i=1 \) at node \( i \) and 0 at all other nodes

- \( \basphi_i \) is a Lagrange polynomial on each element

- For nodes at the boundary between two elements, \( \basphi_i \) is made up of a Lagrange polynomial over each element

Example on elements with two nodes (P1 elements)

Data structure: `nodes` holds the coordinates of the nodes, `elements` holds the node numbers in each element

nodes = [0, 1.2, 2.4, 3.6, 4.8, 5]

elements = [[0, 1], [1, 2], [2, 3], [3, 4], [4, 5]]

Illustration of two basis functions on the mesh

Example on elements with three nodes (P2 elements)

nodes = [0, 0.125, 0.25, 0.375, 0.5, 0.625, 0.75, 0.875, 1.0]

elements = [[0, 1, 2], [2, 3, 4], [4, 5, 6], [6, 7, 8]]

Some corresponding basis functions (P2 elements)

Examples on elements with four nodes per element (P3 elements)

d = 3 # d+1 nodes per element

num_elements = 4

num_nodes = num_elements*d + 1

nodes = [i*0.5 for i in range(num_nodes)]

elements = [[i*d+j for j in range(d+1)] for i in range(num_elements)]

Some corresponding basis functions (P3 elements)

The numbering does not need to be regular from left to right

nodes = [1.5, 5.5, 4.2, 0.3, 2.2, 3.1]

elements = [[2, 1], [4, 5], [0, 4], [3, 0], [5, 2]]

Interpretation of the coefficients \( c_i \)

Important property: \( c_i \) is the value of \( u \) at node \( i \), \( \xno{i} \):

$$

\begin{equation}

u(\xno{i}) = \sum_{j\in\If} c_j\basphi_j(\xno{i}) =

c_i\basphi_i(\xno{i}) = c_i

\tag{14}

\end{equation}

$$

because \( \basphi_j(\xno{i}) =0 \) if \( i\neq j \)

Properties of the basis functions

- \( \basphi_i(x) \neq 0 \) only on those elements that contain global node \( i \)

- \( \basphi_i(x)\basphi_j(x) \neq 0 \) if and only if \( i \) and \( j \) are global node numbers in the same element

Since \( A_{i,j}=\int\basphi_i\basphi_j\dx \), most of the elements in the coefficient matrix will be zero

How to construct quadratic \( \basphi_i \) (P2 elements)

- Associate Lagrange polynomials with the nodes in an element

- When the polynomial is 1 on the element boundary, combine it with the polynomial in the neighboring element

Example on linear \( \basphi_i \) (P1 elements)

$$

\begin{equation}

\basphi_i(x) = \left\lbrace\begin{array}{ll}

0, & x < \xno{i-1}\\

(x - \xno{i-1})/h,

& \xno{i-1} \leq x < \xno{i}\\

1 -

(x - \xno{i})/h,

& \xno{i} \leq x < \xno{i+1}\\

0, & x\geq \xno{i+1}

\end{array}

\right.

\tag{15}

\end{equation}

$$
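Formula (15) can be evaluated with a short function. The sketch below assumes a uniform mesh with spacing \( h \) (as the formula does); the name `hat` is chosen for this illustration. It also confirms that \( \basphi_i \) is 1 at node \( i \) and 0 at all other nodes.

```python
import numpy as np

def hat(x, i, nodes):
    """P1 basis function phi_i on a uniform mesh, following formula (15)."""
    x = np.asarray(x, dtype=float)
    h = nodes[1] - nodes[0]
    xi = nodes[i]
    left = (x - (xi - h)) / h     # rising part on [x_{i-1}, x_i)
    right = 1 - (x - xi) / h      # falling part on [x_i, x_{i+1})
    return np.where((x >= xi - h) & (x < xi), left,
                    np.where((x >= xi) & (x < xi + h), right, 0.0))

nodes = np.linspace(0, 1, 5)
# Row i holds phi_i evaluated at all nodes
values = np.array([hat(nodes, i, nodes) for i in range(len(nodes))])
print(values)
```

For the boundary nodes the same formula is used, with the part outside the mesh simply never evaluated.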

Example on cubic \( \basphi_i \) (P3 elements)

Calculating the linear system for \( c_i \)

Computing a specific matrix entry (1)

\( A_{2,3}=\int_\Omega\basphi_2\basphi_3 dx \): \( \basphi_2\basphi_3\neq 0 \) only over element 2. There,

$$ \basphi_3(x) = (x-x_2)/h,\quad \basphi_2(x) = 1- (x-x_2)/h$$

$$

A_{2,3} = \int_\Omega \basphi_2\basphi_{3}\dx =

\int_{\xno{2}}^{\xno{3}}

\left(1 - \frac{x - \xno{2}}{h}\right) \frac{x - x_{2}}{h}

\dx = \frac{h}{6}

$$

Computing a specific matrix entry (2)

$$ A_{2,2} =

\int_{\xno{1}}^{\xno{2}}

\left(\frac{x - \xno{1}}{h}\right)^2\dx +

\int_{\xno{2}}^{\xno{3}}

\left(1 - \frac{x - \xno{2}}{h}\right)^2\dx

= \frac{2h}{3}

$$

Calculating a general row in the matrix; figure

$$ A_{i,i-1} = \int_\Omega \basphi_i\basphi_{i-1}\dx = \hbox{?}$$

Calculating a general row in the matrix; details

$$

\begin{align*}

A_{i,i-1} &= \int_\Omega \basphi_i\basphi_{i-1}\dx\\

&=

\underbrace{\int_{\xno{i-2}}^{\xno{i-1}} \basphi_i\basphi_{i-1}\dx}_{\basphi_i=0} +

\int_{\xno{i-1}}^{\xno{i}} \basphi_i\basphi_{i-1}\dx +

\underbrace{\int_{\xno{i}}^{\xno{i+1}} \basphi_i\basphi_{i-1}\dx}_{\basphi_{i-1}=0}\\

&= \int_{\xno{i-1}}^{\xno{i}}

\underbrace{\left(\frac{x - \xno{i-1}}{h}\right)}_{\basphi_i(x)}

\underbrace{\left(1 - \frac{x - \xno{i-1}}{h}\right)}_{\basphi_{i-1}(x)} \dx =

\frac{h}{6}

\end{align*}

$$

- \( A_{i,i+1}=A_{i,i-1} \) due to symmetry

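- \( A_{i,i}=2h/3 \) for interior nodes (same calculation as for \( A_{2,2} \): a contribution of \( h/3 \) from each of the two elements sharing node \( i \))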

- \( A_{0,0}=A_{N,N}=h/3 \) (only one element)

Calculation of the right-hand side

$$

\begin{equation}

b_i = \int_\Omega\basphi_i(x)f(x)\dx

= \int_{\xno{i-1}}^{\xno{i}} \frac{x - \xno{i-1}}{h} f(x)\dx

+ \int_{x_{i}}^{\xno{i+1}} \left(1 - \frac{x - x_{i}}{h}\right) f(x)

\dx

\tag{16}

\end{equation}

$$

Need a specific \( f(x) \) to do more...

Specific example with two elements; linear system and solution

- \( f(x)=x(1-x) \) on \( \Omega=[0,1] \)

- Two equal-sized elements \( [0,0.5] \) and \( [0.5,1] \)

$$

\begin{equation*}

A = \frac{h}{6}\left(\begin{array}{ccc}

2 & 1 & 0\\

1 & 4 & 1\\

0 & 1 & 2

\end{array}\right),\quad

b = \frac{h^2}{12}\left(\begin{array}{c}

2 - h\\

12 - 14h\\

10 -17h

\end{array}\right)

\end{equation*}

$$

$$

\begin{equation*} c_0 = \frac{h^2}{6},\quad c_1 = h - \frac{5}{6}h^2,\quad

c_2 = 2h - \frac{23}{6}h^2

\end{equation*}

$$
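The closed-form coefficients can be cross-checked numerically for \( h=0.5 \). In this sketch the right-hand side entries are the integrals \( b_i=\int_\Omega f\basphi_i dx \) for \( f(x)=x(1-x) \), written out as \( h^2/6-h^3/12 \), \( h^2-7h^3/6 \) and \( 5h^2/6-17h^3/12 \).

```python
import numpy as np

h = 0.5   # two equal-sized elements on [0, 1]

A = h / 6 * np.array([[2.0, 1.0, 0.0],
                      [1.0, 4.0, 1.0],
                      [0.0, 1.0, 2.0]])

# b_i = integral of f*phi_i for f(x) = x(1-x), evaluated in closed form
b = np.array([h**2 / 6 - h**3 / 12,
              h**2 - 7 * h**3 / 6,
              5 * h**2 / 6 - 17 * h**3 / 12])

c = np.linalg.solve(A, b)

# Closed-form solution quoted above
c_formula = np.array([h**2 / 6, h - 5 * h**2 / 6, 2 * h - 23 * h**2 / 6])
print(c, c_formula)
```

For \( h=0.5 \) the solution is symmetric about the middle node, as expected for a symmetric \( f \) on a symmetric mesh.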

Specific example with two elements; plot

$$

\begin{equation*} u(x)=c_0\basphi_0(x) + c_1\basphi_1(x) + c_2\basphi_2(x)\end{equation*}

$$

Specific example: what about four elements?

Assembly of elementwise computations

Split the integrals into elementwise integrals

$$

\begin{equation}

A_{i,j} = \int_\Omega\basphi_i\basphi_jdx =

\sum_{e} \int_{\Omega^{(e)}} \basphi_i\basphi_jdx,\quad

A^{(e)}_{i,j}=\int_{\Omega^{(e)}} \basphi_i\basphi_jdx

\tag{17}

\end{equation}

$$

Important:

- \( A^{(e)}_{i,j}\neq 0 \) if and only if \( i \) and \( j \) are nodes in element \( e \) (otherwise no overlap between the basis functions)

- all the nonzero elements in \( A^{(e)}_{i,j} \) are collected in an element matrix

The element matrix

$$

\tilde A^{(e)} = \{ \tilde A^{(e)}_{r,s}\},\quad

\tilde A^{(e)}_{r,s} =

\int_{\Omega^{(e)}}\basphi_{q(e,r)}\basphi_{q(e,s)}dx,

\quad r,s\in\Ifd=\{0,\ldots,d\}

$$

- \( r,s \) run over local node numbers in an element; \( i,j \) run over global node numbers

- \( i=q(e,r) \): mapping of local node number \( r \) in element \( e \) to the global node number \( i \) (math equivalent to `i=elements[e][r]`)

- Add \( \tilde A^{(e)}_{r,s} \) into the global \( A_{i,j} \) (assembly)

$$

\begin{equation}

A_{q(e,r),q(e,s)} := A_{q(e,r),q(e,s)} + \tilde A^{(e)}_{r,s},\quad

r,s\in\Ifd

\end{equation}

$$
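The assembly rule is a double loop over local node numbers. The following sketch is restricted to P1 elements and assumes the element matrix \( \frac{h}{6}\left(\begin{smallmatrix}2 & 1\\ 1 & 2\end{smallmatrix}\right) \), which follows from the entries \( h/3 \) and \( h/6 \) computed earlier; the function name `assemble_P1_matrix` is made up for the example.

```python
import numpy as np

def assemble_P1_matrix(nodes, elements):
    """Assemble A[i,j] = integral of phi_i*phi_j for P1 elements (a sketch)."""
    A = np.zeros((len(nodes), len(nodes)))
    for local_nodes in elements:
        h = abs(nodes[local_nodes[1]] - nodes[local_nodes[0]])   # element length
        A_e = h / 6 * np.array([[2.0, 1.0], [1.0, 2.0]])         # P1 element matrix
        for r, i in enumerate(local_nodes):        # i = q(e, r)
            for s, j in enumerate(local_nodes):    # j = q(e, s)
                A[i, j] += A_e[r, s]
    return A

# Regular numbering: two elements on [0, 1]
A = assemble_P1_matrix(nodes=[0, 0.5, 1], elements=[[0, 1], [1, 2]])
print(A)

# Irregular numbering from the earlier example works the same way
A_irr = assemble_P1_matrix(nodes=[1.5, 5.5, 4.2, 0.3, 2.2, 3.1],
                           elements=[[2, 1], [4, 5], [0, 4], [3, 0], [5, 2]])
print(A_irr)
```

Only the index mapping changes between the two meshes; the element-level computation is identical.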

Illustration of the matrix assembly: regularly numbered P1 elements

Illustration of the matrix assembly: regularly numbered P3 elements

Illustration of the matrix assembly: irregularly numbered P1 elements

Assembly of the right-hand side

$$

\begin{equation}

b_i = \int_\Omega f(x)\basphi_i(x)dx =

\sum_{e} \int_{\Omega^{(e)}} f(x)\basphi_i(x)dx,\quad

b^{(e)}_{i}=\int_{\Omega^{(e)}} f(x)\basphi_i(x)dx

\end{equation}

$$

Important:

- \( b_i^{(e)}\neq 0 \) if and only if global node \( i \) is a node in element \( e \) (otherwise \( \basphi_i=0 \))

- The \( d+1 \) nonzero \( b_i^{(e)} \) can be collected in an element vector \( \tilde b^{(e)}=\{ \tilde b_r^{(e)}\} \), \( r\in\Ifd \)

Assembly:

$$

\begin{equation}

b_{q(e,r)} := b_{q(e,r)} + \tilde b^{(e)}_{r},\quad

r\in\Ifd

\end{equation}

$$

Mapping to a reference element

Instead of computing

$$

\begin{equation*} \tilde A^{(e)}_{r,s} = \int_{\Omega^{(e)}}\basphi_{q(e,r)}(x)\basphi_{q(e,s)}(x)dx

= \int_{x_L}^{x_R}\basphi_{q(e,r)}(x)\basphi_{q(e,s)}(x)dx

\end{equation*}

$$

we now map \( [x_L, x_R] \) to a standardized reference element domain \( [-1,1] \) with local coordinate \( X \)

Affine mapping

$$

\begin{equation}

x = \half (x_L + x_R) + \half (x_R - x_L)X

\tag{18}

\end{equation}

$$

or rewritten as

$$

\begin{equation}

x = x_m + {\half}hX, \qquad x_m=(x_L+x_R)/2

\tag{19}

\end{equation}

$$
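The mapping (18) and its inverse are one-liners. A small sketch, with the function names `to_physical` and `to_reference` chosen for this illustration:

```python
def to_physical(X, xL, xR):
    """Map reference coordinate X in [-1, 1] to x in [xL, xR], cf. (18)."""
    return 0.5 * (xL + xR) + 0.5 * (xR - xL) * X

def to_reference(x, xL, xR):
    """Inverse of the affine mapping: x in [xL, xR] to X in [-1, 1]."""
    return (2 * x - (xL + xR)) / (xR - xL)

xL, xR = 0.1, 0.2
for X in (-1, 0, 1):
    x = to_physical(X, xL, xR)
    print("X = %2d  ->  x = %.3f  ->  X = %.3f" % (X, x, to_reference(x, xL, xR)))
```

The end points of the reference element map to the element end points and \( X=0 \) maps to the midpoint \( x_m \).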

Integral transformation

Reference element integration: just change integration variable from \( x \) to \( X \). Introduce local basis function

$$

\begin{equation}

\refphi_r(X) = \basphi_{q(e,r)}(x(X))

\end{equation}

$$

$$

\begin{equation}

\tilde A^{(e)}_{r,s} = \int_{\Omega^{(e)}}\basphi_{q(e,r)}(x)\basphi_{q(e,s)}(x)dx

= \int\limits_{-1}^1 \refphi_r(X)\refphi_s(X)\underbrace{\frac{dx}{dX}}_{\det J = h/2}dX

= \int\limits_{-1}^1 \refphi_r(X)\refphi_s(X)\det J\,dX

\end{equation}

$$

$$

\begin{equation}

\tilde b^{(e)}_{r} = \int_{\Omega^{(e)}}f(x)\basphi_{q(e,r)}(x)dx

= \int\limits_{-1}^1 f(x(X))\refphi_r(X)\det J\,dX

\tag{20}

\end{equation}

$$

Advantages of the reference element

- Always the same domain for integration: \( [-1,1] \)

- We only need formulas for \( \refphi_r(X) \) over one element (no piecewise polynomial definition)

- \( \refphi_r(X) \) is the same for all elements: no dependence on element length and location, which is "factored out" in the mapping and \( \det J \)

Standardized basis functions for P1 elements

$$

\begin{align}

\refphi_0(X) &= \half (1 - X)

\tag{21}\\

\refphi_1(X) &= \half (1 + X)

\tag{22}

\end{align}

$$

Standardized basis functions for P2 elements

P2 elements:

$$

\begin{align}

\refphi_0(X) &= \half (X-1)X\\

\refphi_1(X) &= 1 - X^2\\

\refphi_2(X) &= \half (X+1)X

\end{align}

$$

Easy to generalize to arbitrary order!
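The P2 reference basis functions can be checked against the defining property of Lagrange polynomials. This sketch assumes the reference nodes \( X=-1,0,1 \) and verifies that each function is 1 at its own node and 0 at the others, and that the three functions sum to 1.

```python
import sympy as sp

X = sp.Symbol('X')
half = sp.Rational(1, 2)

# P2 reference basis functions as listed above
phi = [half * (X - 1) * X, 1 - X**2, half * (X + 1) * X]
ref_nodes = [-1, 0, 1]

# table[r, k] = phi_r evaluated at reference node k
table = sp.Matrix([[phi[r].subs(X, ref_nodes[k]) for k in range(3)]
                   for r in range(3)])
partition = sp.expand(sum(phi))

print(table)       # identity matrix if the Kronecker delta property holds
print(partition)   # the basis functions sum to 1
```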

Integration over a reference element; element matrix

P1 elements and \( f(x)=x(1-x) \).

$$

\begin{align}

\tilde A^{(e)}_{0,0}

&= \int_{-1}^1 \refphi_0(X)\refphi_0(X)\frac{h}{2} dX\nonumber\\

&=\int_{-1}^1 \half(1-X)\half(1-X) \frac{h}{2} dX =

\frac{h}{8}\int_{-1}^1 (1-X)^2 dX = \frac{h}{3}

\tag{23}\\

\tilde A^{(e)}_{1,0}

&= \int_{-1}^1 \refphi_1(X)\refphi_0(X)\frac{h}{2} dX\nonumber\\

&=\int_{-1}^1 \half(1+X)\half(1-X) \frac{h}{2} dX =

\frac{h}{8}\int_{-1}^1 (1-X^2) dX = \frac{h}{6}\\

\tilde A^{(e)}_{0,1} &= \tilde A^{(e)}_{1,0}

\tag{24}\\

\tilde A^{(e)}_{1,1}

&= \int_{-1}^1 \refphi_1(X)\refphi_1(X)\frac{h}{2} dX\nonumber\\

&=\int_{-1}^1 \half(1+X)\half(1+X) \frac{h}{2} dX =

\frac{h}{8}\int_{-1}^1 (1+X)^2 dX = \frac{h}{3}

\tag{25}

\end{align}

$$

Integration over a reference element; element vector

$$

\begin{align}

\tilde b^{(e)}_{0}

&= \int_{-1}^1 f(x(X))\refphi_0(X)\frac{h}{2} dX\nonumber\\

&= \int_{-1}^1 (x_m + \half hX)(1-(x_m + \half hX))

\half(1-X)\frac{h}{2} dX \nonumber\\

&= - \frac{1}{24} h^{3} + \frac{1}{6} h^{2} x_{m} - \frac{1}{12} h^{2} - \half h x_{m}^{2} + \half h x_{m}

\tag{26}\\

\tilde b^{(e)}_{1}

&= \int_{-1}^1 f(x(X))\refphi_1(X)\frac{h}{2} dX\nonumber\\

&= \int_{-1}^1 (x_m + \half hX)(1-(x_m + \half hX))

\half(1+X)\frac{h}{2} dX \nonumber\\

&= - \frac{1}{24} h^{3} - \frac{1}{6} h^{2} x_{m} + \frac{1}{12} h^{2} -

\half h x_{m}^{2} + \half h x_{m}

\end{align}

$$

\( x_m \): element midpoint.

Tedious calculations! Let's use symbolic software

>>> import sympy as sp

>>> x, x_m, h, X = sp.symbols('x x_m h X')

>>> sp.integrate(h/8*(1-X)**2, (X, -1, 1))

h/3

>>> sp.integrate(h/8*(1+X)*(1-X), (X, -1, 1))

h/6

>>> x = x_m + h/2*X

>>> b_0 = sp.integrate(h/4*x*(1-x)*(1-X), (X, -1, 1))

>>> print(b_0)

-h**3/24 + h**2*x_m/6 - h**2/12 - h*x_m**2/2 + h*x_m/2

Can print out in LaTeX too (convenient for copying into reports):

>>> print(sp.latex(b_0, mode='plain'))

- \frac{1}{24} h^{3} + \frac{1}{6} h^{2} x_{m}

- \frac{1}{12} h^{2} - \half h x_{m}^{2}

+ \half h x_{m}

Implementation

- Coming functions appear in fe_approx1D.py

- Functions can operate in symbolic or numeric mode

- The code documents all steps in finite element calculations!

Compute finite element basis functions in the reference element

Let \( \refphi_r(X) \) be a Lagrange polynomial of degree d:

import sympy as sp
import numpy as np

def phi_r(r, X, d):
    if isinstance(X, sp.Symbol):
        h = sp.Rational(1, d)  # half the node spacing on [-1, 1]
        nodes = [2*i*h - 1 for i in range(d+1)]
    else:
        # assume X is numeric: use floats for nodes
        nodes = np.linspace(-1, 1, d+1)
    return Lagrange_polynomial(X, r, nodes)

def Lagrange_polynomial(x, i, points):
    p = 1
    for k in range(len(points)):
        if k != i:
            p *= (x - points[k])/(points[i] - points[k])
    return p

def basis(d=1):
    """Return the complete basis."""
    X = sp.Symbol('X')
    phi = [phi_r(r, X, d) for r in range(d+1)]
    return phi

Compute the element matrix

def element_matrix(phi, Omega_e, symbolic=True):
    n = len(phi)
    A_e = sp.zeros(n, n)
    X = sp.Symbol('X')
    if symbolic:
        h = sp.Symbol('h')
    else:
        h = Omega_e[1] - Omega_e[0]
    detJ = h/2  # dx/dX
    for r in range(n):
        # Symmetric matrix: compute the upper triangle and mirror it
        for s in range(r, n):
            A_e[r,s] = sp.integrate(phi[r]*phi[s]*detJ, (X, -1, 1))
            A_e[s,r] = A_e[r,s]
    return A_e

Example on symbolic vs numeric element matrix

>>> from fe_approx1D import *

>>> phi = basis(d=1)

>>> phi

[1/2 - X/2, 1/2 + X/2]

>>> element_matrix(phi, Omega_e=[0.1, 0.2], symbolic=True)

[h/3, h/6]

[h/6, h/3]

>>> element_matrix(phi, Omega_e=[0.1, 0.2], symbolic=False)

[0.0333333333333333, 0.0166666666666667]

[0.0166666666666667, 0.0333333333333333]

Compute the element vector

def element_vector(f, phi, Omega_e, symbolic=True):
    n = len(phi)
    b_e = sp.zeros(n, 1)
    # Make f a function of X
    X = sp.Symbol('X')
    if symbolic:
        h = sp.Symbol('h')
    else:
        h = Omega_e[1] - Omega_e[0]
    x = (Omega_e[0] + Omega_e[1])/2 + h/2*X  # mapping
    f = f.subs('x', x)  # substitute mapping formula for x
    detJ = h/2  # dx/dX
    for r in range(n):
        b_e[r] = sp.integrate(f*phi[r]*detJ, (X, -1, 1))
    return b_e

Note f.subs('x', x): replace x by \( x(X) \) such that f contains X

Fallback on numerical integration if symbolic integration fails

- Element matrix: only polynomials and `sympy` always succeeds

- Element vector: \( \int f\refphi \dx \) can fail (`sympy` then returns an `Integral` object instead of a number)

def element_vector(f, phi, Omega_e, symbolic=True):
    ...
        I = sp.integrate(f*phi[r]*detJ, (X, -1, 1))  # try...
        if isinstance(I, sp.Integral):
            h = Omega_e[1] - Omega_e[0]  # Ensure h is numerical
            detJ = h/2
            integrand = sp.lambdify([X], f*phi[r]*detJ)
            I = mpmath.quad(integrand, [-1, 1])  # assumes "import mpmath"
        b_e[r] = I
    ...

Linear system assembly and solution

def assemble(nodes, elements, phi, f, symbolic=True):
    N_n, N_e = len(nodes), len(elements)
    if symbolic:
        A = sp.zeros(N_n, N_n)
        b = sp.zeros(N_n, 1)
    else:
        A = np.zeros((N_n, N_n))
        b = np.zeros((N_n, 1))
    for e in range(N_e):
        Omega_e = [nodes[elements[e][0]], nodes[elements[e][-1]]]
        A_e = element_matrix(phi, Omega_e, symbolic)
        b_e = element_vector(f, phi, Omega_e, symbolic)
        # Add local contributions into the global matrix and vector
        for r in range(len(elements[e])):
            for s in range(len(elements[e])):
                A[elements[e][r],elements[e][s]] += A_e[r,s]
            b[elements[e][r]] += b_e[r]
    return A, b

Linear system solution

if symbolic:
    c = A.LUsolve(b)           # sympy arrays, symbolic Gaussian elim.
else:
    c = np.linalg.solve(A, b)  # numpy arrays, numerical solve

Note: the symbolic computation of A and b and the symbolic

solution can be very tedious.

Example on computing symbolic approximations

>>> h, x = sp.symbols('h x')

>>> nodes = [0, h, 2*h]

>>> elements = [[0, 1], [1, 2]]

>>> phi = basis(d=1)

>>> f = x*(1-x)

>>> A, b = assemble(nodes, elements, phi, f, symbolic=True)

>>> A

[h/3, h/6, 0]

[h/6, 2*h/3, h/6]

[ 0, h/6, h/3]

>>> b

[ h**2/6 - h**3/12]

[ h**2 - 7*h**3/6]

[5*h**2/6 - 17*h**3/12]

>>> c = A.LUsolve(b)

>>> c

[ h**2/6]

[12*(7*h**2/12 - 35*h**3/72)/(7*h)]

[ 7*(4*h**2/7 - 23*h**3/21)/(2*h)]

Example on computing numerical approximations

>>> nodes = [0, 0.5, 1]

>>> elements = [[0, 1], [1, 2]]

>>> phi = basis(d=1)

>>> x = sp.Symbol('x')

>>> f = x*(1-x)

>>> A, b = assemble(nodes, elements, phi, f, symbolic=False)

>>> A

[ 0.166666666666667, 0.0833333333333333, 0]

[0.0833333333333333, 0.333333333333333, 0.0833333333333333]

[ 0, 0.0833333333333333, 0.166666666666667]

>>> b

[ 0.03125]

[0.104166666666667]

[ 0.03125]

>>> c = A.LUsolve(b)

>>> c

[0.0416666666666666]

[ 0.291666666666667]

[0.0416666666666666]
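The numerical example can be reproduced by hand-style assembly in plain Python. This is only a sketch under stated assumptions: P1 elements, the known element matrix h/6 [[2, 1], [1, 2]], and Simpson's rule for the element vector (exact here, since f times a linear basis function is cubic). The function element_contributions is hypothetical and not part of fe_approx1D.

```python
from fractions import Fraction as F

def f(x):
    return x * (1 - x)

nodes = [F(0), F(1, 2), F(1)]
elements = [[0, 1], [1, 2]]

def element_contributions(xL, xR):
    """P1 element matrix and vector on [xL, xR]."""
    h = xR - xL
    A_e = [[h / 3, h / 6], [h / 6, h / 3]]
    phi = [lambda x: (xR - x) / h, lambda x: (x - xL) / h]
    xm = (xL + xR) / 2
    # Simpson's rule is exact here because f*phi_r is a cubic
    b_e = [h / 6 * sum(w * f(x) * phi_r(x)
                       for w, x in ((1, xL), (4, xm), (1, xR)))
           for phi_r in phi]
    return A_e, b_e

n = len(nodes)
A = [[F(0)] * n for _ in range(n)]
b = [F(0)] * n
for dofs in elements:
    A_e, b_e = element_contributions(nodes[dofs[0]], nodes[dofs[-1]])
    for r, i in enumerate(dofs):
        b[i] += b_e[r]
        for s, j in enumerate(dofs):
            A[i][j] += A_e[r][s]

c = [F(1, 24), F(7, 24), F(1, 24)]  # the printed solution, written as fractions
residual = [sum(A[i][j] * c[j] for j in range(n)) - b[i] for i in range(n)]
print(b, residual)
```

The assembled right-hand side is (1/32, 5/48, 1/32), matching the decimals 0.03125 and 0.104166..., and the residual A c - b vanishes for c = (1/24, 7/24, 1/24).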

The structure of the coefficient matrix

>>> d=1; N_e=8; Omega=[0,1] # 8 linear elements on [0,1]

>>> phi = basis(d)

>>> f = x*(1-x)

>>> nodes, elements = mesh_symbolic(N_e, d, Omega)

>>> A, b = assemble(nodes, elements, phi, f, symbolic=True)

>>> A

[h/3, h/6, 0, 0, 0, 0, 0, 0, 0]

[h/6, 2*h/3, h/6, 0, 0, 0, 0, 0, 0]

[ 0, h/6, 2*h/3, h/6, 0, 0, 0, 0, 0]

[ 0, 0, h/6, 2*h/3, h/6, 0, 0, 0, 0]

[ 0, 0, 0, h/6, 2*h/3, h/6, 0, 0, 0]

[ 0, 0, 0, 0, h/6, 2*h/3, h/6, 0, 0]

[ 0, 0, 0, 0, 0, h/6, 2*h/3, h/6, 0]

[ 0, 0, 0, 0, 0, 0, h/6, 2*h/3, h/6]

[ 0, 0, 0, 0, 0, 0, 0, h/6, h/3]

Note: do this by hand to understand what is going on!

General result: the coefficient matrix is sparse

- Sparse = most of the entries are zeros

- Below: P1 elements

$$

\begin{equation}

A = \frac{h}{6}

\left(

\begin{array}{cccccccccc}

2 & 1 & 0

&\cdots & \cdots & \cdots & \cdots & \cdots & 0 \\

1 & 4 & 1 & \ddots & & & & & \vdots \\

0 & 1 & 4 & 1 &

\ddots & & & & \vdots \\

\vdots & \ddots & & \ddots & \ddots & 0 & & & \vdots \\

\vdots & & \ddots & \ddots & \ddots & \ddots & \ddots & & \vdots \\

\vdots & & & 0 & 1 & 4 & 1 & \ddots & \vdots \\

\vdots & & & & \ddots & \ddots & \ddots &\ddots & 0 \\

\vdots & & & & &\ddots & 1 & 4 & 1 \\

0 &\cdots & \cdots &\cdots & \cdots & \cdots & 0 & 1 & 2

\end{array}

\right)

\end{equation}

$$

Exemplifying the sparsity for P2 elements

$$

\begin{equation}

A = \frac{h}{30}

\left(

\begin{array}{ccccccccc}

4 & 2 & -1 & 0 & 0 & 0 & 0 & 0 & 0\\
2 & 16 & 2 & 0 & 0 & 0 & 0 & 0 & 0\\
-1 & 2 & 8 & 2 & -1 & 0 & 0 & 0 & 0\\
0 & 0 & 2 & 16 & 2 & 0 & 0 & 0 & 0\\
0 & 0 & -1 & 2 & 8 & 2 & -1 & 0 & 0\\
0 & 0 & 0 & 0 & 2 & 16 & 2 & 0 & 0\\
0 & 0 & 0 & 0 & -1 & 2 & 8 & 2 & -1\\
0 & 0 & 0 & 0 & 0 & 0 & 2 & 16 & 2\\
0 & 0 & 0 & 0 & 0 & 0 & -1 & 2 & 4

\end{array}

\right)

\end{equation}

$$

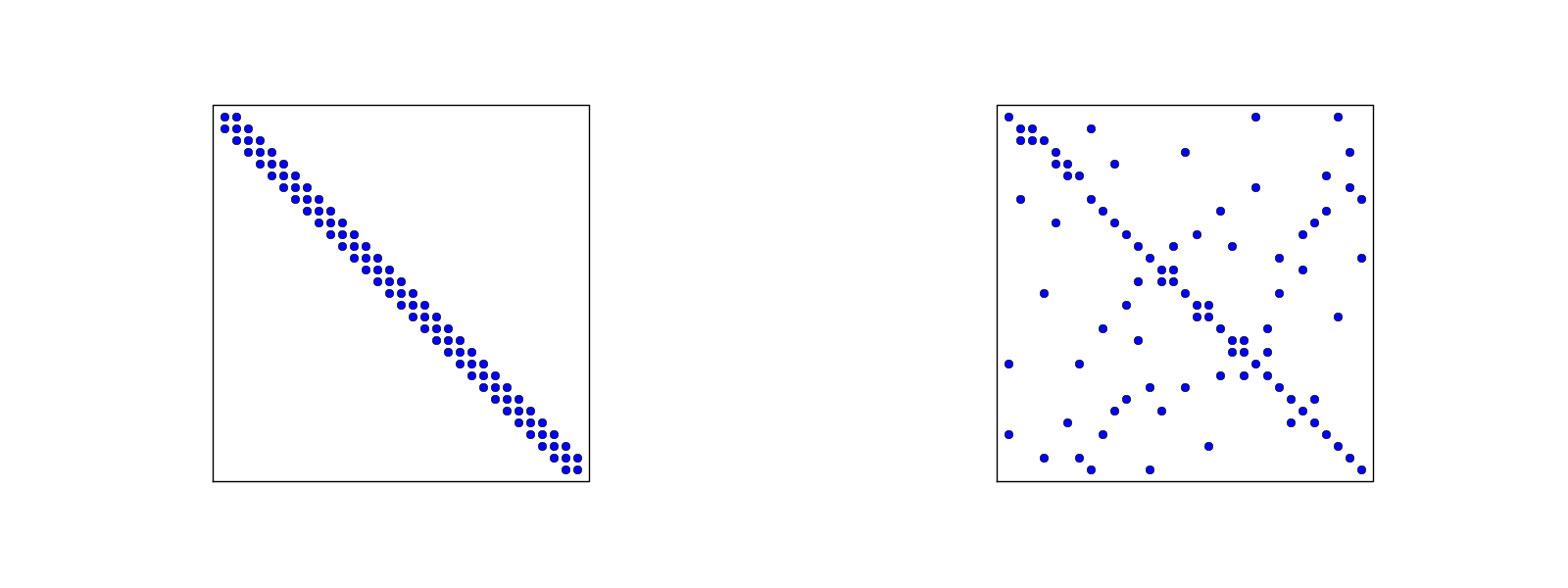

Matrix sparsity pattern for regular/random numbering of P1 elements

- Left: number nodes and elements from left to right

- Right: number nodes and elements arbitrarily

Matrix sparsity pattern for regular/random numbering of P3 elements

- Left: number nodes and elements from left to right

- Right: number nodes and elements arbitrarily

Sparse matrix storage and solution

The minimum storage requirements for the coefficient matrix \( A_{i,j} \):

- P1 elements: at most 3 nonzero entries per row

- P2 elements: at most 5 nonzero entries per row

- P3 elements: at most 7 nonzero entries per row

- It is important to utilize sparse storage and sparse solvers

- In Python: the scipy.sparse package
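To illustrate why sparse storage pays off, the sketch below stores only the three diagonals of the P1 matrix and solves the system with the Thomas algorithm. This is a hand-written stand-in chosen for illustration, not the scipy.sparse interface; the function names are hypothetical.

```python
from fractions import Fraction as F

def p1_mass_diagonals(n_nodes, h):
    """Return (lower, diag, upper) of the P1 matrix h/6*tridiag(1, 4, 1)."""
    diag = [2 * h / 3] * n_nodes
    diag[0] = diag[-1] = h / 3          # boundary nodes belong to one cell only
    off = [h / 6] * (n_nodes - 1)
    return off, diag, list(off)

def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm: Gaussian elimination restricted to the three diagonals."""
    n = len(diag)
    d, r = list(diag), list(rhs)
    for i in range(1, n):               # forward elimination
        m = lower[i - 1] / d[i - 1]
        d[i] -= m * upper[i - 1]
        r[i] -= m * r[i - 1]
    x = [None] * n
    x[-1] = r[-1] / d[-1]
    for i in range(n - 2, -1, -1):      # back substitution
        x[i] = (r[i] - upper[i] * x[i + 1]) / d[i]
    return x

lower, diag, upper = p1_mass_diagonals(3, F(1, 2))
b = [F(1, 32), F(5, 48), F(1, 32)]      # (f, phi_i) for f = x(1-x), nodes 0, 1/2, 1
c = solve_tridiagonal(lower, diag, upper, b)
print(c)
```

Storage is 3n - 2 numbers instead of n^2, and the work grows linearly with the number of nodes rather than cubically as in dense Gaussian elimination.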

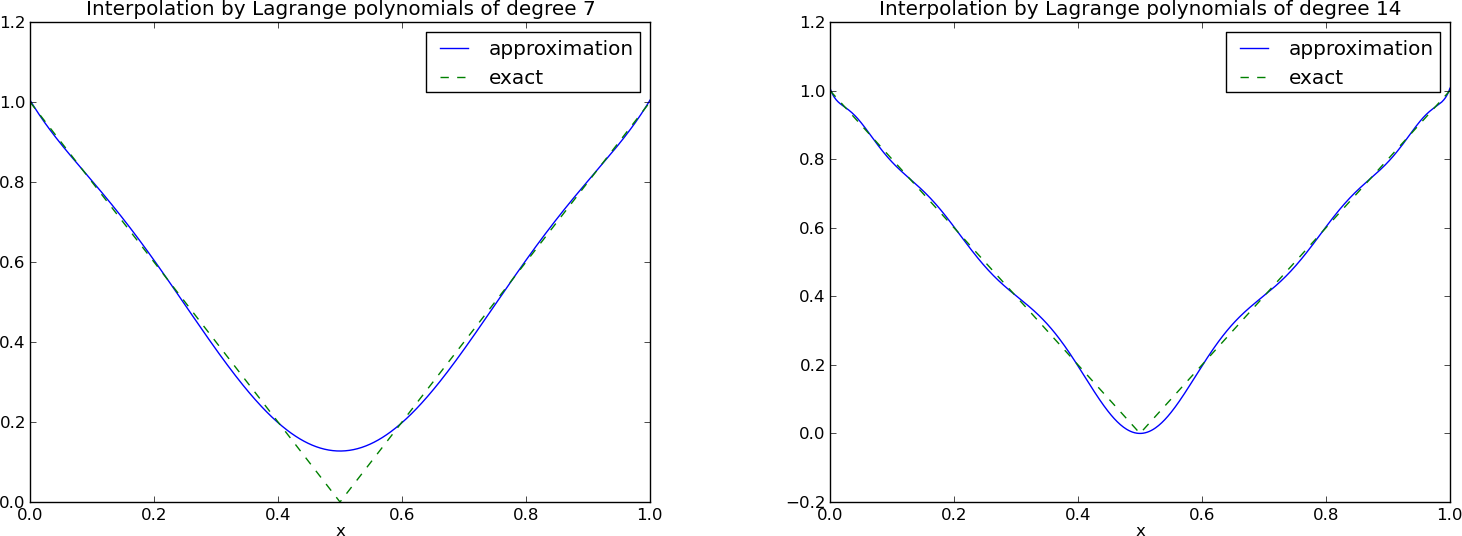

Approximate \( f\sim x^9 \) by various elements; code

Compute a mesh with \( N_e \) elements, basis functions of degree \( d \), and approximate a given symbolic expression \( f(x) \) by a finite element expansion \( u(x) = \sum_jc_j\basphi_j(x) \):

import sympy as sp

from fe_approx1D import approximate

x = sp.Symbol('x')

approximate(f=x*(1-x)**8, symbolic=False, d=1, N_e=4)

approximate(f=x*(1-x)**8, symbolic=False, d=2, N_e=2)

approximate(f=x*(1-x)**8, symbolic=False, d=1, N_e=8)

approximate(f=x*(1-x)**8, symbolic=False, d=2, N_e=4)

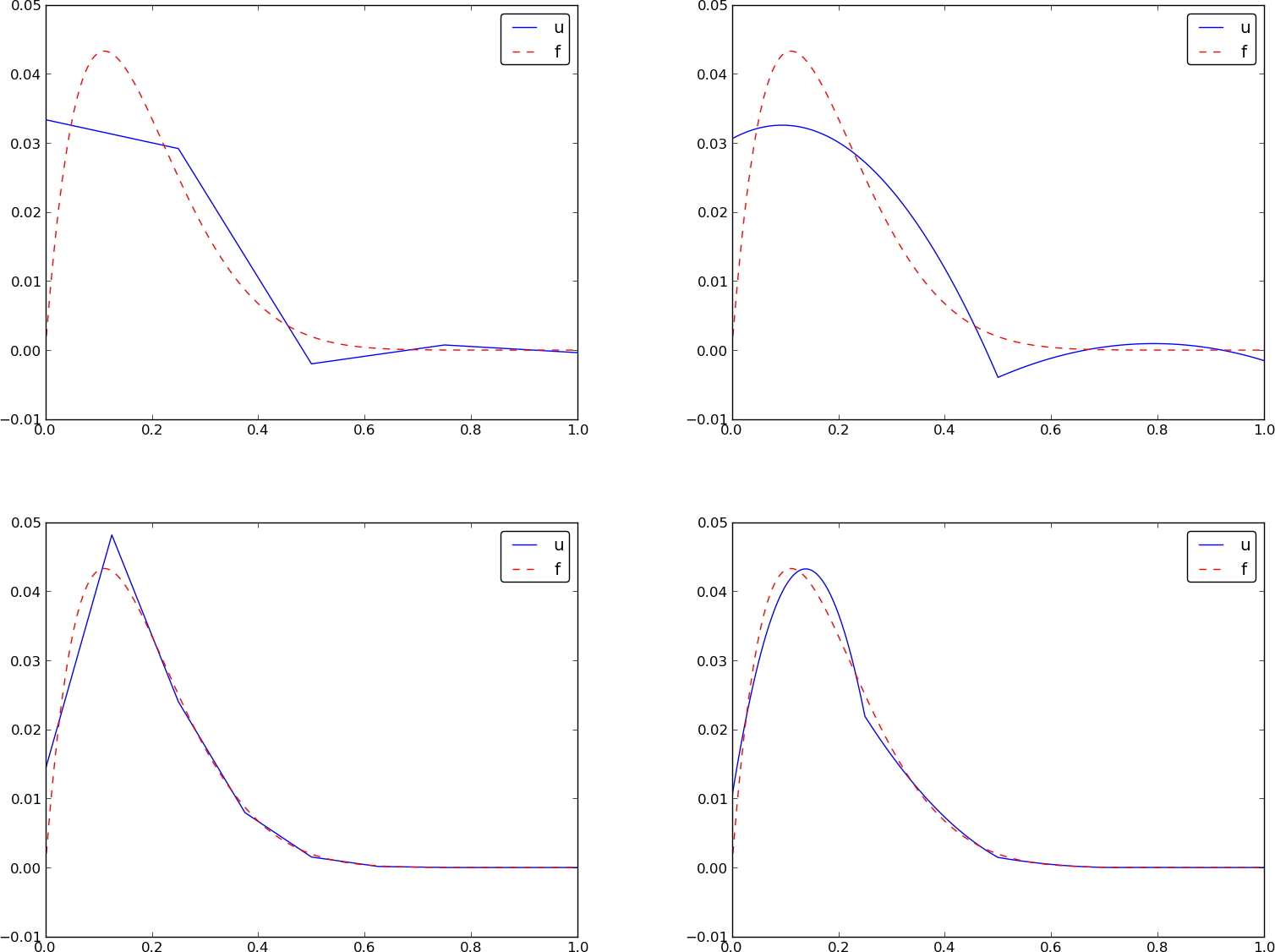

Approximate \( f\sim x^9 \) by various elements; plot

Comparison of finite element and finite difference approximation

- Finite difference approximation of a function \( f(x) \): simply choose \( u_i = f(x_i) \) (interpolation)

- Galerkin/projection and least squares method: must derive and solve a linear system

- What is really the difference in \( u \)?

Interpolation/collocation with finite elements

Let \( \{\xno{i}\}_{i\in\If} \) be the nodes in the mesh. Collocation means

$$

\begin{equation}

u(\xno{i})=f(\xno{i}),\quad i\in\If,

\end{equation}

$$

which translates to

$$ \sum_{j\in\If} c_j \basphi_j(\xno{i}) = f(\xno{i}),$$

but \( \basphi_j(\xno{i})=0 \) if \( i\neq j \) so the sum collapses to one

term \( c_i\basphi_i(\xno{i}) = c_i \), and we have the result

$$

\begin{equation}

c_i = f(\xno{i})

\end{equation}

$$

Same result as the standard finite difference approach, but finite elements define \( u \) also between the \( \xno{i} \) points

Galerkin/projection and least squares vs collocation/interpolation or finite differences

- Scope: work with P1 elements

- Use projection/Galerkin or least squares (equivalent)

- Interpret the resulting linear system as finite difference equations

The P1 finite element machinery results in a linear system where equation no \( i \) is

$$

\begin{equation}

\frac{h}{6}(u_{i-1} + 4u_i + u_{i+1}) = (f,\basphi_i)

\tag{27}

\end{equation}

$$

Note:

- We have used \( u_i \) for \( c_i \) to make notation similar to finite differences

- The finite difference counterpart is just \( u_i=f_i \)

Expressing the left-hand side in finite difference operator notation

Rewrite the left-hand side of finite element equation no \( i \):

$$

\begin{equation}

h(u_i + \frac{1}{6}(u_{i-1} - 2u_i + u_{i+1})) = [h(u + \frac{h^2}{6}D_x D_x u)]_i

\end{equation}

$$

This is the standard finite difference approximation of

$$ h(u + \frac{h^2}{6}u'')$$

Treating the right-hand side; Trapezoidal rule

$$ (f,\basphi_i) = \int_{\xno{i-1}}^{\xno{i}} f(x)\frac{1}{h} (x - \xno{i-1}) dx

+ \int_{\xno{i}}^{\xno{i+1}} f(x)\left(1 - \frac{1}{h}(x - \xno{i})\right) dx

$$

Cannot do much unless we specialize \( f \) or use numerical integration.

Trapezoidal rule using the nodes:

$$ (f,\basphi_i) = \int_\Omega f\basphi_i dx\approx h\half(

f(\xno{0})\basphi_i(\xno{0}) + f(\xno{N})\basphi_i(\xno{N}))

+ h\sum_{j=1}^{N-1} f(\xno{j})\basphi_i(\xno{j})

$$

\( \basphi_i(\xno{j})=\delta_{ij} \), so this formula collapses to one term:

$$

\begin{equation}

(f,\basphi_i) \approx hf(\xno{i}),\quad i=1,\ldots,N-1\thinspace.

\end{equation}

$$

Same result as in collocation (interpolation) and the finite difference method!

Treating the right-hand side; Simpson's rule

$$ \int_\Omega g(x)dx \approx \frac{h}{6}\left( g(\xno{0}) +

2\sum_{j=1}^{N-1} g(\xno{j})

+ 4\sum_{j=0}^{N-1} g(\xno{j+\half}) + g(\xno{N})\right),

$$

Our case: \( g=f\basphi_i \). The sums collapse because \( \basphi_i=0 \) at most of

the points.

$$

\begin{equation}

(f,\basphi_i) \approx \frac{h}{3}(f_{i-\half} + f_i + f_{i+\half})

\end{equation}

$$

Conclusions:

- While the finite difference method just samples \( f \) at \( x_i \), the finite element method applies an average (smoothing) of \( f \) around \( x_i \)

- On the left-hand side we have (after dividing by \( h \)) a term \( \sim h^2u'' \), and \( u'' \) also contributes to smoothing

- There is some inherent smoothing in the finite element method
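The difference between sampling and averaging can be seen in a small computation. The sketch below assumes the mesh 0, 1/2, 1 and f = x(1-x), and compares the Trapezoidal and Simpson approximations of the right-hand side for the interior node with the exact inner product; all names are illustrative.

```python
from fractions import Fraction as F

def f(x):
    return x * (1 - x)

h = F(1, 2)
x_i = F(1, 2)  # interior node of the mesh 0, 1/2, 1

def phi_i(x):
    """P1 hat function centered at x_i."""
    return 1 - abs(x - x_i) / h

def g(x):
    return f(x) * phi_i(x)

def simpson_cell(func, a, b):
    return (b - a) / 6 * (func(a) + 4 * func((a + b) / 2) + func(b))

# g is a cubic on each cell, so cell-wise Simpson gives the exact integral
exact = simpson_cell(g, x_i - h, x_i) + simpson_cell(g, x_i, x_i + h)
trapezoidal = h * f(x_i)                                   # h*f_i
simpson_nodes = h / 3 * (f(x_i - h / 2) + f(x_i) + f(x_i + h / 2))
print(exact, trapezoidal, simpson_nodes)
```

For this quadratic f the averaged formula reproduces the exact value 5/48, while pure sampling gives 1/8.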

Finite element approximation vs finite differences

With Trapezoidal integration of \( (f,\basphi_i) \), the finite element method essentially solves

$$

\begin{equation}

u + \frac{h^2}{6} u'' = f,\quad u'(0)=u'(L)=0,

\end{equation}

$$

by the finite difference method

$$

\begin{equation}

[u + \frac{h^2}{6} D_x D_x u = f]_i

\end{equation}

$$

With Simpson integration of \( (f,\basphi_i) \) we essentially solve

$$

\begin{equation}

[u + \frac{h^2}{6} D_x D_x u = \bar f]_i,

\end{equation}

$$

where

$$ \bar f_i = \frac{1}{3}(f_{i-1/2} + f_i + f_{i+1/2}) $$

Note: as \( h\rightarrow 0 \), \( h^2u''\rightarrow 0 \) and \( \bar f_i\rightarrow f_i \).

Making finite elements behave as finite differences

- Can we adjust the finite element method so that we do not get the extra \( h^2u'' \) smoothing term and averaging of \( f \)?

- This is sometimes important in time-dependent problems to incorporate good properties of finite differences into finite elements

Result:

- Compute all integrals by the Trapezoidal method and P1 elements

- Specifically, the coefficient matrix becomes diagonal ("lumped") - no linear system (!)

- Loss of accuracy? The Trapezoidal rule has error \( \Oof{h^2} \), the same as the approximation error in P1 elements
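A minimal sketch of lumping, assuming P1 elements on a uniform mesh: applying the Trapezoidal rule cell by cell puts h/2 on the diagonal for each of the two nodes of a cell, and for P1 elements this coincides with summing each row of the consistent matrix. The function names are hypothetical.

```python
from fractions import Fraction as F

def consistent_mass_matrix(n_nodes, h):
    """P1 matrix h/6*tridiag(1, 4, 1) with 2 in the corner entries."""
    A = [[F(0)] * n_nodes for _ in range(n_nodes)]
    for e in range(n_nodes - 1):  # loop over cells
        for r, s, value in ((0, 0, h / 3), (0, 1, h / 6),
                            (1, 0, h / 6), (1, 1, h / 3)):
            A[e + r][e + s] += value
    return A

def lumped_mass_diagonal(n_nodes, h):
    """Trapezoidal rule on each cell: every cell adds h/2 to its two nodes."""
    diag = [F(0)] * n_nodes
    for e in range(n_nodes - 1):
        diag[e] += h / 2
        diag[e + 1] += h / 2
    return diag

h = F(1, 4)
A = consistent_mass_matrix(5, h)
lumped = lumped_mass_diagonal(5, h)
row_sums = [sum(row) for row in A]
print(lumped)
```

With a diagonal matrix and a Trapezoidal right-hand side, each equation reduces to one unknown, so the coefficient at a node is simply the value of f at that node.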

Limitations of the nodes and element concepts

So far,

- Nodes: points for defining \( \basphi_i \) and computing \( u \) values

- Elements: subdomain (containing a few nodes)

- This is a common notion of nodes and elements

One problem:

- Our algorithms need nodes at the element boundaries

- This is often not desirable, so we need to throw the nodes and elements arrays away and find a more generalized element concept

A generalized element concept

- We introduce cell for the subdomain that we up to now called element

- A cell has vertices (interval end points)

- Nodes are, almost as before, points where we want to compute unknown functions

- Degrees of freedom is what the \( c_j \) represent (usually function values at nodes)

The concept of a finite element

- a reference cell in a local reference coordinate system

- a set of basis functions \( \refphi_r \) defined on the cell

- a set of degrees of freedom (e.g., function values) that uniquely determine the basis functions such that \( \refphi_r=1 \) for degree of freedom number \( r \) and \( \refphi_r=0 \) for all other degrees of freedom

- a mapping between local and global degree of freedom numbers (dof map)

- a geometric mapping of the reference cell onto the cell in the physical domain: \( [-1,1]\ \Rightarrow\ [x_L,x_R] \)

Implementation; basic data structures

- Cell vertex coordinates: vertices (equals nodes for P1 elements)

- Element vertices: cells[e][r] holds global vertex number of local vertex no r in element e (same as elements for P1 elements)

- dof_map[e][r] maps local dof r in element e to global dof number (same as elements for Pd elements)

The assembly process now applies dof_map:

A[dof_map[e][r], dof_map[e][s]] += A_e[r,s]

b[dof_map[e][r]] += b_e[r]

Implementation; example with P2 elements

vertices = [0, 0.4, 1]

cells = [[0, 1], [1, 2]]

dof_map = [[0, 1, 2], [2, 3, 4]]

Implementation; example with P0 elements

Example: Same mesh, but \( u \) is piecewise constant in each cell (P0 element).

Same vertices and cells, but

dof_map = [[0], [1]]

May think of one node in the middle of each element.

The algorithms are now based on cells, vertices, and dof_map.
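The two dof_map examples follow a simple pattern, sketched below for a 1D mesh numbered from left to right. The helper make_dof_map is hypothetical (the module's own mesh function may construct the map differently); it assumes that neighboring cells share the dof at their common vertex when d >= 1.

```python
def make_dof_map(N_e, d):
    """Local-to-global dof numbers for N_e cells of degree d (hypothetical helper).

    d = 0 gives one dof per cell; d >= 1 assumes neighboring cells share
    the dof at their common vertex and dofs are numbered left to right.
    """
    if d == 0:
        return [[e] for e in range(N_e)]
    return [[e * d + r for r in range(d + 1)] for e in range(N_e)]

print(make_dof_map(2, 2))  # P2 elements on two cells
print(make_dof_map(2, 0))  # P0 elements on two cells
```

The assembly loop itself does not change between element types: only the dof_map passed to it does.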

Example on doing the algorithmic steps

# Use modified fe_approx1D module

from fe_approx1D_numint import *

x = sp.Symbol('x')

f = x*(1 - x)

N_e = 10

# Create mesh with P3 (cubic) elements

vertices, cells, dof_map = mesh_uniform(N_e, d=3, Omega=[0,1])

# Create basis functions on the mesh

phi = [basis(len(dof_map[e])-1) for e in range(N_e)]

# Create linear system and solve it

A, b = assemble(vertices, cells, dof_map, phi, f)

c = np.linalg.solve(A, b)

# Make very fine mesh and sample u(x) on this mesh for plotting

x_u, u = u_glob(c, vertices, cells, dof_map,

resolution_per_element=51)

plot(x_u, u)

Approximating a parabola by P0 elements

The approximate function automates the steps in the previous slide:

from fe_approx1D_numint import *

x=sp.Symbol("x")

for N_e in 4, 8:
    approximate(x*(1-x), d=0, N_e=N_e, Omega=[0,1])

Computing the error of the approximation; principles

$$ L^2 \hbox{ error: }\quad ||e||_{L^2} =

\left(\int_{\Omega} e^2 dx\right)^{1/2}$$

Accurate approximation of the integral:

- Sample \( u(x) \) at many points in each element (call u_glob, returns x and u)

- Use the Trapezoidal rule based on the samples

- It is important to integrate \( u \) accurately over the elements

- (In a finite difference method we would just sample the mesh point values)

Computing the error of the approximation; details

$$ \int_\Omega g(x) dx \approx \sum_{j=0}^{n-1} \half(g(x_j) +

g(x_{j+1}))(x_{j+1}-x_j)$$

# Given c, compute x and u values on a very fine mesh

x, u = u_glob(c, vertices, cells, dof_map,

resolution_per_element=101)

# Compute the error on the very fine mesh

e = f(x) - u

e2 = e**2

# Vectorized Trapezoidal rule

E = np.sqrt(0.5*np.sum((e2[:-1] + e2[1:])*(x[1:] - x[:-1])))

How does the error depend on \( h \) and \( d \)?

Theory and experiments show that the least squares or projection/Galerkin method in combination with Pd elements of equal length \( h \) has an error

$$

\begin{equation}

||e||_{L^2} = Ch^{d+1}

\tag{28}

\end{equation}

$$

where \( C \) depends on \( f \), but not on \( h \) or \( d \).
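The exponent in (28) can be estimated from two meshes as \( r = \ln(E_1/E_2)/\ln(h_1/h_2) \). The sketch below is a simplified experiment, not the Galerkin computation itself: it uses the P1 interpolant (which is expected to show the same rate \( d+1=2 \)) of \( f=x(1-x) \) and the Trapezoidal rule on a fine sampling of each element. All names are illustrative.

```python
import math

def f(x):
    return x*(1 - x)

def l2_error_p1_interpolant(N_e, samples=101):
    """L2 error of the piecewise linear interpolant of f on [0, 1]."""
    h = 1.0/N_e
    total = 0.0
    for e in range(N_e):
        xL = e*h
        xs = [xL + h*k/(samples - 1) for k in range(samples)]
        e2 = []
        for x in xs:
            u = f(xL) + (f(xL + h) - f(xL))*(x - xL)/h  # linear between nodes
            e2.append((f(x) - u)**2)
        # Trapezoidal rule on the fine sampling of this element
        total += sum(0.5*(e2[k] + e2[k+1])*(xs[k+1] - xs[k])
                     for k in range(samples - 1))
    return math.sqrt(total)

E1 = l2_error_p1_interpolant(4)
E2 = l2_error_p1_interpolant(8)
rate = math.log(E1/E2)/math.log(2)  # h is halved between the two meshes
print(E1, E2, rate)
```

Halving h should reduce the error by about a factor of four, that is, a rate close to 2 for linear elements.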

Cubic Hermite polynomials; definition

- Can we construct \( \basphi_i(x) \) with continuous derivatives? Yes!

Consider a reference cell \( [-1,1] \). We introduce two nodes, \( X=-1 \) and \( X=1 \). The degrees of freedom are

- 0: value of function at \( X=-1 \)

- 1: value of first derivative at \( X=-1 \)

- 2: value of function at \( X=1 \)

- 3: value of first derivative at \( X=1 \)

Cubic Hermite polynomials; derivation

4 constraints on \( \refphi_r \) (1 for dof \( r \), 0 for all others):

- \( \refphi_0(\Xno{0}) = 1 \), \( \refphi_0(\Xno{1}) = 0 \), \( \refphi_0'(\Xno{0}) = 0 \), \( \refphi_0'(\Xno{1}) = 0 \)

- \( \refphi_1'(\Xno{0}) = 1 \), \( \refphi_1'(\Xno{1}) = 0 \), \( \refphi_1(\Xno{0}) = 0 \), \( \refphi_1(\Xno{1}) = 0 \)

- \( \refphi_2(\Xno{1}) = 1 \), \( \refphi_2(\Xno{0}) = 0 \), \( \refphi_2'(\Xno{0}) = 0 \), \( \refphi_2'(\Xno{1}) = 0 \)

- \( \refphi_3'(\Xno{1}) = 1 \), \( \refphi_3'(\Xno{0}) = 0 \), \( \refphi_3(\Xno{0}) = 0 \), \( \refphi_3(\Xno{1}) = 0 \)

This gives 4 linear, coupled equations for each \( \refphi_r \) to determine the 4 coefficients in the cubic polynomial
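The four linear systems can be solved mechanically. The sketch below writes each basis function as \( a_0 + a_1X + a_2X^2 + a_3X^3 \), applies the four degrees of freedom (value and first derivative at \( X=-1 \) and \( X=1 \)) to the monomials, and solves with exact fractions. The small Gauss-Jordan solver and all names are hypothetical helpers written for this illustration.

```python
from fractions import Fraction as F

# Row k = degree of freedom k applied to the monomials 1, X, X**2, X**3
dof_matrix = [
    [1, -1, 1, -1],  # value at X = -1
    [0, 1, -2, 3],   # derivative at X = -1
    [1, 1, 1, 1],    # value at X = 1
    [0, 1, 2, 3],    # derivative at X = 1
]

def solve_linear_system(M, rhs):
    """Gauss-Jordan elimination with exact fractions."""
    n = len(M)
    A = [[F(v) for v in row] + [F(rhs[i])] for i, row in enumerate(M)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        A[col] = [v / A[col][col] for v in A[col]]
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [vr - factor * vc for vr, vc in zip(A[r], A[col])]
    return [A[i][n] for i in range(n)]

# Basis function r has dof number r equal to 1 and all other dofs equal to 0
coefficients = [solve_linear_system(dof_matrix, [int(k == r) for k in range(4)])
                for r in range(4)]
for r, a in enumerate(coefficients):
    print(r, [str(v) for v in a])
```

The first solution is \( \frac{1}{2} - \frac{3}{4}X + \frac{1}{4}X^3 \), which equals \( 1 - \frac{3}{4}(X+1)^2 + \frac{1}{4}(X+1)^3 \) when expanded.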

Cubic Hermite polynomials; result

$$

\begin{align}

\refphi_0(X) &= 1 - \frac{3}{4}(X+1)^2 + \frac{1}{4}(X+1)^3\\

\refphi_1(X) &= (X+1)(1 - \half(X+1))^2\\

\refphi_2(X) &= \frac{3}{4}(X+1)^2 - \frac{1}{4}(X+1)^3\\

\refphi_3(X) &= \half(X+1)(\half(X+1)^2 - (X+1))

\end{align}

$$

Numerical integration

- \( \int_\Omega f\basphi_idx \) must in general be computed by numerical integration

- Numerical integration is often used for the matrix too

Common form of a numerical integration rule:

$$

\begin{equation}

\int_{-1}^{1} g(X)dX \approx \sum_{j=0}^M w_jg(\bar X_j),

\end{equation}

$$

where

- \( \bar X_j \) are integration points

- \( w_j \) are integration weights

Different rules correspond to different choices of points and weights

The Midpoint rule

Simplest possibility: the Midpoint rule,

$$

\begin{equation}

\int_{-1}^{1} g(X)dX \approx 2g(0),\quad \bar X_0=0,\ w_0=2,

\end{equation}

$$

Exact for linear integrands

Newton-Cotes rules

- Idea: use a fixed, uniformly distributed set of points in \( [-1,1] \)

- The points often coincide with nodes

- Very useful for making \( \basphi_i\basphi_j=0 \) at the integration points when \( i\neq j \), which gives diagonal ("lumped") mass matrices

The Trapezoidal rule:

$$

\begin{equation}

\int_{-1}^{1} g(X)dX \approx g(-1) + g(1),\quad \bar X_0=-1,\ \bar X_1=1,\ w_0=w_1=1,

\tag{29}

\end{equation}

$$

Simpson's rule:

$$

\begin{equation}

\int_{-1}^{1} g(X)dX \approx \frac{1}{3}\left(g(-1) + 4g(0)

+ g(1)\right),

\end{equation}

$$

where

$$

\begin{equation}

\bar X_0=-1,\ \bar X_1=0,\ \bar X_2=1,\ w_0=w_2=\frac{1}{3},\ w_1=\frac{4}{3}

\end{equation}

$$
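The rules above fit the common form with points and weights, so their accuracy can be probed by integrating monomials. The following sketch finds the highest monomial degree each rule integrates exactly on \( [-1,1] \); the dictionary layout and function names are illustrative choices.

```python
from fractions import Fraction as F

rules = {
    "midpoint":    ([F(0)],              [F(2)]),
    "trapezoidal": ([F(-1), F(1)],       [F(1), F(1)]),
    "simpson":     ([F(-1), F(0), F(1)], [F(1, 3), F(4, 3), F(1, 3)]),
}

def apply_rule(name, g):
    points, weights = rules[name]
    return sum(w * g(X) for X, w in zip(points, weights))

def exact_monomial_integral(p):
    """Integral of X**p over [-1, 1]."""
    return F(0) if p % 2 else F(2, p + 1)

def degree_of_exactness(name, max_degree=6):
    """Largest p such that all monomials up to X**p are integrated exactly."""
    p = 0
    while (p <= max_degree and
           apply_rule(name, lambda X: X**p) == exact_monomial_integral(p)):
        p += 1
    return p - 1

for name in rules:
    print(name, degree_of_exactness(name))
```

The Midpoint and Trapezoidal rules are both exact for linear integrands, while Simpson's rule is exact up to cubic integrands.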

Gauss-Legendre rules with optimized points

- Optimize the location of points to get higher accuracy

- Gauss-Legendre rules (quadrature) adjust points and weights to integrate polynomials exactly

$$

\begin{align}

M=1&:\quad \bar X_0=-\frac{1}{\sqrt{3}},\

\bar X_1=\frac{1}{\sqrt{3}},\ w_0=w_1=1\\

M=2&:\quad \bar X_0=-\sqrt{\frac{3}{{5}}},\ \bar X_1=0,\

\bar X_2= \sqrt{\frac{3}{{5}}},\ w_0=w_2=\frac{5}{9},\ w_1=\frac{8}{9}

\end{align}

$$

- \( M=1 \): integrates 3rd degree polynomials exactly

- \( M=2 \): integrates 5th degree polynomials exactly

- In general, a rule with \( M+1 \) points integrates polynomials of degree \( 2M+1 \) exactly.

See numint.py for a large collection of Gauss-Legendre rules.
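A short numerical check of the two tabulated Gauss-Legendre rules, written independently of numint.py (whose interface is not assumed here). With the convention that the sum runs over \( j=0,\ldots,M \), the rule for \( M=1 \) has two points and the rule for \( M=2 \) has three.

```python
import math

gauss_legendre = {
    1: ([-1/math.sqrt(3), 1/math.sqrt(3)], [1.0, 1.0]),
    2: ([-math.sqrt(3/5), 0.0, math.sqrt(3/5)], [5/9, 8/9, 5/9]),
}

def integrate(g, M):
    points, weights = gauss_legendre[M]
    return sum(w*g(X) for X, w in zip(points, weights))

def exact(p):
    """Integral of X**p over [-1, 1]."""
    return 0.0 if p % 2 else 2.0/(p + 1)

# Absolute error for each rule M and each monomial degree p
errors = {(M, p): abs(integrate(lambda X: X**p, M) - exact(p))
          for M in (1, 2) for p in range(8)}
for M in (1, 2):
    print(M, ["%.1e" % errors[(M, p)] for p in range(8)])
```

The errors stay at round-off level up to degree 3 for \( M=1 \) and degree 5 for \( M=2 \), and become clearly nonzero at degrees 4 and 6, respectively.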

Approximation of functions in 2D

Inner product in 2D:

$$

\begin{equation}

(f,g) = \int_\Omega f(x,y)g(x,y) dx dy

\end{equation}

$$

Least squares and projection/Galerkin lead to a linear system

$$

\begin{align*}

\sum_{j\in\If} A_{i,j}c_j &= b_i,\quad i\in\If\\

A_{i,j} &= (\baspsi_i,\baspsi_j)\\

b_i &= (f,\baspsi_i)

\end{align*}

$$

Challenge: How to construct 2D basis functions \( \baspsi_i(x,y) \)?

2D basis functions as tensor products of 1D functions

Use a 1D basis for \( x \) variation and a similar for \( y \) variation:

$$

\begin{align}

V_x &= \mbox{span}\{ \hat\baspsi_0(x),\ldots,\hat\baspsi_{N_x}(x)\}

\tag{30}\\

V_y &= \mbox{span}\{ \hat\baspsi_0(y),\ldots,\hat\baspsi_{N_y}(y)\}

\tag{31}

\end{align}

$$

The 2D vector space can be defined as a tensor product \( V = V_x\otimes V_y \) with basis functions

$$

\baspsi_{p,q}(x,y) = \hat\baspsi_p(x)\hat\baspsi_q(y)

\quad p\in\Ix,q\in\Iy\tp

$$

Tensor products

Given two vectors \( a=(a_0,\ldots,a_M) \) and \( b=(b_0,\ldots,b_N) \) their outer tensor product, also called the dyadic product, is \( p=a\otimes b \), defined through

$$ p_{i,j}=a_ib_j,\quad i=0,\ldots,M,\ j=0,\ldots,N\tp$$

Note: \( p \) has two indices (as a matrix or two-dimensional array)

Example: 2D basis as tensor product of 1D spaces,

$$ \baspsi_{p,q}(x,y) = \hat\baspsi_p(x)\hat\baspsi_q(y),

\quad p\in\Ix,q\in\Iy$$

Double or single index?

The 2D basis can employ a double index and double sum:

$$ u = \sum_{p\in\Ix}\sum_{q\in\Iy} c_{p,q}\baspsi_{p,q}(x,y)

$$

Or just a single index:

$$ u = \sum_{j\in\If} c_j\baspsi_j(x,y)$$

with

$$

\baspsi_i(x,y) = \hat\baspsi_p(x)\hat\baspsi_q(y),

\quad i=p (N_y+1) + q\hbox{ or } i=q (N_x+1) + p

$$
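The conversion between the double and the single index can be sketched as follows, assuming \( p=0,\ldots,N_x \) and \( q=0,\ldots,N_y \) so that there are \( N_y+1 \) functions in the \( y \) direction and \( q \) runs fastest. The function names are hypothetical.

```python
def single_index(p, q, N_y):
    """Row-major numbering: q runs fastest, with N_y + 1 functions in y."""
    return p * (N_y + 1) + q

def double_index(i, N_y):
    """Inverse of single_index: returns (p, q)."""
    return divmod(i, N_y + 1)

N_x, N_y = 2, 1
pairs = [(p, q) for p in range(N_x + 1) for q in range(N_y + 1)]
indices = [single_index(p, q, N_y) for p, q in pairs]
print(indices)
```

This ordering matches a list comprehension with the loop over p outermost and the loop over q innermost.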

Example on 2D (bilinear) basis functions; formulas

In 1D we use the basis

$$ \{ 1, x \} $$

2D tensor product (all combinations):

$$ \baspsi_{0,0}=1,\quad \baspsi_{1,0}=x, \quad \baspsi_{0,1}=y,

\quad \baspsi_{1,1}=xy

$$

or with a single index:

$$ \baspsi_0=1,\quad \baspsi_1=x, \quad \baspsi_2=y,\quad\baspsi_3 =xy

$$

See notes for details of a hand-calculation.

Example on 2D (bilinear) basis functions; plot

Quadratic \( f(x,y) = (1+x^2)(1+2y^2) \) (left), bilinear \( u \) (right):

Implementation; principal changes to the 1D code

Very small modification of approx1D.py:

- Omega = [[0, L_x], [0, L_y]]

- Symbolic integration in 2D

- Construction of 2D (tensor product) basis functions

Implementation; 2D integration

import sympy as sp
import mpmath

integrand = psi[i]*psi[j]
I = sp.integrate(integrand,
                 (x, Omega[0][0], Omega[0][1]),
                 (y, Omega[1][0], Omega[1][1]))
# Fall back on numerical integration if symbolic integration
# was unsuccessful
if isinstance(I, sp.Integral):
    integrand = sp.lambdify([x,y], integrand)
    I = mpmath.quad(integrand,
                    [Omega[0][0], Omega[0][1]],
                    [Omega[1][0], Omega[1][1]])

Implementation; 2D basis functions

Tensor product of 1D "Taylor-style" polynomials \( x^i \):

def taylor(x, y, Nx, Ny):
    return [x**i*y**j for i in range(Nx+1) for j in range(Ny+1)]

Tensor product of 1D sine functions \( \sin((i+1)\pi x) \):

def sines(x, y, Nx, Ny):
    return [sp.sin(sp.pi*(i+1)*x)*sp.sin(sp.pi*(j+1)*y)
            for i in range(Nx+1) for j in range(Ny+1)]

Complete code in approx2D.py

Implementation; application

\( f(x,y) = (1+x^2)(1+2y^2) \)

>>> from approx2D import *

>>> f = (1+x**2)*(1+2*y**2)

>>> psi = taylor(x, y, 1, 1)

>>> Omega = [[0, 2], [0, 2]]

>>> u, c = least_squares(f, psi, Omega)

>>> print(u)

8*x*y - 2*x/3 + 4*y/3 - 1/9

>>> print(sp.expand(f))

2*x**2*y**2 + x**2 + 2*y**2 + 1

Implementation; trying a perfect expansion

Add higher powers to the basis such that \( f\in V \):

>>> psi = taylor(x, y, 2, 2)

>>> u, c = least_squares(f, psi, Omega)

>>> print(u)

2*x**2*y**2 + x**2 + 2*y**2 + 1

>>> print(u-f)

0

Expected: \( u=f \) when \( f\in V \)

Generalization to 3D

Key idea:

$$ V = V_x\otimes V_y\otimes V_z$$

$$

\begin{align*}

a^{(q)} &= (a^{(q)}_0,\ldots,a^{(q)}_{N_q}),\quad q=0,\ldots,m\\

p &= a^{(0)}\otimes\cdots\otimes a^{(m)}\\

p_{i_0,i_1,\ldots,i_m} &= a^{(0)}_{i_0}a^{(1)}_{i_1}\cdots a^{(m)}_{i_m}

\end{align*}

$$

$$

\begin{align*}

\baspsi_{p,q,r}(x,y,z) &= \hat\baspsi_p(x)\hat\baspsi_q(y)\hat\baspsi_r(z)\\

u(x,y,z) &= \sum_{p\in\Ix}\sum_{q\in\Iy}\sum_{r\in\Iz} c_{p,q,r}

\baspsi_{p,q,r}(x,y,z)

\end{align*}

$$

Finite elements in 2D and 3D

The two great advantages of the finite element method:

- Can handle complex-shaped domains in 2D and 3D

- Can easily provide higher-order polynomials in the approximation

Finite elements in 1D: mostly for learning, insight, debugging

Examples on cell types

2D:

- triangles

- quadrilaterals

3D:

- tetrahedra

- hexahedra

Rectangular domain with 2D P1 elements

Deformed geometry with 2D P1 elements

Rectangular domain with 2D Q1 elements

Basis functions over triangles in the physical domain

The P1 triangular 2D element: \( u \) is linear \( ax + by + c \) over each triangular cell

Basic features of 2D P1 elements

- \( \basphi_r(X,Y) \) is a linear function over each element

- Cells = triangles

- Vertices = corners of the cells

- Nodes = vertices

- Degrees of freedom = function values at the nodes

Linear mapping of reference element onto general triangular cell

\( \basphi_i \): pyramid shape, composed of planes

- \( \basphi_i(X,Y) \) varies linearly over an element

- \( \basphi_i=1 \) at vertex (node) \( i \), 0 at all other vertices (nodes)

Element matrices and vectors

- As in 1D, the contribution from one cell to the matrix involves just a few numbers, collected in the element matrix and vector

- \( \basphi_i\basphi_j\neq 0 \) only if \( i \) and \( j \) are degrees of freedom (vertices/nodes) in the same element

- The 2D P1 has a \( 3\times 3 \) element matrix

Basis functions over triangles in the reference cell

$$

\begin{align}

\refphi_0(X,Y) &= 1 - X - Y\\

\refphi_1(X,Y) &= X\\

\refphi_2(X,Y) &= Y

\end{align}

$$

Higher-degree \( \refphi_r \) introduce more nodes (dof = node values)

2D P1, P2, P3, P4, P5, and P6 elements

P1 elements in 1D, 2D, and 3D

P2 elements in 1D, 2D, and 3D

- Interval, triangle, tetrahedron: simplex element (plural: simplices)

- A side of the cell is called a face

- A tetrahedron also has edges

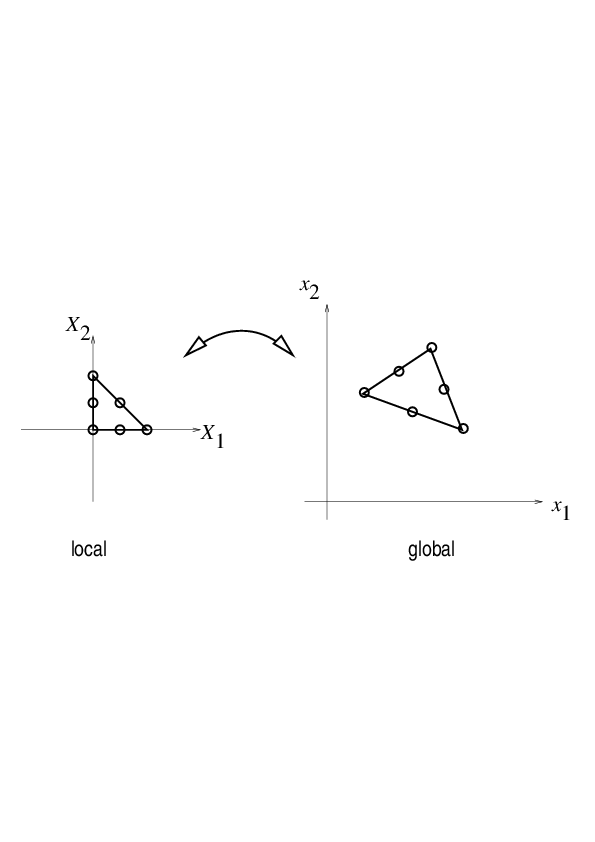

Affine mapping of the reference cell; formula

Mapping of local \( \X = (X,Y) \) coordinates in the reference cell to global, physical \( \x = (x,y) \) coordinates:

$$

\begin{equation}

\x = \sum_{r} \refphi_r^{(1)}(\X)\xdno{q(e,r)}

\tag{32}

\end{equation}

$$

where

- \( r \) runs over the local vertex numbers in the cell

- \( \xdno{i} \) are the \( (x,y) \) coordinates of vertex \( i \)

- \( \refphi_r^{(1)} \) are P1 basis functions

This mapping preserves the straight/planar faces and edges.

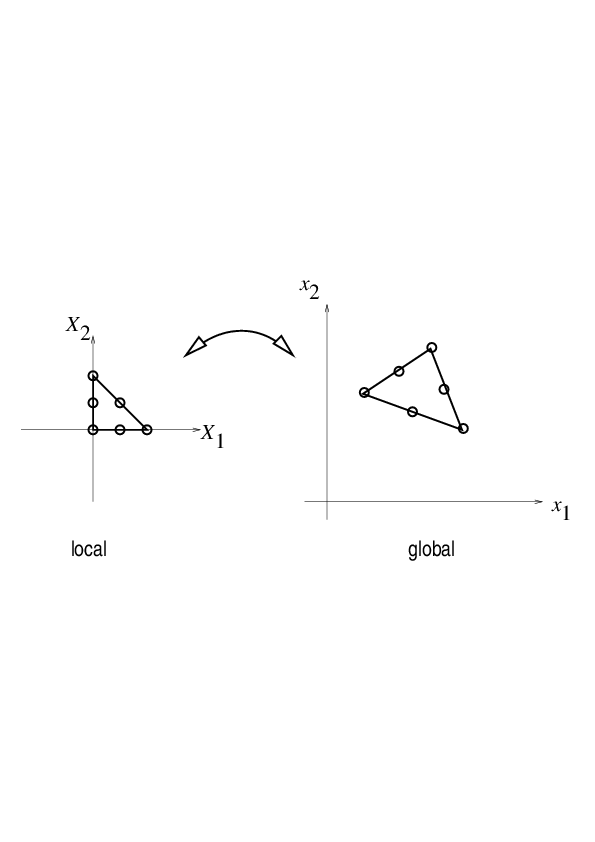

Affine mapping of the reference cell; figure

Isoparametric mapping of the reference cell

Idea: Use the basis functions of the element (not only the P1 functions) to map the element

$$

\begin{equation}

\x = \sum_{r} \refphi_r(\X)\xdno{q(e,r)}

\tag{33}

\end{equation}

$$

Advantage: higher-order polynomial basis functions now map the reference cell to a curved triangle or tetrahedron.

Computing integrals

Integrals must be transformed from \( \Omega^{(e)} \) (physical cell) to \( \tilde\Omega^r \) (reference cell):

$$

\begin{align}

\int_{\Omega^{(e)}}\basphi_i (\x) \basphi_j (\x) \dx &=

\int_{\tilde\Omega^r} \refphi_i (\X) \refphi_j (\X)

\det J\, \dX\\

\int_{\Omega^{(e)}}\basphi_i (\x) f(\x) \dx &=

\int_{\tilde\Omega^r} \refphi_i (\X) f(\x(\X)) \det J\, \dX

\end{align}

$$

where \( \dx = dx dy \) or \( \dx = dxdydz \) and \( \det J \) is the determinant of the

Jacobian of the mapping \( \x(\X) \).

$$

\begin{equation}

J = \left[\begin{array}{cc}

\frac{\partial x}{\partial X} & \frac{\partial x}{\partial Y}\\

\frac{\partial y}{\partial X} & \frac{\partial y}{\partial Y}

\end{array}\right], \quad

\det J = \frac{\partial x}{\partial X}\frac{\partial y}{\partial Y}

- \frac{\partial x}{\partial Y}\frac{\partial y}{\partial X}

\tag{34}

\end{equation}

$$

Affine mapping (32): \( \det J=2\Delta \), \( \Delta = \hbox{cell volume} \)
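The affine mapping and the relation \( \det J = 2\Delta \) can be checked on a concrete triangle. The sketch below assumes a 2D P1 cell with the reference triangle (0,0), (1,0), (0,1) and counterclockwise vertex numbering (otherwise \( \det J \) is negative); the vertex coordinates and function names are made up for the illustration.

```python
def ref_phi(X, Y):
    """P1 basis functions on the reference triangle."""
    return [1 - X - Y, X, Y]

def affine_map(X, Y, vertices):
    """Map reference coordinates (X, Y) to physical coordinates (x, y)."""
    phi = ref_phi(X, Y)
    x = sum(phi[r] * vertices[r][0] for r in range(3))
    y = sum(phi[r] * vertices[r][1] for r in range(3))
    return x, y

def det_jacobian(vertices):
    (x0, y0), (x1, y1), (x2, y2) = vertices
    # dx/dX = x1 - x0, dx/dY = x2 - x0, dy/dX = y1 - y0, dy/dY = y2 - y0
    return (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)

def triangle_area(vertices):
    (x0, y0), (x1, y1), (x2, y2) = vertices
    return abs(x0 * (y1 - y2) + x1 * (y2 - y0) + x2 * (y0 - y1)) / 2

vertices = [(1, 1), (4, 2), (2, 5)]  # counterclockwise
print(affine_map(1, 0, vertices), det_jacobian(vertices), triangle_area(vertices))
```

Since the mapping is affine, \( \det J \) is constant over the cell and can be moved outside the integrals.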


Remark on going from 1D to 2D/3D

Differential equation models

Our aim is to extend the ideas for approximating \( f \) by \( u \), or solving

$$ u = f $$

to real differential equations like

$$ -u'' + bu = f,\quad u(0)=1,\ u'(L)=D $$

Three methods are addressed:

- least squares

- Galerkin/projection

- collocation (interpolation)

Method 2 will be totally dominating!

Abstract differential equation

$$

\begin{equation}

\mathcal{L}(u) = 0,\quad x\in\Omega \end{equation}

$$

Examples (1D problems):

$$

\begin{align}

\mathcal{L}(u) &= \frac{d^2u}{dx^2} - f(x),

\tag{35}\\

\mathcal{L}(u) &= \frac{d}{dx}\left(\dfc(x)\frac{du}{dx}\right) + f(x),

\tag{36}\\

\mathcal{L}(u) &= \frac{d}{dx}\left(\dfc(u)\frac{du}{dx}\right) - au + f(x),

\tag{37}\\

\mathcal{L}(u) &= \frac{d}{dx}\left(\dfc(u)\frac{du}{dx}\right) + f(u,x)

\tag{38}

\end{align}

$$

Abstract boundary conditions

$$

\begin{equation}

{\cal B}_0(u)=0,\ x=0,\quad {\cal B}_1(u)=0,\ x=L

\end{equation}

$$

Examples:

$$

\begin{align}

\mathcal{B}_i(u) &= u - g,\quad &\hbox{Dirichlet condition}\\

\mathcal{B}_i(u) &= -\dfc \frac{du}{dx} - g,\quad &\hbox{Neumann condition}\\

\mathcal{B}_i(u) &= -\dfc \frac{du}{dx} - h(u-g),\quad &\hbox{Robin condition}

\end{align}

$$

Reminder about notation

- \( \uex(x) \) is the symbol for the exact solution of \( \mathcal{L}(\uex)=0 \)

- \( u(x) \) denotes an approximate solution

- We seek \( u\in V \)

- \( V = \hbox{span}\{ \baspsi_0(x),\ldots,\baspsi_N(x)\} \), \( V \) has basis \( \sequencei{\baspsi} \)

- \( \If =\{0,\ldots,N\} \) is an index set

- \( u(x) = \sum_{j\in\If} c_j\baspsi_j(x) \)

- Inner product: \( (u,v) = \int_\Omega uv\dx \)

- Norm: \( ||u||=\sqrt{(u,u)} \)

New topics

Much is similar to approximating a function (solving \( u=f \)), but two new topics are needed:

- Variational formulation of the differential equation problem (including integration by parts)

- Handling of boundary conditions

Residual-minimizing principles

- When solving \( u=f \) we knew the error \( e=f-u \) and could use principles for minimizing the error

- When solving \( \mathcal{L}(\uex)=0 \) we do not know \( \uex \) and cannot work with the error \( e=\uex - u \)

- We only have the error in the equation: the residual \( R \)

Inserting \( u=\sum_jc_j\baspsi_j \) in \( \mathcal{L}=0 \) gives a residual

$$

\begin{equation}

R = \mathcal{L}(u) = \mathcal{L}(\sum_j c_j \baspsi_j) \neq 0

\end{equation}

$$

Goal: minimize \( R \) wrt \( \sequencei{c} \) (and hope it makes a small \( e \) too)

$$ R=R(c_0,\ldots,c_N; x)$$

The least squares method

Idea: minimize

$$

\begin{equation}

E = ||R||^2 = (R,R) = \int_{\Omega} R^2 dx

\end{equation}

$$

Minimization wrt \( \sequencei{c} \) implies

$$

\begin{equation}

\frac{\partial E}{\partial c_i} =

\int_{\Omega} 2R\frac{\partial R}{\partial c_i} dx = 0\quad

\Leftrightarrow\quad (R,\frac{\partial R}{\partial c_i})=0,\quad

i\in\If

\tag{39}

\end{equation}

$$

\( N+1 \) equations for \( N+1 \) unknowns \( \sequencei{c} \)

The Galerkin method

Idea: make \( R \) orthogonal to \( V \),

$$

\begin{equation}

(R,v)=0,\quad \forall v\in V

\tag{40}

\end{equation}

$$

This implies

$$

\begin{equation}

(R,\baspsi_i)=0,\quad i\in\If

\tag{41}

\end{equation}

$$

\( N+1 \) equations for \( N+1 \) unknowns \( \sequencei{c} \)

The Method of Weighted Residuals

Generalization of the Galerkin method: demand \( R \) orthogonal to some space \( W \), possibly \( W\neq V \):

$$

\begin{equation}

(R,v)=0,\quad \forall v\in W

\tag{42}

\end{equation}

$$

If \( \{w_0,\ldots,w_N\} \) is a basis for \( W \):

$$

\begin{equation}

(R,w_i)=0,\quad i\in\If

\tag{43}

\end{equation}

$$

- \( N+1 \) equations for \( N+1 \) unknowns \( \sequencei{c} \)

- Weighted residual with \( w_i = \partial R/\partial c_i \) gives least squares

Terminology: test and trial Functions

- \( \baspsi_j \) used in \( \sum_jc_j\baspsi_j \) is called trial function

- \( \baspsi_i \) or \( w_i \) used as weight in Galerkin's method is called test function

The collocation method

Idea: demand \( R=0 \) at \( N+1 \) points

$$

\begin{equation}

R(\xno{i}; c_0,\ldots,c_N)=0,\quad i\in\If

\tag{44}

\end{equation}

$$

Note: The collocation method is a weighted residual method with delta functions as weights

$$ 0 = \int_\Omega R(x;c_0,\ldots,c_N)

\delta(x-\xno{i})\dx = R(\xno{i}; c_0,\ldots,c_N)$$

$$

\begin{equation}

\hbox{property of } \delta(x):\quad

\int_{\Omega} f(x)\delta (x-\xno{i}) dx = f(\xno{i}),\quad \xno{i}\in\Omega

\tag{45}

\end{equation}

$$

Examples on using the principles

The first model problem

$$

\begin{equation}

-u''(x) = f(x),\quad x\in\Omega=[0,L],\quad u(0)=0,\ u(L)=0

\tag{46}

\end{equation}

$$

Basis functions:

$$

\begin{equation}

\baspsi_i(x) = \sinL{i},\quad i\in\If

\tag{47}

\end{equation}

$$

The residual:

$$

\begin{align}

R(x;c_0,\ldots,c_N) &= u''(x) + f(x),\nonumber\\

&= \frac{d^2}{dx^2}\left(\sum_{j\in\If} c_j\baspsi_j(x)\right)

+ f(x),\nonumber\\

&= \sum_{j\in\If} c_j\baspsi_j''(x) + f(x)

\tag{48}

\end{align}

$$

Boundary conditions

Since \( u(0)=u(L)=0 \) we must ensure that all \( \baspsi_i(0)=\baspsi_i(L)=0 \). Then

$$ u(0) = \sum_jc_j\baspsi_j(0) = 0,\quad u(L) = \sum_jc_j\baspsi_j(L) = 0 $$

- \( u \) known: Dirichlet boundary condition

- \( u' \) known: Neumann boundary condition

- Must have \( \baspsi_i=0 \) where Dirichlet conditions apply

The least squares method; principle

$$

(R,\frac{\partial R}{\partial c_i}) = 0,\quad i\in\If

$$

$$

\begin{equation}

\frac{\partial R}{\partial c_i} =

\frac{\partial}{\partial c_i}

\left(\sum_{j\in\If} c_j\baspsi_j''(x) + f(x)\right)

= \baspsi_i''(x) \end{equation}

$$

Because:

$$

\frac{\partial}{\partial c_i}\left(c_0\baspsi_0'' + c_1\baspsi_1'' + \cdots +

c_{i-1}\baspsi_{i-1}'' + \color{blue}{c_i\baspsi_{i}''} + c_{i+1}\baspsi_{i+1}''

+ \cdots + c_N\baspsi_N'' \right) = \baspsi_{i}''

$$

The least squares method; equation system

$$

\begin{equation}

(\sum_j c_j \baspsi_j'' + f,\baspsi_i'')=0,\quad i\in\If

\end{equation}

$$

Rearrangement:

$$

\begin{equation}

\sum_{j\in\If}(\baspsi_i'',\baspsi_j'')c_j = -(f,\baspsi_i''),\quad i\in\If \end{equation}

$$

This is a linear system

$$

\begin{equation*} \sum_{j\in\If}A_{i,j}c_j = b_i,\quad i\in\If

\end{equation*}

$$

with

$$

\begin{align}

A_{i,j} &= (\baspsi_i'',\baspsi_j'')\nonumber\\

& = \pi^4(i+1)^2(j+1)^2L^{-4}\int_0^L \sinL{i}\sinL{j}\, dx\nonumber\\

&= \left\lbrace

\begin{array}{ll} {1\over2}L^{-3}\pi^4(i+1)^4 & i=j \\ 0, & i\neq j

\end{array}\right.

\\

b_i &= -(f,\baspsi_i'') = (i+1)^2\pi^2L^{-2}\int_0^Lf(x)\sinL{i}\, dx

\end{align}

$$

Orthogonality of the basis functions gives diagonal matrix

Useful property:

$$

\begin{equation}

\int\limits_0^L \sinL{i}\sinL{j}\, dx = \half L\,\delta_{ij},\quad

\quad\delta_{ij} = \left\lbrace

\begin{array}{ll} 1, & i=j \\ 0, & i\neq j

\end{array}\right.

\end{equation}

$$

\( \Rightarrow\ (\baspsi_i'',\baspsi_j'') = 0 \) for \( i\neq j \), i.e., diagonal \( A_{i,j} \), and we can easily solve for \( c_i \):

$$

\begin{equation}

c_i = \frac{2L}{\pi^2(i+1)^2}\int_0^Lf(x)\sinL{i}\, dx

\tag{49}

\end{equation}

$$
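The diagonal structure of the coefficient matrix can be confirmed with sympy. This is a sketch under the assumption of the sine basis (47) and a small number of basis functions; `psi`, `A_entry` and the choice `N = 2` are illustrative names and values, not part of the original material.

```python
from sympy import symbols, sin, pi, diff, integrate, simplify

x = symbols('x')
L = symbols('L', positive=True)

def psi(i):
    """Sine basis function psi_i(x) = sin((i+1)*pi*x/L)."""
    return sin((i + 1)*pi*x/L)

def A_entry(i, j):
    """Least squares matrix entry (psi_i'', psi_j'') on [0, L]."""
    integrand = diff(psi(i), x, 2)*diff(psi(j), x, 2)
    return simplify(integrate(integrand, (x, 0, L)))

N = 2  # three basis functions, chosen only to keep the output short
A = [[A_entry(i, j) for j in range(N + 1)] for i in range(N + 1)]
for row in A:
    print(row)
```

The off-diagonal entries should vanish, and the diagonal entries should agree with \( {1\over2}L^{-3}\pi^4(i+1)^4 \).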

Least squares method; solution

Let sympy do the work (\( f(x)=2 \)):

from sympy import *

import sys

i, j = symbols('i j', integer=True)

x, L = symbols('x L')

f = 2

a = 2*L/(pi**2*(i+1)**2)

c_i = a*integrate(f*sin((i+1)*pi*x/L), (x, 0, L))

c_i = simplify(c_i)

print(c_i)

$$

\begin{equation}

c_i = 4 \frac{L^{2} \left(\left(-1\right)^{i} + 1\right)}{\pi^{3}

\left(i^{3} + 3 i^{2} + 3 i + 1\right)},\quad

u(x) = \sum_{k=0}^{N/2} \frac{8L^2}{\pi^3(2k+1)^3}\sinL{2k}\tp

\end{equation}

$$

Fast decay: \( c_2 = c_0/27 \), \( c_4=c_0/125 \) - only one term might be good enough:

$$

\begin{equation*} u(x) \approx \frac{8L^2}{\pi^3}\sin\left(\pi\frac{x}{L}\right)\tp \end{equation*}

$$

The Galerkin method; principle

\( R=u''+f \):

$$

\begin{equation*}

(u''+f,v)=0,\quad \forall v\in V,

\end{equation*}

$$

or

$$

\begin{equation}

(u'',v) = -(f,v),\quad\forall v\in V \end{equation}

$$

This is a variational formulation of the differential equation problem.

\( \forall v\in V \) means for all basis functions:

$$

\begin{equation}

(\sum_{j\in\If} c_j\baspsi_j'', \baspsi_i)=-(f,\baspsi_i),\quad i\in\If \end{equation}

$$

The Galerkin method; solution

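Since \( \baspsi_i''\propto \baspsi_i \), each Galerkin equation is just a constant multiple of the corresponding least squares equation, so Galerkin's method gives an equivalent linear system and the same solution as the least squares method (in this particular example).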
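The agreement between the two methods can be verified for \( f(x)=2 \). The sketch below assumes the sine basis (47) and uses that the Galerkin system is diagonal here; the function names are illustrative.

```python
from sympy import symbols, sin, pi, diff, integrate, simplify

x = symbols('x')
L = symbols('L', positive=True)
f = 2  # same right-hand side as in the least squares example

def psi(i):
    """Sine basis function psi_i(x) = sin((i+1)*pi*x/L)."""
    return sin((i + 1)*pi*x/L)

def galerkin_coefficient(i):
    """Solve (psi_i'', psi_i) c_i = -(f, psi_i); the system is diagonal."""
    A_ii = integrate(diff(psi(i), x, 2)*psi(i), (x, 0, L))
    b_i = -integrate(f*psi(i), (x, 0, L))
    return simplify(b_i/A_ii)

def least_squares_coefficient(i):
    """Formula (49) evaluated for the same f."""
    return simplify(2*L/(pi**2*(i + 1)**2)*integrate(f*psi(i), (x, 0, L)))

for i in range(4):
    print(i, galerkin_coefficient(i), least_squares_coefficient(i))
```

Both columns should coincide, with nonzero values only for even \( i \).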

The collocation method

\( R=0 \) (i.e., the differential equation) must be satisfied at \( N+1 \) points:

$$

\begin{equation}

-\sum_{j\in\If} c_j\baspsi_j''(\xno{i}) = f(\xno{i}),\quad i\in\If

\end{equation}

$$

This is a linear system \( \sum_j A_{i,j}c_j=b_i \) with entries

$$

\begin{equation*} A_{i,j}=-\baspsi_j''(\xno{i})=

(j+1)^2\pi^2L^{-2}\sin\left((j+1)\pi \frac{x_i}{L}\right),

\quad b_i=2

\end{equation*}

$$

Choose: \( N=0 \), \( x_0=L/2 \)

$$ c_0=2L^2/\pi^2 $$
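A numerical version of the collocation system may look as follows. This is a minimal sketch assuming the sine basis (47); the function `collocation` and the value `L = 1.0` are chosen for illustration.

```python
import numpy as np

def collocation(f, points, L):
    """Solve the collocation system for the sine basis on [0, L]."""
    points = np.asarray(points, dtype=float)
    j = np.arange(len(points))  # basis indices 0..N
    # A[i, j] = -psi_j''(x_i) = (j+1)^2 pi^2 / L^2 * sin((j+1) pi x_i / L)
    A = ((j + 1)**2*np.pi**2/L**2)*np.sin((j + 1)*np.pi*points[:, None]/L)
    b = np.array([f(xi) for xi in points], dtype=float)
    return np.linalg.solve(A, b)

L = 1.0
c = collocation(lambda x: 2.0, [L/2], L)  # N=0, x_0=L/2, f(x)=2
print(c[0], 2*L**2/np.pi**2)
```

The two printed numbers should agree. Passing more points to the same function gives the system for larger \( N \), provided the points make the matrix nonsingular.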

Comparison of the methods

- Exact solution: \( u(x)=x(L-x) \)

- Galerkin or least squares (\( N=0 \)): \( u(x)=8L^2\pi^{-3}\sin (\pi x/L) \)

- Collocation method (\( N=0 \)): \( u(x)=2L^2\pi^{-2}\sin (\pi x/L) \).

- Error at the midpoint \( x=L/2 \) in Galerkin/least sq.: \( -0.008L^2 \)

- Error at the midpoint \( x=L/2 \) in collocation: \( 0.047L^2 \)
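The error figures can be reproduced with a few lines of numpy. The sketch below evaluates the error, defined as the exact solution minus the approximation, at the midpoint \( x=L/2 \), assuming \( L=1 \) so that the numbers are in units of \( L^2 \).

```python
import numpy as np

L = 1.0
x_mid = L/2

u_exact = x_mid*(L - x_mid)
u_galerkin = 8*L**2/np.pi**3*np.sin(np.pi*x_mid/L)
u_collocation = 2*L**2/np.pi**2*np.sin(np.pi*x_mid/L)

# Error = exact solution minus one-term approximation
e_galerkin = u_exact - u_galerkin
e_collocation = u_exact - u_collocation
print(round(e_galerkin, 3), round(e_collocation, 3))
```

The Galerkin/least squares approximation slightly overshoots the exact solution at the midpoint, while the collocation approximation undershoots it by a larger amount.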

Useful techniques

Integration by parts

Second-order derivatives will hereafter be integrated by parts

$$

\begin{align}

\int_0^L u''(x)v(x) dx &= - \int_0^Lu'(x)v'(x)dx

+ [vu']_0^L\nonumber\\

&= - \int_0^Lu'(x)v'(x) dx

+ u'(L)v(L) - u'(0)v(0)

\tag{50}

\end{align}

$$

Motivation:

- Lowers the order of derivatives

- Gives more symmetric forms (incl. matrices)

- Enables easy handling of Neumann boundary conditions

- Finite element basis functions \( \basphi_i \) have discontinuous derivatives (at cell boundaries) and are not suited for terms with \( \basphi_i'' \)
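Formula (50) can be checked on a concrete pair of functions. The polynomials `u` and `v` below are arbitrary choices made for this sketch.

```python
from sympy import symbols, diff, integrate, simplify

x = symbols('x')
L = symbols('L', positive=True)

# Arbitrary smooth test functions (illustrative only)
u = x**3 - 2*x
v = x**2 + 1

lhs = integrate(diff(u, x, 2)*v, (x, 0, L))
boundary = (diff(u, x)*v).subs(x, L) - (diff(u, x)*v).subs(x, 0)
rhs = -integrate(diff(u, x)*diff(v, x), (x, 0, L)) + boundary
print(simplify(lhs - rhs))
```

The printed difference should be zero, confirming that the second-order derivative has been traded for first-order derivatives plus boundary terms.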

Boundary function; principles

- What about nonzero Dirichlet conditions? Say \( u(L)=D \)

- We always require \( \baspsi_i(L)=0 \) (i.e., \( \baspsi_i=0 \) where Dirichlet conditions apply)

- Problem: \( u(L) = \sum_j c_j\baspsi_j(L)=\sum_j c_j\cdot 0=0\neq D \) - always

- Solution: \( u(x) = B(x) + \sum_j c_j\baspsi_j(x) \)

- \( B(x) \): user-constructed boundary function that fulfills the Dirichlet conditions

- If \( u(L)=D \), \( B(L)=D \)

- No restrictions on how \( B(x) \) varies in the interior of \( \Omega \)

Boundary function; example (1)

Dirichlet conditions: \( u(0)=C \) and \( u(L)=D \). Choose for example

$$ B(x) = \frac{1}{L}(C(L-x) + Dx):\qquad B(0)=C,\ B(L)=D $$

$$

\begin{equation}

u(x) = B(x) + \sum_{j\in\If} c_j\baspsi_j(x),

\tag{51}

\end{equation}

$$

$$ u(0) = B(0)= C,\quad u(L) = B(L) = D $$
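That the expansion (51) fulfills both Dirichlet conditions for any choice of coefficients can be verified symbolically. The sketch assumes the sine basis (47) with three terms; the symbols `c0`, `c1`, `c2` are hypothetical coefficients.

```python
from sympy import symbols, sin, pi, simplify

x, C, D = symbols('x C D')
L = symbols('L', positive=True)
c = symbols('c0:3')  # hypothetical coefficients c0, c1, c2

# Linear boundary function with B(0)=C and B(L)=D
B = (C*(L - x) + D*x)/L
u = B + sum(c[j]*sin((j + 1)*pi*x/L) for j in range(3))

print(simplify(u.subs(x, 0)), simplify(u.subs(x, L)))
```

The output should be `C D`, independent of the coefficients, because every basis function vanishes at both end points.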

Boundary function; example (2)

Dirichlet condition: \( u(L)=D \). Choose for example

$$ B(x) = D:\qquad B(L)=D $$

$$

\begin{equation}

u(x) = B(x) + \sum_{j\in\If} c_j\baspsi_j(x),

\tag{51}

\end{equation}

$$

$$ u(L) = B(L) = D $$

Impact of the boundary function on the space where we seek the solution

- \( \sequencei{\baspsi} \) is a basis for \( V \)

- \( \sum_{j\in\If}c_j\baspsi_j(x)\in V \)

- But \( u\not\in V \)!

- Reason: say \( u(0)=C \) and \( u\in V \) (so that any \( v\in V \) has \( v(0)=C \)); then \( 2u\not\in V \) because \( 2u(0)=2C \)

- When \( u(x) = B(x) + \sum_{j\in\If}c_j\baspsi_j(x) \), \( B \neq 0 \), \( B\not\in V \) (in general) and \( u\not\in V \), but \( (u-B)\in V \) since \( \sum_{j}c_j\baspsi_j\in V \)

Abstract notation for variational formulations

The finite element literature (and much FEniCS documentation) applies an abstract notation for the variational formulation:

$$ a(u,v) = L(v)\quad \forall v\in V $$

Example on abstract notation

$$ -u''=f, \quad u'(0)=C,\ u(L)=D,\quad u=D + \sum_jc_j\baspsi_j$$

Variational formulation:

$$

\int_{\Omega} u' v'dx = \int_{\Omega} fvdx\quad - v(0)C