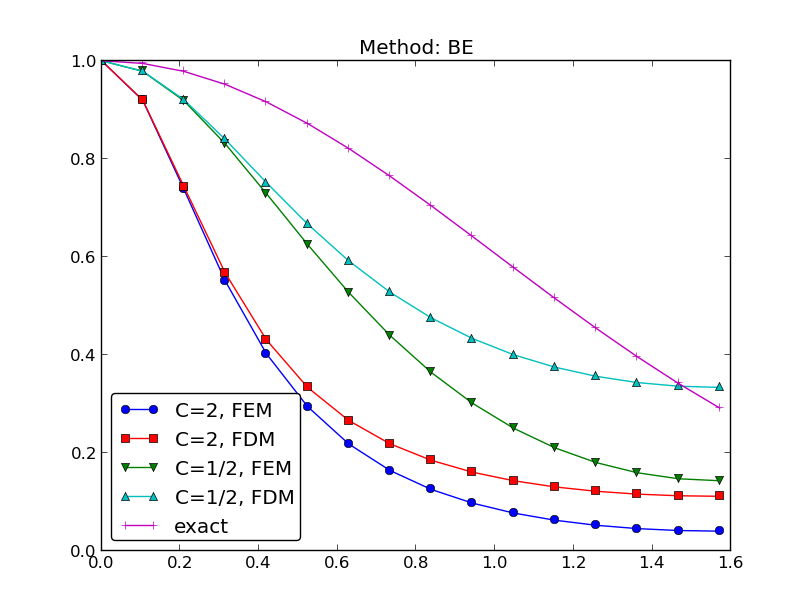

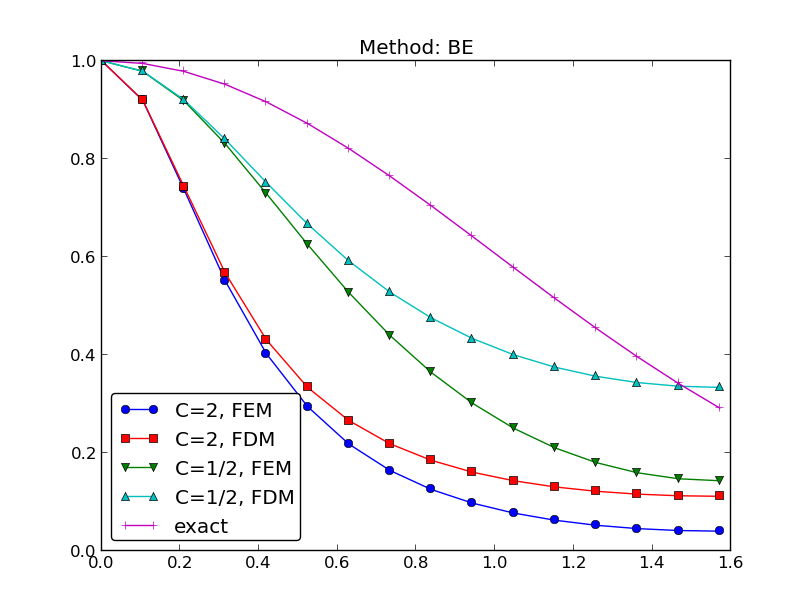

Figure 48: Comparison of coarse-mesh amplification factors for Backward Euler discretization of a 1D diffusion equation.

The finite element method is normally used for discretization in space. There are two alternative strategies for performing a discretization in time:

We can apply a finite difference method in time to (102). First we need a mesh in time, here taken as uniform with mesh points \( t_n = n\Delta t \), \( n=0,1,\ldots,N_t \). A Forward Euler scheme consists of sampling (102) at \( t_n \) and approximating the time derivative by a forward difference \( [D_t^+ u]^n\approx (u^{n+1}-u^n)/\Delta t \). This approximation turns (102) into a differential equation that is discrete in time, but still continuous in space. With a finite difference operator notation we can write the time-discrete problem as $$ \begin{equation} [D_t^+ u = \dfc\nabla^2 u + f]^n, \tag{105} \end{equation} $$ for \( n=0,1,\ldots,N_t-1 \). Writing this equation out in detail and isolating the unknown \( u^{n+1} \) on the left-hand side demonstrates that the time-discrete problem is a recursive set of problems that are continuous in space: $$ \begin{equation} u^{n+1} = u^n + \Delta t \left( \dfc\nabla^2 u^n + f(\x, t_n)\right) \tag{106} \tp \end{equation} $$ Given \( u^0=I \), we can use (106) to compute \( u^1,u^2,\dots,u^{N_t} \).

For absolute clarity in the various stages of the discretizations, we introduce \( \uex(\x,t) \) as the exact solution of the space- and time-continuous partial differential equation (102) and \( \uex^n(\x) \) as the time-discrete approximation, arising from the finite difference method in time (105). More precisely, \( \uex \) fulfills $$ \begin{equation} \frac{\partial \uex}{\partial t} = \dfc\nabla^2 \uex + f(\x, t), \tag{107} \end{equation} $$ while \( \uex^{n+1} \), with a superscript, is the solution of the time-discrete equations $$ \begin{equation} \uex^{n+1} = \uex^n + \Delta t \left( \dfc\nabla^2 \uex^n + f(\x, t_n)\right) \tag{108} \tp \end{equation} $$

We now introduce a finite element approximation to \( \uex^n \) and \( \uex^{n+1} \) in (108), where the coefficients depend on the time level: $$ \begin{align} \uex^n &\approx u^n = \sum_{j=0}^{N} c_j^{n}\baspsi_j(\x), \tag{109}\\ \uex^{n+1} &\approx u^{n+1} = \sum_{j=0}^{N} c_j^{n+1}\baspsi_j(\x) \tag{110} \tp \end{align} $$ Note that, as before, \( N \) denotes the number of degrees of freedom in the spatial domain. The number of time points is denoted by \( N_t \). We define a space \( V \) spanned by the basis functions \( \sequencei{\baspsi} \).

A weighted residual method with weighting functions \( w_i \) can now be formulated. We insert (109) and (110) in (108) to obtain the residual $$ R = u^{n+1} - u^n - \Delta t \left( \dfc\nabla^2 u^n + f(\x, t_n)\right) \tp $$ The weighted residual principle, $$ \int_\Omega Rw\dx = 0,\quad \forall w\in W,$$ results in $$ \int_\Omega \left\lbrack u^{n+1} - u^n - \Delta t \left( \dfc\nabla^2 u^n + f(\x, t_n)\right) \right\rbrack w \dx =0, \quad\forall w \in W\tp $$ From now on we use the Galerkin method so \( W=V \). Isolating the unknown \( u^{n+1} \) on the left-hand side gives $$ \int_{\Omega} u^{n+1}v\dx = \int_{\Omega} \left\lbrack u^n + \Delta t \left( \dfc\nabla^2 u^n + f(\x, t_n)\right) \right\rbrack v\dx,\quad \forall v\in V \tp $$

As usual in spatial finite element problems involving second-order derivatives, we apply integration by parts on the term \( \int (\nabla^2 u^n)v\dx \): $$ \int_{\Omega}\dfc(\nabla^2 u^n)v \dx = -\int_{\Omega}\dfc\nabla u^n\cdot\nabla v\dx + \int_{\partial\Omega}\dfc\frac{\partial u^n}{\partial n}v \ds \tp $$ The last term vanishes because we have the Neumann condition \( \partial u^n/\partial n=0 \) for all \( n \). Our discrete problem in space and time then reads $$ \begin{equation} \int_{\Omega} u^{n+1}v\dx = \int_{\Omega} u^n v\dx - \Delta t \int_{\Omega}\dfc\nabla u^n\cdot\nabla v\dx + \Delta t\int_{\Omega}f^n v\dx,\quad \forall v\in V\tp \tag{111} \end{equation} $$ This is the variational formulation of our recursive set of spatial problems.

As in stationary problems, we can introduce a boundary function \( B(\x,t) \) to take care of nonzero Dirichlet conditions: $$ \begin{align} \uex^n &\approx u^n = B(\x,t_n) + \sum_{j=0}^{N} c_j^{n}\baspsi_j(\x), \tag{112}\\ \uex^{n+1} &\approx u^{n+1} = B(\x,t_{n+1}) + \sum_{j=0}^{N} c_j^{n+1}\baspsi_j(\x) \tag{113} \tp \end{align} $$

In a program it is only necessary to store \( u^{n+1} \) and \( u^n \) at the same time. We therefore drop the \( n \) index in programs and work with two functions: u for \( u^{n+1} \), the new unknown, and u_1 for \( u^n \), the solution at the previous time level. This is also convenient in the mathematics to maximize the correspondence with the code. From now on \( u_1 \) means the discrete unknown at the previous time level (\( u^{n} \)) and \( u \) represents the discrete unknown at the new time level (\( u^{n+1} \)).

Equation (111) with this new naming convention is expressed as $$ \begin{equation} \int_{\Omega} u v\dx = \int_{\Omega} u_1 v\dx - \Delta t \int_{\Omega}\dfc\nabla u_1\cdot\nabla v\dx + \Delta t\int_{\Omega}f^n v\dx \tp \tag{114} \end{equation} $$

This variational form can alternatively be expressed by the inner product notation: $$ \begin{equation} (u,v) = (u_1,v) - \Delta t (\dfc\nabla u_1,\nabla v) + \Delta t (f^n, v) \tp \tag{115} \end{equation} $$

To derive the equations for the new unknown coefficients \( c_j^{n+1} \), now just called \( c_j \), we insert $$ u = \sum_{j=0}^{N}c_j\baspsi_j(\x),\quad u_1 = \sum_{j=0}^{N} c_{1,j}\baspsi_j(\x)$$ in (114) or (115), let the equation hold for all \( v=\baspsi_i \), \( i=0,\ldots,N \), and order the terms as matrix-vector products: $$ \begin{equation} \sum_{j=0}^{N} (\baspsi_i,\baspsi_j) c_j = \sum_{j=0}^{N} (\baspsi_i,\baspsi_j) c_{1,j} -\Delta t \sum_{j=0}^{N} (\nabla\baspsi_i,\dfc\nabla\baspsi_j) c_{1,j} + \Delta t(f^n,\baspsi_i),\quad i=0,\ldots,N \tp \end{equation} $$ This is a linear system \( \sum_j A_{i,j}c_j = b_i \) with $$ A_{i,j} = (\baspsi_i,\baspsi_j) $$ and $$ b_i = \sum_{j=0}^{N} (\baspsi_i,\baspsi_j) c_{1,j} -\Delta t \sum_{j=0}^{N} (\nabla\baspsi_i,\dfc\nabla\baspsi_j) c_{1,j} + \Delta t(f^n,\baspsi_i)\tp $$

It is instructive and convenient for implementations to write the linear system in the form $$ \begin{equation} Mc = Mc_1 - \Delta t Kc_1 + \Delta t f, \end{equation} $$ where $$ \begin{align*} M &= \{M_{i,j}\},\quad M_{i,j}=(\baspsi_i,\baspsi_j),\quad i,j\in\If,\\ K &= \{K_{i,j}\},\quad K_{i,j}=(\nabla\baspsi_i,\dfc\nabla\baspsi_j), \quad i,j\in\If,\\ f &= \{(f(\x,t_n),\baspsi_i)\}_{i\in\If},\\ c &= \{c_i\}_{i\in\If},\\ c_1 &= \{c_{1,i}\}_{i\in\If} \tp \end{align*} $$

We realize that \( M \) is the matrix arising from a term with the zero-th derivative of \( u \) and is called the mass matrix, while \( K \) is the matrix arising from a Laplace term \( \nabla^2 u \). The \( K \) matrix is often known as the stiffness matrix. (The terms mass and stiffness stem from the early days of finite elements when applications to vibrating structures dominated. The mass matrix arises from the mass times acceleration term in Newton's second law, while the stiffness matrix arises from the elastic forces in that law. The mass and stiffness matrices appearing in a diffusion problem have slightly different mathematical formulas.)

Remark. The mathematical symbol \( f \) has two meanings, either the function \( f(\x,t) \) in the PDE or the \( f \) vector in the linear system to be solved at each time level. The symbol \( u \) also has different meanings, basically the unknown in the PDE or the finite element function representing the unknown at a time level. The actual meaning should be evident from the context.

We observe that \( M \) and \( K \) can be precomputed so that we can avoid computing the matrix entries at every time level. Instead, some matrix-vector multiplications will produce the linear system to be solved. The computational algorithm has the following steps:
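One way to realize such an algorithm is sketched below. It is a minimal sketch under stated assumptions, not a definitive implementation: we assume P1 elements on a uniform 1D mesh, dense NumPy matrices, and a source vector that enters scaled by \( \Delta t \) as in (114). The names `assemble_p1_matrices`, `forward_euler_fem`, and `source` are hypothetical and introduced only for illustration.

```python
import numpy as np

def assemble_p1_matrices(num_nodes, h, alpha):
    """Assemble dense P1 mass (M) and stiffness (K) matrices on a uniform 1D mesh."""
    M = np.zeros((num_nodes, num_nodes))
    K = np.zeros((num_nodes, num_nodes))
    M_e = h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])        # element mass matrix
    K_e = alpha / h * np.array([[1.0, -1.0], [-1.0, 1.0]])    # element stiffness matrix
    for e in range(num_nodes - 1):
        M[e:e + 2, e:e + 2] += M_e
        K[e:e + 2, e:e + 2] += K_e
    return M, K

def forward_euler_fem(c0, M, K, dt, num_steps, source=None):
    """Advance M c = M c_1 - dt K c_1 + dt f for num_steps time levels."""
    c_1 = np.array(c0, dtype=float)
    for n in range(num_steps):
        b = M @ c_1 - dt * (K @ c_1)       # only matrix-vector products per step
        if source is not None:
            b += dt * source(n * dt)       # source(t) returns the vector {(f, psi_i)}
        c = np.linalg.solve(M, b)          # M is precomputed and never changes
        c_1 = c                            # new solution becomes the previous one
    return c_1

# Demo (assumed data): a cosine initial condition with pure Neumann conditions.
L, num_nodes, alpha = 1.0, 11, 1.0
h = L / (num_nodes - 1)
dt = 0.1 * h**2 / alpha                    # corresponds to C = alpha*dt/h**2 = 0.1
x = np.linspace(0, L, num_nodes)
M, K = assemble_p1_matrices(num_nodes, h, alpha)
c0 = np.cos(np.pi * x / L)
c = forward_euler_fem(c0, M, K, dt, num_steps=50)
```

In a production code one would typically store \( M \) and \( K \) in a sparse format and factorize \( M \) once; the dense solve is used here only to keep the sketch short.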

We can compute the \( M \) and \( K \) matrices using P1 elements in 1D. A uniform mesh on \( [0,L] \) is introduced for this purpose. Since the boundary conditions are solely of Neumann type in this sample problem, we have no restrictions on the basis functions \( \baspsi_i \) and can simply choose \( \baspsi_i = \basphi_i \), \( i=0,\ldots,N=N_n \).

From the section Computation in the global physical domain or Cellwise computations we have that the \( K \) matrix gives the same expression as the finite difference method: \( (Kc)_i \) corresponds to \( -h\dfc[D_xD_x u]^n_i \), while from the section Finite difference interpretation of a finite element approximation we know that \( M \) can be interpreted as the finite difference approximation \( [u + \frac{1}{6}h^2D_xD_x u]^n_i \) (times \( h \)). The equation system \( Mc=b \) in the algorithm is therefore equivalent to the finite difference scheme $$ \begin{equation} [D_t^+(u + \frac{1}{6}h^2D_xD_x u) = \dfc D_xD_x u + f]^n_i \tag{116} \tp \end{equation} $$ (More precisely, \( Mc=b \) divided by \( h \) gives the equation above.)

By applying Trapezoidal integration one can turn \( M \) into a diagonal matrix with \( (h/2,h,\ldots,h,h/2) \) on the diagonal. Then there is no need to solve a linear system at each time level, and the finite element scheme becomes identical to a standard finite difference method $$ \begin{equation} [D_t^+ u = \dfc D_xD_x u + f]^n_i \tag{117} \tp \end{equation} $$

The Trapezoidal integration is not as accurate as exact integration and therefore introduces an error. Whether this error has a good or bad influence on the overall numerical method is not immediately obvious, and is analyzed in detail in the section Analysis of the discrete equations. The effect of the error is at least not more severe than what is produced by the finite difference method.

Making \( M \) diagonal is usually referred to as lumping the mass matrix. There is an alternative method to using an integration rule based on the node points: one can sum the entries in each row, place the sum on the diagonal, and set all other entries in the row equal to zero. For P1 elements the methods of lumping the mass matrix give the same result.
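The equivalence of the two lumping strategies for P1 elements can be checked numerically. The snippet below is a small sketch assuming a uniform 1D mesh; the helper name `p1_mass_matrix` and the chosen mesh size are illustrative assumptions.

```python
import numpy as np

def p1_mass_matrix(num_nodes, h):
    """Assemble the consistent (exactly integrated) P1 mass matrix on a uniform mesh."""
    M = np.zeros((num_nodes, num_nodes))
    M_e = h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])
    for e in range(num_nodes - 1):
        M[e:e + 2, e:e + 2] += M_e
    return M

num_nodes, h = 5, 0.25
M = p1_mass_matrix(num_nodes, h)

# Lumping by row sum: put the sum of each row on the diagonal.
M_rowsum = np.diag(M.sum(axis=1))

# Lumping by Trapezoidal integration: (h/2, h, ..., h, h/2) on the diagonal.
trapezoidal_diag = np.full(num_nodes, h)
trapezoidal_diag[[0, -1]] = h / 2.0
M_trapezoidal = np.diag(trapezoidal_diag)
```

With a diagonal mass matrix, solving \( Mc=b \) reduces to an elementwise division of \( b \) by the diagonal.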

The Backward Euler scheme in time applied to our diffusion problem can be expressed as follows using the finite difference operator notation: $$ [D_t^- u = \dfc\nabla^2 u + f(\x, t)]^n \tp $$ Written out, and collecting the unknown \( \uex^n \) on the left-hand side and all the known terms on the right-hand side, the time-discrete differential equation becomes $$ \begin{equation} \uex^{n} - \Delta t \dfc\nabla^2 \uex^n = \uex^{n-1} + \Delta t f(\x, t_{n}) \tag{118} \tp \end{equation} $$ Equation (118) can compute \( \uex^1,\uex^2,\dots,\uex^{N_t} \), if we have a start \( \uex^0=I \) from the initial condition. However, (118) is a partial differential equation in space and needs a solution method based on discretization in space. For this purpose we use an expansion as in (109)-(110).

Inserting (109)-(110) in (118), multiplying by \( \baspsi_i \) (or \( v\in V \)), and integrating by parts, as we did in the Forward Euler case, results in the variational form $$ \begin{equation} \int_{\Omega} \left( u^{n}v + \Delta t \dfc\nabla u^n\cdot\nabla v\right)\dx = \int_{\Omega} u^{n-1} v\dx + \Delta t\int_{\Omega}f^n v\dx,\quad\forall v\in V \tag{119} \tp \end{equation} $$ Expressed with \( u \) as \( u^n \) and \( u_1 \) as \( u^{n-1} \), this becomes $$ \begin{equation} \int_{\Omega} \left( uv + \Delta t \dfc\nabla u\cdot\nabla v\right)\dx = \int_{\Omega} u_1 v\dx + \Delta t\int_{\Omega}f^n v\dx, \tag{120} \end{equation} $$ or with the more compact inner product notation, $$ \begin{equation} (u,v) + \Delta t (\dfc\nabla u,\nabla v) = (u_1,v) + \Delta t (f^n,v) \tag{121} \tp \end{equation} $$

Inserting \( u=\sum_j c_j\baspsi_j \) and \( u_1=\sum_j c_{1,j}\baspsi_j \), and choosing \( v \) to be the basis functions \( \baspsi_i\in V \), \( i=0,\ldots,N \), together with doing some algebra, leads to the following linear system to be solved at each time level: $$ \begin{equation} (M + \Delta t K)c = Mc_1 + \Delta t f, \tag{122} \end{equation} $$ where \( M \), \( K \), and \( f \) are as in the Forward Euler case. This time we really have to solve a linear system at each time level. The computational algorithm goes as follows.
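A possible realization of such a Backward Euler time loop is sketched here. It is a minimal sketch that assumes P1 elements on a uniform 1D mesh, dense NumPy matrices, and the source vector scaled by \( \Delta t \) in accordance with (121). The function names and the demo data are hypothetical.

```python
import numpy as np

def assemble_p1_matrices(num_nodes, h, alpha):
    """Assemble dense P1 mass (M) and stiffness (K) matrices on a uniform 1D mesh."""
    M = np.zeros((num_nodes, num_nodes))
    K = np.zeros((num_nodes, num_nodes))
    M_e = h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])
    K_e = alpha / h * np.array([[1.0, -1.0], [-1.0, 1.0]])
    for e in range(num_nodes - 1):
        M[e:e + 2, e:e + 2] += M_e
        K[e:e + 2, e:e + 2] += K_e
    return M, K

def backward_euler_fem(c0, M, K, dt, num_steps, source=None):
    """Advance (M + dt K) c = M c_1 + dt f for num_steps time levels."""
    A = M + dt * K                          # coefficient matrix, formed once
    c_1 = np.array(c0, dtype=float)
    for n in range(1, num_steps + 1):
        b = M @ c_1
        if source is not None:
            b += dt * source(n * dt)        # source evaluated at the new level t_n
        c_1 = np.linalg.solve(A, b)         # a linear system at every time level
    return c_1

# Demo (assumed data): coarse time step, C = alpha*dt/h**2 = 2.
L, num_nodes, alpha = 1.0, 11, 1.0
h = L / (num_nodes - 1)
dt = 2.0 * h**2 / alpha
x = np.linspace(0, L, num_nodes)
M, K = assemble_p1_matrices(num_nodes, h, alpha)
c0 = np.cos(np.pi * x / L)
c = backward_euler_fem(c0, M, K, dt, num_steps=20)
```

Since \( A=M+\Delta t K \) is constant in time when \( \Delta t \) is fixed, a factorization of \( A \) could be computed once and reused; the sketch calls a dense solver in each step only for brevity.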

We know what kind of finite difference operators the \( M \) and \( K \) matrices correspond to (after dividing by \( h \)), so (122) can be interpreted as the following finite difference method: $$ \begin{equation} [D_t^-(u + \frac{1}{6}h^2D_xD_x u) = \dfc D_xD_x u + f]^n_i \tag{123} \tp \end{equation} $$

The mass matrix \( M \) can be lumped, as explained in the section Comparing P1 elements with the finite difference method, and then the linear system arising from the finite element method with P1 elements corresponds to a plain Backward Euler finite difference method for the diffusion equation: $$ \begin{equation} [D_t^- u = \dfc D_xD_x u + f]^n_i \tag{124} \tp \end{equation} $$

Suppose now that the boundary condition (104) is replaced by a mixed Neumann and Dirichlet condition, $$ \begin{align} u(\x,t) &= u_0(\x,t),\quad & \x\in\partial\Omega_D,\\ -\dfc\frac{\partial}{\partial n} u(\x,t) &= g(\x,t),\quad & \x\in\partial{\Omega}_N\tp \end{align} $$

Using a Forward Euler discretization in time, the variational form at a time level becomes $$ \begin{equation} \int_\Omega u^{n+1}v\dx = \int_\Omega (u^n v - \Delta t\dfc\nabla u^n\cdot\nabla v)\dx + \Delta t\int_\Omega fv\dx - \Delta t\int_{\partial\Omega_N} gv\ds,\quad \forall v\in V\tp \end{equation} $$

The Dirichlet condition \( u=u_0 \) at \( \partial\Omega_D \) can be incorporated through a boundary function \( B(\x,t)=u_0(\x,t) \) and demanding that \( v=0 \) at \( \partial\Omega_D \). The expansion for \( u^n \) is written as $$ u^n(\x) = u_0(\x,t_n) + \sum_{j\in\If}c_j^n\baspsi_j(\x)\tp$$ Inserting this expansion in the variational formulation and letting it hold for all basis functions \( \baspsi_i \) leads to the linear system $$ \begin{align*} \sum_{j\in\If} \left(\int_\Omega \baspsi_i\baspsi_j\dx\right) c^{n+1}_j &= \sum_{j\in\If} \left(\int_\Omega\left( \baspsi_i\baspsi_j - \Delta t\dfc\nabla \baspsi_i\cdot\nabla\baspsi_j\right)\dx\right) c_j^n - \\ &\quad \int_\Omega\left( (u_0(\x,t_{n+1}) - u_0(\x,t_n))\baspsi_i + \Delta t\dfc\nabla u_0(\x,t_n)\cdot\nabla \baspsi_i\right)\dx \\ & \quad + \Delta t\int_\Omega f\baspsi_i\dx - \Delta t\int_{\partial\Omega_N} g\baspsi_i\ds, \quad i\in\If\tp \end{align*} $$ In the following, we adopt the convention that the unknowns \( c_j^{n+1} \) are written as \( c_j \), while the known \( c_j^n \) from the previous time level are denoted by \( c_{1,j} \).

When using finite elements, each basis function \( \basphi_i \) is associated with a node \( \xno{i} \). We have a collection of nodes \( \{\xno{i}\}_{i\in\Ifb} \) on the boundary \( \partial\Omega_D \). Suppose \( U_k^n \) is the known Dirichlet value at \( \xno{k} \) at time \( t_n \) (\( U_k^n=u_0(\xno{k},t_n) \)). The appropriate boundary function is then $$ B(\x,t_n)=\sum_{j\in\Ifb} U_j^n\basphi_j\tp$$ The unknown coefficients \( c_j \) are associated with the rest of the nodes, which have numbers \( \nu(i) \), \( i\in\If = \{0,\ldots,N\} \). The basis functions for \( V \) are chosen as \( \baspsi_i = \basphi_{\nu(i)} \), \( i\in\If \), and all of these vanish at the boundary nodes as they should. The expansions for \( u^{n+1} \) and \( u^n \) become $$ \begin{align*} u^n &= \sum_{j\in\Ifb} U_j^n\basphi_j + \sum_{j\in\If}c_{1,j}\basphi_{\nu(j)},\\ u^{n+1} &= \sum_{j\in\Ifb} U_j^{n+1}\basphi_j + \sum_{j\in\If}c_{j}\basphi_{\nu(j)}\tp \end{align*} $$ The equations for the unknown coefficients \( c_i \) become $$ \begin{align*} \sum_{j\in\If} \left(\int_\Omega \basphi_i\basphi_j\dx\right) c_j &= \sum_{j\in\If} \left(\int_\Omega\left( \basphi_i\basphi_j - \Delta t\dfc\nabla \basphi_i\cdot\nabla\basphi_j\right)\dx\right) c_{1,j} - \\ &\quad \sum_{j\in\Ifb}\int_\Omega\left( \basphi_i\basphi_j(U_j^{n+1} - U_j^n) + \Delta t\dfc\nabla \basphi_i\cdot\nabla \basphi_jU_j^n\right)\dx \\ &\quad + \Delta t\int_\Omega f\basphi_i\dx - \Delta t\int_{\partial\Omega_N} g\basphi_i\ds, \quad i\in\If\tp \end{align*} $$

Instead of introducing a boundary function \( B \) we can work with basis functions associated with all the nodes and incorporate the Dirichlet conditions by modifying the linear system. Let \( \If \) be the index set that counts all the nodes: \( \{0,1,\ldots,N=N_n\} \). The expansion for \( u^n \) is then \( \sum_{j\in\If}c^n_j\basphi_j \) and the variational form becomes $$ \begin{align*} \sum_{j\in\If} \left(\int_\Omega \basphi_i\basphi_j\dx\right) c_j &= \sum_{j\in\If} \left(\int_\Omega\left( \basphi_i\basphi_j - \Delta t\dfc\nabla \basphi_i\cdot\nabla\basphi_j\right)\dx\right) c_{1,j} \\ &\quad + \Delta t\int_\Omega f\basphi_i\dx - \Delta t\int_{\partial\Omega_N} g\basphi_i\ds\tp \end{align*} $$ We introduce the matrices \( M \) and \( K \) with entries \( M_{i,j}=\int_\Omega\basphi_i\basphi_j\dx \) and \( K_{i,j}=\int_\Omega\dfc\nabla\basphi_i\cdot\nabla\basphi_j\dx \), respectively. In addition, we define the vectors \( c \), \( c_1 \), and \( f \) with entries \( c_i \), \( c_{1,i} \), and \( \int_\Omega f\basphi_i\dx - \int_{\partial\Omega_N}g\basphi_i\ds \). The equation system can then be written as $$ \begin{equation} Mc = Mc_1 - \Delta t Kc_1 + \Delta t f\tp \end{equation} $$ When \( M \), \( K \), and \( b \) are assembled without paying attention to Dirichlet boundary conditions, we need to replace equation \( k \) by \( c_k=U_k \) for \( k \) corresponding to all boundary nodes (\( k\in\Ifb \)). The modification of \( M \) consists in setting \( M_{k,j}=0 \), \( j\in\If \), and then \( M_{k,k}=1 \). Alternatively, a modification that preserves the symmetry of \( M \) can be applied. At each time level one forms \( b = Mc_1 - \Delta t Kc_1 + \Delta t f \) and sets \( b_k=U^{n+1}_k \), \( k\in\Ifb \), and solves the system \( Mc=b \).

In case of a Backward Euler method, the system becomes (122). We can write the system as \( Ac=b \), with \( A=M + \Delta t K \) and \( b = Mc_1 + \Delta t f \). Both \( M \) and \( K \) need to be modified because of the Dirichlet boundary conditions: row \( k \) is set to zero in both matrices for \( k\in\Ifb \), except that the diagonal entry \( M_{k,k} \) is set to unity. In this way, \( A_{k,k}=1 \). The right-hand side must read \( b_k=U^n_k \) for \( k\in\Ifb \) (assuming the unknown is sought at time level \( t_n \)).

We shall address the one-dimensional initial-boundary value problem $$ \begin{align} u_t &= (\dfc u_x)_x + f,\quad & \x\in\Omega =[0,L],\ t\in (0,T], \tag{125} \\ u(x,0) &= 0,\quad & \x\in\Omega, \tag{126}\\ u(0,t) &= a\sin\omega t,\quad & t\in (0,T], \tag{127}\\ u_x(L,t) &= 0,\quad & t\in (0,T]\tp \tag{128} \end{align} $$ A physical interpretation may be that \( u \) is the temperature deviation from a constant mean temperature in a body \( \Omega \) that is subject to an oscillating temperature (e.g., day and night, or seasonal, variations) at \( x=0 \).

We use a Backward Euler scheme in time and P1 elements of constant length \( h \) in space. Incorporation of the Dirichlet condition at \( x=0 \) through modifying the linear system at each time level means that we carry out the computations as explained in the section Discretization in time by a Backward Euler scheme and get a system (122). The \( M \) and \( K \) matrices computed without paying attention to Dirichlet boundary conditions become $$ \begin{align} M &= \frac{h}{6} \left( \begin{array}{cccccccccc} 2 & 1 & 0 &\cdots & \cdots & \cdots & \cdots & \cdots & 0 \\ 1 & 4 & 1 & \ddots & & & & & \vdots \\ 0 & 1 & 4 & 1 & \ddots & & & & \vdots \\ \vdots & \ddots & & \ddots & \ddots & 0 & & & \vdots \\ \vdots & & \ddots & \ddots & \ddots & \ddots & \ddots & & \vdots \\ \vdots & & & 0 & 1 & 4 & 1 & \ddots & \vdots \\ \vdots & & & & \ddots & \ddots & \ddots &\ddots & 0 \\ \vdots & & & & &\ddots & 1 & 4 & 1 \\ 0 &\cdots & \cdots &\cdots & \cdots & \cdots & 0 & 1 & 2 \end{array} \right)\\ K &= \frac{\dfc}{h} \left( \begin{array}{cccccccccc} 1 & -1 & 0 &\cdots & \cdots & \cdots & \cdots & \cdots & 0 \\ -1 & 2 & -1 & \ddots & & & & & \vdots \\ 0 & -1 & 2 & -1 & \ddots & & & & \vdots \\ \vdots & \ddots & & \ddots & \ddots & 0 & & & \vdots \\ \vdots & & \ddots & \ddots & \ddots & \ddots & \ddots & & \vdots \\ \vdots & & & 0 & -1 & 2 & -1 & \ddots & \vdots \\ \vdots & & & & \ddots & \ddots & \ddots &\ddots & 0 \\ \vdots & & & & &\ddots & -1 & 2 & -1 \\ 0 &\cdots & \cdots &\cdots & \cdots & \cdots & 0 & -1 & 1 \end{array} \right) \end{align} $$ The right-hand side of the variational form contains \( Mc_1 \) since there is no source term (\( f \)) and no boundary term from the integration by parts (\( u_x=0 \) at \( x=L \) and we compute as if \( u_x=0 \) at \( x=0 \) too). We must incorporate the Dirichlet boundary condition \( c_0=a\sin\omega t_n \) by ensuring that this is the first equation in the linear system. 
To this end, the first rows in \( K \) and \( M \) are set to zero, but the diagonal entry \( M_{0,0} \) is set to 1. The right-hand side is \( b=Mc_1 \), and we set \( b_0 = a\sin\omega t_n \). Note that in this approach, \( N=N_n \), and \( c \) equals the unknown \( u \) at each node in the mesh. We can write the complete linear system as $$ \begin{align} c_0 &= a\sin\omega t_n,\\ \frac{h}{6}(c_{i-1} + 4c_i + c_{i+1}) + \Delta t\frac{\dfc}{h}(-c_{i-1} +2c_i - c_{i+1}) &= \frac{h}{6}(c_{1,i-1} + 4c_{1,i} + c_{1,i+1}),\\ &\qquad i=1,\ldots,N_n-1,\nonumber\\ \frac{h}{6}(c_{i-1} + 2c_i) + \Delta t\frac{\dfc}{h}(-c_{i-1} +c_i) &= \frac{h}{6}(c_{1,i-1} + 2c_{1,i}),\quad i=N_n\tp \end{align} $$
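The linear system above can be set up and solved as in the following sketch. It assumes dense NumPy matrices and modifies the first row of \( A=M+\Delta t K \) directly, which is equivalent to zeroing the first rows of \( M \) and \( K \) and setting \( M_{0,0}=1 \). The function name `solve_oscillating_boundary` and all parameter values are assumptions made for the illustration.

```python
import numpy as np

def solve_oscillating_boundary(L, num_nodes, alpha, a, omega, dt, num_steps):
    """Backward Euler + P1 elements with u(0,t) = a*sin(omega*t) and u_x(L,t) = 0."""
    h = L / (num_nodes - 1)
    M = np.zeros((num_nodes, num_nodes))
    K = np.zeros((num_nodes, num_nodes))
    M_e = h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])
    K_e = alpha / h * np.array([[1.0, -1.0], [-1.0, 1.0]])
    for e in range(num_nodes - 1):
        M[e:e + 2, e:e + 2] += M_e
        K[e:e + 2, e:e + 2] += K_e

    A = M + dt * K
    A[0, :] = 0.0            # replace the first equation ...
    A[0, 0] = 1.0            # ... by c_0 = a*sin(omega*t_n)

    c_1 = np.zeros(num_nodes)                 # initial condition u(x,0) = 0
    for n in range(1, num_steps + 1):
        b = M @ c_1                           # unmodified M on the right-hand side
        b[0] = a * np.sin(omega * n * dt)     # Dirichlet value at the new time level
        c_1 = np.linalg.solve(A, b)
    return c_1

c = solve_oscillating_boundary(L=1.0, num_nodes=21, alpha=1.0, a=1.0,
                               omega=2 * np.pi, dt=0.01, num_steps=30)
```

After the solve, `c[0]` holds the prescribed boundary value at the final time level, while the remaining entries are the computed nodal values.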

The Dirichlet boundary condition can alternatively be implemented through a boundary function \( B(x,t)=a\sin\omega t\,\basphi_0(x) \): $$ u^n(x) = a\sin\omega t_n\basphi_0(x) + \sum_{j\in\If} c_j\basphi_{\nu(j)}(x),\quad \nu(j) = j+1\tp$$ Now, \( N=N_n-1 \) and the \( c \) vector contains values of \( u \) at nodes \( 1,2,\ldots,N_n \). Moving the known boundary function terms in the Backward Euler variational form to the right-hand side gives the contribution $$ \begin{equation} -\int_0^L \left( a(\sin\omega t_n - \sin\omega t_{n-1})\basphi_0\basphi_i + \Delta t\dfc a\sin\omega t_n\nabla\basphi_0\cdot\nabla\basphi_i\right)\dx \tp \tag{129} \end{equation} $$

The diffusion equation \( u_t = \dfc u_{xx} \) allows a (Fourier) wave component \( u=\exp{(\beta t + ikx)} \) as solution if \( \beta = -\dfc k^2 \), which follows from inserting the wave component in the equation. The exact wave component can alternatively be written as $$ \begin{equation} u = \Aex^n e^{ikx},\quad \Aex = e^{-\dfc k^2\Delta t}\tp \tag{130} \end{equation} $$ Many numerical schemes for the diffusion equation have a similar wave component as solution: $$ \begin{equation} u^n_q = A^n e^{ikx}, \tag{131} \end{equation} $$ where \( A \) is an amplification factor to be calculated by inserting (132) in the scheme. We introduce \( x=qh \), or \( x=q\Delta x \) to align the notation with that frequently used in finite difference methods.

A convenient start of the calculations is to establish some results for various finite difference operators acting on $$ \begin{equation} u^n_q = A^n e^{ikq\Delta x}\tp \tag{132} \end{equation} $$ The results are $$ \begin{align*} [D_t^+ A^n e^{ikq\Delta x}]^n &= A^n e^{ikq\Delta x}\frac{A-1}{\Delta t},\\ [D_t^- A^n e^{ikq\Delta x}]^n &= A^n e^{ikq\Delta x}\frac{1-A^{-1}}{\Delta t},\\ [D_t A^n e^{ikq\Delta x}]^{n+\half} &= A^{n+\half} e^{ikq\Delta x}\frac{A^{\half}-A^{-\half}}{\Delta t} = A^ne^{ikq\Delta x}\frac{A-1}{\Delta t},\\ [D_xD_x A^ne^{ikq\Delta x}]_q &= -A^n e^{ikq\Delta x}\frac{4}{\Delta x^2}\sin^2\left(\frac{k\Delta x}{2}\right)\tp \end{align*} $$
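These operator results are easy to verify numerically. The short check below is only a sketch: the values of \( k \), \( \Delta x \), \( \Delta t \), \( A \), \( q \), and \( n \) are arbitrary assumptions, and `u` is a hypothetical helper that evaluates the wave component (132).

```python
import numpy as np

# Arbitrary (assumed) sample values for the check.
k, dx, dt, A, q, n = 3.0, 0.1, 0.05, 0.8, 4, 2

def u(n, q):
    """Wave component u^n_q = A^n exp(i k q dx)."""
    return A**n * np.exp(1j * k * q * dx)

# Forward and backward differences in time.
Dt_plus = (u(n + 1, q) - u(n, q)) / dt
Dt_minus = (u(n, q) - u(n - 1, q)) / dt

# Centered second-order difference in space.
DxDx = (u(n, q + 1) - 2 * u(n, q) + u(n, q - 1)) / dx**2

# Closed-form expressions from the text.
Dt_plus_formula = u(n, q) * (A - 1) / dt
Dt_minus_formula = u(n, q) * (1 - 1 / A) / dt
DxDx_formula = -u(n, q) * 4 / dx**2 * np.sin(k * dx / 2)**2
```

Each difference quotient agrees with its closed-form counterpart to rounding error.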

We insert (132) in the Forward Euler scheme with P1 elements in space and \( f=0 \) (this type of analysis can only be carried out if \( f=0 \)), $$ \begin{equation} [D_t^+(u + \frac{1}{6}h^2D_xD_x u) = \dfc D_xD_x u]^n_q \tag{133} \tp \end{equation} $$ We have $$ [D_t^+D_xD_x Ae^{ikx}]^n_q = [D_t^+A]^n [D_xD_x e^{ikx}]_q = -A^ne^{ikq\Delta x} \frac{A-1}{\Delta t}\frac{4}{\Delta x^2}\sin^2 (\frac{k\Delta x}{2}) \tp $$ After dividing by the common factor \( A^ne^{ikq\Delta x} \), the term \( [D_t^+Ae^{ikx} + \frac{1}{6}\Delta x^2 D_t^+D_xD_x Ae^{ikx}]^n_q \) then reduces to $$ \frac{A-1}{\Delta t} - \frac{1}{6}\Delta x^2 \frac{A-1}{\Delta t} \frac{4}{\Delta x^2}\sin^2 (\frac{k\Delta x}{2}), $$ or $$ \frac{A-1}{\Delta t} \left(1 - \frac{2}{3}\sin^2 (k\Delta x/2)\right) \tp $$ Introducing \( p=k\Delta x/2 \) and \( C=\dfc\Delta t/\Delta x^2 \), the complete scheme becomes $$ (A-1) \left(1 - \frac{2}{3}\sin^2 p\right) = -4C\sin^2 p,$$ from which we find \( A \) to be $$ A = 1 - 4C\frac{\sin^2 p}{1 - \frac{2}{3}\sin^2 p} \tp $$

How does this \( A \) change the stability criterion compared to the Forward Euler finite difference scheme and centered differences in space? The stability criterion is \( |A|\leq 1 \), which here implies \( A\leq 1 \) and \( A\geq -1 \). The former is always fulfilled, while the latter leads to $$ 4C\frac{\sin^2 p}{1 - \frac{2}{3}\sin^2 p} \leq 2\tp $$ The factor \( \sin^2 p/(1 - \frac{2}{3}\sin^2 p) \) can be plotted for \( p\in [0,\pi/2] \), and the maximum value goes to 3 as \( p\rightarrow \pi/2 \). The worst case for stability therefore occurs for the shortest possible wave, \( p=\pi/2 \), and the stability criterion becomes $$ \begin{equation} C\leq \frac{1}{6}\quad\Rightarrow\quad \Delta t\leq \frac{\Delta x^2}{6\dfc}, \end{equation} $$ which is three times stricter than for the standard Forward Euler finite difference method for the diffusion equation, which demands \( C\leq 1/2 \). Lumping the mass matrix will, however, recover the finite difference method and therefore imply \( C\leq 1/2 \) for stability.
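The stability limits can be confirmed by sampling the amplification factors over \( p\in[0,\pi/2] \). The code below is a sketch; the function names and the sampling resolution are assumptions, and a small tolerance is added to absorb rounding errors at the limit itself.

```python
import numpy as np

def A_forward_euler_fem(C, p):
    """Amplification factor: Forward Euler in time, P1 elements (consistent mass)."""
    s = np.sin(p)**2
    return 1 - 4 * C * s / (1 - 2.0 / 3.0 * s)

def A_forward_euler_fdm(C, p):
    """Amplification factor: Forward Euler in time, centered differences (lumped mass)."""
    return 1 - 4 * C * np.sin(p)**2

p = np.linspace(0, np.pi / 2, 1001)

# The factor multiplying 4C in the finite element case; its maximum is at p = pi/2.
factor = np.sin(p)**2 / (1 - 2.0 / 3.0 * np.sin(p)**2)
max_factor = factor.max()

def is_stable(A_func, C, tol=1e-12):
    """Return True if |A| <= 1 for all sampled p values."""
    return bool(np.all(np.abs(A_func(C, p)) <= 1 + tol))
```

For instance, `is_stable(A_forward_euler_fem, 1/6)` and `is_stable(A_forward_euler_fdm, 1/2)` test the two schemes exactly at their respective limits.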

We can use the same approach and insert (132) in the Backward Euler scheme with P1 elements in space and \( f=0 \): $$ \begin{equation} [D_t^-(u + \frac{1}{6}h^2D_xD_x u) = \dfc D_xD_x u]^n_q \tag{134} \tp \end{equation} $$ Similar calculations as in the Forward Euler case lead to $$ (1-A^{-1}) \left(1 - \frac{2}{3}\sin^2 p\right) = -4C\sin^2 p,$$ and hence $$ A = \left( 1 + 4C\frac{\sin^2 p}{1 - \frac{2}{3}\sin^2 p}\right)^{-1} \tp $$

It is of interest to compare \( A \) and \( \Aex \) as functions of \( p \) for some \( C \) values. Figure 48 displays the amplification factors for the Backward Euler scheme corresponding to a coarse mesh with \( C=2 \) and a mesh at the stability limit of the Forward Euler scheme in the finite difference method, \( C=1/2 \). Figures 49 and 50 show how the accuracy increases with lower \( C \) values for the Forward Euler and Backward Euler schemes, respectively. The striking fact, however, is that the accuracy of the finite element method is significantly less than the finite difference method for the same value of \( C \). Lumping the mass matrix to recover the numerical amplification factor \( A \) of the finite difference method is therefore a good idea in this problem.
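The amplification factors can be tabulated or plotted with a few lines of code. The sketch below evaluates the exact factor and the two Backward Euler factors. It uses the fact that \( \dfc k^2\Delta t = 4Cp^2 \) when \( p=k\Delta x/2 \) and \( C=\dfc\Delta t/\Delta x^2 \), so that \( \Aex = e^{-4Cp^2} \). The function names and the sampled \( p \) values are assumptions.

```python
import numpy as np

def A_exact(C, p):
    """Exact amplification factor exp(-alpha k^2 dt), written in terms of C and p."""
    return np.exp(-4 * C * p**2)

def A_backward_euler_fem(C, p):
    """Backward Euler in time with P1 elements (consistent mass matrix)."""
    s = np.sin(p)**2
    return 1.0 / (1 + 4 * C * s / (1 - 2.0 / 3.0 * s))

def A_backward_euler_fdm(C, p):
    """Backward Euler in time with centered differences (lumped mass matrix)."""
    return 1.0 / (1 + 4 * C * np.sin(p)**2)

p = np.linspace(0, np.pi / 2, 5)
for C in (2.0, 0.5):
    print(f"C = {C}")
    for p_i, ex, fem, fdm in zip(p, A_exact(C, p),
                                 A_backward_euler_fem(C, p),
                                 A_backward_euler_fdm(C, p)):
        print(f"  p = {p_i:.3f}  exact = {ex:.4f}  FEM = {fem:.4f}  FDM = {fdm:.4f}")
```

Replacing the print loop by plotting commands gives curves that can be compared with the figures.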


Figure 49: Comparison of fine-mesh amplification factors for Forward Euler discretization of a 1D diffusion equation.

Figure 50: Comparison of fine-mesh amplification factors for Backward Euler discretization of a 1D diffusion equation.

Remaining tasks: