Note: VERY PRELIMINARY VERSION! (Expect lots of typos!)

Finite difference methods for waves on a string

A finite difference method

Step 1: Discretizing the Domain

Step 2: Fulfilling the equation at the mesh points

Step 3: Replacing derivatives by finite differences

Step 4: Formulating a Recursive Algorithm

Sketch of an implementation

Generalization: including a source term

The extended problem

Discretization

Constructing an exact solution of the discrete equations

Implementation

Making a solver function

Verification: exact quadratic solution

Visualization: animating \( u(x,t) \)

Visualization via SciTools

Making movie files

Skipping frames for animation speed

Visualization via Matplotlib

Running a case

The benefits of scaling

Vectorization

Operations on slices of arrays

Finite difference schemes expressed as slices

Verification

Efficiency measurements

Exercises

Exercise 1: Add storage of solution in a user action function

Exercise 2: Use a class for the user action function

Exercise 3: Compare several Courant numbers in one movie

Generalization: reflecting boundaries

Neumann boundary condition

Discretization of derivatives at the boundary

Implementation of Neumann conditions

Alternative implementation via ghost cells

Idea

Implementation

Generalization: variable wave velocity

The model PDE with a variable coefficient

Discretizing the variable coefficient

Computing the coefficient between mesh points

How a variable coefficient affects the stability

Implementation of variable coefficients

A more general model PDE with variable coefficients

Generalization: including damping

Building a general 1D wave equation solver

User action function as a class

Collection of initial conditions

Calling functions from the command line

Exercises

Exercise 4: Use ghost cells to implement Neumann conditions

Exercise 5: Find a symmetry boundary condition

Exercise 6: Prove symmetry of a 1D wave problem computationally

Exercise 7: Prove symmetry of a 1D wave problem analytically

Exercise 8: Send pulse waves through a layered medium

Exercise 9: Explain why numerical noise occurs

Exercise 10: Investigate harmonic averaging in a 1D model

Finite difference methods for 2D and 3D wave equations

Multi-dimensional wave equations

Mesh

Discretization

Discretizing the PDEs

Handling boundary conditions where \( u \) is known

Discretizing the condition \( \partial u/\partial n = 0 \)

Implementation

Scalar computations

Domain and mesh

Stability limit

Solution arrays

Computing the solution

Vectorized computations

Verification

Testing a quadratic solution

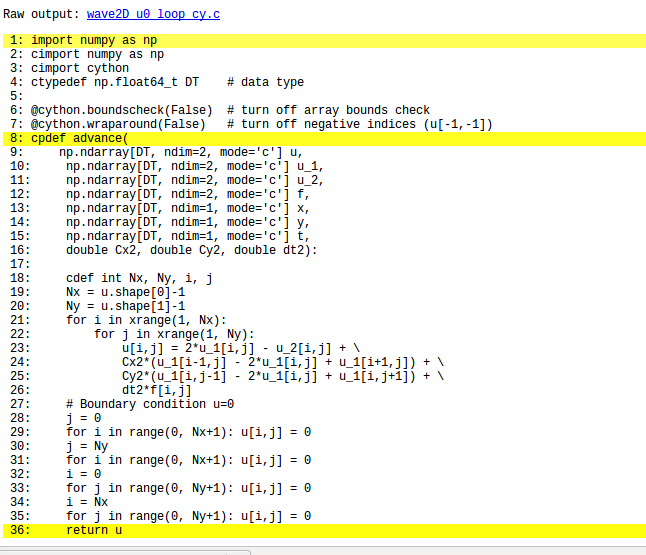

Migrating loops to Cython

Declaring variables and annotating the code

Visual inspection of the C translation

Building the extension module

Calling the Cython function

Efficiency

Migrating loops to Fortran

The Fortran subroutine

Building the Fortran module with f2py

Examining doc strings

How to avoid array copying

Efficiency

Migrating loops to C via Cython

Translating index pairs to single indices

The complete C code

The Cython interface file

Building the extension module

Efficiency

Migrating loops to C via f2py

Migrating loops to C via Instant

Migrating loops to C++ via f2py

Using classes to implement a simulator

Callbacks to Python from Fortran or C

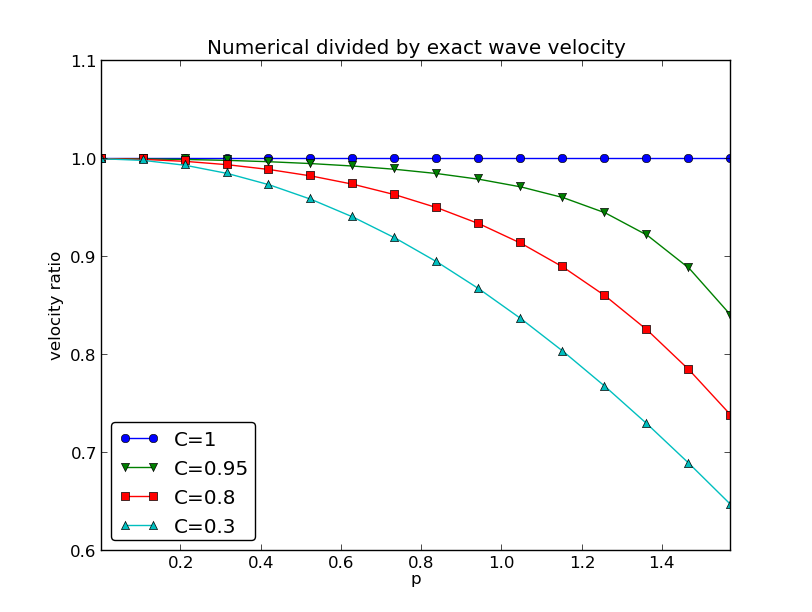

Analysis of the continuous and discrete solutions

Properties of the solution of the wave equation

Analysis of the finite difference scheme

Extending the analysis to 2D and 3D

Applications of wave equations

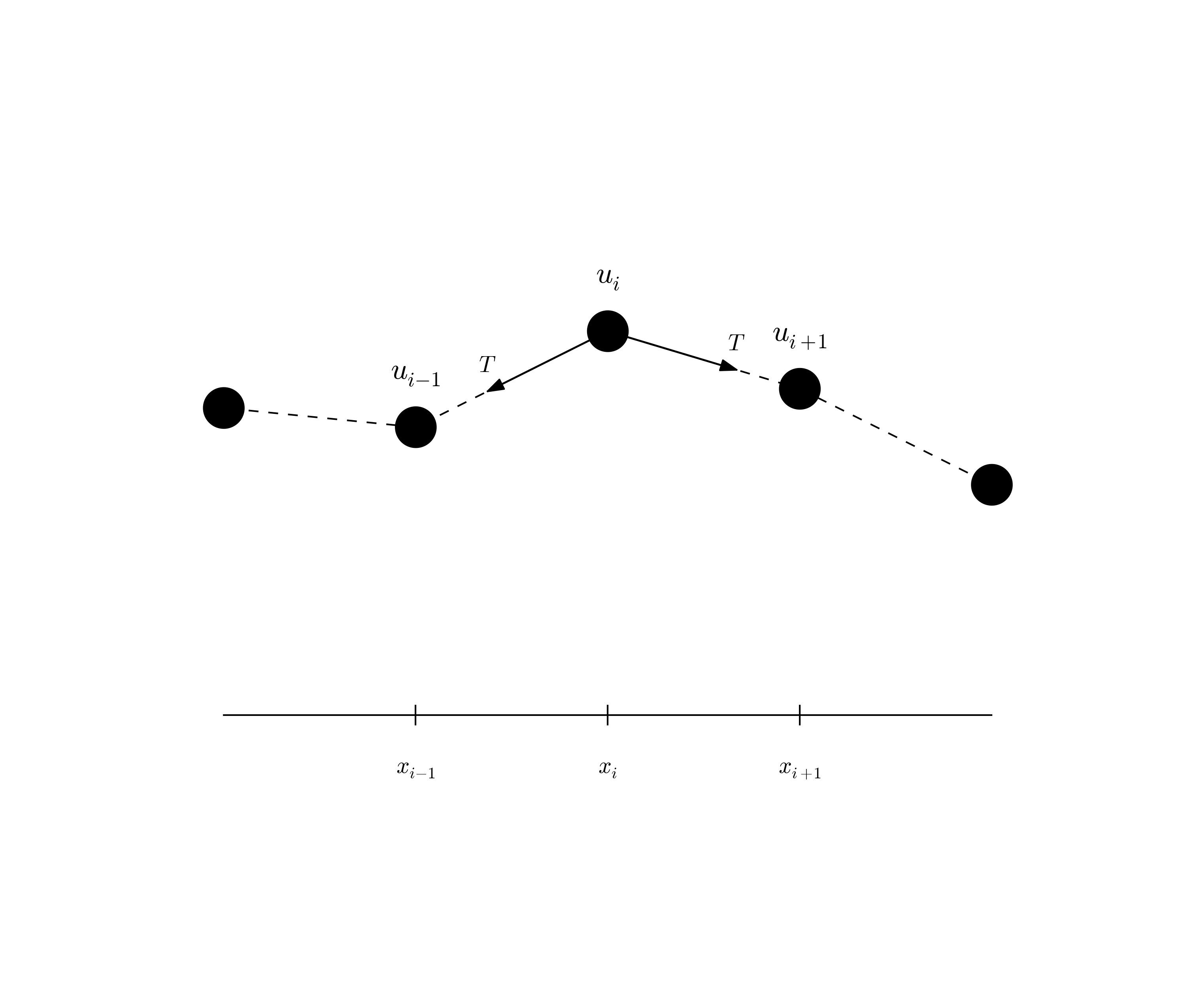

Waves on a string

External forcing

Modeling the tension via springs

Waves on a membrane

Elastic waves in a rod

The acoustic model for seismic waves

Anisotropy

Sound waves in liquids and gases

Spherical waves

The linear shallow water equations

Wind drag on the surface

Bottom drag

Effect of the Earth's rotation

Waves in blood vessels

Reduction to standard wave equation

Electromagnetic waves

Exercises

Exercise 11: Simulate elastic waves in a rod

Exercise 12: Test the efficiency of compiled loops in 3D

Exercise 13: Earthquake-generated tsunami in a 1D model

Exercise 14: Implement an open boundary condition

Exercise 15: Earthquake-generated tsunami over a subsea hill

Exercise 16: Implement Neumann conditions in 2D

Exercise 17: Implement a convergence test for a 2D code

Exercise 18: Earthquake-generated tsunami over a 3D hill

Exercise 19: Implement loops in compiled languages

Exercise 20: Write a complete program in Fortran or C

Exercise 21: Investigate Matplotlib for visualization

Exercise 22: Investigate Mayavi for visualization

Exercise 23: Investigate Paraview for visualization

Exercise 24: Investigate OpenDX for visualization

Exercise 25: Investigate harmonic vs arithmetic mean

Exercise 26: Simulate seismic waves in 2D

A very wide range of physical processes lead to wave motion, where signals are propagated through a medium in space and time, normally with little or no permanent movement of the medium itself. The shape of the signals may undergo changes as they travel through matter, but usually not so much that the signals cannot be recognized at some later point in space and time. Many types of wave motion can be described by the wave equation \( u_{tt}=\nabla\cdot (c^2\nabla u) + f \), which we will solve in the forthcoming text by finite difference methods.

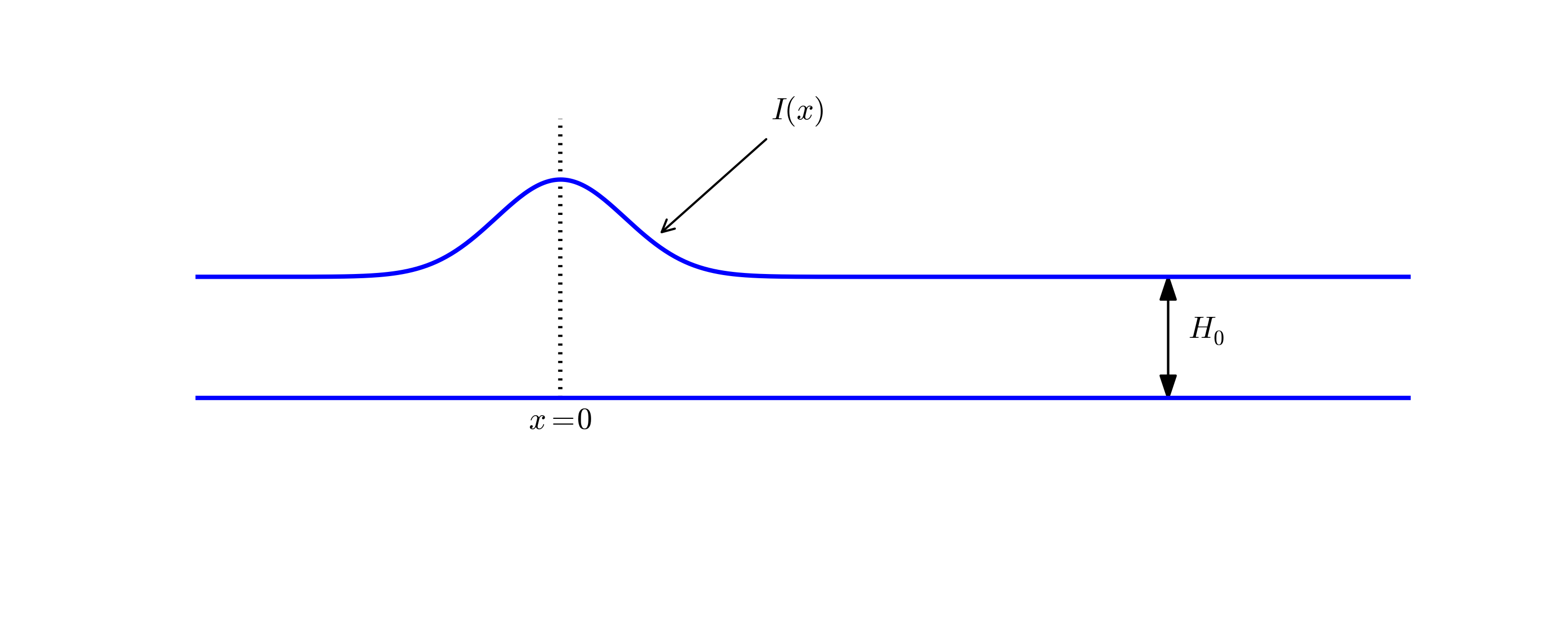

We begin our study of wave equations by simulating one-dimensional waves on a string, say on a guitar or violin string. Let the string in the undeformed state coincide with the interval \( [0,L] \) on the \( x \) axis, and let \( u(x,t) \) be the displacement at time \( t \) in the \( y \) direction of a point initially at \( x \). The displacement function \( u \) is governed by the mathematical model

$$ \begin{align} \frac{\partial^2 u}{\partial t^2} &= c^2 \frac{\partial^2 u}{\partial x^2}, \quad x\in (0,L),\ t\in (0,T] \label{wave:pde1}\\ u(x,0) &= I(x), \quad x\in [0,L] \label{wave:pde1:ic:u}\\ \frac{\partial}{\partial t}u(x,0) &= 0, \quad x\in [0,L] \label{wave:pde1:ic:ut}\\ u(0,t) & = 0, \quad t\in (0,T], \label{wave:pde1:bc:0}\\ u(L,t) & = 0, \quad t\in (0,T] \thinspace . \label{wave:pde1:bc:L} \end{align} $$ The constant \( c \) and the function \( I(x) \) must be prescribed.

Equation \eqref{wave:pde1} is known as the one-dimensional wave equation. Since this PDE contains a second-order derivative in time, we need two initial conditions, here \eqref{wave:pde1:ic:u} specifying the initial shape of the string, \( I(x) \), and \eqref{wave:pde1:ic:ut} reflecting that the initial velocity of the string is zero. In addition, PDEs need boundary conditions, here \eqref{wave:pde1:bc:0} and \eqref{wave:pde1:bc:L}, specifying that the string is fixed at the ends, i.e., that the displacement \( u \) is zero at the ends.

Sometimes we will use a more compact notation for the partial derivatives to save space:

$$ \begin{equation} u_t = \frac{\partial u}{\partial t},\quad u_{tt} = \frac{\partial^2 u}{\partial t^2}, \end{equation} $$ and similarly for derivatives with respect to other variables. Then the wave equation can be written compactly as \( u_{tt} = c^2u_{xx} \).

The PDE problem \eqref{wave:pde1}-\eqref{wave:pde1:bc:L} will now be discretized in space and time by a finite difference method.

The temporal domain \( [0,T] \) is represented by a finite number of mesh points

$$ \begin{equation} t_0=0 < t_1 < t_2 < \cdots < t_{N-1} < t_N = T \thinspace . \end{equation} $$ Similarly, the spatial domain \( [0,L] \) is replaced by a set of mesh points

$$ \begin{equation} 0 = x_0 < x_1 < x_2 < \cdots < x_{N_x-1} < x_{N_x} = L \thinspace . \end{equation} $$ One may view the mesh as two-dimensional in the \( x,t \) plane, consisting of points \( (x_i, t_n) \), \( i=0,\ldots,N_x \), \( n=0,\ldots,N \).

For uniformly distributed mesh points we can introduce the constant mesh spacings \( \Delta t \) and \( \Delta x \). We have that

$$ \begin{equation} x_i = i\Delta x,\quad i=0,\ldots,N_x, \end{equation} $$ and

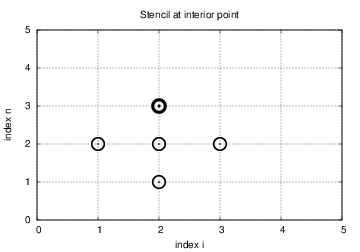

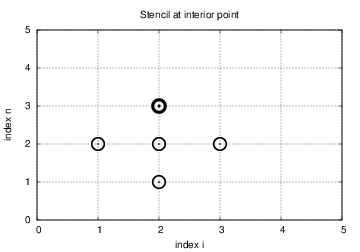

$$ \begin{equation} t_i = n\Delta t,\quad n=0,\ldots,N \thinspace . \end{equation} $$ We also have that \( \Delta x = x_i-x_{i-1} \), \( i=1,\ldots,N_x \), and \( \Delta t = t_n - t_{n-1} \), \( n=1,\ldots,N \). Figure 1 displays a mesh in the \( x \)-$t$ plane with \( N=5 \), \( N_x=5 \), and constant mesh spacings.
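In code, such a uniform mesh is conveniently built with numpy's linspace. The following is a minimal sketch, assuming the sizes \( N=N_x=5 \) from Figure 1 and the hypothetical values \( L=T=1 \):

```python
import numpy as np

L, T = 1.0, 1.0      # hypothetical domain lengths in space and time
Nx, N = 5, 5         # number of cells in space and time, as in Figure 1

x = np.linspace(0, L, Nx+1)   # x_i = i*dx, i = 0,...,Nx
t = np.linspace(0, T, N+1)    # t_n = n*dt, n = 0,...,N
dx = x[1] - x[0]
dt = t[1] - t[0]

# The 2D mesh in the x-t plane: X[i,n] = x_i and Tm[i,n] = t_n
X, Tm = np.meshgrid(x, t, indexing='ij')
print(dx, dt, X.shape)
```

Only the 1D arrays x and t are needed by the solvers later on; the meshgrid call merely visualizes the 2D view of the mesh.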

The solution \( u(x,t) \) is sought at the mesh points: \( u(x_i,t_n) \) for \( i=0,\ldots,N_x \) and \( n=0,\ldots,N \). The numerical approximation at mesh point \( (x_i,t_n) \) is denoted by \( u^n_i \). The circles in Figure 1 illustrate neighboring mesh points where values of \( u^n_i \) are connected through a discrete equation. In this particular case, \( u_2^1 \), \( u_1^2 \), \( u_2^2 \), \( u_3^2 \), and \( u_2^3 \) are connected in a discrete equation associated with the center point \( (2,2) \). The term stencil is often used for the discrete equation at a mesh point, and the geometry of a typical stencil is illustrated in Figure 1.

For a numerical solution by the finite difference method, we relax the condition that \eqref{wave:pde1} holds at all points in the domain \( (0,L)\times (0,T] \) to the requirement that the PDE is fulfilled at the mesh points:

$$ \begin{equation} \frac{\partial^2}{\partial t^2} u(x_i, t_n) = c^2\frac{\partial^2}{\partial x^2} u(x_i, t_n), \label{wave:pde1:step2} \end{equation} $$ for \( i=1,\ldots,N_x-1 \) and \( n=1,\ldots,N-1 \). For \( n=0 \) we have the initial conditions \( u=I(x) \) and \( u_t=0 \), and at the boundaries \( i=0,N_x \) we have the boundary condition \( u=0 \).

The second-order derivatives can be replaced by central differences. The most widely used difference approximation of the second-order derivative is

$$ \frac{\partial^2}{\partial t^2}u(x_i,t_n)\approx \frac{u_i^{n+1} - 2u_i^n + u^{n-1}_i}{\Delta t^2} = [D_tD_t u]^n_i,$$ and a similar definition of the second-order derivative in \( x \) direction:

$$ \frac{\partial^2}{\partial x^2}u(x_i,t_n)\approx \frac{u_{i+1}^{n} - 2u_i^n + u^{n}_{i-1}}{\Delta x^2} = [D_xD_x u]^n_i \thinspace . $$ We can now replace the derivatives in \eqref{wave:pde1:step2} and get

$$ \begin{equation} \frac{u_i^{n+1} - 2u_i^n + u^{n-1}_i}{\Delta t^2} = c^2\frac{u_{i+1}^{n} - 2u_i^n + u^{n}_{i-1}}{\Delta x^2}, \label{wave:pde1:step3b} \end{equation} $$ or written more compactly using the operator notation:

$$ \begin{equation} [D_tD_t u = c^2 D_xD_x u]^{n}_i \thinspace . \label{wave:pde1:step3a} \end{equation} $$

We also need to replace the derivative in the initial condition \eqref{wave:pde1:ic:ut} by a finite difference approximation. A centered difference of the type $$ \frac{\partial}{\partial t} u(x_i,t_n)\approx \frac{u^1_i - u^{-1}_i}{2\Delta t} = [D_{2t} u]^0_i, $$ seems appropriate. In operator notation the initial condition is written as $$ [D_{2t} u]^0_i = 0 \thinspace . $$ Writing out this equation and ordering the terms gives $$ \begin{equation} u^{-1}_i=u^1_i,\quad i=0,\ldots,N_x\thinspace . \label{wave:pde1:step3c} \end{equation} $$

As for ordinary differential equations, we assume that \( u^n_i \) and \( u^{n-1}_i \) are already computed for \( i=0,\ldots,N_x \). The only unknown quantity in \eqref{wave:pde1:step3b} is therefore \( u^{n+1}_i \), which we can solve for:

$$ \begin{equation} u^{n+1}_i = -u^{n-1}_i + 2u^n_i + C^2 \left(u^{n}_{i+1}-2u^{n}_{i} + u^{n}_{i-1}\right), \label{wave:pde1:step4} \end{equation} $$ where we have introduced the parameter $$ \begin{equation} C = c\frac{\Delta t}{\Delta x}, \end{equation} $$ known as the (dimensionless) Courant number. We see that the discrete version of the PDE features only one parameter, \( C \), which is therefore the key parameter that governs the quality of the numerical solution. Both the primary physical parameter \( c \) and the numerical parameters \( \Delta x \) and \( \Delta t \) are lumped together in \( C \).

Given that \( u^{n-1}_i \) and \( u^n_i \) are computed for \( i=0,\ldots,N_x \), we find new values at the next time level by applying the formula \eqref{wave:pde1:step4} for \( i=1,\ldots,N_x-1 \). Figure 1 illustrates the points that are used to compute \( u^3_2 \). For the boundary points, \( i=0 \) and \( i=N_x \), we apply the boundary conditions: \( u_0^{n+1}=0 \) and \( u_{N_x}^{n+1}=0 \).

A problem with \eqref{wave:pde1:step4} arises when \( n=0 \) since the formula for \( u^1_i \) involves \( u^{-1}_i \), which is an undefined quantity outside the time mesh and the time domain. However, we can use the boundary condition \eqref{wave:pde1:step3c} in combination with \eqref{wave:pde1:step4} when \( n=0 \) to arrive at a special formula for \( u_i^1 \):

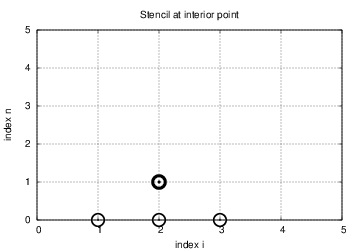

$$ \begin{equation} u_i^1 = u^0_i - \half C^2\left(u^{n}_{i+1}-2u^{n}_{i} + u^{n}_{i-1}\right) \thinspace . \label{wave:pde1:step4:1} \end{equation} $$ Figure 2 illustrates how \eqref{wave:pde1:step4:1} connects four instead of five points: \( u^1_2 \), \( u_1^0 \), \( u_2^0 \), and \( u_3^0 \).

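We can now summarize the computational algorithm:

1. Compute \( u^0_i=I(x_i) \) for \( i=0,\ldots,N_x \).
2. Compute \( u^1_i \) by \eqref{wave:pde1:step4:1} for \( i=1,\ldots,N_x-1 \) and set \( u^1_i=0 \) for the boundary points \( i=0 \) and \( i=N_x \).
3. For each time level \( n=1,2,\ldots,N-1 \):
   a. apply \eqref{wave:pde1:step4} to find \( u^{n+1}_i \) for \( i=1,\ldots,N_x-1 \);
   b. set \( u^{n+1}_i=0 \) for the boundary points \( i=0 \) and \( i=N_x \).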

In a Python implementation of this algorithm, we use the array elements u[i] to store \( u^{n+1}_i \), u_1[i] to store \( u^n_i \), and u_2[i] to store \( u^{n-1}_i \). The algorithm only needs to access the three most recent time levels, so we need only three arrays for \( u_i^{n+1} \), \( u_i^n \), and \( u_i^{n-1} \), \( i=0,\ldots,N_x \). Storing all the solutions in a two-dimensional array of size \( (N_x+1)\times (N+1) \) would be possible in this simple one-dimensional PDE problem, but is normally out of the question in three-dimensional (3D) and large two-dimensional (2D) problems. In all our programs for solving PDEs we shall therefore keep the unknown in memory at as few time levels as possible.
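To put numbers on this argument, here is a back-of-the-envelope sketch for a hypothetical 3D problem with 101 mesh points in each space direction, 1001 time levels, and 8-byte floating-point numbers (all sizes are our own assumptions, not taken from a specific application):

```python
# Hypothetical 3D problem size (assumed for illustration only)
Nx = Ny = Nz = 100      # cells per space direction
N = 1000                # number of time steps
bytes_per_value = 8     # float64

points_per_level = (Nx+1)*(Ny+1)*(Nz+1)
all_levels = points_per_level*(N+1)*bytes_per_value   # store every time level
three_levels = points_per_level*3*bytes_per_value     # store u, u_1, u_2 only

print('all time levels:   %.2f GB' % (all_levels/1e9))
print('three time levels: %.1f MB' % (three_levels/1e6))
```

With these sizes, storing every time level needs about 8.25 GB, while three time levels fit in about 24.7 MB.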

The following Python snippet realizes the steps in the computational algorithm.

from numpy import zeros

# Given mesh points as arrays x and t (x[i], t[n]), the wave
# speed c, and the initial condition function I(x)
dx = x[1] - x[0]; dt = t[1] - t[0]
C = c*dt/dx          # Courant number
Nx = len(x)-1        # number of spatial cells
N = len(t)-1         # number of time steps
C2 = C**2            # help variable in the scheme

u   = zeros(Nx+1)    # u^{n+1}
u_1 = zeros(Nx+1)    # u^n
u_2 = zeros(Nx+1)    # u^{n-1}

# Set initial condition u(x,0) = I(x)
for i in range(0, Nx+1):
    u_1[i] = I(x[i])

# Apply special formula for first step, incorporating du/dt=0
for i in range(1, Nx):
    u[i] = u_1[i] + 0.5*C2*(u_1[i+1] - 2*u_1[i] + u_1[i-1])
u[0] = 0; u[Nx] = 0

# Switch variables before next step (copies the values)
u_2[:], u_1[:] = u_1, u

for n in range(1, N):
    # Update all inner mesh points at time t[n+1]
    for i in range(1, Nx):
        u[i] = 2*u_1[i] - u_2[i] + \
               C2*(u_1[i+1] - 2*u_1[i] + u_1[i-1])

    # Insert boundary conditions
    u[0] = 0; u[Nx] = 0

    # Switch variables before next step
    u_2[:], u_1[:] = u_1, u

Before implementing the algorithm, it is convenient to add a source term to the PDE \eqref{wave:pde1} since it gives us more freedom in finding test problems for verification. In particular, the source term allows us to use manufactured solutions for software testing, where we simply choose some function as solution, fit the corresponding source term, and define consistent boundary and initial conditions. Such solutions will seldom fulfill the initial condition \eqref{wave:pde1:ic:ut} so we need to generalize this condition to \( u_t=V(x) \).

We now address the following extended initial-boundary value problem for one-dimensional wave phenomena:

$$ \begin{align} u_{tt} &= c^2 u_{xx} + f(x,t), \quad x\in (0,L),\ t\in (0,T] \label{wave:pde2}\\ u(x,0) &= I(x), \quad x\in [0,L] \label{wave:pde2:ic:u}\\ u_t(x,0) &= V(x), \quad x\in [0,L] \label{wave:pde2:ic:ut}\\ u(0,t) & = 0, \quad t>0, \label{wave:pde2:bc:0}\\ u(L,t) & = 0, \quad t>0 \thinspace . \label{wave:pde2:bc:L} \end{align} $$

Sampling the PDE at \( (x_i,t_n) \) and using the same finite difference approximations as above yields

$$ \begin{equation} [D_tD_t u = c^2 D_xD_x u + f]^{n}_i \thinspace . \label{wave:pde2:fdop} \end{equation} $$ Writing this out and solving for the unknown \( u^{n+1}_i \) results in

$$ \begin{equation} u^{n+1}_i = -u^{n-1}_i + 2u^n_i + C^2 (u^{n}_{i+1}-2u^{n}_{i} + u^{n}_{i-1}) + \Delta t^2 f^n_i \label{wave:pde2:step3b} \thinspace . \end{equation} $$

The equation for the first time step must be rederived. The discretization of the initial condition \( u_t = V(x) \) at \( t=0 \) becomes

$$ [D_{2t}u = V]^0_i\quad\Rightarrow u^{-1}_i = u^{1}_i - 2\Delta t V_i,$$ which, when inserted in \eqref{wave:pde2:step3b} for \( n=0 \), gives

$$ \begin{equation} u^{1}_i = u^0_i + \Delta t V_i + \frac{1}{2} C^2 \left(u^{0}_{i+1}-2u^{0}_{i} + u^{0}_{i-1}\right) + \frac{1}{2}\Delta t^2 f^0_i \label{wave:pde2:step3c} \thinspace . \end{equation} $$
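The first-step formula follows from a short elimination: write out \eqref{wave:pde2:step3b} for \( n=0 \), insert \( u^{-1}_i = u^{1}_i - 2\Delta t V_i \), and collect the \( u^1_i \) terms:

$$ \begin{align*} u^{1}_i &= -\left(u^{1}_i - 2\Delta t V_i\right) + 2u^0_i + C^2 \left(u^{0}_{i+1}-2u^{0}_{i} + u^{0}_{i-1}\right) + \Delta t^2 f^0_i,\\ 2u^{1}_i &= 2u^0_i + 2\Delta t V_i + C^2 \left(u^{0}_{i+1}-2u^{0}_{i} + u^{0}_{i-1}\right) + \Delta t^2 f^0_i,\\ u^{1}_i &= u^0_i + \Delta t V_i + \frac{1}{2} C^2 \left(u^{0}_{i+1}-2u^{0}_{i} + u^{0}_{i-1}\right) + \frac{1}{2}\Delta t^2 f^0_i \thinspace . \end{align*} $$ With \( V=0 \) and \( f=0 \) the last line reduces to the special first-step formula derived for the problem without a source term.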

For verification purposes we shall use a solution that is quadratic in space and linear in time. More specifically, we choose $$ u_{\mathrm{e}}(x,t) = x(L-x)(1+\frac{1}{2}t), $$ which by insertion in the PDE (using \( \partial^2 u_{\mathrm{e}}/\partial t^2=0 \) and \( \partial^2 u_{\mathrm{e}}/\partial x^2=-2(1+\frac{1}{2}t) \)) leads to \( f(x,t)=2(1+\frac{1}{2}t)c^2 \). Moreover, this \( u_{\mathrm{e}} \) fulfills the boundary conditions and is compatible with \( I(x)=x(L-x) \) and \( V(x)=\frac{1}{2}x(L-x) \).

To see if the discrete values \( u_{\mathrm{e}}(x_i,t_n) \) fulfill the discrete equation as well, we first establish the results $$ \begin{align} \lbrack D_tD_t t^2\rbrack^n &= \frac{t_{n+1}^2 - 2t_n^2 + t_{n-1}^2}{\Delta t^2} = (n+1)^2 - 2n^2 + (n-1)^2 = 2,\\ \lbrack D_tD_t t\rbrack^n &= \frac{t_{n+1} - 2t_n + t_{n-1}}{\Delta t^2} = \frac{((n+1) - 2n + (n-1))\Delta t}{\Delta t^2} = 0 \thinspace . \end{align} $$ Hence, $$ [D_tD_t u_{\mathrm{e}}]^n_i = x_i(L-x_i)[D_tD_t (1+\frac{1}{2}t)]^n = x_i(L-x_i)\frac{1}{2}[D_tD_t t]^n = 0,$$ and $$ \begin{align*} \lbrack D_xD_x u_{\mathrm{e}}\rbrack^n_i &= (1+\frac{1}{2}t_n)\lbrack D_xD_x (xL-x^2)\rbrack_i = (1+\frac{1}{2}t_n)\lbrack LD_xD_x x - D_xD_x x^2\rbrack_i \\ &= -2(1+\frac{1}{2}t_n) \thinspace . \end{align*} $$ Since \( f^n_i = 2(1+\frac{1}{2}t_n)c^2 \), we see that the scheme accepts \( u_{\mathrm{e}} \) as a solution. Moreover, \( u_{\mathrm{e}}(x_i,0)=I(x_i) \), \( \partial u_{\mathrm{e}}/\partial t = V(x_i) \) at \( t=0 \), and \( u_{\mathrm{e}}(x_0,t)=u_{\mathrm{e}}(x_{N_x},t)=0 \). Also the modified scheme for the first time step is fulfilled by \( u_{\mathrm{e}}(x_i,t_n) \). Therefore, the exact solution \( u_{\mathrm{e}}(x,t) \) of the PDE problem is also an exact solution of the discrete problem. We can use this result to check that the computed \( u^n_i \) values from an implementation equal \( u_{\mathrm{e}}(x_i,t_n) \) within machine precision, regardless of the mesh spacings \( \Delta x \) and \( \Delta t \)! Nevertheless, there might be stability restrictions on \( \Delta x \) and \( \Delta t \), so the test can only be run for a mesh that is compatible with the stability criterion (which is \( C\leq 1 \), to be derived later).
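The identities \( [D_tD_t t^2]^n=2 \) and \( [D_tD_t t]^n=0 \), as well as \( [D_xD_x x(L-x)]_i=-2 \), are easy to confirm numerically. A minimal sketch, where the helper second_diff and the spacings are our own (hypothetical) choices:

```python
def second_diff(g, s, h):
    """Centered second difference (g(s+h) - 2*g(s) + g(s-h))/h**2.

    This is [D_tD_t g]^n when s = t_n, h = dt, and [D_xD_x g]_i when
    s = x_i, h = dx.
    """
    return (g(s + h) - 2*g(s) + g(s - h))/h**2

dt = 0.37                     # arbitrary time step (assumption)
for n in range(1, 6):
    tn = n*dt
    print(n, second_diff(lambda t: t**2, tn, dt),   # 2 up to roundoff
          second_diff(lambda t: t, tn, dt))         # 0 up to roundoff

L, dx = 2.5, 0.31             # arbitrary length and spacing (assumptions)
print(second_diff(lambda x: x*(L - x), 3*dx, dx))   # -2 up to roundoff
```

Changing dt or dx only changes the roundoff-level deviations, in line with the claim that the quadratic solution is reproduced on any mesh.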

A product of quadratic or linear expressions in the various independent variables, as shown above, will often fulfill both the continuous and discrete PDE problem and can therefore be very useful solutions for verifying implementations. However, for 1D wave equations of the type \( u_{tt}=c^2u_{xx} \) we shall see that there is always another much more powerful way of generating exact solutions (just set \( C=1 \)).

A real implementation of the basic computational algorithm can be encapsulated in a function, taking all the input data for the problem as arguments. The physical input data consists of \( c \), \( I(x) \), \( V(x) \), \( f(x,t) \), \( L \), and \( T \). The numerical input consists of the mesh parameters \( \Delta t \) and \( \Delta x \). One possibility is to specify \( N_x \) and the Courant number \( C=c\Delta t/\Delta x \). The latter is convenient to prescribe instead of \( \Delta t \) when performing numerical investigations, because the numerical accuracy depends directly on \( C \).

The solution at all spatial points at a new time level is stored in an array u (of length \( N_x+1 \)). We need to decide what to do with this solution, e.g., visualize the curve, analyze the values, or write the array to file for later use. The decision what to do is left to the user in a supplied function

def user_action(u, x, t, n)

where u is the solution at the spatial points x at time t[n]. In the solver below, a return value of True from user_action stops the time loop.

A first attempt at a solver function is listed below.

from numpy import *
import time

def solver(I, V, f, c, L, Nx, C, T, user_action=None):
    """Solve u_tt=c^2*u_xx + f on (0,L)x(0,T]."""
    x = linspace(0, L, Nx+1)     # mesh points in space
    dx = x[1] - x[0]
    dt = C*dx/c
    N = int(round(T/dt))
    t = linspace(0, N*dt, N+1)   # mesh points in time
    C2 = C**2                    # help variable in the scheme
    if f is None or f == 0:
        f = lambda x, t: 0
    if V is None or V == 0:
        V = lambda x: 0

    u   = zeros(Nx+1)   # solution array at new time level
    u_1 = zeros(Nx+1)   # solution at 1 time level back
    u_2 = zeros(Nx+1)   # solution at 2 time levels back

    t0 = time.process_time()     # for measuring CPU time

    # Load initial condition into u_1
    for i in range(0, Nx+1):
        u_1[i] = I(x[i])

    if user_action is not None:
        user_action(u_1, x, t, 0)

    # Special formula for first time step
    n = 0
    for i in range(1, Nx):
        u[i] = u_1[i] + dt*V(x[i]) + \
               0.5*C2*(u_1[i-1] - 2*u_1[i] + u_1[i+1]) + \
               0.5*dt**2*f(x[i], t[n])
    u[0] = 0; u[Nx] = 0

    if user_action is not None:
        user_action(u, x, t, 1)

    # Switch variables before next step
    u_2[:], u_1[:] = u_1, u

    for n in range(1, N):
        # Update all inner points at time t[n+1]
        for i in range(1, Nx):
            u[i] = - u_2[i] + 2*u_1[i] + \
                   C2*(u_1[i-1] - 2*u_1[i] + u_1[i+1]) + \
                   dt**2*f(x[i], t[n])

        # Insert boundary conditions
        u[0] = 0; u[Nx] = 0

        if user_action is not None:
            if user_action(u, x, t, n+1):
                break

        # Switch variables before next step
        u_2[:], u_1[:] = u_1, u

    cpu_time = time.process_time() - t0
    return u, x, t, cpu_time

We use the test problem derived in the section Generalization: including a source term for verification. Here is a function realizing this verification as a test function that test runners such as nose or pytest can collect:

def test_quadratic():
    """Check that u(x,t)=x(L-x)(1+t/2) is exactly reproduced."""
    def exact_solution(x, t):
        return x*(L-x)*(1 + 0.5*t)

    def I(x):
        return exact_solution(x, 0)

    def V(x):
        return 0.5*exact_solution(x, 0)

    def f(x, t):
        return 2*(1 + 0.5*t)*c**2

    L = 2.5
    c = 1.5
    Nx = 3      # very coarse mesh
    C = 0.75
    T = 18

    u, x, t, cpu = solver(I, V, f, c, L, Nx, C, T)
    u_e = exact_solution(x, t[-1])
    diff = abs(u - u_e).max()
    tol = 1E-13  # machine precision level
    assert diff < tol, 'max error %g exceeds %g' % (diff, tol)
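Since the quadratic solution should be reproduced on any mesh that satisfies \( C\leq 1 \), one may also run the check on several meshes. The following self-contained sketch uses a compact, vectorized version of the same scheme; the names solver_vec and max_error_quadratic, as well as the chosen meshes, are our own assumptions, and the sketch requires I, V, and f to accept numpy arrays:

```python
import numpy as np

def solver_vec(I, V, f, c, L, Nx, C, T):
    """Vectorized sketch of the scheme for u_tt = c^2*u_xx + f, u=0 at x=0,L.

    Returns the solution at the final time level together with x and t.
    """
    x = np.linspace(0, L, Nx+1)           # mesh points in space
    dx = x[1] - x[0]
    dt = C*dx/c
    N = int(round(T/dt))
    t = np.linspace(0, N*dt, N+1)         # mesh points in time
    C2 = C**2

    u_1 = np.asarray(I(x), dtype=float)   # u^0
    u = np.zeros(Nx+1)
    # First step: scheme for n=0 with u^{-1} = u^1 - 2*dt*V eliminated
    u[1:-1] = (u_1[1:-1] + dt*V(x[1:-1])
               + 0.5*C2*(u_1[:-2] - 2*u_1[1:-1] + u_1[2:])
               + 0.5*dt**2*f(x[1:-1], t[0]))
    u[0] = u[-1] = 0
    u_2, u_1 = u_1, u.copy()

    for n in range(1, N):
        u[1:-1] = (-u_2[1:-1] + 2*u_1[1:-1]
                   + C2*(u_1[:-2] - 2*u_1[1:-1] + u_1[2:])
                   + dt**2*f(x[1:-1], t[n]))
        u[0] = u[-1] = 0
        u_2, u_1 = u_1, u.copy()          # shift the time levels
    return u_1, x, t


def max_error_quadratic(Nx, C, L=2.5, c=1.5, T=18):
    """Max deviation from u_e = x(L-x)(1+t/2) at the final time."""
    u_e = lambda x, t: x*(L - x)*(1 + 0.5*t)
    I = lambda x: u_e(x, 0)
    V = lambda x: 0.5*u_e(x, 0)
    f = lambda x, t: 2*(1 + 0.5*t)*c**2
    u, x, t = solver_vec(I, V, f, c, L, Nx, C, T)
    return np.abs(u - u_e(x, t[-1])).max()


for Nx, C in [(3, 0.75), (10, 0.5), (40, 1.0)]:
    print(Nx, C, max_error_quadratic(Nx, C))
```

All three meshes should give roundoff-level differences; a visible error on any of them would point to a bug in the scheme.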

Now that we have verified the implementation it is time to do a real computation where we also display the evolution of the waves on the screen.

The following viz function defines a user_action callback function for plotting the solution at each time level:

def viz(I, V, f, c, L, Nx, C, T, umin, umax, animate=True):
    """Run solver and visualize u at each time level."""
    import scitools.std as st, time, glob, os

    def plot_u(u, x, t, n):
        """user_action function for solver."""
        st.plot(x, u, 'r-',
                xlabel='x', ylabel='u',
                axis=[0, L, umin, umax],
                title='t=%f' % t[n], show=True)
        # Let the initial condition stay on the screen for 2
        # seconds, else insert a pause of 0.2 s between each plot
        time.sleep(2) if t[n] == 0 else time.sleep(0.2)
        st.savefig('frame_%04d.png' % n)  # for movie making

    # Clean up old movie frames
    for filename in glob.glob('frame_*.png'):
        os.remove(filename)

    user_action = plot_u if animate else None
    u, x, t, cpu = solver(I, V, f, c, L, Nx, C, T, user_action)

    # Make movie files (frames exist only if we animated)
    if animate:
        st.movie('frame_*.png', encoder='mencoder', fps=4,
                 output_file='movie.avi')
        st.movie('frame_*.png', encoder='html', fps=4,
                 output_file='movie.html')
    return cpu

A function inside another function, like plot_u in the above code segment, has access to and remembers (!) all the local variables in the surrounding code inside the viz function. This is known in computer science as a closure and is very convenient. For example, the st and time modules imported in viz, outside plot_u, are accessible inside plot_u when the function is called (as user_action) in the solver function. Some think, however, that a class instead of a closure is a cleaner and easier-to-understand implementation of the user action function, see the section Building a general 1D wave equation solver.
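A closure can also collect solutions instead of plotting them. The following sketch (make_collector and store are hypothetical names) returns a user_action function that keeps copies of u in a list owned by the enclosing function. Copying is needed because the solver reuses the same array u at every time level:

```python
import numpy as np

def make_collector(every=1):
    """Return a user_action function and the list it fills."""
    solutions = []          # lives in the enclosing scope

    def store(u, x, t, n):
        """user_action: store (t[n], copy of u) at every 'every'-th level."""
        if n % every == 0:
            solutions.append((t[n], u.copy()))   # copy: solver reuses u
        return False        # never ask the solver to stop

    return store, solutions

# Usage without a solver: fake two time levels
store, sols = make_collector(every=1)
x = np.linspace(0, 1, 5); t = np.array([0.0, 0.1])
u = np.zeros(5)
store(u, x, t, 0)
u[:] = 1.0                  # the "solver" overwrites u in place
store(u, x, t, 1)
print(len(sols), sols[0][1].max(), sols[1][1].max())
```

With the solver function above, one would pass user_action=store and inspect sols after the call.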

Two hardcopies of the animation are made from the frame_*.png files, using the movie function from SciTools at the end of the viz function. The AVI file movie.avi can be played in a standard movie player. The HTML file movie.html is essentially a movie player that can be loaded into a browser to display the individual frame_*.png files. Note that padding the frame counter in the frame_*.png filenames with zeros (the %04d format) is essential so that the wildcard notation frame_*.png expands to the files in the correct order (without padding, frame_10.png would be sorted before frame_2.png).

Rather than using the simple movie function, one can run native commands in the terminal window. An AVI movie can be made by mencoder:

Terminal> mencoder "mf://frame_%04d.png" -mf fps=4:type=png \
          -ovc lavc -o movie.avi
Terminal> mplayer movie.avi

The HTML player can be generated by

Terminal> scitools movie output_file=movie.html fps=4 frame_*.png

The fps parameter controls the number of frames per second, i.e., the speed of the movie.

Sometimes the time step is small and \( T \) is large, leading to an inconveniently large number of plot files and a slow animation on the screen. The solution to such a problem is to decide on a total number of frames in the animation, num_frames, and plot the solution only at every \( k \)-th time level, with \( k \) stored in the variable every. The total number of time levels (i.e., the maximum possible number of frames) is the length of t, t.size, so if we want num_frames frames, we need to plot every t.size/num_frames time level:

every = int(t.size/float(num_frames))
if n % every == 0 or n == t.size-1:
    st.plot(x, u, 'r-', ...)

The initial condition (n=0) is natural to include, and as n % every == 0 will very seldom be true for the very final frame, we also add that frame (n == t.size-1).

A simple choice of numbers may illustrate the formulas: say we have 801 time levels in total (t.size) and we allow only 60 frames to be plotted. Then we need to plot every 801/60 time level, which, truncated to an integer, yields 13 as every. Using the modulo operator, n % every is zero every time n can be divided by 13 without a remainder. That is, the if test is true when n equals \( 0, 13, 26, 39, \ldots, 780, 793, 800 \). The associated code is included in the plot_u function in the file wave1D_u0_sv.py.
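The selection of plotted time levels is easy to check without any plotting. This sketch simply lists the n values that pass the if test for t.size equal to 801 and num_frames equal to 60:

```python
num_time_levels = 801        # plays the role of t.size
num_frames = 60
every = int(num_time_levels/float(num_frames))   # 13

plotted = [n for n in range(num_time_levels)
           if n % every == 0 or n == num_time_levels-1]
print(every, plotted[:4], plotted[-3:], len(plotted))
```

The list ends with 780, 793, 800 and contains 63 entries, so the truncation of every gives slightly more frames than num_frames.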

The previous code based on the plot interface from scitools.std can be run with Matplotlib as the visualization backend, but if one desires to program directly with Matplotlib, quite different code is needed. Matplotlib's interactive mode must be turned on:

import matplotlib.pyplot as plt
plt.ion()  # interactive mode on

The most commonly used animation technique with Matplotlib is to update the data in the plot at each time level:

# Make a first plot
lines = plt.plot(x, u)
# call plt.axis, plt.xlabel, plt.ylabel, etc. as desired

# At later time levels
lines[0].set_ydata(u)
plt.legend(['t=%g' % t[n]])
plt.draw()  # make updated plot
plt.savefig(...)

An alternative is to rebuild the plot at every time level:

plt.clf()  # delete any previous curve(s)
plt.axis([...])
plt.plot(x, u)
# plt.xlabel, plt.legend and other decorations
plt.draw()
plt.savefig(...)

Many prefer to work with figure and axis objects as in MATLAB:

fig = plt.figure()
...
fig.clf()
ax = fig.gca()
ax.axis(...)
ax.plot(x, u)
# ax.set_xlabel, ax.legend and other decorations
plt.draw()
fig.savefig(...)

The first demo of our 1D wave equation solver concerns vibrations of a string that is initially deformed to a triangular shape, like when picking a guitar string:

$$ \begin{equation} I(x) = \left\lbrace \begin{array}{ll} ax/x_0, & x < x_0,\\ a(L-x)/(L-x_0), & \hbox{otherwise} \end{array}\right. \label{wave:pde1:guitar:I} \end{equation} $$ We choose \( L=40 \) cm, \( x_0=0.8L \), \( a=5 \) mm, \( N_x=50 \), and a time frequency \( \nu = 440 \) Hz. The relation between the wave speed \( c \) and \( \nu \) is \( c=\nu\lambda \), where \( \lambda \) is the wavelength, taken as \( 2L \) because the longest wave on the string forms half a wavelength. There is no external force, so \( f=0 \), and the string is at rest initially so that \( V=0 \). A function setting these physical parameters and calling viz for this case goes as follows:

def guitar(C):
    """Triangular wave (pulled guitar string)."""
    L = 0.4
    x0 = 0.8*L
    a = 0.005
    freq = 440
    wavelength = 2*L
    c = freq*wavelength
    omega = 2*pi*freq
    num_periods = 1
    T = 2*pi/omega*num_periods
    Nx = 50

    def I(x):
        return a*x/x0 if x < x0 else a/(L-x0)*(L-x)

    umin = -1.2*a; umax = -umin
    cpu = viz(I, 0, 0, c, L, Nx, C, T, umin, umax, animate=True)

The associated program has the name wave1D_u0_s.py. Run the program and watch the movie of the vibrating string.
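The parameters in guitar are easy to check by hand: \( c=\nu\lambda = 440\cdot 0.8 = 352 \) m/s, and one period lasts \( T=1/\nu\approx 2.27 \) ms (guitar computes \( T \) as \( 2\pi/\omega \), which is the same). The sketch below repeats this arithmetic and, assuming \( C=1 \) (our choice), finds the number of time steps per period, which then equals \( \lambda/\Delta x = 100 \):

```python
L = 0.4                 # string length [m]
freq = 440              # frequency nu [Hz]
wavelength = 2*L        # longest wave: half a wavelength on the string
c = freq*wavelength     # wave speed [m/s]
T = 1.0/freq            # one period [s]

Nx = 50
dx = L/Nx
C = 1.0                 # assumed Courant number
dt = C*dx/c
N = int(round(T/dt))    # number of time steps for one period
print(c, T, dt, N)
```

A smaller Courant number increases N proportionally, since dt is proportional to C.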

The previous example demonstrated that quite some work is needed to establish relevant physical parameters for a case. By scaling the mathematical problem we can often reduce the need to estimate physical parameters dramatically. A scaling consists of introducing new independent and dependent variables, with the aim that their absolute values vary between 0 and 1: $$ \bar x = \frac{x}{L},\quad \bar t = \frac{c}{L}t,\quad \bar u = \frac{u}{a} \thinspace . $$ Replacing old by new variables in the PDE, using \( f=0 \), and dropping the bars results in \( u_{tt} = u_{xx} \), that is, the original equation with \( c=1 \). The initial condition corresponds to \eqref{wave:pde1:guitar:I} with \( a=1 \), \( L=1 \), and \( x_0\in [0,1] \). This means that we only need to decide on \( x_0 \), because the scaled problem corresponds to setting all other parameters to unity! In the code we can just set a=c=L=1, x0=0.8, and there is no need to calculate with wavelengths and frequencies to estimate \( c \).
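The reduction to \( u_{tt}=u_{xx} \) is a direct application of the chain rule. With \( x=L\bar x \), \( t=(L/c)\bar t \), and \( u=a\bar u \) we get

$$ \begin{align*} \frac{\partial^2 u}{\partial t^2} &= \frac{a c^2}{L^2}\frac{\partial^2 \bar u}{\partial \bar t^2},\quad \frac{\partial^2 u}{\partial x^2} = \frac{a}{L^2}\frac{\partial^2 \bar u}{\partial \bar x^2},\\ \frac{a c^2}{L^2}\frac{\partial^2 \bar u}{\partial \bar t^2} &= c^2\frac{a}{L^2}\frac{\partial^2 \bar u}{\partial \bar x^2} \quad\Rightarrow\quad \frac{\partial^2 \bar u}{\partial \bar t^2} = \frac{\partial^2 \bar u}{\partial \bar x^2} \thinspace . \end{align*} $$ Similarly, \( \bar u(\bar x,0) = I(L\bar x)/a \), which is the triangular shape with peak value 1 at \( \bar x_0 = x_0/L \).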

The only non-trivial parameter to estimate is \( T \) and how it relates to periods in periodic solutions. If \( u\sim \sin (\omega t) = \sin (\omega \bar t L/c) \) in time, with \( \omega = 2\pi c/\lambda \), where \( \lambda =2L \) is the wavelength, one period \( T \) in dimensionless time means \( \omega T L/c = 2\pi \). Since \( \omega L/c = \pi \), this gives \( T=2 \).

The computational algorithm for solving the wave equation visits one mesh point at a time and evaluates a formula for the new value at that point. Technically, this is implemented by a loop over array elements in a program. Such loops may run slowly in Python (and similar interpreted languages such as R and MATLAB). One technique for speeding up loops is to perform operations on entire arrays instead of working with one element at a time. This is referred to as vectorization, vector computing, or array computing. Operations on whole arrays are possible if the computations involving each element are independent of each other and therefore can, at least in principle, be performed simultaneously. Vectorization not only speeds up the code on serial computers, but it also makes it easy to exploit parallel computing.

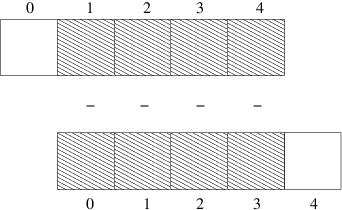

Efficient computing with numpy arrays demands that we avoid loops and compute with entire arrays at once, or at least large portions of them. Consider this calculation of differences:

n = u.size
d = zeros(n-1)   # array for the differences
for i in range(0, n-1):
    d[i] = u[i+1] - u[i]

All the differences here are independent of each other. The computation of d can therefore alternatively be done by subtracting the array \( (u_0,u_1,\ldots,u_{n-2}) \) from the array where the elements are shifted one index upwards: \( (u_1,u_2,\ldots,u_{n-1}) \), see Figure 3. The former subset of the array can be expressed by u[0:n-1], u[0:-1], or just u[:-1], meaning from index 0 up to, but not including, the last element (-1). The latter subset is obtained by u[1:n] or u[1:], meaning from index 1 and the rest of the array. The computation of d can now be done without an explicit Python loop:

d = u[1:] - u[:-1]

or with explicit limits if desired:

d = u[1:n] - u[0:n-1]

Indices with a colon, going from an index to (but not including) another index, are called slices. With numpy arrays, the computations are still done by loops, but in efficient, compiled, highly optimized code in C or Fortran. Such array operations can also easily be distributed among many processors on parallel computers. We say that the scalar code above, working on an element (a scalar) at a time, has been replaced by an equivalent vectorized code. The process of vectorizing code is called vectorization.

Figure 3: Illustration of subtracting two (displaced) slices of two arrays.

Newcomers to vectorization are encouraged to choose a small array u, say with five elements, and simulate with pen and paper both the loop version and the vectorized version.
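The pen-and-paper exercise can also be mirrored in a few lines of Python. The sketch below, assuming only NumPy and an arbitrary five-element array of squares, computes the differences with the loop and with slices and prints both results:

```python
import numpy as np

u = np.array([1.0, 4.0, 9.0, 16.0, 25.0])   # a small test array
n = u.size

# Scalar version: one difference at a time
d_loop = np.zeros(n-1)
for i in range(0, n-1):
    d_loop[i] = u[i+1] - u[i]

# Vectorized version: subtract two displaced slices of u
d_vec = u[1:] - u[:-1]

print(d_loop)   # [3. 5. 7. 9.]
print(d_vec)    # [3. 5. 7. 9.]
```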

Finite difference schemes basically contain differences between array elements with shifted indices. Consider the updating formula

for i in range(1, n-1):
    u2[i] = u[i-1] - 2*u[i] + u[i+1]

The vectorization consists of replacing the loop by arithmetic on slices of arrays of length n-2:

u2 = u[:-2] - 2*u[1:-1] + u[2:]

u2 = u[0:n-2] - 2*u[1:n-1] + u[2:n] # alternative

Note that u2 here gets length n-2. If u2 is already an array of length n and we want to use the formula to update all the "inner" elements of u2, as we will when solving a 1D wave equation, we can write

u2[1:-1] = u[:-2] - 2*u[1:-1] + u[2:]

u2[1:n-1] = u[0:n-2] - 2*u[1:n-1] + u[2:n] # alternative

Pen and paper calculations with a small array will demonstrate what is actually going on. The expression on the right-hand side is evaluated in the following steps, involving temporary arrays with intermediate results, since each arithmetic operation in the expression works on only two arrays at a time:

temp1 = 2*u[1:-1]

temp2 = u[0:-2] - temp1

temp3 = temp2 + u[2:]

u2[1:-1] = temp3

We can extend the example to a formula with an additional term computed by calling a function:

def f(x):
    return x**2 + 1

for i in range(1, n-1):
    u2[i] = u[i-1] - 2*u[i] + u[i+1] + f(x[i])

Assuming u2, u, and x all have length n, the vectorized version becomes

u2[1:-1] = u[:-2] - 2*u[1:-1] + u[2:] + f(x[1:-1])
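A quick way to convince oneself that the loop and the sliced expression agree is to run both on the same data. In this sketch the mesh size n=7 and the test data u = sin(πx) are arbitrary choices; the boundary elements of u2 stay at zero in both versions:

```python
import numpy as np

def f(x):
    return x**2 + 1

n = 7
x = np.linspace(0, 1, n)
u = np.sin(np.pi*x)          # arbitrary test data

# Scalar loop over the inner points
u2_loop = np.zeros(n)
for i in range(1, n-1):
    u2_loop[i] = u[i-1] - 2*u[i] + u[i+1] + f(x[i])

# Vectorized update of the same inner points
u2_vec = np.zeros(n)
u2_vec[1:-1] = u[:-2] - 2*u[1:-1] + u[2:] + f(x[1:-1])

print(np.allclose(u2_loop, u2_vec))   # True
```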

We now have the necessary tools to vectorize the algorithm for the wave equation. There are three loops: one for the initial condition, one for the first time step, and finally the loop that is repeated for all subsequent time levels. Since only the latter is repeated a potentially large number of times, we limit the vectorization effort to this loop:

for i in range(1, Nx):
    u[i] = 2*u_1[i] - u_2[i] + \
           C2*(u_1[i-1] - 2*u_1[i] + u_1[i+1])

The vectorized version becomes

u[1:-1] = - u_2[1:-1] + 2*u_1[1:-1] + \
          C2*(u_1[:-2] - 2*u_1[1:-1] + u_1[2:])

or

u[1:Nx] = 2*u_1[1:Nx] - u_2[1:Nx] + \
          C2*(u_1[0:Nx-1] - 2*u_1[1:Nx] + u_1[2:Nx+1])

The program wave1D_u0_sv.py contains a new version of the function solver where both the scalar and the vectorized loops are included.
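The program wave1D_u0_sv.py is not reproduced here. As a rough illustration of how the two loop versions can live in one function, the following is a minimal sketch of our own (the name solver_sketch is hypothetical) for \( u_{tt}=c^2u_{xx} \) with \( u=0 \) at both ends, \( u_t(x,0)=0 \), and no source term or user_action argument:

```python
import numpy as np

def solver_sketch(I, c, L, Nx, C, T, version='vectorized'):
    """
    Sketch: solve u_tt = c**2*u_xx on (0,L) with u=0 at x=0 and x=L,
    u(x,0)=I(x) and u_t(x,0)=0. Returns the solution at the last
    time level, the mesh x, and the final time.
    """
    x = np.linspace(0, L, Nx+1)
    dx = x[1] - x[0]
    dt = C*dx/c
    Nt = int(round(T/dt))
    C2 = C**2
    u_1 = np.array([I(xi) for xi in x])   # time level n (starts at n=0)
    u = np.zeros(Nx+1)                    # time level n+1

    # Special formula for the first step (u_t=0 at t=0)
    u[1:-1] = u_1[1:-1] + 0.5*C2*(u_1[:-2] - 2*u_1[1:-1] + u_1[2:])
    u[0] = u[Nx] = 0
    u_2, u_1, u = u_1, u, np.zeros(Nx+1)

    for n in range(1, Nt):
        if version == 'scalar':
            for i in range(1, Nx):
                u[i] = 2*u_1[i] - u_2[i] + \
                       C2*(u_1[i-1] - 2*u_1[i] + u_1[i+1])
        else:
            u[1:-1] = 2*u_1[1:-1] - u_2[1:-1] + \
                      C2*(u_1[:-2] - 2*u_1[1:-1] + u_1[2:])
        u[0] = u[Nx] = 0                  # Dirichlet conditions
        u_2, u_1, u = u_1, u, u_2         # rotate arrays for next step
    return u_1, x, Nt*dt
```

With \( C=1 \) this scheme reproduces the standing wave \( \sin(\pi x/L)\cos(\pi ct/L) \) at the mesh points to round-off accuracy, so comparing the returned array with that expression at the returned final time is a convenient check of both version='scalar' and version='vectorized'.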

We may reuse the quadratic solution \( \uex(x,t)=x(L-x)(1+\frac{1}{2}t) \) to verify the vectorized code as well. A nose test can then check both the scalar and the vectorized version. Moreover, we may use a user_action function that compares the computed and exact solution at each time level and performs an assert:

import nose.tools as nt

def test_quadratic():
    """
    Check that the scalar and vectorized versions work for
    a quadratic u(x,t)=x(L-x)(1+t/2) that is exactly reproduced.
    """
    exact_solution = lambda x, t: x*(L - x)*(1 + 0.5*t)
    I = lambda x: exact_solution(x, 0)
    V = lambda x: 0.5*exact_solution(x, 0)
    f = lambda x, t: 2*c**2*(1 + 0.5*t)
    L = 2.5
    c = 1.5
    Nx = 3  # very coarse mesh
    C = 1
    T = 18  # long time integration

    def assert_no_error(u, x, t, n):
        u_e = exact_solution(x, t[n])
        diff = abs(u - u_e).max()
        print(diff)
        nt.assert_almost_equal(diff, 0, places=13)

    solver(I, V, f, c, L, Nx, C, T,
           user_action=assert_no_error, version='scalar')
    solver(I, V, f, c, L, Nx, C, T,
           user_action=assert_no_error, version='vectorized')

Here, we also used the opportunity to demonstrate how to achieve very compact code with the use of lambda functions for the various input parameters that require a Python function.

Running the wave1D_u0_sv.py code with the previous string vibration example for \( N_x=50,100,200,400,800 \) and measuring the CPU time (see the run_efficiency_experiments function) shows that the ratio of the vectorized to the scalar CPU time goes approximately like \( 5/N_x \). In other words, the speed-up of vectorization grows roughly like \( N_x/5 \), which is a substantial effect!
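The run_efficiency_experiments function is not shown in this text. A rough way to reproduce this kind of measurement, assuming only NumPy and the standard timeit module (which measures wall-clock rather than CPU time), is to time a single update of the inner points for several mesh sizes. The function names below are our own, and the resulting numbers depend strongly on the machine and Python version:

```python
import timeit
import numpy as np

def scalar_step(u, u_1, u_2, C2):
    """Update the inner points of u with an explicit loop."""
    Nx = u.size - 1
    for i in range(1, Nx):
        u[i] = 2*u_1[i] - u_2[i] + C2*(u_1[i-1] - 2*u_1[i] + u_1[i+1])

def vectorized_step(u, u_1, u_2, C2):
    """Update the inner points of u with slices."""
    u[1:-1] = 2*u_1[1:-1] - u_2[1:-1] + \
              C2*(u_1[:-2] - 2*u_1[1:-1] + u_1[2:])

def speedup(Nx, repeat=20):
    """Time of the scalar step divided by time of the vectorized step."""
    x = np.linspace(0, 1, Nx+1)
    u_1 = np.sin(np.pi*x)
    u_2 = u_1.copy()
    u = np.zeros(Nx+1)
    t_s = timeit.timeit(lambda: scalar_step(u, u_1, u_2, 1.0), number=repeat)
    t_v = timeit.timeit(lambda: vectorized_step(u, u_1, u_2, 1.0), number=repeat)
    return t_s/t_v

for Nx in (50, 100, 200, 400, 800):
    print('Nx=%4d  speed-up: %.1f' % (Nx, speedup(Nx)))
```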

Extend the plot_u function in the file wave1D_u0_s.py to also store the solutions u in a list. To this end, declare all_u as an empty list in the viz function, outside plot_u, and perform an append operation inside the plot_u function. Note that a function, like plot_u, defined inside another function, like viz, remembers all local variables in viz, including all_u, even when plot_u is called (as user_action) from the solver function. Test both all_u.append(u) and all_u.append(u.copy()). Why does one of these constructions fail to store the solution correctly? Let the viz function return the all_u list converted to a two-dimensional numpy array. Filename: wave1D_u0_s2.py.

Redo Exercise 1: Add storage of solution in a user action function using a class for the user action function. That is, define a class Action where the all_u list is an attribute, and implement the user action function as a method (the special method __call__ is a natural choice). The class version avoids having the user action function depend on variables defined outside the function (such as all_u in Exercise 1: Add storage of solution in a user action function). Filename: wave1D_u0_s2c.py.

Use the program from Exercise 1: Add storage of solution in a user action function or Exercise 2: Use a class for the user action function to compute and store the solutions corresponding to different Courant numbers, say 1.0, 0.9, and 0.1. Make a visualization where the three solutions are compared. That is, each frame in the animation shows three curves. The challenge in such a visualization is to ensure that the curves in one plot correspond to the same time point. Use slicing of the array returned from the viz function to pick out the solution at the right time levels (provided that the Courant numbers are integer multiples of the smallest Courant number, such that the slicing works).

Now we shall generalize the boundary condition \( u=0 \) from the section Finite difference methods for waves on a string to the condition \( u_x=0 \), which is more complicated to express numerically and to implement.

When a wave hits a boundary and is to be reflected back, one applies the condition

$$ \begin{equation} \frac{\partial u}{\partial n} \equiv \normalvec\cdot\nabla u = 0 \label{wave:pde1:Neumann:0} \thinspace . \end{equation} $$ The derivative \( \partial /\partial n \) is taken in the outward normal direction of a general boundary. For a 1D domain \( [0,L] \), we have that \( \partial/\partial n = \partial /\partial x \) at \( x=L \) and \( \partial/\partial n = -\partial /\partial x \) at \( x=0 \). Boundary conditions specifying the value of \( \partial u/\partial n \) are known as Neumann conditions, while Dirichlet conditions refer to specifications of \( u \). When the values are zero (\( \partial u/\partial n=0 \) or \( u=0 \)) we speak about homogeneous Neumann or Dirichlet conditions.

How can we incorporate the condition \eqref{wave:pde1:Neumann:0} in the finite difference scheme? Since we have used central differences in all the other approximations to derivatives in the scheme, it is tempting to implement \eqref{wave:pde1:Neumann:0} at \( x=0 \) and \( t=t_n \) by the difference

$$ \begin{equation} \frac{u_{-1}^n - u_1^n}{2\Delta x} = 0 \thinspace . \label{wave:pde1:Neumann:0:cd} \end{equation} $$ The problem is that \( u_{-1}^n \) is not a \( u \) value that is being computed since the point is outside the mesh. However, if we combine \eqref{wave:pde1:Neumann:0:cd} with the scheme for \( i=0 \),

$$ \begin{equation} u^{n+1}_i = -u^{n-1}_i + 2u^n_i + C^2 \left(u^{n}_{i+1}-2u^{n}_{i} + u^{n}_{i-1}\right), \label{wave:pde1:Neumann:0:scheme} \end{equation} $$ we can eliminate the fictitious value \( u_{-1}^n \). We see that \( u_{-1}^n=u_1^n \) from \eqref{wave:pde1:Neumann:0:cd}, which can be used in \eqref{wave:pde1:Neumann:0:scheme} to arrive at a modified scheme for the boundary point \( u_0^{n+1} \):

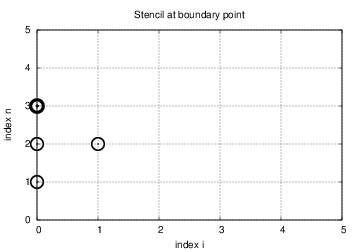

$$ \begin{equation} u^{n+1}_i = -u^{n-1}_i + 2u^n_i + 2C^2 \left(u^{n}_{i+1}-u^{n}_{i}\right),\quad i=0 \thinspace . \end{equation} $$ Figure 4 illustrates what this equation looks like for computing \( u^3_0 \) in terms of \( u^2_0 \), \( u^1_0 \), and \( u^2_1 \).

Figure 4: Modified stencil at a boundary with a Neumann condition.

Similarly, \eqref{wave:pde1:Neumann:0} applied at \( x=L \) is discretized by a central difference

$$ \begin{equation} \frac{u_{N_x+1}^n - u_{N_x-1}^n}{2\Delta x} = 0 \thinspace . \label{wave:pde1:Neumann:0:cd2} \end{equation} $$ Combined with the scheme for \( i=N_x \) we get a modified scheme for the boundary value \( u_{N_x}^{n+1} \):

$$ \begin{equation} u^{n+1}_i = -u^{n-1}_i + 2u^n_i + 2C^2 \left(u^{n}_{i-1}-u^{n}_{i}\right),\quad i=N_x \thinspace . \end{equation} $$

The modification of the scheme at the boundary is also required for the special formula for the first time step. How the stencil moves through the mesh and is modified at the boundary can be illustrated by an animation.

The implementation of the special formulas for the boundary points can benefit from using the general formula for the interior points, but replacing \( u_{i-1}^n \) by \( u_{i+1}^n \) for \( i=0 \) and \( u_{i+1}^n \) by \( u_{i-1}^n \) for \( i=N_x \). This is achieved by just replacing the index \( i-1 \) by \( i+1 \) for \( i=0 \) and \( i+1 \) by \( i-1 \) for \( i=N_x \). In a program, we introduce variables to hold the value of the offset indices: im1 for i-1 and ip1 for i+1. It is now just a matter of defining im1 and ip1 properly for the internal points and the boundary points. The coding for the latter reads

i = 0
ip1 = i+1
im1 = ip1   # i-1 -> i+1
u[i] = - u_2[i] + 2*u_1[i] + C2*(u_1[im1] - 2*u_1[i] + u_1[ip1])

i = Nx
im1 = i-1
ip1 = im1   # i+1 -> i-1
u[i] = - u_2[i] + 2*u_1[i] + C2*(u_1[im1] - 2*u_1[i] + u_1[ip1])

We can in fact create one loop over both the internal and boundary points and use only one updating formula:

for i in range(0, Nx+1):
    ip1 = i+1 if i < Nx else i-1   # i+1 -> i-1 at x=L
    im1 = i-1 if i > 0 else i+1    # i-1 -> i+1 at x=0
    u[i] = - u_2[i] + 2*u_1[i] + C2*(u_1[im1] - 2*u_1[i] + u_1[ip1])
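As a check of the index trick, the sketch below performs one time step with the single loop above (wrapped in a function neumann_step of our own naming) and compares the boundary values with the modified formulas derived earlier. The arrays hold arbitrary test data, and C2 is the squared Courant number:

```python
import numpy as np

def neumann_step(u_1, u_2, C2):
    """One step of the scheme with u_x=0 at both ends (index trick)."""
    Nx = u_1.size - 1
    u = np.zeros_like(u_1)
    for i in range(0, Nx+1):
        ip1 = i+1 if i < Nx else i-1   # i+1 -> i-1 at x=L
        im1 = i-1 if i > 0 else i+1    # i-1 -> i+1 at x=0
        u[i] = - u_2[i] + 2*u_1[i] + C2*(u_1[im1] - 2*u_1[i] + u_1[ip1])
    return u

x = np.linspace(0, 1, 11)
u_1 = np.cos(2*np.pi*x) + 0.3*x   # arbitrary data at time level n
u_2 = np.cos(2*np.pi*x)           # arbitrary data at time level n-1
C2 = 0.8**2
u = neumann_step(u_1, u_2, C2)

# Modified boundary formulas with the factor 2*C2
u0 = -u_2[0] + 2*u_1[0] + 2*C2*(u_1[1] - u_1[0])
uN = -u_2[-1] + 2*u_1[-1] + 2*C2*(u_1[-2] - u_1[-1])
print(np.isclose(u[0], u0), np.isclose(u[-1], uN))   # True True
```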

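The program wave1D_dn.py solves the 1D wave equation \( u_{tt}=c^2u_{xx}+f(x,t) \) with general boundary and initial conditions: \( u=U_0(t) \) or \( u_x=0 \) at \( x=0 \), \( u=U_L(t) \) or \( u_x=0 \) at \( x=L \), and \( u=I(x) \), \( u_t=V(x) \) at \( t=0 \).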

Instead of modifying the scheme at the boundary, we can introduce extra points outside the mesh such that \( u_{-1}^n \) and \( u_{N_x+1}^n \) are defined. Adding the intervals \( [-\Delta x,0] \) and \( [L, L+\Delta x] \), often referred to as "ghost cells", to the mesh gives us all the needed mesh points \( i=-1,0,\ldots,N_x,N_x+1 \). If we ensure that we always have $$ u_{-1}^n = u_{1}^n\hbox{ and } u_{N_x-1}^n = u_{N_x+1}^n,$$ the application of the standard scheme at a boundary point \( i=0 \) or \( i=N_x \) will be correct and ensure the solution is compatible with the boundary condition.

We may keep x as the array for the physical mesh points, but just add extra elements to u,

u = zeros(Nx+3)

and similar for u_1 and u_2.

A major indexing problem arises since Python indices must start at 0 (u[-1] always means the last element in u). In the mathematics, we should therefore write the scheme as

$$ u^{n+1}_i = \cdots,\quad i=1,\ldots,N_x+1,$$ when \( i \) runs through all points in the physical mesh, and \( i=0 \) corresponds to the fictitious point \( x_{-1}=-\Delta x \). The old code for updating u at the inner mesh points must now be extended to cover all points:

for i in range(1, Nx+2):
    u[i] = - u_2[i] + 2*u_1[i] + \
           C2*(u_1[i-1] - 2*u_1[i] + u_1[i+1]) + \
           dt2*f(x[i-1], t[n])   # u[i] belongs to the physical point x[i-1]

Then the ghost points are updated, assuming the Neumann condition applies at both boundaries:

u[0] = u[2]

u[Nx+2] = u[Nx]

The ghost cell is only added to the boundary where we have a Neumann condition.

The solution returned to the user should preferably be an array with values corresponding to the physical mesh points in x, meaning that we return u[1:-1] (if there are two ghost cells).
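The steps above can be sketched as follows, assuming constant \( c \), no source term, Neumann conditions at both ends (hence two ghost cells), and the index shift where u[i] corresponds to x[i-1]. The helper names ghost_step and add_ghosts are hypothetical; add_ghosts fills the ghost values of the previous time levels so that they satisfy the symmetry conditions before the step:

```python
import numpy as np

def ghost_step(u_1, u_2, C2):
    """One step with ghost cells u[0] and u[Nx+2]; returns the full array."""
    Nx = u_1.size - 3
    u = np.zeros_like(u_1)
    # Standard scheme at all physical points, i=1,...,Nx+1
    u[1:-1] = - u_2[1:-1] + 2*u_1[1:-1] + \
              C2*(u_1[:-2] - 2*u_1[1:-1] + u_1[2:])
    # Update the ghost values so that u_x=0 holds at both ends
    u[0] = u[2]
    u[Nx+2] = u[Nx]
    return u

def add_ghosts(v):
    """Copy physical values v[0..Nx] into an array with symmetric ghosts."""
    w = np.zeros(v.size + 2)
    w[1:-1] = v
    w[0] = w[2]
    w[-1] = w[-3]
    return w

x = np.linspace(0, 1, 11)
v_1 = np.cos(2*np.pi*x) + 0.3*x   # arbitrary data at time level n
v_2 = np.cos(2*np.pi*x)           # arbitrary data at time level n-1
C2 = 0.8**2
u = ghost_step(add_ghosts(v_1), add_ghosts(v_2), C2)
u_phys = u[1:-1]                  # values at the physical mesh points x

# Compare with the modified boundary formula at x=0
u0 = -v_2[0] + 2*v_1[0] + 2*C2*(v_1[1] - v_1[0])
print(np.isclose(u_phys[0], u0))  # True
```

The agreement at the first physical point is exactly what the ghost values are designed to achieve: the standard scheme applied there reduces to the modified boundary formula.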

Our next generalization of the 1D wave equation \eqref{wave:pde1} or \eqref{wave:pde2} is to allow for a variable wave velocity \( c \): \( c=c(x) \), usually motivated by wave motion in a domain composed of different physical media with different properties for propagating waves.

Instead of working with the squared quantity \( c^2(x) \) we shall for notational convenience introduce \( q(x) = c^2(x) \). A 1D wave equation with variable wave velocity often takes the form

$$ \begin{equation} \frac{\partial^2 u}{\partial t^2} = \frac{\partial}{\partial x}\left( q(x) \frac{\partial u}{\partial x}\right) + f(x,t) \label{wave:pde2:var:c:pde} \thinspace . \end{equation} $$ This equation sampled at a mesh point \( (x_i,t_n) \) reads $$ \frac{\partial^2 }{\partial t^2} u(x_i,t_n) = \frac{\partial}{\partial x}\left( q(x_i) \frac{\partial}{\partial x} u(x_i,t_n)\right) + f(x_i,t_n), $$ where the only new term is $$ \frac{\partial}{\partial x}\left( q(x_i) \frac{\partial}{\partial x} u(x_i,t_n)\right) = \left[ \frac{\partial}{\partial x}\left( q(x) \frac{\partial u}{\partial x}\right)\right]^n_i \thinspace . $$

The principal idea is to first discretize the outer derivative. Define $$ \phi = q(x) \frac{\partial u}{\partial x}, $$ and use a centered derivative around \( x=x_i \) for the derivative of \( \phi \): $$ \left[\frac{\partial\phi}{\partial x}\right]^n_i \approx \frac{\phi_{i+\frac{1}{2}} - \phi_{i-\frac{1}{2}}}{\Delta x} = [D_x\phi]^n_i \thinspace . $$ Then discretize $$ \phi_{i+\frac{1}{2}} = q_{i+\frac{1}{2}} \left[\frac{\partial u}{\partial x}\right]^n_{i+\frac{1}{2}} \approx q_{i+\frac{1}{2}} \frac{u^n_{i+1} - u^n_{i}}{\Delta x} = [q D_x u]_{i+\frac{1}{2}}^n \thinspace . $$ Similarly, $$ \phi_{i-\frac{1}{2}} = q_{i-\frac{1}{2}} \left[\frac{\partial u}{\partial x}\right]^n_{i-\frac{1}{2}} \approx q_{i-\frac{1}{2}} \frac{u^n_{i} - u^n_{i-1}}{\Delta x} = [q D_x u]_{i-\frac{1}{2}}^n \thinspace . $$ These intermediate results are now combined to $$ \begin{equation} \left[ \frac{\partial}{\partial x}\left( q(x) \frac{\partial u}{\partial x}\right)\right]^n_i \approx \frac{1}{\Delta x^2} \left( q_{i+\frac{1}{2}} \left({u^n_{i+1} - u^n_{i}}\right) - q_{i-\frac{1}{2}} \left({u^n_{i} - u^n_{i-1}}\right)\right) \label{wave:pde2:var:c:formula} \thinspace . \end{equation} $$ With operator notation we can write the discretization as $$ \begin{equation} \left[ \frac{\partial}{\partial x}\left( q(x) \frac{\partial u}{\partial x}\right)\right]^n_i \approx [D_xq D_x u]^n_i \label{wave:pde2:var:c:formula:op} \thinspace . \end{equation} $$
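A simple way to see that the discretization \( [D_xq D_x u]^n_i \) is second-order accurate is to apply it to a known smooth function. In this sketch, \( q(x)=1+x^2 \) and \( u(x)=\sin x \) are arbitrary test choices, \( q \) is evaluated directly at \( x_{i\pm\frac{1}{2}} \), and the maximum error should drop by roughly a factor of four each time \( \Delta x \) is halved:

```python
import numpy as np

def DxqDx(u, q_func, x):
    """[D_x q D_x u]_i at the inner points, with q evaluated at x_{i+-1/2}."""
    dx = x[1] - x[0]
    q_plus = q_func(x[1:-1] + 0.5*dx)
    q_minus = q_func(x[1:-1] - 0.5*dx)
    return (q_plus*(u[2:] - u[1:-1]) - q_minus*(u[1:-1] - u[:-2]))/dx**2

q = lambda x: 1 + x**2
u_exact = lambda x: np.sin(x)
# Exact (q u')' = 2x cos(x) - (1 + x**2) sin(x)
rhs_exact = lambda x: 2*x*np.cos(x) - (1 + x**2)*np.sin(x)

errors = []
for Nx in (10, 20, 40):
    x = np.linspace(0, 1, Nx+1)
    e = np.abs(DxqDx(u_exact(x), q, x) - rhs_exact(x[1:-1])).max()
    errors.append(e)
    print(Nx, e)
```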

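Remark. Many are tempted to use the chain rule on the term \( \frac{\partial}{\partial x}\left( q(x) \frac{\partial u}{\partial x}\right) \), but this is not a good idea when discretizing such a term: the expanded form \( q'u_x + qu_{xx} \) requires the derivative \( q' \), which does not exist where \( q \) jumps between different media, whereas the discretization \( [D_xq D_x u]^n_i \) only needs values of \( q \) between the mesh points.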

If \( q \) is a known function of \( x \), we can easily evaluate \( q_{i+\frac{1}{2}} \) simply as \( q(x_{i+\frac{1}{2}}) \) with \( x_{i+\frac{1}{2}} = x_i + \frac{1}{2}\Delta x \). However, in many cases \( c \), and hence \( q \), is only known as a discrete function, often at the mesh points \( x_i \). Evaluating \( q \) between two mesh points \( x_i \) and \( x_{i+1} \) can be done by averaging in three ways: $$ \begin{align} q_{i+\frac{1}{2}} \approx \frac{1}{2}\left( q_{i} + q_{i+1}\right) = [\overline{q}^{x}]_i,\quad\hbox{(arithmetic mean)} \label{wave:pde2:var:c:mean:arithmetic}\\ q_{i+\frac{1}{2}} \approx 2\left( \frac{1}{q_{i}} + \frac{1}{q_{i+1}}\right)^{-1}, \quad\hbox{(harmonic mean)} \label{wave:pde2:var:c:mean:harmonic}\\ q_{i+\frac{1}{2}} \approx \left(q_{i}q_{i+1}\right)^{1/2}, \quad\hbox{(geometric mean)} \label{wave:pde2:var:c:mean:geometric} \end{align} $$ The arithmetic mean in \eqref{wave:pde2:var:c:mean:arithmetic} is by far the most commonly used averaging technique.
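The three averages can be computed for all midpoints at once with slices. The function q_mid below is a hypothetical helper, and the test array with a jump from 1 to 4 is arbitrary:

```python
import numpy as np

def q_mid(q, mean='arithmetic'):
    """Approximate q at x_{i+1/2}, i=0,...,Nx-1, from mesh values q[0..Nx]."""
    qa, qb = q[:-1], q[1:]
    if mean == 'arithmetic':
        return 0.5*(qa + qb)
    elif mean == 'harmonic':
        return 2.0/(1.0/qa + 1.0/qb)
    elif mean == 'geometric':
        return np.sqrt(qa*qb)
    raise ValueError('unknown mean %r' % mean)

q = np.array([1.0, 1.0, 4.0, 4.0])   # q jumps between x_1 and x_2
for mean in ('arithmetic', 'harmonic', 'geometric'):
    print(mean, q_mid(q, mean))
```

At the jump, the arithmetic, harmonic, and geometric means give 2.5, 1.6, and 2, respectively; the harmonic mean is the smallest and is dominated by the smaller of the two values.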

With the operator notation from \eqref{wave:pde2:var:c:mean:arithmetic} we can specify the discretization of the complete variable-coefficient wave equation in a compact way: $$ \begin{equation} \lbrack D_tD_t u = D_x\overline{q}^{x}D_x u + f\rbrack^{n}_i \thinspace . \label{wave:pde2:var:c:scheme:op} \end{equation} $$ From this notation we immediately see which kind of difference each term is approximated by. The notation \( \overline{q}^{x} \) also specifies that the variable coefficient is approximated by an arithmetic mean. With the notation \( [D_xq D_x u]^n_i \), we specify that \( q \) is evaluated directly, as a function, between the mesh points: \( q(x_{i-\frac{1}{2}}) \) and \( q(x_{i+\frac{1}{2}}) \).

Before any implementation, it remains to solve \eqref{wave:pde2:var:c:scheme:op} with respect to \( u_i^{n+1} \):

$$ \begin{align} u^{n+1}_i &= - u_i^{n-1} + 2u_i^n + \nonumber\\ &\quad \left(\frac{\Delta t}{\Delta x}\right)^2 \left( \frac{1}{2}(q_{i} + q_{i+1})(u_{i+1}^n - u_{i}^n) - \frac{1}{2}(q_{i} + q_{i-1})(u_{i}^n - u_{i-1}^n)\right) + \nonumber\\ & \quad \Delta t^2 f^n_i \thinspace . \label{wave:pde2:var:c:scheme:impl} \end{align} $$

The stability criterion derived in the section Analysis of the finite difference scheme reads \( \Delta t\leq \Delta x/c \). If \( c=c(x) \), the criterion depends on the spatial location. We must therefore choose a \( \Delta t \) that is small enough such that no mesh cell has \( \Delta t > \Delta x/c(x) \). That is, we must use the largest \( c \) value in the criterion:

$$ \begin{equation} \Delta t \leq \beta \frac{\Delta x}{\max_{x\in [0,L]}c(x)} \thinspace . \end{equation} $$ The parameter \( \beta \) is included as a safety factor: in some problems with a significantly varying \( c \) it turns out that one must choose \( \beta <1 \) to have stable solutions (\( \beta =0.9 \) may act as an all-round value).
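When \( c \) is only known at the mesh points, the maximum over \( [0,L] \) is naturally replaced by the maximum over the mesh values. A minimal sketch, where stable_dt is a hypothetical helper and a medium with \( c=0.5 \) in \( [0.7,0.9] \) is an arbitrary example:

```python
import numpy as np

def stable_dt(c, x, beta=0.9):
    """Time step from dt <= beta*dx/max(c); c holds values at the mesh points."""
    dx = x[1] - x[0]
    return beta*dx/np.max(c)

x = np.linspace(0, 1, 101)
c = np.where((x >= 0.7) & (x <= 0.9), 0.5, 1.0)   # slower medium in [0.7,0.9]
dt = stable_dt(c, x)
print(dt)
```

Here \( \Delta x=0.01 \) and \( \max c=1 \), so \( \Delta t = 0.9\cdot 0.01 = 0.009 \) (up to round-off); the slower medium does not restrict the time step.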

The implementation of the scheme with a variable wave velocity may assume that \( c \) is available as an array c[i] at the mesh points. The following loop is a straightforward implementation of the scheme \eqref{wave:pde2:var:c:scheme:impl}:

for i in range(1, Nx):
    u[i] = - u_2[i] + 2*u_1[i] + \
           C2*(0.5*(q[i] + q[i+1])*(u_1[i+1] - u_1[i]) - \
               0.5*(q[i] + q[i-1])*(u_1[i] - u_1[i-1])) + \
           dt2*f(x[i], t[n])

The coefficient C2 is now defined as (dt/dx)**2 and not as the squared Courant number, since the wave velocity is variable and appears inside the parentheses.

With Neumann conditions \( \partial u/\partial x=0 \) at the boundary, we need to combine this scheme with the discrete version of the boundary condition, \( u^{n}_{-1}=u^{n}_1 \), here shown for \( x=0 \):

i = 0
ip1 = i+1
im1 = ip1   # i-1 -> i+1
u[i] = - u_2[i] + 2*u_1[i] + \
       C2*(0.5*(q[i] + q[ip1])*(u_1[ip1] - u_1[i]) - \
           0.5*(q[i] + q[im1])*(u_1[i] - u_1[im1])) + \
       dt2*f(x[i], t[n])

The use of q[i] + q[i+1] in place of q[i] + q[i-1] might be unexpected, but the original formula with q[i-1] would actually access q[-1], which is legal Python indexing but refers to the \( q \) value at the opposite end of the mesh! To obtain a more reasonable value of \( q_{-1/2} \), we therefore assume \( q'(0)=0 \) so that \( q_{-1/2}=q_{1/2} \). Alternatively, one could compute \( q_{-1/2} \) by extrapolation from \( q_0 \) and \( q_1 \):

$$ q_{-\frac{1}{2}} \approx q_0 - \frac{1}{2}(q_1-q_0) , $$ but this would modify the scheme at the boundary so that we could not reuse the formula for the interior points as we do above.

A vectorized version of the scheme with a variable coefficient at internal points in the mesh becomes

u[1:-1] = - u_2[1:-1] + 2*u_1[1:-1] + \
          C2*(0.5*(q[1:-1] + q[2:])*(u_1[2:] - u_1[1:-1]) -
              0.5*(q[1:-1] + q[:-2])*(u_1[1:-1] - u_1[:-2])) + \
          dt2*f(x[1:-1], t[n])
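Before relying on the vectorized update, it is worth checking it against the scalar loop. The sketch below wraps both versions in functions (names of our own choosing) that update only the inner points, and uses arbitrary smooth test data with \( q=1+x^2 \):

```python
import numpy as np

def step_scalar(u_1, u_2, q, C2, dt2, f, x, t_n):
    """Inner-point update with a loop; boundary values are left at zero."""
    u = np.zeros_like(u_1)
    for i in range(1, u.size-1):
        u[i] = - u_2[i] + 2*u_1[i] + \
               C2*(0.5*(q[i] + q[i+1])*(u_1[i+1] - u_1[i]) -
                   0.5*(q[i] + q[i-1])*(u_1[i] - u_1[i-1])) + \
               dt2*f(x[i], t_n)
    return u

def step_vectorized(u_1, u_2, q, C2, dt2, f, x, t_n):
    """Same update as step_scalar, expressed with slices."""
    u = np.zeros_like(u_1)
    u[1:-1] = - u_2[1:-1] + 2*u_1[1:-1] + \
              C2*(0.5*(q[1:-1] + q[2:])*(u_1[2:] - u_1[1:-1]) -
                  0.5*(q[1:-1] + q[:-2])*(u_1[1:-1] - u_1[:-2])) + \
              dt2*f(x[1:-1], t_n)
    return u

x = np.linspace(0, 1, 21)
dx = x[1] - x[0]
dt = 0.5*dx                      # stable here since c = sqrt(q) <= sqrt(2)
q = 1 + x**2                     # q = c**2, a smooth test coefficient
u_1 = np.sin(np.pi*x)            # arbitrary data at time level n
u_2 = 0.99*u_1                   # arbitrary data at time level n-1
f = lambda x, t: x*t             # works for scalars and arrays
args = (u_1, u_2, q, (dt/dx)**2, dt**2, f, x, 0.3)
print(np.allclose(step_scalar(*args), step_vectorized(*args)))   # True
```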

Sometimes a wave PDE has a variable coefficient also in front of the time-derivative term:

$$ \begin{equation} \varrho(x)\frac{\partial^2 u}{\partial t^2} = \frac{\partial}{\partial x}\left( q(x) \frac{\partial u}{\partial x}\right) + f(x,t) \label{wave:pde2:var:c:pde2} \thinspace . \end{equation} $$ A natural scheme is

$$ \begin{equation} [\varrho D_tD_t u = D_x\overline{q}^xD_x u + f]^n_i \thinspace . \end{equation} $$ We realize that the \( \varrho \) coefficient poses no particular difficulty, because only the value \( \varrho_i \) at the mesh point enters the formula above (when written out). There is hence no need for any averaging of \( \varrho \). Often, \( \varrho \) will be moved to the right-hand side, also without any difficulty:

$$ \begin{equation} [D_tD_t u = \varrho^{-1}(D_x\overline{q}^xD_x u + f)]^n_i \thinspace . \end{equation} $$

Waves die out by two mechanisms. In 2D and 3D the energy of the wave spreads out in space, and energy conservation then requires the amplitude to decrease. This effect is not present in 1D. The other cause of amplitude reduction is damping. For example, the vibrations of a string die out because of air resistance and non-elastic effects in the string.

The simplest way of including damping is to add a first-order derivative to the equation (in the same way as friction forces enter a vibrating mechanical system): $$ \begin{equation} \frac{\partial^2 u}{\partial t^2} + b\frac{\partial u}{\partial t} = c^2\frac{\partial^2 u}{\partial x^2} + f(x,t), \label{wave:pde3} \end{equation} $$ where \( b \geq 0 \) is a prescribed damping coefficient.

A typical discretization of \eqref{wave:pde3} in terms of centered differences reads

$$ \begin{equation} [D_tD_t u + bD_{2t}u = c^2D_xD_x u + f]^n_i \thinspace . \label{wave:pde3:fd} \end{equation} $$ Writing out the equation and solving for the unknown \( u^{n+1}_i \) gives the scheme

$$ \begin{equation} u^{n+1}_i = \left(1 + \frac{1}{2}b\Delta t\right)^{-1}\left(\left(\frac{1}{2}b\Delta t -1\right) u^{n-1}_i + 2u^n_i + C^2 \left(u^{n}_{i+1}-2u^{n}_{i} + u^{n}_{i-1}\right) + \Delta t^2 f^n_i\right) \thinspace . \label{wave:pde3:fd2} \end{equation} $$ New equations must be derived for \( u^1_i \), and for boundary points in case of Neumann conditions.
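The scheme can be implemented with slices in the same way as the undamped one. The sketch below, with a hypothetical function damped_step, assumes \( u=0 \) at both ends and covers only the general time step (not the special first step). It then checks, with arbitrary test data, that the computed values satisfy the discrete equation \eqref{wave:pde3:fd} at the inner points up to round-off:

```python
import numpy as np

def damped_step(u_1, u_2, C2, b, dt, f_n):
    """
    One step of the damped scheme at the inner points; u=0 is assumed
    at both ends. f_n holds f(x_i, t_n) at all mesh points.
    """
    u = np.zeros_like(u_1)
    u[1:-1] = ((0.5*b*dt - 1)*u_2[1:-1] + 2*u_1[1:-1] +
               C2*(u_1[:-2] - 2*u_1[1:-1] + u_1[2:]) +
               dt**2*f_n[1:-1]) / (1 + 0.5*b*dt)
    return u

x = np.linspace(0, 1, 11)
dx, c, b = x[1] - x[0], 1.0, 2.0
dt = 0.8*dx/c
u_1 = np.sin(np.pi*x)            # arbitrary data at time level n
u_2 = 1.01*u_1                   # arbitrary data at time level n-1
f_n = x**2                       # arbitrary source term at t_n
u = damped_step(u_1, u_2, (c*dt/dx)**2, b, dt, f_n)

# Residual of [D_tD_t u + b D_2t u = c^2 D_xD_x u + f] at the inner points
i = slice(1, -1)
residual = ((u[i] - 2*u_1[i] + u_2[i])/dt**2 + b*(u[i] - u_2[i])/(2*dt)
            - c**2*(u_1[:-2] - 2*u_1[i] + u_1[2:])/dx**2 - f_n[i])
print(np.abs(residual).max())    # of round-off size
```

Setting b=0 in damped_step gives back the undamped update, which is a useful sanity check when the damping term is added to an existing solver.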

The damping is very small in many wave phenomena and then only evident for very long time simulations, so it is common to drop the \( bu_t \) term in the wave equation.

The program wave1D_dn_vc.py is a fairly general code for 1D wave propagation problems that targets the following initial-boundary value problem

$$ \begin{align} u_{tt} &= (c^2(x)u_x)_x + f(x,t),\quad x\in (0,L),\ t\in (0,T], \label{wave:pde2:software:ueq}\\ u(x,0) &= I(x),\quad x\in [0,L],\\ u_t(x,0) &= V(x),\quad x\in [0,L],\\ u(0,t) &= U_0(t)\hbox{ or } u_x(0,t)=0,\quad t\in (0,T],\\ u(L,t) &= U_L(t)\hbox{ or } u_x(L,t)=0,\quad t\in (0,T] \label{wave:pde2:software:bcL} \end{align} $$

The solver function is a natural extension of the simplest solver function in the initial wave1D_u0_s.py program, extended with Neumann boundary conditions (\( u_x=0 \)), a possibly time-varying boundary condition on \( u \) (\( U_0(t) \), \( U_L(t) \)), and a variable wave velocity. The different code segments needed to make these extensions are shown and commented upon in the preceding text.

The vectorization is only applied inside the time loop, not to the initial condition or the first time step, since this initial work is negligible for long time simulations in 1D problems.

A useful feature in the wave1D_dn_vc.py program is the specification of the user_action function as a class. Although the plot_u function in the viz function of previous wave1D*.py programs remembers the local variables in the viz function, it is a cleaner solution to store the needed variables together with the function, which is exactly what a class offers.

A class for flexible plotting, cleaning up files, and making movie files, as the viz and plot_u functions did, can be coded as follows:

import os, time, glob, importlib

class PlotSolution:
    """
    Class for the user_action function in solver.
    Visualizes the solution only.
    """
    def __init__(self,
                 casename='tmp',    # prefix in filenames
                 umin=-1, umax=1,   # fixed range of y axis
                 pause_between_frames=None,  # movie speed
                 backend='matplotlib',       # or 'gnuplot'
                 screen_movie=True, # show movie on screen?
                 every_frame=1):    # show only every every_frame-th frame
        self.casename = casename
        self.yaxis = [umin, umax]
        self.pause = pause_between_frames
        # Import scitools.easyviz.<backend>_ as the plotting engine
        # (importlib works where exec('import ...') inside a method does not)
        module = 'scitools.easyviz.' + backend + '_'
        self.st = importlib.import_module(module)
        self.screen_movie = screen_movie
        self.every_frame = every_frame
        # Clean up old movie frames for this case
        for filename in glob.glob('%s_frame_*.png' % self.casename):
            os.remove(filename)

    def __call__(self, u, x, t, n):
        if n % self.every_frame != 0:
            return
        self.st.plot(x, u, 'r-',
                     xlabel='x', ylabel='u',
                     axis=[x[0], x[-1],
                           self.yaxis[0], self.yaxis[1]],
                     title='t=%f' % t[n],
                     show=self.screen_movie)
        # pause
        if t[n] == 0:
            time.sleep(2)  # let initial condition stay 2 s
        else:
            if self.pause is None:
                pause = 0.2 if u.size < 100 else 0
            else:
                pause = self.pause
            time.sleep(pause)
        self.st.savefig('%s_frame_%04d.png' % (self.casename, n))

Understanding this class requires quite some familiarity with Python in general and class programming in particular.

The constructor shows how we can flexibly import the plotting engine as (typically) scitools.easyviz.gnuplot_ or scitools.easyviz.matplotlib_ (the trailing underscore is SciTools' way of avoiding a name clash between its interface modules and the plotting packages). With the screen_movie parameter we can suppress displaying each movie frame on the screen (show=False parameter to plot). Alternatively, for slow movies associated with fine meshes, one can set every_frame to, e.g., 10, so that only every 10th frame is shown.

The __call__ method makes PlotSolution instances behave like functions, so we can just pass an instance, say p, as the user_action argument in the solver function, and any call to user_action will be a call to p.__call__.
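To illustrate the mechanism without SciTools, the sketch below pairs a small class MaxAmplitude, which records \( \max|u| \) at each time level, with a hypothetical stand-in toy_solver that does nothing but call user_action(u, x, t, n) the way the solver in the text does. Both names are our own:

```python
import numpy as np

class MaxAmplitude:
    """user_action object that records max|u| at every time level."""
    def __init__(self):
        self.history = []

    def __call__(self, u, x, t, n):
        self.history.append(float(np.abs(u).max()))

def toy_solver(user_action, Nt=3):
    """Hypothetical stand-in: calls user_action(u, x, t, n) for n=0,...,Nt."""
    x = np.linspace(0, 1, 5)
    t = np.linspace(0, 1, Nt+1)
    for n in range(Nt+1):
        u = (n+1)*np.sin(np.pi*x)     # fake 'solution' at time level n
        user_action(u, x, t, n)

p = MaxAmplitude()
toy_solver(user_action=p)   # each user_action(...) call runs p.__call__(...)
print(p.history)            # [1.0, 2.0, 3.0, 4.0]
```

The stored history survives after the solver returns, since it is an attribute of the instance rather than a variable in an enclosing function.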

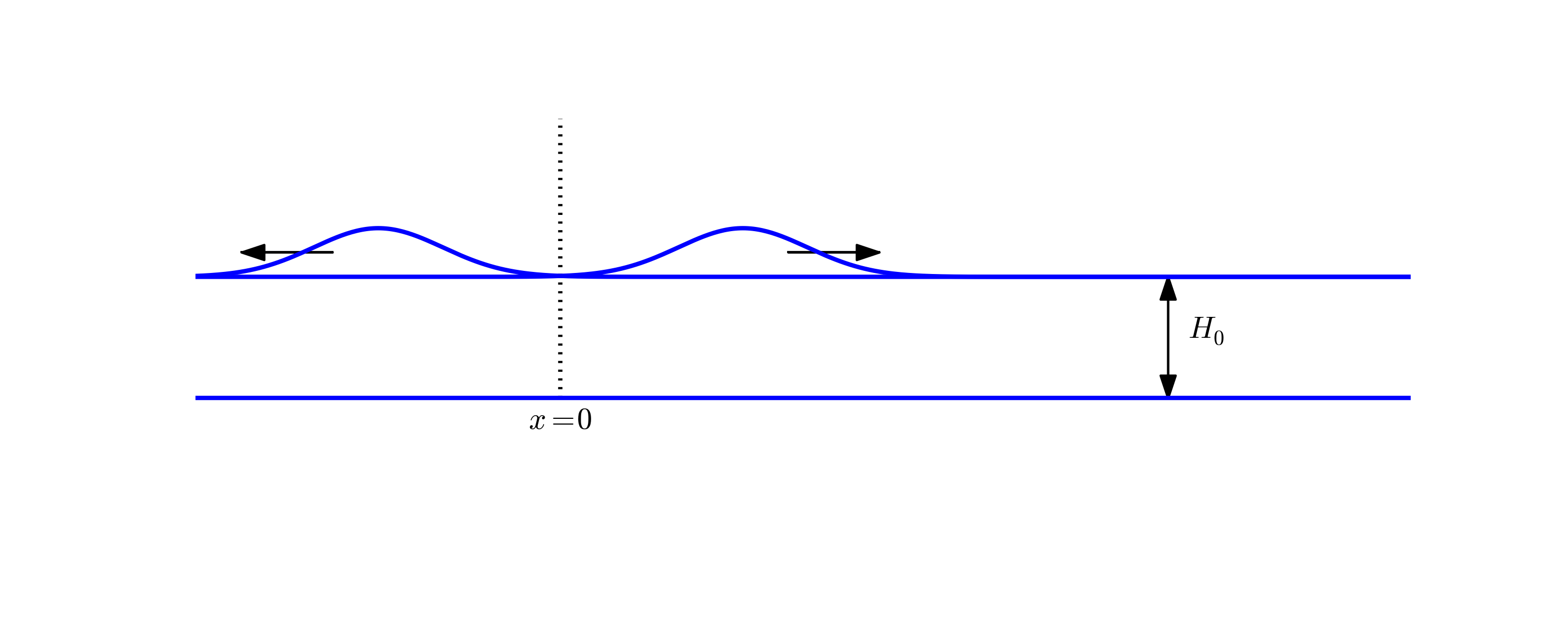

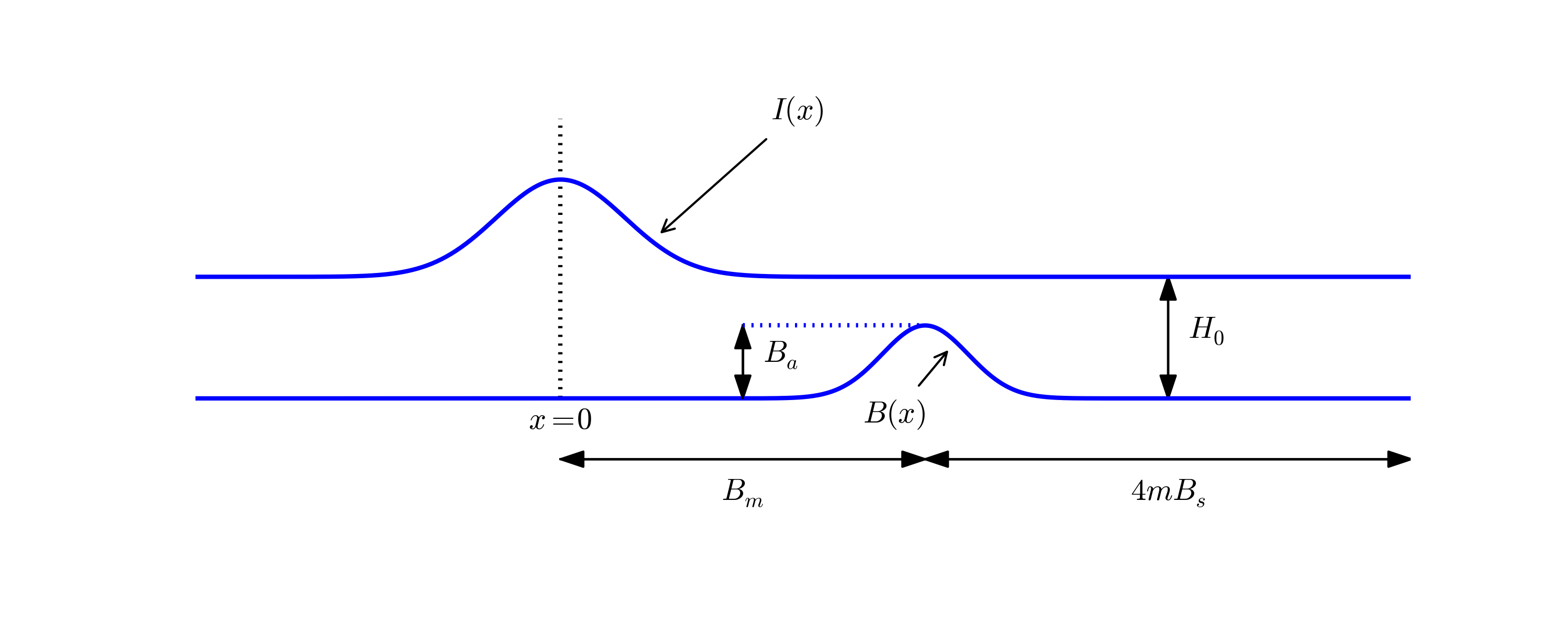

The function pulse in wave1D_dn_vc.py demonstrates wave motion in heterogeneous media where \( c \) varies. One can specify an interval where the wave velocity is decreased by a factor slowness_factor (or increased by making this factor less than one). Four types of initial conditions are available: a square pulse (plug), a Gaussian function (gaussian), a cosine "hat" consisting of one period of the cosine function (cosinehat), and half a period of a cosine "hat" (half-cosinehat). These peak-shaped initial conditions can be placed in the middle (loc='center') or at the left end (loc='left') of the domain. The pulse function is a flexible tool for playing around with various wave shapes and location of a medium with a different wave velocity:

from numpy import exp, cos, pi

def pulse(C=1, Nx=200, animate=True, version='vectorized', T=2,
          loc='center', pulse_tp='gaussian', slowness_factor=2,
          medium=[0.7, 0.9], every_frame=1):
    """
    Various peak-shaped initial conditions on [0,1].
    Wave velocity is decreased by the slowness_factor inside
    the medium specification. The loc parameter can be 'center'
    or 'left', depending on where the pulse is to be located.
    """
    L = 1.
    if loc == 'center':
        xc = L/2
    elif loc == 'left':
        xc = 0
    else:
        raise ValueError('Wrong loc="%s"' % loc)
    sigma = L/20.   # width measure of I(x)
    c_0 = 1.0       # wave velocity outside medium
    if pulse_tp in ('gaussian', 'Gaussian'):
        def I(x):
            return exp(-0.5*((x-xc)/sigma)**2)
    elif pulse_tp == 'plug':
        def I(x):
            return 0 if abs(x-xc) > sigma else 1
    elif pulse_tp == 'cosinehat':
        def I(x):
            # One period of a cosine
            w = 2
            a = w*sigma
            return 0.5*(1 + cos(pi*(x-xc)/a)) \
                   if xc - a <= x <= xc + a else 0
    elif pulse_tp == 'half-cosinehat':
        def I(x):
            # Half a period of a cosine
            w = 4
            a = w*sigma
            return cos(pi*(x-xc)/a) \
                   if xc - 0.5*a <= x <= xc + 0.5*a else 0
    else:
        raise ValueError('Wrong pulse_tp="%s"' % pulse_tp)

    def c(x):
        return c_0/slowness_factor \
               if medium[0] <= x <= medium[1] else c_0

    umin = -0.5; umax = 1.5*I(xc)
    casename = '%s_Nx%s_sf%s' % \
               (pulse_tp, Nx, slowness_factor)
    # PlotMediumAndSolution and solver are defined in wave1D_dn_vc.py
    action = PlotMediumAndSolution(
        medium, casename=casename, umin=umin, umax=umax,
        every_frame=every_frame, screen_movie=animate)
    solver(I=I, V=None, f=None, c=c, U_0=None, U_L=None,
           L=L, Nx=Nx, C=C, T=T,
           user_action=action, version=version,
           dt_safety_factor=1)

The PlotMediumAndSolution class used here is a subclass of PlotSolution where the medium, specified by the medium interval, is indicated in the plots.

Experimenting with the pulse function, as suggested in Exercise 8: Send pulse waves through a layered medium, Exercise 9: Explain why numerical noise occurs, and Exercise 10: Investigate harmonic averaging in a 1D model can easily be done in a little program that imports the wave1D_dn_vc module and calls pulse with appropriate parameters. However, sometimes it is handy to perform such calls directly on the command line. A useful function function_UI from the scitools.misc module takes a list of functions in your program, along with sys.argv, and returns the right call to a function based on the command-line arguments. That is, by writing

Terminal> python progname.py funcname \
          arg1 arg2 ... kwarg1=v1 kwarg2=v2

the function_UI function constructs the string

`funcname(arg1, arg2, ..., kwarg1=v1, kwarg2=v2)`

that you can send to eval to realize the call. For example, the code

import sys
from scitools.misc import function_UI
cmd = function_UI([pulse,], sys.argv)
eval(cmd)

combined with the command

Terminal> python wave1D_dn_vc.py pulse C=1 loc="'left'" \
          "medium=[0.5, 0.88]" slowness_factor=2 Nx=40 \
          pulse_tp="'cosinehat'" T=1 every_frame=10

makes eval(cmd) perform the call

pulse(C=1, loc='left', medium=[0.5, 0.88], slowness_factor=2,
      Nx=40, pulse_tp='cosinehat', T=1, every_frame=10)

Writing only the function name and no arguments on the command line makes function_UI figure out what the arguments are and write a help string. In short, scitools.misc.function_UI makes all your desired functions in a program callable from the command line with two statements. This is especially useful during program testing.
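The principle behind such a tool can be illustrated by a much simpler, hypothetical helper, make_call_string, that just glues the command-line words into a call string. It is not how scitools.misc.function_UI is implemented (function_UI does more, for instance writing a help string when no arguments are given), and the pulse function below is a stand-in that merely returns its arguments:

```python
def make_call_string(argv):
    """Hypothetical helper: ['prog.py', 'f', 'a', 'k=v'] -> "f(a, k=v)"."""
    funcname = argv[1]
    args = argv[2:]      # positional values and keyword=value pairs, as typed
    return '%s(%s)' % (funcname, ', '.join(args))

def pulse(C=1, loc='center', Nx=200):
    """Stand-in only; the real pulse function runs a simulation."""
    return C, loc, Nx

cmd = make_call_string(['prog.py', 'pulse', 'C=1', "loc='left'", 'Nx=40'])
print(cmd)        # pulse(C=1, loc='left', Nx=40)
print(eval(cmd))  # (1, 'left', 40)
```

In a real program one would pass sys.argv instead of the hand-written list. The shell removes the outer double quotes in loc="'left'", leaving loc='left', which is why string arguments on the command line above are written with two levels of quotes.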

Modify the program wave1D_dn.py to incorporate the ghost cell technique outlined in the section Alternative implementation via ghost cells. Add no, one, or two ghost cells according to the specified boundary conditions. Filename: wave1D_dn_ghost.py.

Consider the solution \( u(x,t) \) in Exercise 7: Prove symmetry of a 1D wave problem analytically that is symmetric around \( x=0 \). This means that we can simulate the wave process in only half of the domain, \( [0,L] \). What is the correct boundary condition to impose at \( x=0 \)? Filename: wave1D_symmetric.

Perform simulations of the complete wave problem from Exercise 7: Prove symmetry of a 1D wave problem analytically on \( [-L,L] \). Thereafter, utilize the symmetry of the solution and run a simulation in half of the domain \( [0,L] \), using the boundary condition at \( x=0 \) as derived in Exercise 5: Find a symmetry boundary condition. Compare the two solutions and make sure that they are the same. Filename: wave1D_symmetric.

Consider the simple "plug" wave where \( \Omega = [-L,L] \) and

$$ \begin{equation*} I(x) = \left\lbrace\begin{array}{ll} 1, & x\in [-\delta, \delta],\\ 0, & \hbox{otherwise} \end{array}\right. \end{equation*} $$ for some number \( 0 < \delta < L \). The boundary conditions can be set to \( u=0 \). The solution to this problem is symmetric around \( x=0 \). Prove this by setting up the complete initial-boundary value problem and showing that if \( u(x,t) \) is a solution, then also \( u(-x,t) \) is a solution. Filename: wave1D_symmetric.py.

Use the pulse function in wave1D_dn_vc.py to investigate sending a pulse, located with its peak at \( x=0 \), through the medium to the right where it hits another medium for \( x\in [0.7,0.9] \) where the wave velocity is decreased by a factor \( s_f \). Report what happens with a Gaussian pulse, a "cosinehat" pulse, a "half-cosinehat" pulse, and a plug pulse for resolutions \( N_x=40,80,160 \), and \( s_f=2,4 \). Make a reference solution in the homogeneous case too with \( s_f=1 \) and \( N_x=40 \). Use \( C=1 \) in the medium outside \( [0.7,0.9] \). Simulate until \( T=2 \). Filename: pulse1D.py.

The experiments performed in Exercise 8: Send pulse waves through a layered medium show considerable numerical noise in the form of non-physical waves, especially for \( s_f=4 \) and the plug pulse or the "half-cosinehat" pulse. The noise is much less visible for a Gaussian pulse. Run the case with the plug and "half-cosinehat" pulses for \( s_f=1 \), \( C=0.9, 0.25 \), and \( N_x=40,80,160 \). Use the numerical dispersion relation to explain the observations. Filename: pulse1D_analysis.pdf.

Will harmonic averaging of the wave velocity give better numerical results for the case \( s_f=4 \) in Exercise 8: Send pulse waves through a layered medium? Filenames: pulse1D_harmonic.pdf, pulse1D_harmonic.py.

A natural next step is to consider extensions of the methods for various variants of the one-dimensional wave equation to two-dimensional (2D) and three-dimensional (3D) versions of the wave equation.

The general wave equation in \( d \) space dimensions, with constant wave velocity \( c \), can be written in the compact form

$$ \begin{equation} \frac{\partial^2 u}{\partial t^2} = c^2\nabla^2 u\hbox{ for }\xpoint\in\Omega\subset\Real^d,\ t\in (0,T] \thinspace . \label{wave:2D3D:model1} \end{equation} $$ In a 2D problem, \( d=2 \), and

$$ \begin{equation*} \nabla^2 u = \frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2} ,\end{equation*} $$ while in three space dimensions, \( d=3 \), and

$$ \begin{equation*} \nabla^2 u = \frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2} + \frac{\partial^2 u}{\partial z^2} \thinspace . \end{equation*} $$
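As a small worked example of the 2D operator, a standing wave can be checked by direct differentiation. The wave numbers \( k_x \), \( k_y \) and the frequency \( \omega \) are introduced only for this illustration, and we take \( f=0 \):

$$ \begin{align*} u(x,y,t) &= \sin(k_x x)\sin(k_y y)\cos(\omega t),\\ \frac{\partial^2 u}{\partial t^2} &= -\omega^2 u,\qquad \nabla^2 u = -(k_x^2 + k_y^2)\,u \thinspace . \end{align*} $$ Hence \( \partial^2 u/\partial t^2 = c^2\nabla^2 u \) holds exactly when \( \omega = c\sqrt{k_x^2 + k_y^2} \).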

Many applications involve variable coefficients, and the general wave equation in \( d \) dimensions is in this case written as

$$ \begin{equation} \varrho\frac{\partial^2 u}{\partial t^2} = \nabla\cdot (q\nabla u) + f\hbox{ for }\xpoint\in\Omega\subset\Real^d,\ t\in (0,T], \label{wave:2D3D:model2} \end{equation} $$ which in 2D becomes

$$ \begin{equation} \varrho(x,y) \frac{\partial^2 u}{\partial t^2} = \frac{\partial}{\partial x}\left( q(x,y) \frac{\partial u}{\partial x}\right) + \frac{\partial}{\partial y}\left( q(x,y) \frac{\partial u}{\partial y}\right) + f(x,y,t) \thinspace . \end{equation} $$ To save some writing and space we may use the index notation, where subscript \( t \), \( x \), \( y \), or \( z \) means differentiation with respect to that coordinate. For example,

$$ \begin{align*} \frac{\partial^2 u}{\partial t^2} &= u_{tt},\\ \frac{\partial}{\partial y}\left( q(x,y) \frac{\partial u}{\partial y}\right) &= (q u_y)_y \thinspace . \end{align*} $$ With the aid of this index notation, the 3D versions of the two model PDEs, without and with variable coefficients, can now be stated as

$$ \begin{align} u_{tt} &= c^2(u_{xx} + u_{yy} + u_{zz}) + f, \label{wave:2D3D:model1:v2}\\ \varrho u_{tt} &= (q u_x)_x + (q u_y)_y + (q u_z)_z + f \label{wave:2D3D:model2:v2} \thinspace . \end{align} $$

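At each point of the boundary \( \partial\Omega \) of \( \Omega \) we need one boundary condition involving the unknown \( u \). The boundary conditions are of three principal types:

1. \( u \) is prescribed (\( u=0 \) or a known time variation, e.g., for an incoming wave),
2. \( \partial u/\partial n = \boldsymbol{n}\cdot\nabla u \) is prescribed (zero for reflecting boundaries),
3. an open boundary condition (also called a radiation condition) is specified to let waves travel out of the domain without being reflected.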

We introduce a mesh in time and in space. The mesh in time consists of time points \( t_0=0 < t_1 <\cdots < t_N \), often with a constant spacing \( \Delta t= t_{n+1}-t_{n} \), \( n=0,\ldots,N-1 \).

When using finite difference approximations, the domain shape in space is normally simple. We assume that \( \Omega \) is a \( d \)-dimensional box. Mesh points are introduced separately in the various space directions: \( x_0 < x_1 <\cdots < x_{N_x} \) in the \( x \) direction, \( y_0 < y_1 <\cdots < y_{N_y} \) in the \( y \) direction, and \( z_0 < z_1 <\cdots < z_{N_z} \) in the \( z \) direction. It is very common to use constant mesh spacings, \( \Delta x = x_{i+1}-x_{i} \), \( i=0,\ldots,N_x-1 \), \( \Delta y = y_{j+1}-y_{j} \), \( j=0,\ldots,N_y-1 \), and \( \Delta z = z_{k+1}-z_{k} \), \( k=0,\ldots,N_z-1 \), but often with \( \Delta x\neq \Delta y\neq \Delta z \). In case the mesh spacings are equal in all spatial directions, one often introduces the symbol \( h \): \( h = \Delta x = \Delta y =\Delta z \).

The unknown \( u \) at mesh point \( (x_i,y_j,z_k,t_n) \) is denoted \( u^{n}_{i,j,k} \). In 2D problems we just skip the \( z \) coordinate (by assuming no variation in that direction: \( \partial/\partial z=0 \)) and write \( u^n_{i,j} \).
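A minimal NumPy sketch of such a 2D mesh follows, with hypothetical values for \( L_x \), \( L_y \), \( N_x \), and \( N_y \). The choice `indexing='ij'` in `np.meshgrid` makes the array index `[i,j]` coincide with the mesh index in \( u^n_{i,j} \). With the default `'xy'` ordering the two indices would be swapped.

```python
import numpy as np

# Hypothetical example values for the box [0, Lx] x [0, Ly]
Lx, Ly = 2.0, 1.0
Nx, Ny = 40, 20

x = np.linspace(0, Lx, Nx+1)   # x_0 < x_1 < ... < x_{Nx}
y = np.linspace(0, Ly, Ny+1)   # y_0 < y_1 < ... < y_{Ny}
dx = x[1] - x[0]               # constant spacing Delta x
dy = y[1] - y[0]               # constant spacing Delta y

# indexing='ij' gives xv[i,j] == x[i] and yv[i,j] == y[j]
xv, yv = np.meshgrid(x, y, indexing='ij')

# u^n_{i,j} is stored as u_n[i,j]; here filled with a sample field
u_n = np.zeros((Nx+1, Ny+1))
u_n[:, :] = np.sin(np.pi*xv/Lx)*np.sin(np.pi*yv/Ly)
```

Here \( \Delta x = \Delta y = 0.05 \), so this particular mesh has a common spacing \( h \).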

Two- and three-dimensional wave equations are easily discretized by assembling building blocks for discretization of 1D wave equations, because the multi-dimensional versions just contain terms of the same type that occur in 1D.

For example, \eqref{wave:2D3D:model1:v2} can be discretized as

$$ \begin{equation} [D_tD_t u = c^2(D_xD_x u + D_yD_yu + D_zD_z u) + f]^n_{i,j,k} \thinspace . \end{equation} $$ A 2D version might be instructive to write out in detail:

$$ [D_tD_t u = c^2(D_xD_x u + D_yD_yu) + f]^n_{i,j}, $$ which becomes

$$ \frac{u^{n+1}_{i,j} - 2u^{n}_{i,j} + u^{n-1}_{i,j}}{\Delta t^2} = c^2 \frac{u^{n}_{i+1,j} - 2u^{n}_{i,j} + u^{n}_{i-1,j}}{\Delta x^2} + c^2 \frac{u^{n}_{i,j+1} - 2u^{n}_{i,j} + u^{n}_{i,j-1}}{\Delta y^2} + f^n_{i,j} \thinspace . $$ Assuming as usual that all values at the time levels \( n \) and \( n-1 \) are known, we can solve for the only unknown \( u^{n+1}_{i,j} \).
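The scheme translates directly into a double loop over the interior points. Below is a minimal scalar sketch. It assumes that the arrays `u`, `u_1`, `u_2` hold \( u^{n+1} \), \( u^n \), \( u^{n-1} \), and that the equation has been multiplied by \( \Delta t^2 \) with `Cx2` \( =(c\Delta t/\Delta x)^2 \), `Cy2` \( =(c\Delta t/\Delta y)^2 \), and `dt2` \( =\Delta t^2 \), as in the solver function discussed later. The name `advance_interior` is hypothetical.

```python
import numpy as np

def advance_interior(u, u_1, u_2, f_n, Cx2, Cy2, dt2):
    """Fill u (level n+1) at interior points from u_1 (level n) and
    u_2 (level n-1). Boundary entries of u are left untouched."""
    Nx = u.shape[0] - 1
    Ny = u.shape[1] - 1
    for i in range(1, Nx):
        for j in range(1, Ny):
            u[i,j] = (- u_2[i,j] + 2*u_1[i,j]
                      + Cx2*(u_1[i+1,j] - 2*u_1[i,j] + u_1[i-1,j])
                      + Cy2*(u_1[i,j+1] - 2*u_1[i,j] + u_1[i,j-1])
                      + dt2*f_n[i,j])
    return u
```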

As in the 1D case, we need to develop a special formula for \( u^1_{i,j} \) where we combine the general scheme for \( u^{n+1}_{i,j} \), when \( n=0 \), with the discretization of the initial condition:

$$ [D_{2t}u = V]^0_{i,j}\quad\Rightarrow\quad u^{-1}_{i,j} = u^1_{i,j} - 2\Delta t V_{i,j} \thinspace . $$
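The elimination can be spelled out in operator notation. Setting \( n=0 \) in the 2D scheme and solving for \( u^1_{i,j} \) gives the first line below. Inserting \( u^{-1}_{i,j} = u^1_{i,j} - 2\Delta t V_{i,j} \) gives the second line, and dividing by 2 gives the third:

$$ \begin{align*} u^{1}_{i,j} &= -u^{-1}_{i,j} + 2u^{0}_{i,j} + \Delta t^2\left[c^2(D_xD_x u + D_yD_y u) + f\right]^0_{i,j},\\ 2u^{1}_{i,j} &= 2u^{0}_{i,j} + 2\Delta t V_{i,j} + \Delta t^2\left[c^2(D_xD_x u + D_yD_y u) + f\right]^0_{i,j},\\ u^{1}_{i,j} &= u^{0}_{i,j} + \Delta t V_{i,j} + \frac{1}{2}\Delta t^2\left[c^2(D_xD_x u + D_yD_y u) + f\right]^0_{i,j} \thinspace . \end{align*} $$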

The PDE \eqref{wave:2D3D:model2:v2} with variable coefficients is discretized term by term using the corresponding elements from the 1D case:

$$ \begin{equation} [\varrho D_tD_t u = (D_x\overline{q}^x D_x u + D_y\overline{q}^y D_yu + D_z\overline{q}^z D_z u) + f]^n_{i,j,k} \thinspace . \end{equation} $$ When written out and solved for the unknown \( u^{n+1}_{i,j,k} \), one gets the scheme

$$ \begin{align*} u^{n+1}_{i,j,k} &= - u^{n-1}_{i,j,k} + 2u^{n}_{i,j,k} \\ &\quad + \frac{\Delta t^2}{\varrho_{i,j,k}}\frac{1}{\Delta x^2} \Big( \frac{1}{2}(q_{i,j,k} + q_{i+1,j,k})(u^{n}_{i+1,j,k} - u^{n}_{i,j,k}) \\ &\qquad\quad - \frac{1}{2}(q_{i-1,j,k} + q_{i,j,k})(u^{n}_{i,j,k} - u^{n}_{i-1,j,k})\Big) \\ &\quad + \frac{\Delta t^2}{\varrho_{i,j,k}}\frac{1}{\Delta y^2} \Big( \frac{1}{2}(q_{i,j,k} + q_{i,j+1,k})(u^{n}_{i,j+1,k} - u^{n}_{i,j,k}) \\ &\qquad\quad - \frac{1}{2}(q_{i,j-1,k} + q_{i,j,k})(u^{n}_{i,j,k} - u^{n}_{i,j-1,k})\Big) \\ &\quad + \frac{\Delta t^2}{\varrho_{i,j,k}}\frac{1}{\Delta z^2} \Big( \frac{1}{2}(q_{i,j,k} + q_{i,j,k+1})(u^{n}_{i,j,k+1} - u^{n}_{i,j,k}) \\ &\qquad\quad - \frac{1}{2}(q_{i,j,k-1} + q_{i,j,k})(u^{n}_{i,j,k} - u^{n}_{i,j,k-1})\Big) \\ &\quad + \frac{\Delta t^2}{\varrho_{i,j,k}} f^n_{i,j,k} \thinspace . \end{align*} $$
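This scheme can be written compactly with NumPy slices by looping over the three directions, since each direction contributes a term of the same form. The sketch below is one possible vectorized formulation under stated assumptions. The helper names `_interior` and `advance_variable_3d` are hypothetical, \( q \) is averaged arithmetically at the half-points as in \( \overline{q}^x \), and boundary values are simply left at zero.

```python
import numpy as np

def _interior(axis, shift, ndim=3):
    """Slices picking the interior points shifted by `shift` (-1, 0, +1)
    along `axis`, e.g. the (i+1,j,k) values for axis=0, shift=+1."""
    s = [slice(1, -1)]*ndim
    s[axis] = slice(1 + shift, (-1 + shift) or None)
    return tuple(s)

def advance_variable_3d(u_1, u_2, q, rho, f_n, dt, dx, dy, dz):
    """Return u^{n+1} for rho*u_tt = (q u_x)_x + (q u_y)_y + (q u_z)_z + f.
    Only interior points are computed; boundary entries stay 0."""
    u = np.zeros_like(u_1)
    ctr = _interior(0, 0)                    # (i,j,k)
    flux_sum = np.zeros_like(u_1[ctr])
    for axis, h in enumerate((dx, dy, dz)):
        p = _interior(axis, +1)              # e.g. (i+1,j,k)
        m = _interior(axis, -1)              # e.g. (i-1,j,k)
        q_plus = 0.5*(q[ctr] + q[p])         # arithmetic mean at i+1/2
        q_minus = 0.5*(q[m] + q[ctr])        # arithmetic mean at i-1/2
        flux_sum += (q_plus*(u_1[p] - u_1[ctr])
                     - q_minus*(u_1[ctr] - u_1[m]))/h**2
    u[ctr] = -u_2[ctr] + 2*u_1[ctr] + dt**2/rho[ctr]*(flux_sum + f_n[ctr])
    return u
```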

Also here we need to develop a special formula for \( u^1_{i,j,k} \) by combining the scheme for \( n=0 \) with the discrete initial condition \( u^{-1}_{i,j,k}=u^1_{i,j,k} - 2\Delta tV_{i,j,k} \).

The schemes listed above are valid for the internal points in the mesh. After updating these, we need to visit all the mesh points at the boundaries and set the prescribed \( u \) value.
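For \( u=0 \), or another constant, on all sides of a box, the boundary visit can be written with NumPy index tuples that work in any number of dimensions. The helper name `set_dirichlet_boundary` is hypothetical, and a constant prescribed value is assumed to keep the sketch short.

```python
import numpy as np

def set_dirichlet_boundary(u, value=0.0):
    """Assign `value` to every boundary point of a d-dimensional mesh
    array, i.e., to the first and last index along each axis."""
    for axis in range(u.ndim):
        first = [slice(None)]*u.ndim
        last = [slice(None)]*u.ndim
        first[axis] = 0      # e.g. i = 0
        last[axis] = -1      # e.g. i = N_x
        u[tuple(first)] = value
        u[tuple(last)] = value
    return u
```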

The condition \( \partial u/\partial n = 0 \) was implemented in 1D by discretizing it with a \( D_{2x}u \) centered difference, and thereafter eliminating the fictitious \( u \) point outside the mesh by using the general scheme at the boundary point. Exactly the same idea is reused in multiple dimensions. Consider \( \partial u/\partial n = 0 \) at a boundary \( y=0 \). The outward normal then points in the \( -y \) direction, so $$ \frac{\partial u}{\partial n} = -\frac{\partial u}{\partial y},$$ and we set

$$ [-D_{2y} u = 0]^n_{i,0}\quad\Rightarrow\quad \frac{u^n_{i,1}-u^n_{i,-1}}{2\Delta y} = 0 \thinspace . $$ From this it follows that \( u^n_{i,-1}=u^n_{i,1} \). The discretized PDE at the boundary point \( (i,0) \) reads

$$ \frac{u^{n+1}_{i,0} - 2u^{n}_{i,0} + u^{n-1}_{i,0}}{\Delta t^2} = c^2 \frac{u^{n}_{i+1,0} - 2u^{n}_{i,0} + u^{n}_{i-1,0}}{\Delta x^2} + c^2 \frac{u^{n}_{i,1} - 2u^{n}_{i,0} + u^{n}_{i,-1}}{\Delta y^2} + f^n_{i,0} \thinspace . $$ We can then just insert \( u^n_{i,1} \) for \( u^n_{i,-1} \) in this equation and solve for the boundary value \( u^{n+1}_{i,0} \).

From these calculations, we see a pattern: the general scheme applies at the boundary \( j=0 \) too if we just replace \( j-1 \) by \( j+1 \). Such a pattern is particularly useful for implementations.
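A scalar sketch of this pattern for the row \( j=0 \) of a 2D array follows. The function name `update_neumann_y0` and its argument list are hypothetical, with `Cx2`, `Cy2`, `dt2` as in the general scheme multiplied by \( \Delta t^2 \). The corner points \( i=0 \) and \( i=N_x \) are not updated here, since they depend on the conditions at the \( x \) boundaries.

```python
import numpy as np

def update_neumann_y0(u, u_1, u_2, f_n, Cx2, Cy2, dt2):
    """Update u^{n+1} on the boundary row j=0, where du/dn = 0, for
    interior i: the general scheme with j-1 replaced by j+1."""
    Nx = u.shape[0] - 1
    j = 0
    jm = j + 1          # u^n_{i,-1} = u^n_{i,1}, so j-1 -> j+1
    for i in range(1, Nx):
        u[i,j] = (- u_2[i,j] + 2*u_1[i,j]
                  + Cx2*(u_1[i+1,j] - 2*u_1[i,j] + u_1[i-1,j])
                  + Cy2*(u_1[i,j+1] - 2*u_1[i,j] + u_1[i,jm])
                  + dt2*f_n[i,j])
    return u
```

This matches what one gets by adding a ghost row \( j=-1 \) with \( u^n_{i,-1}=u^n_{i,1} \) and applying the unmodified scheme at \( j=0 \).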

We shall now describe in detail various Python implementations for solving a standard 2D, linear wave equation with constant wave velocity and \( u=0 \) on the boundary. The wave equation is to be solved in the space-time domain \( \Omega\times (0,T] \), where \( \Omega = [0,L_x]\times [0,L_y] \) is a rectangular spatial domain. More precisely, the complete initial-boundary value problem is defined by

$$ \begin{align} u_{tt} &= c^2(u_{xx} + u_{yy}) + f(x,y,t),\quad (x,y)\in \Omega,\ t\in (0,T],\\ u(x,y,0) &= I(x,y),\quad (x,y)\in\Omega,\\ u_t(x,y,0) &= V(x,y),\quad (x,y)\in\Omega,\\ u &= 0,\quad (x,y)\in\partial\Omega,\ t\in (0,T], \end{align} $$ where \( \partial\Omega \) is the boundary of \( \Omega \), in this case the four sides of the rectangle \( [0,L_x]\times [0,L_y] \): \( x=0 \), \( x=L_x \), \( y=0 \), and \( y=L_y \).

The PDE is discretized as $$ [D_t D_t u = c^2(D_xD_x u + D_yD_y u) + f]^n_{i,j}, $$ which leads to an explicit updating formula to be implemented in a program:

$$ \begin{equation} u^{n+1}_{i,j} = -u^{n-1}_{i,j} + 2u^n_{i,j} + C_x^2( u^{n}_{i+1,j} - 2u^{n}_{i,j} + u^{n}_{i-1,j}) + C_y^2 (u^{n}_{i,j+1} - 2u^{n}_{i,j} + u^{n}_{i,j-1}) + \Delta t^2 f_{i,j}^n, \label{wave:2D3D:impl1:2Du0:ueq:discrete} \end{equation} $$ for all interior mesh points \( i=1,\ldots,N_x-1 \) and \( j=1,\ldots,N_y-1 \), and for \( n=1,\ldots, N-1 \). The constants \( C_x \) and \( C_y \) are defined as $$ C_x = c\frac{\Delta t}{\Delta x},\quad C_y = c\frac{\Delta t}{\Delta y} \thinspace . $$
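The stability limit that the solver applies when it is given \( \Delta t\leq 0 \), \( \Delta t \leq (1/c)(1/\Delta x^2 + 1/\Delta y^2)^{-1/2} \), can be expressed through these constants. Squaring it gives \( c^2\Delta t^2(1/\Delta x^2 + 1/\Delta y^2)\leq 1 \), which is \( C_x^2 + C_y^2 \leq 1 \). For \( \Delta x=\Delta y=h \), the limit is \( \Delta t\leq h/(c\sqrt{2}) \). The small functions below are hypothetical helpers that just check this arithmetic numerically.

```python
from math import sqrt

def stability_limit(c, dx, dy):
    """Limit used by the solver: (1/c)*(1/sqrt(1/dx**2 + 1/dy**2))."""
    return (1/float(c))*(1/sqrt(1/dx**2 + 1/dy**2))

def courant_sum(c, dt, dx, dy):
    """C_x^2 + C_y^2 with C_x = c*dt/dx and C_y = c*dt/dy."""
    return (c*dt/dx)**2 + (c*dt/dy)**2

c, dx, dy = 1.0, 0.1, 0.05
dt_max = stability_limit(c, dx, dy)
# At the limit C_x^2 + C_y^2 equals 1 (up to rounding); a safety
# factor of 0.9 on dt scales the sum by 0.81
print(dt_max, courant_sum(c, dt_max, dx, dy))
print(courant_sum(c, 0.9*dt_max, dx, dy))
```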

At the boundary we simply set \( u^{n+1}_{i,j}=0 \) for \( i=0 \), \( j=0,\ldots,N_y \); \( i=N_x \), \( j=0,\ldots,N_y \); \( j=0 \), \( i=0,\ldots,N_x \); and \( j=N_y \), \( i=0,\ldots,N_x \). For the first step, \( n=0 \), \eqref{wave:2D3D:impl1:2Du0:ueq:discrete} is combined with the discretization of the initial condition \( u_t=V \), \( [D_{2t} u = V]^0_{i,j} \), to obtain a special formula for \( u^1_{i,j} \) at the interior mesh points:

$$ \begin{equation} u^{1}_{i,j} = u^0_{i,j} + \Delta t V_{i,j} + \frac{1}{2}C_x^2( u^{0}_{i+1,j} - 2u^{0}_{i,j} + u^{0}_{i-1,j}) + \frac{1}{2}C_y^2 (u^{0}_{i,j+1} - 2u^{0}_{i,j} + u^{0}_{i,j-1}) + \frac{1}{2}\Delta t^2f_{i,j}^0, \label{wave:2D3D:impl1:2Du0:ueq:discrete} \end{equation} $$
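Below is a vectorized sketch of this first step for the interior points, written with NumPy slices (vectorization is treated in detail later). `first_step` is a hypothetical helper name. `u_0`, `V`, and `f_0` are assumed to be arrays of mesh values at \( t=0 \), and the boundary is set to \( u=0 \).

```python
import numpy as np

def first_step(u_0, V, f_0, Cx2, Cy2, dt):
    """Return u^1 from the special first-step formula at interior
    points, with u = 0 on the boundary."""
    u = np.zeros_like(u_0)
    c0 = u_0[1:-1, 1:-1]                  # u^0_{i,j} at interior points
    u[1:-1, 1:-1] = (c0 + dt*V[1:-1, 1:-1]
        + 0.5*Cx2*(u_0[2:, 1:-1] - 2*c0 + u_0[:-2, 1:-1])
        + 0.5*Cy2*(u_0[1:-1, 2:] - 2*c0 + u_0[1:-1, :-2])
        + 0.5*dt**2*f_0[1:-1, 1:-1])
    return u
```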

The solver function for a 2D case with constant wave velocity and \( u=0 \) as boundary condition follows the setup from the similar function for the 1D case in wave1D_u0_s.py, but there are a few necessary extensions. The code is in the program wave2D_u0.py.

The spatial domain is now \( [0,L_x]\times [0,L_y] \), specified by the arguments Lx and Ly. Similarly, the numbers of mesh intervals in the \( x \) and \( y \) directions, \( N_x \) and \( N_y \) (giving \( N_x+1 \) and \( N_y+1 \) mesh points), are given by the arguments Nx and Ny. In multi-dimensional problems it makes less sense to specify a single Courant number, since the mesh spacings may differ in the various spatial directions, and each direction then has its own Courant number (\( C_x \) and \( C_y \) as defined above). We therefore give \( \Delta t \) explicitly. The signature of the solver function is then

def solver(I, V, f, c, Lx, Ly, Nx, Ny, dt, T,
           user_action=None, version='scalar',
           dt_safety_factor=1):

Key parameters used in the calculations are created as

from numpy import linspace, zeros, sqrt

x = linspace(0, Lx, Nx+1)   # mesh points in x dir
y = linspace(0, Ly, Ny+1)   # mesh points in y dir
dx = x[1] - x[0]
dy = y[1] - y[0]
N = int(round(T/float(dt)))   # dt must be positive here (see the dt <= 0 case below)
t = linspace(0, N*dt, N+1)   # mesh points in time
Cx2 = (c*dt/dx)**2;  Cy2 = (c*dt/dy)**2   # help variables
dt2 = dt**2

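Specifying a nonpositive dt parameter (dt <= 0) makes us use the stability limit, multiplied by the safety factor dt_safety_factor. Since N, t, Cx2, Cy2, and dt2 all depend on dt, this adjustment must be carried out before those quantities are computed:

if dt <= 0:
    # stability limit for the 2D scheme, scaled by a safety factor
    stability_limit = (1/float(c))*(1/sqrt(1/dx**2 + 1/dy**2))
    dt = dt_safety_factor*stability_limit

The solution at three consecutive time levels is stored in the arrays

u   = zeros((Nx+1,Ny+1))   # solution array
u_1 = zeros((Nx+1,Ny+1))   # solution at t-dt
u_2 = zeros((Nx+1,Ny+1))   # solution at t-2*dt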

where \( u^{n+1}_{i,j} \) corresponds to u[i,j], \( u^{n}_{i,j} \) to u_1[i,j], and \( u^{n-1}_{i,j} \) to u_2[i,j].
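The sketch below shows how the three arrays cooperate over a full simulation. It is a compact vectorized program, not the code in wave2D_u0.py. After each step the references are rotated with `u_2, u_1, u = u_1, u, u_2`, so no array data are copied. The function names `solve_wave2D` and `quadratic_error` are hypothetical, and I, V, and f are assumed to accept NumPy arrays. The check uses \( u_e = x(L_x-x)y(L_y-y)(1+t/2) \) with \( f = 2c^2(1+t/2)(y(L_y-y) + x(L_x-x)) \), so that \( u_e \) solves the PDE. Since \( u_e \) is quadratic in \( x \) and \( y \) and linear in \( t \), the scheme should reproduce it up to rounding errors.

```python
import numpy as np

def solve_wave2D(I, V, f, c, Lx, Ly, Nx, Ny, dt, T):
    """Sketch: u_tt = c^2(u_xx + u_yy) + f on [0,Lx]x[0,Ly], u = 0 on
    the boundary. Returns x, y, t and u at the last time level."""
    x = np.linspace(0, Lx, Nx+1)
    y = np.linspace(0, Ly, Ny+1)
    dx, dy = x[1] - x[0], y[1] - y[0]
    N = int(round(T/float(dt)))
    t = np.linspace(0, N*dt, N+1)
    Cx2, Cy2, dt2 = (c*dt/dx)**2, (c*dt/dy)**2, dt**2
    xv, yv = np.meshgrid(x, y, indexing='ij')   # xv[i,j]=x[i], yv[i,j]=y[j]
    inner = (slice(1, -1), slice(1, -1))         # interior points

    def on_mesh(func, *args):
        # evaluate func on the mesh; a scalar result is broadcast
        return np.zeros((Nx+1, Ny+1)) + func(xv, yv, *args)

    def stencil(w):
        # Cx2*(w[i+1,j]-2w[i,j]+w[i-1,j]) + Cy2*(w[i,j+1]-2w[i,j]+w[i,j-1])
        wc = w[1:-1, 1:-1]
        return (Cx2*(w[2:, 1:-1] - 2*wc + w[:-2, 1:-1]) +
                Cy2*(w[1:-1, 2:] - 2*wc + w[1:-1, :-2]))

    u = np.zeros((Nx+1, Ny+1))     # u^{n+1}
    u_1 = on_mesh(I)               # u^n, starting with u^0 = I
    u_2 = np.zeros((Nx+1, Ny+1))   # u^{n-1}
    if N == 0:
        return x, y, t, u_1

    # Special formula for the first step, n = 0
    u[inner] = (u_1[inner] + dt*on_mesh(V)[inner] + 0.5*stencil(u_1)
                + 0.5*dt2*on_mesh(f, t[0])[inner])
    u[0, :] = u[-1, :] = u[:, 0] = u[:, -1] = 0   # u = 0 on the boundary
    u_2, u_1, u = u_1, u, u_2                     # rotate references

    for n in range(1, N):
        u[inner] = (-u_2[inner] + 2*u_1[inner] + stencil(u_1)
                    + dt2*on_mesh(f, t[n])[inner])
        u[0, :] = u[-1, :] = u[:, 0] = u[:, -1] = 0
        u_2, u_1, u = u_1, u, u_2

    return x, y, t, u_1    # after the last rotation, u_1 holds u^N


def quadratic_error(Lx=5.0, Ly=2.0, c=1.5, Nx=10, Ny=8, dt=0.1, T=2.0):
    """Max error for the exact quadratic solution u_e."""
    u_e = lambda x, y, t: x*(Lx - x)*y*(Ly - y)*(1 + 0.5*t)
    I = lambda x, y: u_e(x, y, 0)
    V = lambda x, y: 0.5*x*(Lx - x)*y*(Ly - y)
    f = lambda x, y, t: 2*c**2*(1 + 0.5*t)*(y*(Ly - y) + x*(Lx - x))
    x, y, t, u = solve_wave2D(I, V, f, c, Lx, Ly, Nx, Ny, dt, T)
    xv, yv = np.meshgrid(x, y, indexing='ij')
    return np.abs(u - u_e(xv, yv, t[-1])).max()
```

Rotating references instead of copying (e.g., `u_2[:] = u_1`) keeps each time step free of extra array copies.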

Inserting the initial condition I in u_1 and making a callback to the user in terms of the user_action function should be a straightforward generalization of the 1D code:

for i in range(0, Nx+1):
    for j in range(0, Ny+1):
        u_1[i,j] = I(x[i], y[j])   # initial condition u^0_{i,j}

if user_action is not None:
    # let the user process the initial condition (time level n=0)
    user_action(u_1, x, xv, y, yv, t, 0)

The user_action function has more arguments which will be commented upon in the section on vectorization.
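As an illustration of the callback interface, here is a hypothetical user_action that records the maximum absolute value of \( u \) at each time level. The argument list mirrors the call above; x, xv, y, and yv are simply not used. The list `max_amplitude` is also a hypothetical name.

```python
import numpy as np

max_amplitude = []   # hypothetical container filled by the callback

def record_max(u, x, xv, y, yv, t, n):
    """Hypothetical user_action: store (t_n, max |u^n_{i,j}|)."""
    max_amplitude.append((t[n], np.abs(u).max()))
```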

The key finite difference formula for updating the solution at a time level is implemented as

for i in range(1, Nx):
    for j in range(1, Ny):