Note: PRELIMINARY VERSION (expect typos!)

Finite difference methods

A basic model for exponential decay

The Forward Euler scheme

Step 1: Discretizing the domain

Step 2: Fulfilling the equation at discrete time points

Step 3: Replacing derivatives by finite differences

Step 4: Formulating a recursive algorithm

The Backward Euler scheme

The Crank-Nicolson scheme

The unifying \( \theta \)-rule

Constant time step

Compact operator notation for finite differences

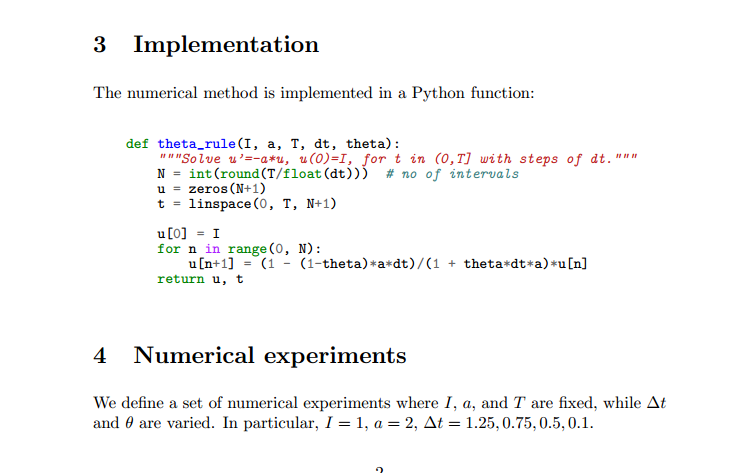

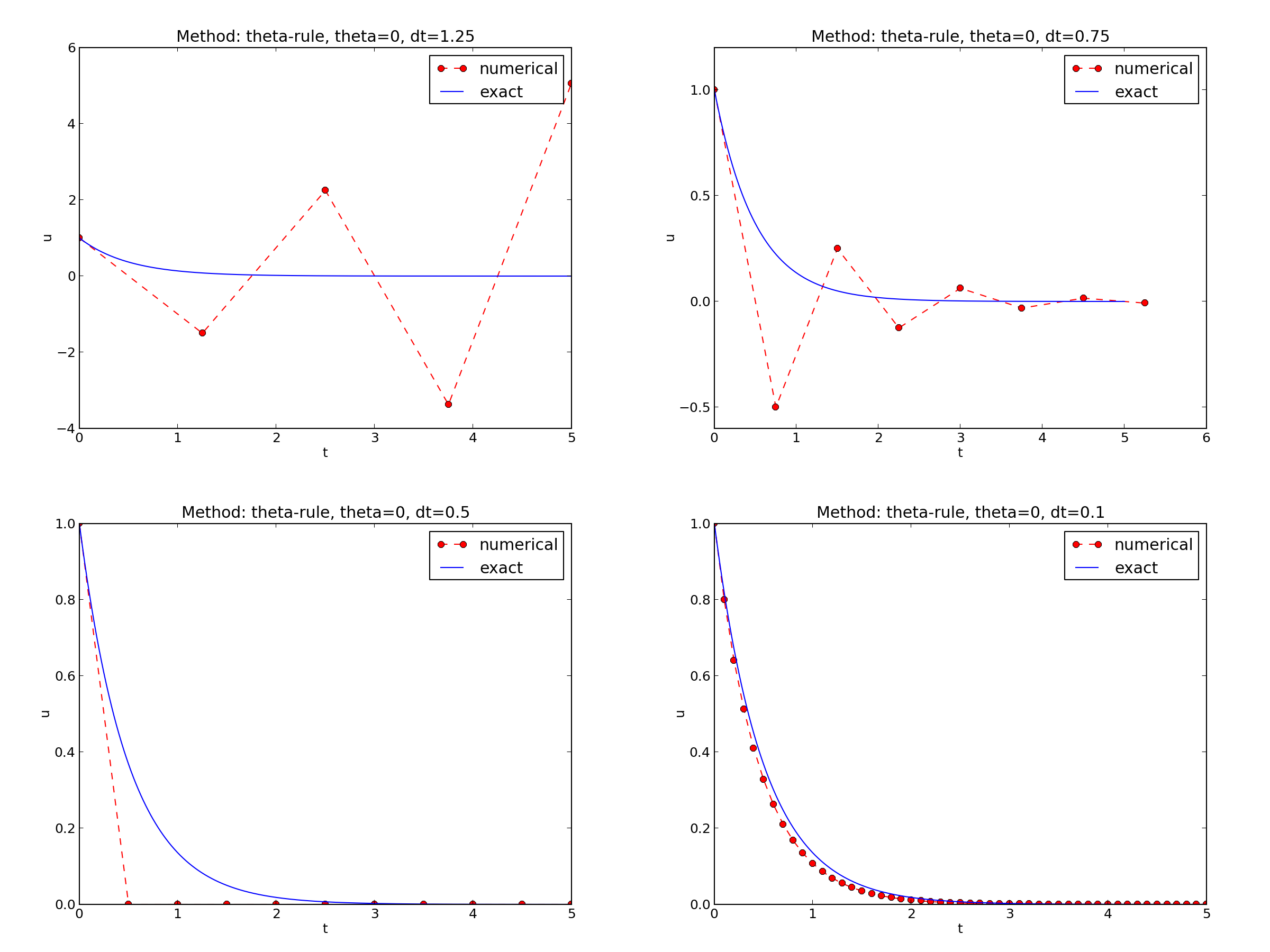

Implementation

Making a solver function

Function for computing the numerical solution

Integer division

Doc strings

Formatting of numbers

Running the program

Verifying the implementation

Running a few algorithmic steps by hand

Comparison with an exact discrete solution

Computing the numerical error

Plotting solutions

Plotting with SciTools

Creating user interfaces

Reading a sequence of command-line arguments

Working with an argument parser

Computing convergence rates

Estimating \( r \)

Implementation

Debugging via convergence rates

Memory-saving implementation

Software engineering

Making a module

Prefixing imported functions by the module name

Doctests

Unit testing with nose

Basic use of nose

Demonstrating nose

Installation of nose

Using nose to test modules with doctests

Classical unit testing with unittest

Basic use of unittest

Demonstration of unittest

Implementing simple problem and solver classes

The problem class

The solver class

The visualizer class

Combing the objects

Implementing more advanced problem and solver classes

A generic class for parameters

The problem class

The solver class

The visualizer class

Performing scientific experiments

Interpreting output from other programs

Making a report

Plain HTML

HTML with MathJax

LaTeX

Sphinx

Markdown

Wiki formats

Doconce

Worked example

Publishing a complete project

Exercises

Exercise 1: Experiment with integer division

Exercise 2: Experiment with wrong computations

Exercise 3: Implement specialized functions

Exercise 4: Plot the error function

Exercise 5: Compare methods for a give time mesh

Exercise 6: Change formatting of numbers and debug

Exercise 7: Write a doctest

Exercise 8: Write a nose test

Exercise 9: Make a module

Exercise 10: Make use of a class implementation

Analysis of the \( \theta \)-rule for a decay ODE

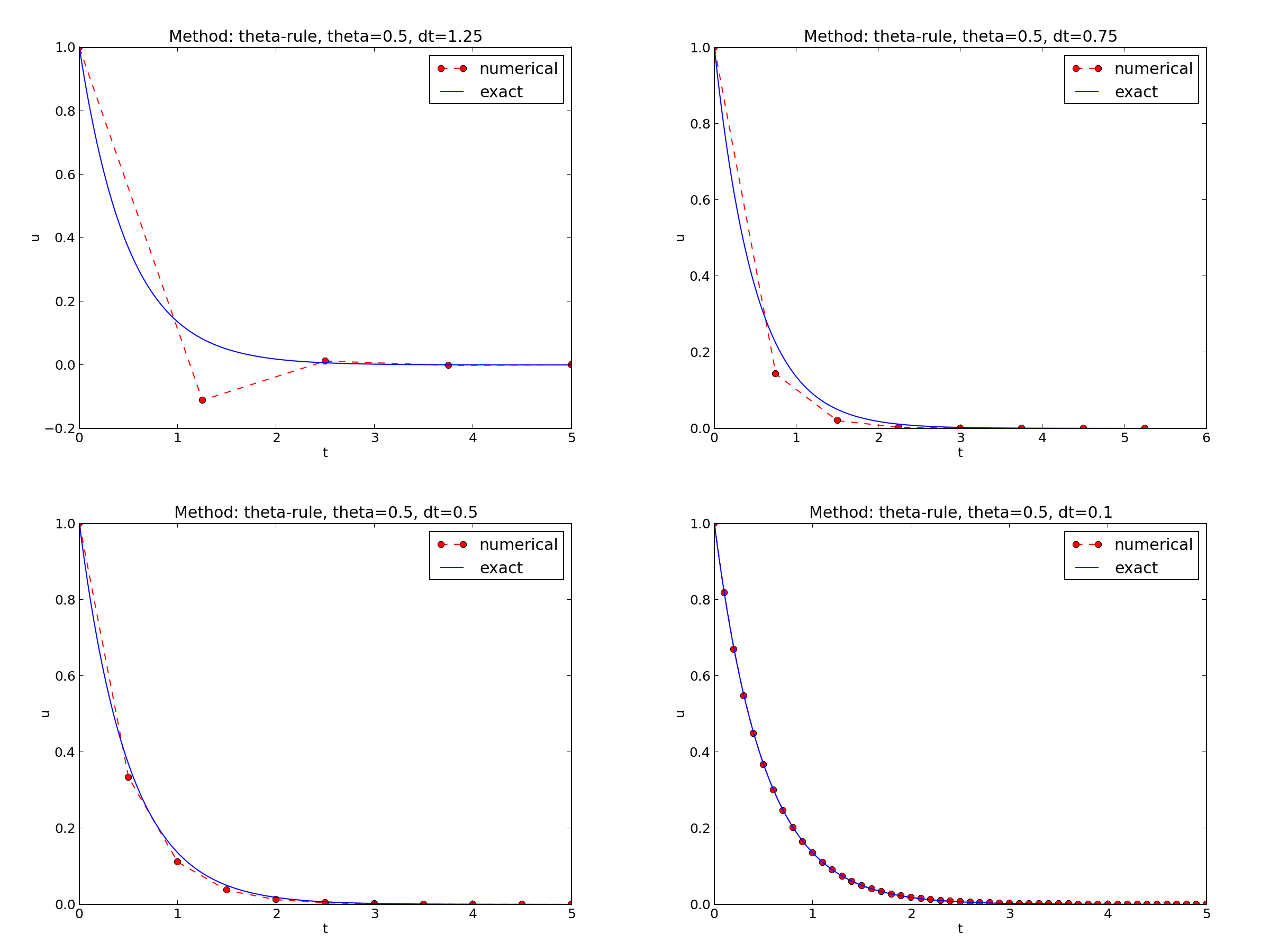

Discouraging numerical solutions

Experimental investigation of oscillatory solutions

Exact numerical solution

Stability

Comparing Amplification Factors

Series Expansion of Amplification Factors

Local error

Analytical comparison of schemes

The real (global) error at a point

Integrated errors

Exercises

Exercise 11: Explore the \( \theta \)-rule for exponential growth

Exercise 12: Summarize investigations in a report

Exercise 13: Plot amplification factors for exponential growth

Model extensions

Generalization: including a variable coefficient

Generalization: including a source term

Schemes

Implementation of the generalized model problem

Deriving the \( \theta \)-rule formula

The Python code

Coding of variable coefficients

Verification via trivial solutions

Verification via manufactured solutions

Extension to systems of ODEs

General first-order ODEs

Generic form

The Odespy software

Example: Runge-Kutta methods

Remark about using the \( \theta \)-rule in Odespy

Example: Adaptive Runge-Kutta methods

Other schemes

Implicit 2-step backward scheme

The Leapfrog scheme

The filtered Leapfrog scheme

2nd-order Runge-Kutta scheme

A 2nd-order Taylor-series method

2nd-order Adams-Bashforth scheme

3rd-order Adams-Bashforth scheme

Exercises

Exercise 14: Implement the 2-step backward scheme

Exercise 15: Implement the Leapfrog scheme

Exercise 16: Experiment with the Leapfrog scheme

Exercise 17: Analyze the Leapfrog scheme

Exercise 18: Implement the 2nd-order Adams-Bashforth scheme

Exercise 19: Implement the 3rd-order Adams-Bashforth scheme

Exercise 20: Generalize a class implementation

Exercise 21: Generalize an advanced class implementation

Exercise 22: Make a unified implementation of many schemes

Exercise 23: Analyze explicit 2nd-order methods

Applications of exponential decay models

Evolution of a population

Compound interest and inflation

Radioactive Decay

Newton's law of cooling

Decay of atmospheric pressure with altitude

Multiple atmospheric layers

Simplification: \( L=0 \)

Simplification: one-layer model

Vertical motion of a body in a viscous fluid

Overview of forces

Equation of motion

Terminal velocity

A Crank-Nicolson scheme

Physical data

Verification

Decay ODEs from solving a PDE by Fourier expansions

Exercises

Exercise 24: Simulate the pressure drop in the atmosphere

Exercise 25: Make a program for vertical motion in a fluid

Exercise 26: Plot forces acting in vertical motion in a fluid

Exercise 27: Simulate a free fall of a parachute jumper

Exercise 28: Simulate a complete parachute jump

Exercise 29: Simulate a rising ball in water

Exercise 30: Radioactive decay of Carbon-14

Exercise 31: Radioactive decay of two substances

Exercise 32: Find time of murder from body temperature

Exercise 33: Simulate an oscillating cooling process

Exercise 34: Compute \( y=|x| \) by solving an ODE

Exercise 35: Simulate growth of a fortune with random interest rate

Exercise 36: Simulate sudden environmental changes for a population

Exercise 37: Simulate oscillating environment for a population

Exercise 38: Simulate logistic growth

Exercise 39: Rederive the equation for continuous compound interest

Finite difference methods for partial differential equations (PDEs) employ a range of concepts and tools that can be introduced and illustrated in the context of simple ordinary differential equation (ODE) examples. By first working with ODEs, we keep the mathematical problems to be solved as simple as possible (but no simpler), thereby allowing full focus on understanding the concepts and tools that will be reused and further extended when addressing finite difference methods for time-dependent PDEs. The forthcoming treatment of ODEs is therefore solely dominated by reasoning and methods that directly carry over to numerical methods for PDEs.

We study two model problems: an ODE for a decaying phenomena, which will be relevant for PDEs of diffusive nature, and an ODE for oscillating phenomena, which will be relevant for PDEs of wave nature. Both problems are linear with known analytical solutions such that we can easily assess the quality of various numerical methods and analyze their behavior.

The purpose of this module is to explain finite difference methods in detail for a simple ordinary differential equation (ODE). Emphasis is put on the reasoning when discretizing the problem, various ways of programming the methods, how to verify that the implementation is correct, experimental investigations of the numerical behavior of the methods, and theoretical analysis of the methods to explain the observations.

Our model problem is perhaps the simplest ODE:

$$ \begin{equation*} u'(t) = -au(t), \end{equation*} $$ Here, \( a>0 \) is a constant and \( u'(t) \) means differentiation with respect to time \( t \). This type of equation arises in a number of widely different phenomena where some quantity \( u \) undergoes exponential reduction. Examples include radioactive decay, population decay, investment decay, cooling of an object, pressure decay in the atmosphere, and retarded motion in fluids (for some of these models, \( a \) can be negative as well). Studying numerical solution methods for this simple ODE gives important insight that can be reused for diffusion PDEs.

The analytical solution of the ODE is found by the method of separation of variables, resulting in

$$ \begin{equation*} u(t) = Ce^{-at},\end{equation*} $$ for any arbitrary constant \( C \). To formulate a mathematical problem for which there is a unique solution, we need a condition to fix the value of \( C \). This condition is known as the initial condition and stated as \( u(0)=I \). That is, we know the value \( I \) of \( u \) when the process starts at \( t=0 \). The exact solution is then \( u(t)=I\exp{(-at)} \).

We seek the solution \( u(t) \) of the ODE for \( t\in (0,T] \). The point \( t=0 \) is not included since we know \( u \) here and assume that the equation governs \( u \) for \( t>0 \). The complete ODE problem then reads: find \( u(t) \) such that

$$ \begin{equation} u' = -au,\ t\in (0,T], \quad u(0)=I\thinspace . \label{decay:problem} \end{equation} $$ This is known as a continuous problem because the parameter \( t \) varies continuously from \( 0 \) to \( T \). For each \( t \) we have a corresponding \( u(t) \). There are hence infinitely many values of \( t \) and \( u(t) \). The purpose of a numerical method is to formulate a corresponding discrete problem whose solution is characterized by a finite number of values, which can be computed in a finite number of steps on a computer.

Solving an ODE like \eqref{decay:problem} by a finite difference method consists of the following four steps:

The time domain \( [0,T] \) is represented by a finite number of \( N+1 \) points

$$ \begin{equation} 0 = t_0 < t_1 < t_2 < \cdots < t_{N-1} < t_N = T\thinspace . \end{equation} $$ The collection of points \( t_0,t_1,\ldots,t_N \) constitutes a mesh or grid. Often the mesh points will be uniformly spaced in the domain \( [0,T] \), which means that the spacing \( t_{n+1}-t_n \) is the same for all \( n \). This spacing is then often denoted by \( \Delta t \), in this case \( t_n=n\Delta t \).

We seek the solution \( u \) at the mesh points: \( u(t_n) \), \( n=1,2,\ldots,N \) (note that \( u^0 \) is already known as \( I \)). A notational short-form for \( u(t_n) \), which will be used extensively, is \( u^{n} \). More precisely, we let \( u^n \) be the numerical approximation to the exact solution at \( t=t_n \), \( u(t_n) \). When we need to clearly distinguish the numerical and the exact solution, we often place a subscript e on the exact solution, as in \( {\uex}(t_n) \). Figure 1 shows the \( t_n \) and \( u_n \) points for \( n=0,1,\ldots,N=7 \) as well as \( \uex(t) \) as the dashed line.

Since finite difference methods produce solutions at the mesh points only, it is an open question what the solution is between the mesh points. One can use methods for interpolation to compute the value of \( u \) between mesh points. The simplest (and most widely used) interpolation method is to assume that \( u \) varies linearly between the mesh points, see Figure 2. Given \( u^{n} \) and \( u^{n+1} \), the value of \( u \) at some \( t\in [t_{n}, t_{n+1}] \) is by linear interpolation

$$ \begin{equation} u(t) \approx u^n + \frac{u^{n+1}-u^n}{t_{n+1}-t_n}(t - t_n)\thinspace . \end{equation} $$

Figure 2: Linear interpolation between the discrete solution values (dashed curve is exact solution).

The ODE is supposed to hold for all \( t\in (0,T] \), i.e., at an infinite number of points. Now we relax that requirement and require that the ODE is fulfilled at a finite set of discrete points in time. The mesh points \( t_1,t_2,\ldots,t_N \) are a natural choice of points. The original ODE is then reduced to the following \( N \) equations:

$$ \begin{equation} u'(t_n) = -au(t_n),\quad n=1,\ldots,N\thinspace . \label{decay:step2} \end{equation} $$

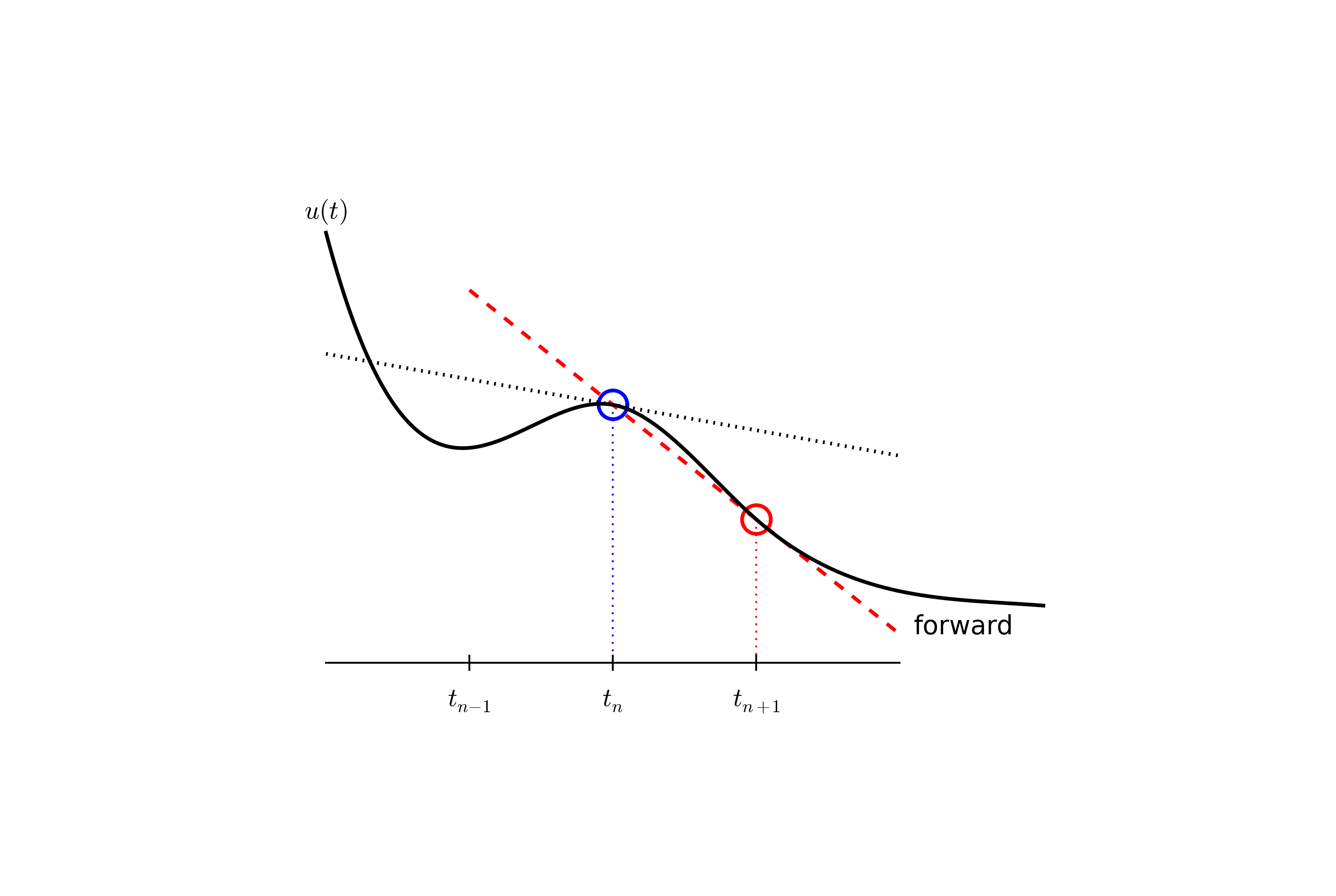

The next and most essential step of the method is to replace the derivative \( u' \) by a finite difference approximation. Let us first try a one-sided difference approximation (see Figure 3),

$$ \begin{equation} u'(t_n) \approx \frac{u^{n+1}-u^{n}}{t_{n+1}-t_n}\thinspace . \label{decay:FEdiff} \end{equation} $$ Inserting this approximation in \eqref{decay:step2} results in

$$ \begin{equation} \frac{u^{n+1}-u^{n}}{t_{n+1}-t_n} = -au^{n},\quad n=0,1,\ldots,N-1\thinspace . \label{decay:step3} \end{equation} $$ This equation is the discrete counterpart to the original ODE problem \eqref{decay:problem}, and often known as a finite difference scheme, which yields a straightforward way to compute the solution at the mesh points (\( u(t_n) \), \( n=1,2,\ldots,N \)) as shown next.

The final step is to identify the computational algorithm to be implemented in a program. The key observation here is to realize that \eqref{decay:step3} can be used to compute \( u^{n+1} \) if \( u^n \) is known. Starting with \( n=0 \), \( u^0 \) is known since \( u^0=u(0)=I \), and \eqref{decay:step3} gives an equation for \( u^1 \). Knowing \( u^1 \), \( u^2 \) can be found from \eqref{decay:step3}. In general, \( u^n \) in \eqref{decay:step3} can be assumed known, and then we can easily solve for the unknown \( u^{n+1} \):

$$ \begin{equation} u^{n+1} = u^n - a(t_{n+1} -t_n)u^n\thinspace . \label{decay:FE} \end{equation} $$ We shall refer to \eqref{decay:FE} as the Forward Euler (FE) scheme for our model problem. From a mathematical point of view, equations of the form \eqref{decay:FE} are known as difference equations since they express how differences in \( u \), like \( u^{n+1}-u^n \), evolve with \( n \). The finite difference method can be viewed as a method for turning a differential equation into a difference equation.

Computation with \eqref{decay:FE} is straightforward:

$$ \begin{align*} u_0 &= I,\\ u_1 & = u^0 - a(t_{1} -t_0)u^0 = I(1-a(t_1-t_0)),\\ u_2 & = u^1 - a(t_{2} -t_1)u^1 = I(1-a(t_1-t_0))(1 - a(t_2-t_1)),\\ u^3 &= u^2 - a(t_{3} -t_2)u^2 = I(1-a(t_1-t_0))(1 - a(t_2-t_1))(1 - a(t_3-t_2)), \end{align*} $$ and so on until we reach \( u^N \). In the case \( t_{n+1}-t_n \) is a constant, denoted by \( \Delta t \), we realize from the above calculations that

$$ \begin{align*} u_0 &= I,\\ u_1 & = I(1-a\Delta t),\\ u_2 & = I(1-a\Delta t)^2,\\ u^3 &= I(1-a\Delta t)^3,\\ &\vdots\\ u^N &= I(1-a\Delta t)^N\thinspace . \end{align*} $$ This means that we have found a closed formula for \( u^n \), and there is no need to let a computer generate the sequence \( u^1, u^2, u^3, \ldots \). However, finding such a formula for \( u^n \) is possible only for a few very simple problems.

As the next sections will show, the scheme \eqref{decay:FE} is just one out of many alternative finite difference (and other) schemes for the model problem \eqref{decay:problem}.

There are many choices of difference approximations in step 3 of the finite difference method as presented in the previous section. Another alternative is

$$ \begin{equation} u'(t_n) \approx \frac{u^{n}-u^{n-1}}{t_{n}-t_{n-1}}\thinspace . \label{decay:BEdiff} \end{equation} $$ Since this difference is based on going backward in time (\( t_{n-1} \)) for information, it is known as the Backward Euler difference. Figure 5 explains the idea.

Inserting \eqref{decay:BEdiff} in \eqref{decay:step2} yields the Backward Euler (BE) scheme:

$$ \begin{equation} \frac{u^{n}-u^{n-1}}{t_{n}-t_{n-1}} = -a u^n\thinspace . \label{decay:BE0} \end{equation} $$ We assume, as explained under step 4 in the section The Forward Euler scheme, that we have computed \( u^0, u^1, \ldots, u^{n-1} \) such that \eqref{decay:BE0} can be used to compute \( u^n \). For direct similarity with the Forward Euler scheme \eqref{decay:FE} we replace \( n \) by \( n+1 \) in \eqref{decay:BE0} and solve for the unknown value \( u^{n+1} \):

$$ \begin{equation} u^{n+1} = \frac{1}{1+ a(t_{n+1}-t_n)} u^n\thinspace . \label{decay:BE} \end{equation} $$

The finite difference approximations used to derive the schemes \eqref{decay:FE} and \eqref{decay:BE} are both one-sided differences, known to be less accurate than central (or midpoint) differences. We shall now construct a central difference at \( t_{n+1/2}=\frac{1}{2} (t_n + t_{n+1}) \), or \( t_{n+1/2}=(n+\frac{1}{2})\Delta t \) if the mesh spacing is uniform in time. The approximation reads

$$ \begin{equation} u'(t_{n+\frac{1}{2}}) \approx \frac{u^{n+1}-u^n}{t_{n+1}-t_n}\thinspace . \label{decay:CNdiff} \end{equation} $$ Note that the fraction on the right-hand side is the same as for the Forward Euler approximation \eqref{decay:FEdiff} and the Backward Euler approximation \eqref{decay:BEdiff} (with \( n \) replaced by \( n+1 \)). The accuracy of this fraction as an approximation to the derivative of \( u \) depends on where we seek the derivative: in the center of the interval \( [t_{n},t_{n+1}] \) or at the end points.

With the formula \eqref{decay:CNdiff}, where \( u' \) is evaluated at \( t_{n+1/2} \), it is natural to demand the ODE to be fulfilled at the time points between the mesh points:

$$ \begin{equation} u'(t_{n+\frac{1}{2}}) = -au(t_{n+\frac{1}{2}}),\quad n=0,\ldots,N-1\thinspace . \label{decay:step2m} \end{equation} $$ Using \eqref{decay:CNdiff} in \eqref{decay:step2m} results in

$$ \begin{equation} \frac{u^{n+1}-u^n}{t_{n+1}-t_n} = -au^{n+\frac{1}{2}}, \label{decay:CN0} \end{equation} $$ where \( u^{n+\frac{1}{2}} \) is a short form for \( u(t_{n+\frac{1}{2}}) \). The problem is that we aim to compute \( u^n \) for integer \( n \), implying that \( u^{n+\frac{1}{2}} \) is not a quantity computed by our method. It must be expressed by the quantities that we actually produce, i.e., \( u \) at the mesh points. One possibility is to approximate \( u^{n+\frac{1}{2}} \) as an average of the \( u \) values at the neighboring mesh points:

$$ \begin{equation} u^{n+\frac{1}{2}} \approx \frac{1}{2} (u^n + u^{n+1})\thinspace . \label{decay:uhalfavg} \end{equation} $$ Using \eqref{decay:uhalfavg} in \eqref{decay:CN0} results in

$$ \begin{equation} \frac{u^{n+1}-u^n}{t_{n+1}-t_n} = -a\frac{1}{2} (u^n + u^{n+1})\thinspace . \label{decay:CN1} \end{equation} $$ Figure 5 sketches the geometric interpretation of such a centered difference.

We assume that \( u^n \) is already computed so that \( u^{n+1} \) is the unknown, which we can solve for:

$$ \begin{equation} u^{n+1} = \frac{1-\frac{1}{2} a(t_{n+1}-t_n)}{1 + \frac{1}{2} a(t_{n+1}-t_n)}u^n\thinspace . \label{decay:CN} \end{equation} $$ The finite difference scheme \eqref{decay:CN} is known as the midpoint scheme or the Crank-Nicolson (CN) scheme. We shall use the latter name.

Let us reconsider the derivation of the Forward Euler, Backward Euler, and Crank-Nicolson schemes. In all the mentioned schemes we replace \( u' \) by the fraction

$$ \begin{equation*} \frac{u^{n+1}-u^{n}}{t_{n+1}-t_n},\end{equation*} $$ and the difference between the methods lies in which point this fraction approximates the derivative; i.e., in which point we sample the ODE. So far this has been the end points or the midpoint of \( [t_n,t_{n+1}] \). However, we may choose any point \( \tilde t \in [t_n,t_{n+1}] \). The difficulty is that evaluating the right-hand side \( -au \) at an arbitrary point faces the same problem as in the section The Crank-Nicolson scheme: the point value must be expressed by the discrete \( u \) quantities that we compute by the scheme, i.e., \( u^n \) and \( u^{n+1} \). Following the averaging idea from the section The Crank-Nicolson scheme, the value of \( u \) at an arbitrary point \( \tilde t \) can be calculated as a weighted average, which generalizes the arithmetic average \( \frac{1}{2}u^n + \frac{1}{2}u^{n+1} \). If we express \( \tilde t \) as a weighted average $$ t_{n+\theta} = \theta t_{n+1} + (1-\theta) t_{n},$$ where \( \theta\in [0,1] \) is the weighting factor, we can write

$$ \begin{equation} u(\tilde t) = u(\theta t_{n+1} + (1-\theta) t_{n}) \approx \theta u^{n+1} + (1-\theta) u^{n}\thinspace . \label{decay:thetaavg} \end{equation} $$

We can now let the ODE hold at the point \( \tilde t\in [t_n,t_{n+1}] \), approximate \( u' \) by the fraction \( (u^{n+1}-u^{n})/(t_{n+1}-t_n) \), and approximate the right-hand side \( -au \) by the weighted average \eqref{decay:thetaavg}. The result is

$$ \begin{equation} \frac{u^{n+1}-u^{n}}{t_{n+1}-t_n} = -a (\theta u^{n+1} + (1-\theta) u^{n}) \label{decay:th0} \thinspace . \end{equation} $$ This is a generalized scheme for our model problem: \( \theta =0 \) gives the Forward Euler scheme, \( \theta =1 \) gives the Backward Euler scheme, and \( \theta =1/2 \) gives the Crank-Nicolson scheme. In addition, we may choose any other value of \( \theta \) in \( [0,1] \).

As before, \( u^n \) is considered known and \( u^{n+1} \) unknown, so we solve for the latter:

$$ \begin{equation} u^{n+1} = \frac{1 - (1-\theta) a(t_{n+1}-t_n)}{1 + \theta a(t_{n+1}-t_n)}\thinspace . \label{decay:th} \end{equation} $$ This scheme is known as the \( \theta \)-rule, or alternatively written as the "theta-rule".

All schemes up to now have been formulated for a general non-uniform mesh in time: \( t_0,t_1,\ldots,t_N \). Non-uniform meshes are highly relevant since one can use many points in regions where \( u \) varies rapidly, and save points in regions where \( u \) is slowly varying. This is the key idea of adaptive methods where the spacing of the mesh points are determined as the computations proceed.

However, a uniformly distributed set of mesh points is very common and sufficient for many applications. It therefore makes sense to present the finite difference schemes for a uniform point distribution \( t_n=n\Delta t \), where \( \Delta t \) is the constant spacing between the mesh points, also referred to as the time step. The resulting formulas look simpler and are perhaps more well known:

$$ \begin{align} u^{n+1} &= (1 - a\Delta t )u^n \quad (\hbox{FE}) \label{decay:FE:u}\\ u^{n+1} &= \frac{1}{1+ a\Delta t} u^n \quad (\hbox{BE}) \label{decay:BE:u}\\ u^{n+1} &= \frac{1-\frac{1}{2} a\Delta t}{1 + \frac{1}{2} a\Delta t} u^n \quad \quad (\hbox{CN}) \label{decay:CN:u}\\ u^{n+1} &= \frac{1 - (1-\theta) a\Delta t}{1 + \theta a\Delta t}u^n \quad (\theta-\hbox{rule}) \label{decay:th:u} \end{align} $$

Not surprisingly, we present alternative schemes because they have different pros and cons, both for the simple ODE in question (which can easily be solved as accurately as desired), and for more advanced differential equation problems.

Finite difference formulas can be tedious to write and read, especially for differential equations with many terms and many derivatives. To save space and help the reader of the scheme to quickly see the nature of the difference approximations, we introduce a compact notation. A forward difference approximation is denoted by the \( D_t^+ \) operator:

$$ \begin{equation} [D_t^+u]^n = \frac{u^{n+1} - u^{n}}{\Delta t} \approx \frac{d}{dt} u(t_n) \label{fd:D:f} \thinspace . \end{equation} $$ The notation consists of an operator that approximates differentiation with respect to an independent variable, here \( t \). The operator is built of the symbol \( D \), with the variable as subscript and a superscript denoting the type of difference. The superscript \( {}^+ \) indicates a forward difference. We place square brackets around the operator and the function it operates on and specify the mesh point, where the operator is acting, by a superscript.

The corresponding operator notation for a centered difference and a backward difference reads

$$ \begin{equation} [D_tu]^n = \frac{u^{n+\frac{1}{2}} - u^{n-\frac{1}{2}}}{\Delta t} \approx \frac{d}{dt} u(t_n), \label{fd:D:c} \end{equation} $$ and $$ \begin{equation} [D_t^-u]^n = \frac{u^{n} - u^{n-1}}{\Delta t} \approx \frac{d}{dt} u(t_n) \label{fd:D:b} \thinspace . \end{equation} $$ Note that the superscript \( {}^- \) denotes the backward difference, while no superscript implies a central difference.

An averaging operator is also convenient to have:

$$ \begin{equation} [\overline{u}^{t}]^n = \frac{1}{2} (u^{n-\frac{1}{2}} + u^{n+\frac{1}{2}} ) \approx u(t_n) \label{fd:mean:a} \end{equation} $$ The superscript \( t \) indicates that the average is taken along the time coordinate. The common average \( (u^n + u^{n+1})/2 \) can now be expressed as \( [\overline{u}^{t}]^{n+1/2} \).

The Backward Euler finite difference approximation to \( u'=-au \) can be written as follows utilizing the compact notation:

$$ \begin{equation*} [D_t^-u]^n = -au^n \thinspace . \end{equation*} $$ In difference equations we often place the square brackets around the whole equation, to indicate at which mesh point the equation applies, since each term is supposed to be approximated at the same point:

$$ \begin{equation} [D_t^- u = -au]^n \thinspace . \end{equation} $$ The Forward Euler scheme takes the form

$$ \begin{equation} [D_t^+ u = -au]^n, \end{equation} $$ while the Crank-Nicolson scheme is written as

$$ \begin{equation} [D_t u = -a\overline{u}^t]^{n+\frac{1}{2}}\thinspace . \label{fd:compact:ex:CN} \end{equation} $$ Just apply \eqref{fd:D:c} and \eqref{fd:mean:a} and write out the expressions to see that \eqref{fd:compact:ex:CN} is indeed the Crank-Nicolson scheme.

The \( \theta \)-rule can be specified by

$$ \begin{equation} [\bar D_t u = -a\overline{u}^{t,\theta}]^{n+\theta}, \label{decay:fd1:op:theta} \end{equation} $$ if we define a new time difference and a weighted averaging operator:

$$ \begin{equation} [\bar D_t u]^{n+\theta} = \frac{u^{n+1}-u^n}{t^{n+1}-t^n}, \label{decay:fd1:Du:theta} \end{equation} \begin{equation} [\overline{u}^{t,\theta}]^{n+\theta} = (1-\theta)u^{n} + \theta u^{n+1} \approx u(t_{n+\theta}), \label{decay:fd1:wmean:a} \end{equation} $$ where \( \theta\in [0,1] \). Note that for \( \theta =1/2 \) we recover the standard centered difference and the standard arithmetic average. The idea in \eqref{decay:fd1:op:theta} is to sample the equation at \( t_{n+\theta} \), use a skew difference at that point \( [\bar D_t u]^{n+\theta} \), and a shifted mean value. An alternative notation is $$ [D_t u]^{n+1/2} = \theta [-au]^{n+1} + (1-\theta)[-au]^{n}\thinspace .$$

Looking at the various examples above and comparing them with the underlying differential equations, we see immediately which difference approximations that have been used and at which point they apply. Therefore, the compact notation efficiently communicates the reasoning behind turning a differential equation into a difference equation.

The purpose now is to make a computer program for solving $$ u'(t) = -au(t),\quad t\in (0,T], \quad u(0)=I, $$ and display the solution on the screen, preferably together with the exact solution. We shall also be concerned with how we can test that the implementation is correct.

All programs referred to in this section are found in the src/decay directory.

Mathematical problem. We want to explore the Forward Euler scheme, the Backward Euler, and the Crank-Nicolson schemes applied to our model problem. From an implementational points of view, it is advantageous to implement the \( \theta \)-rule $$ u^{n+1} = \frac{1 - (1-\theta) a\Delta t}{1 + \theta a\Delta t}u^n, $$ since it can generate the three other schemes by various of choices of \( \theta \): \( \theta=0 \) for Forward Euler, \( \theta =1 \) for Backward Euler, and \( \theta =1/2 \) for Crank-Nicolson. Given \( a \), \( u^0=I \), \( T \), and \( \Delta t \), our task is to use the \( \theta \)-rule to compute \( u^1, u^2,\ldots,u^N \), where \( t_N=N\Delta t \), and \( N \) the closest integer to \( T/\Delta t \).

Computer Language: Python. Any programming language can be used to generate the \( u^{n+1} \) values from the formula above. However, in this document we shall mainly make use of Python of several reasons:

We choose to have an array u for storing the \( u^n \) values, \( n=0,1,\ldots,N \). The algorithmic steps are

The following Python function takes the input data of the problem (\( I \), \( a \), \( T \), \( \Delta t \), \( \theta \)) as arguments and returns two arrays with the solution \( u^0,\ldots,u^N \) and the mesh points \( t_0,\ldots,t_N \), respectively:

from numpy import *

def solver(I, a, T, dt, theta):

"""Solve u'=-a*u, u(0)=I, for t in (0,T] with steps of dt."""

N = int(T/dt) # no of time intervals

T = N*dt # adjust T to fit time step dt

u = zeros(N+1) # array of u[n] values

t = linspace(0, T, N+1) # time mesh

u[0] = I # assign initial condition

for n in range(0, N): # n=0,1,...,N-1

u[n+1] = (1 - (1-theta)*a*dt)/(1 + theta*dt*a)*u[n]

return u, t

The numpy library contains a lot of functions for array computing. Most of the function names are similar to what is found in the alternative scientific computing language MATLAB. Here we make use of

To compute with the solver function, we need to call it. Here is a sample call:

u, t = solver(I=1, a=2, T=8, dt=0.8, theta=1)

The shown implementation of the solver may face problems and wrong results if T, a, dt, and theta are given as integers, see Exercise 1: Experiment with integer division and Exercise 2: Experiment with wrong computations. The problem is related to integer division in Python (as well as in Fortran, C, and C++): 1/2 becomes 0, while 1.0/2, 1/2.0, or 1.0/2.0 all become are 0.5. It is enough that at least the nominator or the denominator is a real number (i.e., a float object) to ensure correct mathematical division. Inserting a conversion dt = float(dt) guarantees that dt is float and avoids problems in Exercise 2: Experiment with wrong computations.

Another problem with computing \( N=T/\Delta t \) is that we should round \( N \) to the nearest integer. With N = int(T/dt) the int operation picks the largest integer smaller than T/dt. Correct rounding is obtained by

N = int(round(T/dt))

The complete version of our improved, safer solver function then becomes

from numpy import *

def solver(I, a, T, dt, theta):

"""Solve u'=-a*u, u(0)=I, for t in (0,T] with steps of dt."""

dt = float(dt) # avoid integer division

N = int(round(T/dt)) # no of time intervals

T = N*dt # adjust T to fit time step dt

u = zeros(N+1) # array of u[n] values

t = linspace(0, T, N+1) # time mesh

u[0] = I # assign initial condition

for n in range(0, N): # n=0,1,...,N-1

u[n+1] = (1 - (1-theta)*a*dt)/(1 + theta*dt*a)*u[n]

return u, t

Right below the header line in the solver function there is a Python string enclosed in triple double quotes """. The purpose of this string object is to document what the function does and what the arguments are. In this case the necessary documentation do not span more than one line, but with triple double quoted strings the text may span several lines:

def solver(I, a, T, dt, theta):

"""

Solve

u'(t) = -a*u(t),

with initial condition u(0)=I, for t in the time interval

(0,T]. The time interval is divided into time steps of

length dt.

theta=1 corresponds to the Backward Euler scheme, theta=0

to the Forward Euler scheme, and theta=0.5 to the Crank-

Nicolson method.

"""

...

Such documentation strings appearing right after the header of a function are called doc strings. There are tools that can automatically produce nicely formatted documentation by extracting the definition of functions and the contents of doc strings.

It is strongly recommended to equip any function whose purpose is not obvious with a doc string. Nevertheless, the forthcoming text deviates from this rule if the function is explained in the text.

Having computed the discrete solution u, it is natural to look at the numbers:

# Write out a table of t and u values:

for i in range(len(t)):

print t[i], u[i]

This compact print statement gives unfortunately quite ugly output because the t and u values are not aligned in nicely formatted columns. To fix this problem, we recommend to use the printf format, supported most programming languages inherited from C. Another choice is Python's recent format string syntax.

Writing t[i] and u[i] in two nicely formatted columns is done like this with the printf format:

print 't=%6.3f u=%g' % (t[i], u[i])

The percentage signs signify "slots" in the text where the variables listed at the end of the statement are inserted. For each "slot" one must specify a format for how the variable is going to appear in the string: s for pure text, d for an integer, g for a real number written as compactly as possible, 9.3E for scientific notation with three decimals in a field of width 9 characters (e.g., -1.351E-2), or .2f for a standard decimal notation, here with two decimals, formatted with minimum width. The printf syntax provides a quick way of formatting tabular output of numbers with full control of the layout.

The alternative format string syntax looks like

print 't={t:6.3f} u={u:g}'.format(t=t[i], u=u[i])

As seen, this format allows logical names in the "slots" where t[i] and u[i] are to be inserted. The "slots" are surrounded by curly braces, and the logical name is followed by a colon and then the printf-like specification of how to format real numbers, integers, or strings.

The function and main program shown above must be placed in a file, say with name dc_v1.py. Make sure you write the code with a suitable text editor (Gedit, Emacs, Vim, Notepad++, or similar). The program is run by executing the file this way:

Terminal> python dc_v1.py

The text Terminal> just signifies a prompt in a Unix/Linux or DOS terminal window. After this prompt (which will look different in your terminal window, depending on the terminal application and how it is set up), commands like python dc_v1.py can be issued. These commands are interpreted by the operating system.

We strongly recommend to run Python programs within the IPython shell. First start IPython by typing ipython in the terminal window. Inside the IPython shell, our program dc_v1.py is run by the command run dc_v1.py. The advantage of running programs in IPython are many: previous commands are easily recalled, %pdb turns on debugging so that variables can be examined if the program aborts due to an exception, output of commands are stored in variables, programs and statements can be profiled, any operating system command can be executed, modules can be loaded automatically and other customizations can be performed when starting IPython -- to mention a few of the most useful features.

Although running programs in IPython is strongly recommended, most execution examples in the forthcoming text use the standard Python shell with prompt >>> and run programs through a typesetting like Terminal> python programname. The reason is that such typesetting makes the text more compact in the vertical direction than showing sessions with IPython syntax.

It is easy to make mistakes while deriving and implementing numerical algorithms, so we should never believe in the printed \( u \) values before they have been thoroughly verified. The most obvious idea is to compare the computed solution with the exact solution, when that exists, but there will always be a discrepancy between these two solutions because of the numerical approximations. The challenging question is whether we have the mathematically correct discrepancy or if we have another, maybe small, discrepancy due to both an approximation error and an error in the implementation.

The purpose of verifying a program is to bring evidence for the fact that there are no errors in the implementation. To avoid mixing unavoidable approximation errors and undesired implementation errors, we should try to make tests where we have some exact computation of the discrete solution or at least parts of it.

The simplest approach to produce a correct reference for the discrete solution \( u \) of finite difference equations is to compute a few steps of the algorithm by hand. Then we can compare the hand calculations with numbers produced by the program.

A straightforward approach is to use a calculator and compute \( u^1 \), \( u^2 \), and \( u^3 \). However, the chosen values of \( I \) and \( \theta \) given in the execution example above are not good, because the numbers 0 and 1 can easily simplify formulas too much for test purposes. For example, with \( \theta =1 \) the nominator in the formula for \( u^n \) will be the same for all \( a \) and \( \Delta t \) values. One should therefore choose more "arbitrary" values, say \( \theta =0.8 \) and \( I=0.1 \). Hand calculations with the aid of a calculator gives

$$ A\equiv \frac{1 - (1-\theta) a\Delta t}{1 + \theta a \Delta t} = 0.298245614035$$ $$ \begin{align*} u^1 &= AI=0.0298245614035,\\ u^2 &= Au^1= 0.00889504462912,\\ u^3 &=Au^2= 0.00265290804728 \end{align*} $$

Comparison of these manual calculations with the result of the solver function is carried out in the function

def verify_three_steps():

"""Compare three steps with known manual computations."""

theta = 0.8; a = 2; I = 0.1; dt = 0.8

u_by_hand = array([I,

0.0298245614035,

0.00889504462912,

0.00265290804728])

N = 3 # number of time steps

u, t = solver(I=I, a=a, T=N*dt, dt=dt, theta=theta)

tol = 1E-15 # tolerance for comparing floats

difference = abs(u - u_by_hand).max()

success = difference <= tol

return success

The main program, where we call the solver function and print u, is now put in a separate function main:

def main():

u, t = solver(I=1, a=2, T=8, dt=0.8, theta=1)

# Write out a table of t and u values:

for i in range(len(t)):

print 't=%6.3f u=%g' % (t[i], u[i])

# or print 't={t:6.3f} u={u:g}'.format(t=t[i], u=u[i])

The main program in the file may now first run the verification test and then go on with the real simulation (main()) only if the test is passed:

if verify_three_steps():

main()

else:

print 'Bug in the implementation!'

Since the verification test is always done, future errors introduced accidentally in the program have a good chance of being detected.

It is essential that verification tests can be automatically run at any time. For this purpose, there are test frameworks and corresponding programming rules that allow us to request running through a suite of test cases, but in this very early stage of program development we just implement and run the verification in our own code so that every detail is visible and understood.

The complete program including the verify_three_steps* functions is found in the file dc_verf1.py.

Sometimes it is possible to find a closed-form exact discrete solution that fulfills the discrete finite difference equations. The implementation can then be verified against the exact discrete solution. This is usually the best technique for verification.

Define $$ A = \frac{1 - (1-\theta) a\Delta t}{1 + \theta a \Delta t}\thinspace . $$ Manual computations with the \( \theta \)-rule results in $$ \begin{align*} u^0 &= I,\\ u^1 &= Au^0 = AI,\\ u^2 &= Au^1 = A^2I,\\ &\vdots\\ u^n &= A^nu^{n-1} = A^nI \thinspace . \end{align*} $$ We have then established the exact discrete solution as $$ \begin{equation} u^n = IA^n \label{decay:un:exact} \thinspace . \end{equation} $$ One should be conscious about the different meanings of the notation on the left- and right-hand side of this equation: on the left, \( n \) is a superscript reflecting a counter of mesh points, while on the right, \( n \) is the power in an exponentiation.

Comparison of the exact discrete solution and the computed solution is done in the following function:

def verify_exact_discrete_solution():

def exact_discrete_solution(n, I, a, theta, dt):

factor = (1 - (1-theta)*a*dt)/(1 + theta*dt*a)

return I*factor**n

theta = 0.8; a = 2; I = 0.1; dt = 0.8

N = int(8/dt) # no of steps

u, t = solver(I=I, a=a, T=N*dt, dt=dt, theta=theta)

u_de = array([exact_discrete_solution(n, I, a, theta, dt)

for n in range(N+1)])

difference = abs(u_de - u).max() # max deviation

tol = 1E-15 # tolerance for comparing floats

success = difference <= tol

return success

Note that one can define a function inside another function (but such a function is invisible outside the function in which it is defined). The complete program is found in the file dc_verf2.py.

Now that we have evidence for a correct implementation, we are in a position to compare the computed \( u^n \) values in the u array with the exact \( u \) values at the mesh points, in order to study the error in the numerical solution.

Let us first make a function for the analytical solution \( \uex(t)=Ie^{-at} \) of the model problem:

def exact_solution(t, I, a):

return I*exp(-a*t)

A natural way to compare the exact and discrete solutions is to calculate their difference at the mesh points:

$$ \begin{equation} e_n = \uex(t_n) - u^n,\quad n=0,1,\ldots,N \thinspace . \end{equation} $$ These numbers are conveniently computed by

u, t = solver(I, a, T, dt, theta) # Numerical solution

u_e = exact_solution(t, I, a)

e = u_e - u

The last two statements make use of array arithmetics: t is an array of mesh points that we pass to exact_solution. This function evaluates -a*t, which is a scalar times an array, meaning that the scalar is multiplied with each array element. The result is an array, let us call it tmp1. Then exp(tmp1) means applying the exponential function to each element in tmp, resulting an array, say tmp2. Finally, I*tmp2 is computed (scalar times array) and u_e refers to this array returned from exact_solution. The expression u_e - u is the difference between two arrays, resulting in a new array referred to by e.

The array e is the current problem's discrete error function. Very often we want to work with just one number reflecting the size of the error. A common choice is to integrate \( e_n^2 \) over the mesh and take the square root. Assuming the exact and discrete solution to vary linearly between the mesh points, the integral is given exactly by the Trapezoidal rule:

$$ \hat E^2 = \Delta t\left(\frac{1}{2}e_0^2 + \frac{1}{2}e_N^2 + \sum_{n=1}^{N-1} e_n^2\right) $$ A common approximation of this expression, for convenience, is

$$ \hat E^2 \approx E^2 = \Delta t\sum_{n=0}^{N} e_n^2 $$ The error in this approximation is not much of a concern: it means that the error measure is not exactly the Trapezoidal rule of an integral, but a slightly different measure. We could equally well have chosen other error messages, but the choice is not important as long as we use the same error measure consistently in all experiments when investigating the error.

The error measure \( \hat E \) or \( E \) is referred to as the \( L_2 \) norm of the discrete error function. The formula for \( E \) will be frequently used: $$ \begin{equation} E = \sqrt{\Delta t\sum_{n=0}^N e_n^2} \label{decay:E} \end{equation} $$ The corresponding Python code, using array arithmetics, reads

E = sqrt(dt*sum(e**2))

The sum function comes from numpy and computes the sum of the elements of an array. Also the sqrt function is from numpy and computes the square root of each element in the array argument.

Instead of doing array computing we can compute with one element at a time:

m = len(u) # length of u array (alt: u.size)

u_e = zeros(m)

t = 0

for i in range(m):

u_e[i] = exact_solution(t, a, I)

t = t + dt

e = zeros(m)

for i in range(m):

e[i] = u_e[i] - u[i]

s = 0 # summation variable

for i in range(m):

s = s + e[i]**2

error = sqrt(dt*s)

Such element-wise computing, often called scalar computing, takes more code, is less readable, and runs much slower than array computing.

Having the t and u arrays, the approximate solution u is visualized by plot(t, u):

from matplotlib.pyplot import *

plot(t, u)

show()

It will be illustrative to also plot \( \uex(t) \) for comparison. Doing a plot(t, u_e) is not exactly what we want: the plot function draws straight lines between the discrete points (t[n], u_e[n]) while \( \uex(t) \) varies as an exponential function between the mesh points. The technique for showing the "exact" variation of \( \uex(t) \) between the mesh points is to introduce a very fine mesh for \( \uex(t) \):

t_e = linspace(0, T, 1001) # fine mesh

u_e = exact_solution(t_e, I, a)

plot(t, u, 'r-') # red line for u

plot(t_e, u_e, 'b-') # blue line for u_e

With more than one curve in the plot we need to associate each curve with a legend. We also want appropriate names on the axis, a title, and a file containing the plot as an image for inclusion in reports. The Matplotlib package (matplotlib.pyplot) contains functions for this purpose. The names of the functions are similar to the plotting functions known from MATLAB. A complete plot session then becomes

from matplotlib.pyplot import *

figure() # create new plot

t_e = linspace(0, T, 1001) # fine mesh for u_e

u_e = exact_solution(t_e, I, a)

plot(t, u, 'r--o') # red dashes w/circles

plot(t_e, u_e, 'b-') # blue line for exact sol.

legend(['numerical', 'exact'])

xlabel('t')

ylabel('u')

title('theta=%g, dt=%g' % (theta, dt))

savefig('%s_%g.png' % (theta, dt))

show()

Note that savefig here creates a PNG file whose name reflects the values of \( \theta \) and \( \Delta t \) so that we can easily distinguish files from different runs with \( \theta \) and \( \Delta t \).

A bit more sophisticated and easy-to-read filename can be generated by mapping the \( \theta \) value to acronyms for the three common schemes: FE (Forward Euler, \( \theta=0 \)), BE (Backward Euler, \( \theta=1 \)), CN (Crank-Nicolson, \( \theta=0.5 \)). A Python dictionary is ideal for such a mapping from numbers to strings:

theta2name = {0: 'FE', 1: 'BE', 0.5: 'CN'}

savefig('%s_%g.png' % (theta2name[theta], dt))

Let us wrap up the computation of the error measure and all the plotting statements in a function explore. This function can be called for various \( \theta \) and \( \Delta t \) values to see how the error varies with the method and the mesh resolution:

def explore(I, a, T, dt, theta=0.5, makeplot=True):

"""

Run a case with the solver, compute error measure,

and plot the numerical and exact solutions (if makeplot=True).

"""

u, t = solver(I, a, T, dt, theta) # Numerical solution

u_e = exact_solution(t, I, a)

e = u_e - u

E = sqrt(dt*sum(e**2))

if makeplot:

figure() # create new plot

t_e = linspace(0, T, 1001) # fine mesh for u_e

u_e = exact_solution(t_e, I, a)

plot(t, u, 'r--o') # red dashes w/circles

plot(t_e, u_e, 'b-') # blue line for exact sol.

legend(['numerical', 'exact'])

xlabel('t')

ylabel('u')

title('theta=%g, dt=%g' % (theta, dt))

theta2name = {0: 'FE', 1: 'BE', 0.5: 'CN'}

savefig('%s_%g.png' % (theta2name[theta], dt))

savefig('%s_%g.pdf' % (theta2name[theta], dt))

savefig('%s_%g.eps' % (theta2name[theta], dt))

show()

return E

The figure() call is key here: without it, a new plot command will draw the new pair of curves in the same plot window, while we want the different pairs to appear in separate windows and files. Calling figure() ensures this.

The explore function stores the plot in three different image file formats: PNG, PDF, and EPS (Encapsulated PostScript). The PNG format is aimed at being included in HTML files, the PDF format in pdfLaTeX documents, and the EPS format in LaTeX documents. Frequently used viewers for these image files on Unix systems are gv (comes with Ghostscript) for the PDF and EPS formats and display (from the ImageMagick) suite for PNG files:

Terminal> gv BE_0.5.pdf

Terminal> gv BE_0.5.eps

Terminal> display BE_0.5.png

The complete code containing the functions above resides in the file dc_plot_mpl.py. Running this program results in

Terminal> python dc_plot_mpl.py

0.0 0.40: 2.105E-01

0.0 0.04: 1.449E-02

0.5 0.40: 3.362E-02

0.5 0.04: 1.887E-04

1.0 0.40: 1.030E-01

1.0 0.04: 1.382E-02

We observe that reducing \( \Delta t \) by a factor of 10 increases the accuracy for all three methods (\( \theta \) values). We also see that the combination of \( \theta=0.5 \) and a small time step \( \Delta t =0.04 \) gives a much more accurate solution, and that \( \theta=0 \) and \( \theta=0 \) with \( \Delta t = 0.4 \) result in the least accurate solutions.

Figure 6 demonstrates that the numerical solution for \( \Delta t=0.4 \) clearly lies below the exact curve, but that the accuracy improves considerably by using 1/10 of this time step.

Figure 6: The Forward Euler scheme for two values of the time step.

Mounting two PNG files, as done in the figure, is easily done by the montage program from the ImageMagick suite:

Terminal> montage -background white -geometry 100% -tile 2x1 \

FE_0.4.png FE_0.04.png FE1.png

Terminal> convert -trim FE1.png FE1.png

The -geometry argument is used to specify the size of the image, and here we preserve the individual sizes of the images. The -tile HxV option specifies H images in the horizontal direction and V images in the vertical direction. A series of image files to be combined are then listed, with the name of the resulting combined image, here FE1.png at the end. The convert -trim command removes surrounding white areas in the figure.

For \LaTeX{} reports it is not recommended to use montage and PNG files as the result has too low resolution. Instead, plots should be made in the PDF format and combined using the pdftk, pdfnup, and pdfcrop tools (on Linux/Unix):

Terminal> pdftk FE_0.4.png FE_0.04.png output tmp.pdf

Terminal> pdfnup --nup 2x1 tmp.pdf # output in tmp-nup.pdf

Terminal> pdfcrop tmp-nup.pdf tmp.pdf # output in tmp.pdf

Terminal> mv tmp.pdf FE1.png

Here, pdftk combines images into a multi-page PDF file, pdfnup combines the images in individual pages to a table of images (pages), and pdfcrop removes white margins in the resulting combined image file.

The behavior of the two other schemes is shown in Figures 7 and 8. Crank-Nicolson is obviously the most accurate scheme from this visual point of view.

Figure 7: The Backward Euler scheme for two values of the time step.

Figure 8: The Crank-Nicolson scheme for two values of the time step.

The SciTools package provides a unified plotting interface, called Easyviz, to many different plotting packages, including Matplotlib. The syntax is very similar to that of Matplotlib and MATLAB. In fact, the plotting commands shown above look the same in SciTool's Easyviz interface, apart from the import statement, which reads

from scitools.std import *

This statement performs a from numpy import * as well as an import of the most common pieces of the Easyviz (scitools.easyviz) package, along with some additional numerical functionality.

With Easyviz one can, using an extended plot command, merge several plotting commands into one, using keyword arguments:

plot(t, u, 'r--o', # red dashes w/circles

t_e, u_e, 'b-', # blue line for exact sol.

legend=['numerical', 'exact'],

xlabel='t',

ylabel='u',

title='theta=%g, dt=%g' % (theta, dt),

savefig='%s_%g.png' % (theta2name[theta], dt),

show=True)

The dc_plot_st.py file contains such a demo.

By default, Easyviz employs Matplotlib for plotting, but Gnuplot and Grace are viable alternatives:

Terminal> python dc_plot_st.py --SCITOOLS_easyviz_backend gnuplot

Terminal> python dc_plot_st.py --SCITOOLS_easyviz_backend grace

The backend used for creating plots (and numerous other options) can be permanently set in SciTool's configuration file.

All the Gnuplot windows are launched without any need to kill one before the next one pops up (as is the case with Matplotlib) and one can press the key 'q' anywhere in a plot window to kill it. Another advantage of Gnuplot is the automatic choice of sensible and distinguishable line types in black-and-white PostScript files (produced by savefig('myplot.eps')).

Regarding functionality for annotating plots with title, labels on the axis, legends, etc., we refer to the documentation of Matplotlib and SciTools for more detailed information on the syntax. The hope is that the programming syntax explained so far suffices for understanding the code and learning more from a combination of the forthcoming examples and other resources such as books and web pages.

It is good programming practice to let programs read input from the user rather than require the user to edit the source code when trying out new values of input parameters. Reading input from the command line is a simple and flexible way of interacting with the user. Python stores all the command-line arguments in the list sys.argv, and there are, in principle, two ways of programming with command-line arguments in Python:

The dc_plot_mpl.py program needs the following input data: \( I \), \( a \), \( T \), an option to turn the plot on or off (makeplot), and a list of \( \Delta t \) values.

The simplest way of reading this input from the command line is to say that the first four command-line arguments correspond to the first four points in the list above, in that order, and that the rest of the command-line arguments are the \( \Delta t \) values. The input given for makeplot can be a string among 'on', 'off', 'True', and 'False'. The code for reading this input is most conveniently put in a function:

import sys

def read_command_line():

if len(sys.argv) < 6:

print 'Usage: %s I a T on/off dt1 dt2 dt3 ...' % \

sys.argv[0]; sys.exit(1) # abort

I = float(sys.argv[1])

a = float(sys.argv[2])

T = float(sys.argv[3])

makeplot = sys.argv[4] in ('on', 'True')

dt_values = [float(arg) for arg in sys.argv[5:]]

return I, a, T, makeplot, dt_values

One should note the following about the constructions in the program above:

def main():

I, a, T, makeplot, dt_values = read_command_line()

for theta in 0, 0.5, 1:

for dt in dt_values:

E = explore(I, a, T, dt, theta, makeplot)

print '%3.1f %6.2f: %12.3E' % (theta, dt, E)

The complete program can be found in dc_cml.py.

Python's ArgumentParser tool in the argparse module makes it easy to create a professional command-line interface to any program. The documentation of `ArgumentParser` demonstrates its versatile applications, so we shall here just list an example containing the most used features. On the command line we want to specify option value pairs for \( I \), \( a \), and \( T \), e.g., --a 3.5 --I 2 --T 2. Including --makeplot turns the plot on and excluding this option turns the plot off. The \( \Delta t \) values can be given as --dt 1 0.5 0.25 0.1 0.01. Each parameter must have a sensible default value so that we specify the option on the command line only when the default value is not suitable.

We introduce a function for defining the mentioned command-line options:

def define_command_line_options():

import argparse

parser = argparse.ArgumentParser()

parser.add_argument('--I', '--initial_condition', type=float,

default=1.0, help='initial condition, u(0)',

metavar='I')

parser.add_argument('--a', type=float,

default=1.0, help='coefficient in ODE',

metavar='a')

parser.add_argument('--T', '--stop_time', type=float,

default=1.0, help='end time of simulation',

metavar='T')

parser.add_argument('--makeplot', action='store_true',

help='display plot or not')

parser.add_argument('--dt', '--time_step_values', type=float,

default=[1.0], help='time step values',

metavar='dt', nargs='+', dest='dt_values')

return parser

Each command-line option is defined through the parser.add_argument method. Alternative options, like the short --I and the more explaining version --initial_condition can be defined. Other arguments are type for the Python object type, a default value, and a help string, which gets printed if the command-line argument -h or --help is included. The metavar argument specifies the value associated with the option when the help string is printed. For example, the option for \( I \) has this help output:

Terminal> python dc_argparse.py -h

...

--I I, --initial_condition I

initial condition, u(0)

...

The structure of this output is

--I metavar, --initial_condition metavar

help-string

The --makeplot option is a pure flag without any value, implying a true value if the flag is present and otherwise a false value. The action='store_true' makes an option for such a flag.

Finally, the --dt option demonstrates how to allow for more than one value (separated by blanks) through the nargs='+' keyword argument. After the command line is parsed, we get an object where the values of the options are stored as attributes. The attribute name is specified by the dist keyword argument, which for the --dt option reads dt_values. Without the dest argument, the value of option --opt is stored as the attribute opt.

The code below demonstrates how to read the command line and extract the values for each option:

def read_command_line():

parser = define_command_line_options()

args = parser.parse_args()

print 'I={}, a={}, T={}, makeplot={}, dt_values={}'.format(

args.I, args.a, args.T, args.makeplot, args.dt_values)

return args.I, args.a, args.T, args.makeplot, args.dt_values

The main function remains the same as in the dc_cml.py code based on reading from sys.argv directly. A complete program using the demo above of ArgumentParser appears in the file dc_argparse.py.

We normally expect that the error \( E \) in the numerical solution is reduced if the mesh size \( \Delta t \) is decreased. More specifically, many numerical methods obey a power-law relation between \( E \) and \( \Delta t \):

$$ \begin{equation} E = C\Delta t^r, \label{decay:E:dt} \end{equation} $$ where \( C \) and \( r \) are (usually unknown) constants independent of \( \Delta t \). The formula \eqref{decay:E:dt} is viewed as an asymptotic model valid for sufficiently small \( \Delta t \). How small is normally hard to estimate without doing numerical estimations of \( r \).

The parameter \( r \) is known as the convergence rate. For example, if the convergence rate is 2, halving \( \Delta t \) reduces the error by a factor of 4. Diminishing \( \Delta t \) then has a greater impact on the error compared with methods that have \( r=1 \). For a given value of \( r \), we refer to the method as of \( r \)-th order. First- and second-order methods are most common in scientific computing.

There are two ways of estimating \( C \) and \( r \) based on a set of \( m \) simulations with corresponding pairs \( (\Delta t_i, E_i) \), \( i=0,\ldots,m-1 \), and \( \Delta t_{i} < \Delta t_{i-1} \) (i.e., decreasing cell size).

The disadvantage of method 1 is that \eqref{decay:E:dt} might not be valid for the coarsest meshes (largest \( \Delta t \) values), and fitting a line to all the data points is then misleading. Method 2 computes convergence rates for pairs of experiments and allows us to see if the sequence \( r_i \) converges to some value as \( i\rightarrow m-2 \). The final \( r_{m-2} \) can then be taken as the convergence rate. If the coarsest meshes have a differing rate, the corresponding time steps are probably too large for \eqref{decay:E:dt} to be valid. That is, those time steps lie outside the asymptotic range of \( \Delta t \) values where the error behave like \eqref{decay:E:dt}.

It is straightforward to extend the main function in the program dc_argparse.py with statements for computing \( r_0, r_1, \ldots, r_{m-2} \) from \eqref{decay:E:dt}:

from math import log

def main():

I, a, T, makeplot, dt_values = read_command_line()

r = {} # estimated convergence rates

for theta in 0, 0.5, 1:

E_values = []

for dt in dt_values:

E = explore(I, a, T, dt, theta, makeplot=False)

E_values.append(E)

# Compute convergence rates

m = len(dt_values)

r[theta] = [log(E_values[i-1]/E_values[i])/

log(dt_values[i-1]/dt_values[i])

for i in range(1, m, 1)]

for theta in r:

print '\nPairwise convergence rates for theta=%g:' % theta

print ' '.join(['%.2f' % r_ for r_ in r[theta]])

return r

The program is called dc_convrate.py.

The r object is a dictionary of lists. The keys in this dictionary are the \( \theta \) values. For example, r[1] holds the list of the \( r_i \) values corresponding to \( \theta=1 \). In the loop for theta in r, the loop variable theta takes on the values of the keys in the dictionary r (in an undetermined ordering). We could simply do a print r[theta] inside the loop, but this would typically yield output of the convergence rates with 16 decimals:

[1.331919482274763, 1.1488178494691532, ...]

Instead, we format each number with 2 decimals, using a list comprehension to turn the list of numbers, r[theta], into a list of formatted strings. Then we join these strings with a space in between to get a sequence of rates on one line in the terminal window. More generally, d.join(list) joins the strings in the list list to one string, with d as delimiter between list[0], list[1], etc.

Here is an example on the outcome of the convergence rate computations:

Terminal> python dc_convrate.py --dt 0.5 0.25 0.1 0.05 0.025 0.01

...

Pairwise convergence rates for theta=0:

1.33 1.15 1.07 1.03 1.02

Pairwise convergence rates for theta=0.5:

2.14 2.07 2.03 2.01 2.01

Pairwise convergence rates for theta=1:

0.98 0.99 0.99 1.00 1.00

The Forward and Backward Euler methods seem to have an \( r \) value which stabilizes at 1, while the Crank-Nicolson seems to be a second-order method with \( r=2 \).

Very often, we have some theory that predicts what \( r \) is for a numerical method. Various theoretical error measures for the \( \theta \)-rule point to \( r=2 \) for \( \theta =0.5 \) and \( r=1 \) otherwise. The computed estimates of \( r \) are in very good agreement with these theoretical values.

The strong practical application of computing convergence rates is for verification: wrong convergence rates point to errors in the code, and correct convergence rates brings evidence that the implementation is correct. Experience shows that bugs in the code easily destroys the expected convergence rate.

Let us experiment with bugs and see the implication on the convergence rate. We may, for instance, forget to multiply by a in the denominator in the updating formula for u[n+1]:

u[n+1] = (1 - (1-theta)*a*dt)/(1 + theta*dt)*u[n]

Running the same dc_convrate.py command as above gives the expected convergence rates (!). Why? The reason is that we just specified the \( \Delta t \) values are relied on default values for other parameters. The default value of \( a \) is 1. Forgetting the factor a has then no effect. This example shows how importance it is to avoid parameters that are 1 or 0 when verifying implementations. Running the code dc_v0.py with \( a=2.1 \) and \( I=0.1 \) yields

Terminal> python dc_convrate.py --a 2.1 --I 0.1 \

--dt 0.5 0.25 0.1 0.05 0.025 0.01

...

Pairwise convergence rates for theta=0:

1.49 1.18 1.07 1.04 1.02

Pairwise convergence rates for theta=0.5:

-1.42 -0.22 -0.07 -0.03 -0.01

Pairwise convergence rates for theta=1:

0.21 0.12 0.06 0.03 0.01

This time we see that the expected convergence rates for the Crank-Nicolson and Backward Euler methods are not obtained, while \( r=1 \) for the Forward Euler method. The reason for correct rate in the latter case is that \( \theta=0 \) and the wrong theta*dt term in the denominator vanishes anyway.

The error

u[n+1] = ((1-theta)*a*dt)/(1 + theta*dt*a)*u[n]

manifests itself through wrong rates \( r\approx 0 \) for all three methods. About the same results arise from an erroneous initial condition, u[0] = 1, or wrong loop limits, range(1,N). It seems that in this simple problem, most bugs we can think of are detected by the convergence rate test, provided the values of the input data do not hide the bug.

A verify_convergence_rate function could compute the dictionary of list via main and check if the final rate estimates (\( r_{m-2} \)) are sufficiently close to the expected ones. A tolerance of 0.1 seems appropriate, given the uncertainty in estimating \( r \):

def verify_convergence_rate():

r = main()

tol = 0.1

expected_rates = {0: 1, 1: 1, 0.5: 2}

for theta in r:

r_final = r[theta][-1]

diff = abs(expected_rates[theta] - r_final)

if diff > tol:

return False

return True # all tests passed

We remark that r[theta] is a list and the last element in any list can be extracted by the index -1.

The memory storage requirements of our implementations so far consists mainly of the u and t arrays, both of length \( N+1 \), plus some other temporary arrays that Python needs for intermediate results if we do array arithmetics in our program (e.g., I*exp(-a*t) needs to store a*t before - can be applied to it and then exp). The extremely modest storage requirements of simple ODE problems put no restrictions on the formulations of the algorithm and implementation. Nevertheless, when the methods for ODEs used here are applied to three-dimensional partial differential equation (PDE) problems, memory storage requirements suddenly become an issue.

The PDE counterpart to our model problem \( u'=-a \) is a diffusion equation \( u_t = a\nabla^2 u \) posed on a space-time domain. The discrete representation of this domain may in 3D be a spatial mesh of \( M^3 \) points and a time mesh of \( N \) points. A typical desired value for \( M \) is 100 in many applications, or even \( 1000 \). Storing all the computed \( u \) values, like we have done in the programs so far, demands storage of some arrays of size \( M^3N \), giving a factor of \( M^3 \) larger storage demands compared to our ODE programs. Each real number in the array for \( u \) requires 8 bytes of storage, resulting in a demand for 8 Gb of memory for only one array. In such cases one needs good ideas on how to lower the storage requirements. Fortunately, we can usually get rid of the \( M^3 \) factor. Below we explain how this is done, and the technique is almost always applied in implementations of PDE problems.

Let us critically evaluate how much we really need to store in the computer's memory in our implementation of the \( \theta \) method. To compute a new \( u^{n+1} \), all we need is \( u^n \). This implies that the previous \( u^{n-1},u^{n-2},\dots,u^0 \) values do not need to be stored in an array, although this is convenient for plotting and data analysis in the program. Instead of the u array we can work with two variables for real numbers, u and u_1, representing \( u^{n+1} \) and \( u^n \) in the algorithm, respectively. At each time level, we update u from u_1 and then set u_1 = u so that the computed \( u^{n+1} \) value becomes the "previous" value \( u^n \) at the next time level. The downside is that we cannot plot the solution after the simulation is done since only the last two numbers are available. The remedy is to store computed values in a file and use the file for visualizing the solution later.

We have implemented this memory saving idea in the file dc_memsave.py, which is a merge of the dc_plot_mpl.py and dc_argparse.py programs, using module prefixes np for numpy and plt for matplotlib.pyplot.

The following function implements the ideas above regarding minimizing memory usage and storing the solution on file:

def solver_memsave(I, a, T, dt, theta, filename='sol.dat'):

"""

Solve u'=-a*u, u(0)=I, for t in (0,T] with steps of dt.

Minimum use of memory. The solution is store on file

(with name filename) for later plotting.

"""

dt = float(dt) # avoid integer division

N = int(round(T/dt)) # no of intervals

outfile = open(filename, 'w')

# u: time level n+1, u_1: time level n

t = 0

u_1 = I

outfile.write('%.16E %.16E\n' % (t, u_1))

for n in range(1, N+1):

u = (1 - (1-theta)*a*dt)/(1 + theta*dt*a)*u_1

u_1 = u

t += dt

outfile.write('%.16E %.16E\n' % (t, u))

outfile.close()

return u, t

This code snippet serves as a quick introduction to file writing in Python. Reading the data in the file into arrays t and u are done by the function

def read_file(filename='sol.dat'):

infile = open(filename, 'r')

u = []; t = []

for line in infile:

words = line.split()

if len(words) != 2:

print 'Found more than two numbers on a line!', words

sys.exit(1) # abort

t.append(float(words[0]))

u.append(float(words[1]))

return np.array(t), np.array(u)

This type of file with numbers in rows and columns is very common, and numpy has a function loadtxt which loads such tabular data into a two-dimensional array, say data. The number in row i and column j is then data[i,j]. The whole column number j can be extracted by data[:,j]. A version of read_file using np.loadtxt reads

def read_file_numpy(filename='sol.dat'):

data = np.loadtxt(filename)

t = data[:,0]

u = data[:,1]

return t, u

The present counterpart to the explore function from dc_plot_mpl.py must run solver_memsave and then load data from file before we can compute the error measure and make the plot:

def explore(I, a, T, dt, theta=0.5, makeplot=True):

filename = 'u.dat'

u, t = solver_memsave(I, a, T, dt, theta, filename)

t, u = read_file(filename)

u_e = exact_solution(t, I, a)

e = u_e - u

E = np.sqrt(dt*np.sum(e**2))

if makeplot:

plt.figure()

...

The dc_memsave.py file also includes command-line options --I, --a, --T, --dt, --theta, and --makeplot for controlling input parameters and making a single run. For example,

Terminal> python dc_memsave.py --T 10 --theta 1 --dt 2

I=1.0, a=1.0, T=10.0, makeplot=True, theta=1.0, dt=2.0

theta=1.0 dt=2 Error=3.136E-01

Efficient use of differential equation models requires software that is easy to test and flexible for setting up extensive numerical experiments. This section introduces three important concepts and their applications to the exponential decay model:

The previous sections has outlined numerous different programs, all of them having their own copy of the solver function. Such copies of the same piece of code is against the important Don't Repeat Yourself (DRY) principle in programming. If we want to change the solver function there should be one and only one place where the change needs to be performed.

To clean up the repetitive code snippets scattered among the dc_*.py files, we start by collecting the various functions we want to keep for the future in one file, now called dc_mod.py. The following functions are copied to this file:

from numpy import *

from matplotlib.pyplot import *

import sys

def solver(I, a, T, dt, theta):

...

def verify_three_steps():

...

def verify_exact_discrete_solution():

...

def exact_solution(t, I, a):

...

def explore(I, a, T, dt, theta=0.5, makeplot=True):

...

def define_command_line_options():

...

def read_command_line(use_argparse=True):

if use_argparse:

parser = define_command_line_options()

args = parser.parse_args()

print 'I={}, a={}, makeplot={}, dt_values={}'.format(

args.I, args.a, args.makeplot, args.dt_values)

return args.I, args.a, args.makeplot, args.dt_values

else:

if len(sys.argv) < 6:

print 'Usage: %s I a on/off dt1 dt2 dt3 ...' % \

sys.argv[0]; sys.exit(1)

I = float(sys.argv[1])

a = float(sys.argv[2])

T = float(sys.argv[3])

makeplot = sys.argv[4] in ('on', 'True')

dt_values = [float(arg) for arg in sys.argv[5:]]

return I, a, makeplot, dt_values

def main():

...

This dc_mod.py file is already a module such that we can import desired in functions in other programs. For example,

from decay_theta import solver

u, t = solver(I=1.0, a=3.0, T=3, dt=0.01, theta=0.5)

However, it should also be possible to both use dc_mod.py as a module and execute the file as a program that runs main(). This is accomplished by ending the file with a test block:

if __name__ == '__main__':

main()

When dc_mod.py is used as a module, __name__ equals the module name decay_theta, while __name__ equals '__main__' when the file is run as a program. Optionally, we could run the verification tests if the word verify is present on the command line and verify_convergence_rate could be tested if verify_rates is found on the command line. The verify_rates argument must be removed before we read parameter values from the command line, other wise read_command_line (called by main) will not work properly.

if __name__ == '__main__':

if 'verify' in sys.argv:

if verify_three_steps() and verify_discrete_solution():

pass # ok

else:

print 'Bug in the implementation!'

elif 'verify_rates' in sys.argv:

sys.argv.remove('verify_rates')

if not '--dt' in sys.argv:

print 'Must assign several dt values'

sys.exit(1) # abort

if verify_convergence_rate():

pass

else:

print 'Bug in the implementation!'

else:

# Perform simulations

main()

Import statements of the form from module import * imports functions and variables in module.py into the current file. For example, when doing

from numpy import *

from matplotlib.pyplot import *

we get mathematical functions like sin and exp as well as MATLAB-style functions like linspace and plot, which can be called by these well-known names. However, it sometimes becomes confusing to know where a particular function comes from. Is it from numpy? Or matplotlib.pyplot? Or is it our own function?

An alternative import is

import numpy

import matplotlib.pyplot

and such imports require functions to be prefixed by the module name, e.g.,

t = numpy.linspace(0, T, N+1)

u_e = I*numpy.exp(-a*t)

matplotlib.pyplot.plot(t, u_e)

This is normally regarded as a better habit because it is explicitly stated from which module a function comes from.

The modules numpy and matplotlib.pyplot are so frequently used, and their full names quite tedious to write, so two standard abbreviations have evolved in the Python scientific computing community:

import numpy as np

import matplotlib.pyplot as plt

t = np.linspace(0, T, N+1)

u_e = I*np.exp(-a*t)

plt.plot(t, u_e)

A version of the decay_theta module where we use the np and plt prefixes is found in the file decay_theta_v2.py.

The downside of prefixing functions by the module name is that mathematical expressions like \( e^{-at}\sin(2\pi t) \) gets cluttered with module names,

numpy.exp(-a*t)*numpy.sin(2(numpy.pi*t)

# or

np.exp(-a*t)*np.sin(2*np.pi*t)

Such an expression looks like exp(-a*t)*sin(2*pi*t) in most other programming languages. Similarly, np.linspace and plt.plot look less familiar to people who are used to MATLAB and who have not adopted Python's prefix style. Whether to do from module import * or import module depends on personal taste and the problem at hand. In these writings we use from module import in shorter programs where similarity with MATLAB could be an advantage, and where a one-to-one correspondence between mathematical formulas and Python expressions is important. The style import module is preferred inside Python modules (see Exercise 9: Make a module for a demonstration).

We have emphasized how important it is to be able to run tests in the program at any time. This was solved by calling various verify* functions in the previous examples. However, there exists well-established procedures and corresponding tools for automating checking of tests. We shall briefly demonstrate two important techniques: doctest and unit testing. The corresponding files are the modules dc_mod_doctest.py and dc_mod_unittest.py.

Doc strings (the first string after the function header) are used to document the purpose of functions and their arguments. Very often it is instructive to include an example on how to use the function. Interactive examples in the Python shell are most illustrative as we can see the output resulting from function calls. For example, we can in the solver function include an example on calling this function and printing the computed u and t arrays:

def solver(I, a, T, dt, theta):

"""

Solve u'=-a*u, u(0)=I, for t in (0,T] with steps of dt.

>>> u, t = solver(I=0.8, a=1.2, T=4, dt=0.5, theta=0.5)

>>> for t_n, u_n in zip(t, u):

... print 't=%.1f, u=%.14f' % (t_n, u_n)

t=0.0, u=0.80000000000000

t=0.5, u=0.43076923076923

t=1.0, u=0.23195266272189

t=1.5, u=0.12489758761948

t=2.0, u=0.06725254717972

t=2.5, u=0.03621291001985

t=3.0, u=0.01949925924146

t=3.5, u=0.01049960113002

t=4.0, u=0.00565363137770

"""

...

When such interactive demonstrations are inserted in doc strings, Python's doctest module can be used to automate running all commands in interactive sessions and compare new output with the output appearing in the doc string. All we have to do in the current example is to write

Terminal> python -m doctest dc_mod_doctest.py

This command imports the doctest module, which runs all tests. No additional command-line argument is allowed when running doctest. If any test fails, the problem is reported, e.g.,

Terminal> python -m doctest dc_mod_doctest.py

********************************************************

File "dc_mod_doctest.py", line 12, in dc_mod_doctest....

Failed example:

for t_n, u_n in zip(t, u):

print 't=%.1f, u=%.14f' % (t_n, u_n)

Expected:

t=0.0, u=0.80000000000000

t=0.5, u=0.43076923076923

t=1.0, u=0.23195266272189