Basic finite element methods¶

| Author: | Hans Petter Langtangen |

|---|---|

| Date: | Dec 12, 2012 |

Note: QUITE PRELIMINARY VERSION

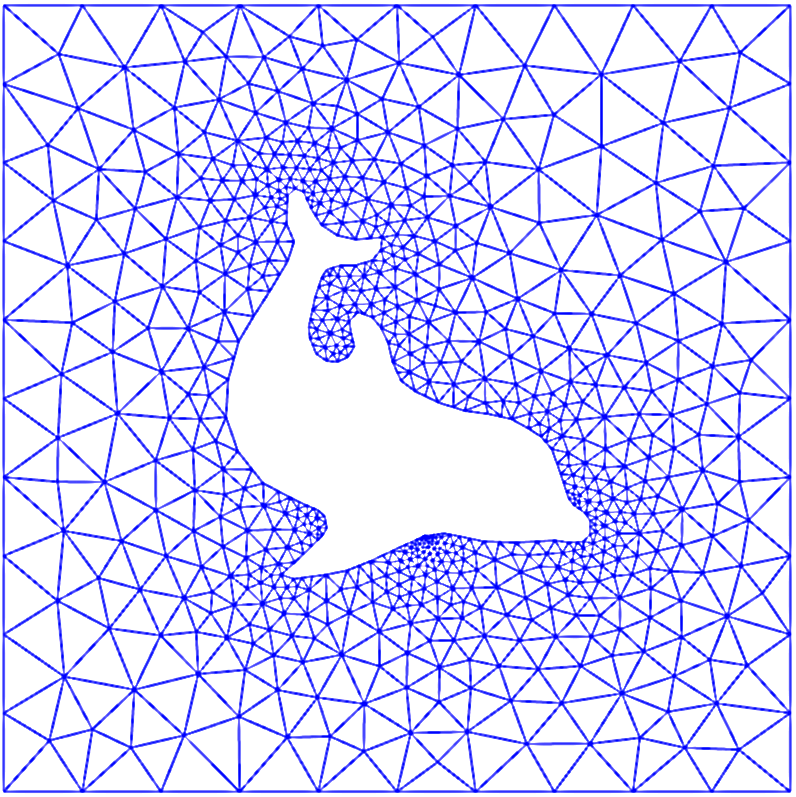

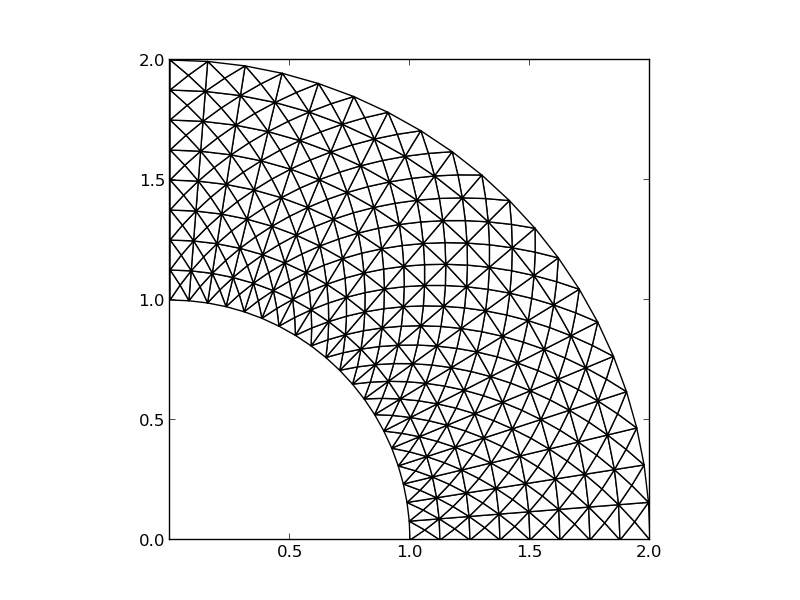

The finite element method is a powerful tool for solving differential equations, especially in complicated domains and where higher-order approximations are desired. Figure Domain for flow around a dolphin shows a two-dimensional domain with a non-trivial geometry. The idea is to divide the domain into triangles (elements) and seek a polynomial approximations to the unknown functions on each triangle. The method glues these piecewise approximations together to find a global solution. Linear and quadratic polynomials over the triangles are particularly popular.

Domain for flow around a dolphin

Many successful numerical methods for differential equations, including the finite element method, aim at approximating the unknown function by a sum

where \({\varphi}_i(x)\) are prescribed functions and \(c_i\), \(i=0,\ldots,N\), are unknown coefficients to be determined. Solution methods for differential equations utilizing (1) must have a principle for constructing \(N+1\) equations to determine \(c_0,\ldots,c_N\). Then there is a machinery regarding the actual constructions of the equations for \(c_0,\ldots,c_N\) in a particular problem. Finally, there is a solve phase for computing the solution \(c_0,\ldots,c_N\) of the \(N+1\) equations.

Especially in the finite element method, the machinery for constructing the discrete equations to be implemented on a computer is quite comprehensive, with many mathematical and implementational details entering the scene at the same time. From an ease-of-learning perspective it can therefore be wise to introduce the computational machinery for a trivial equation: \(u=f\). Solving this equation with \(f\) given and \(u\) on the form (1) means that we seek an approximation \(u\) to \(f\). This approximation problem has the advantage of introducing most of the finite element toolbox, but with postponing demanding topics related to differential equations (e.g., integration by parts, boundary conditions, and coordinate mappings). This is the reason why we shall first become familiar with finite element approximation before addressing finite element methods for differential equations.

First, we refresh some linear algebra concepts about approximating vectors in vector spaces. Second, we extend these concepts to approximating functions in function spaces, using the same principles and the same notation. We present examples on approximating functions by global basis functions with support throughout the entire domain. Third, we introduce the finite element type of local basis functions and explain the computational algorithms for working with such functions. Three types of approximation principles are covered: 1) the least squares method, 2) the Galerkin or \(L_2\) projection method, and 3) interpolation or collocation.

Approximation of vectors¶

We shall start with introducing two fundamental methods for determining the coefficients \(c_i\) in (1) and illustrate the methods on approximation of vectors, because vectors in vector spaces is more intuitive than working with functions in function spaces. The extension from vectors to functions will be trivial as soon as the fundamental ideas are understood.

The first method of approximation is called the least squares method and consists in finding \(c_i\) such that the difference \(u-f\), measured in some norm, is minimized. That is, we aim at finding the best approximation \(u\) to \(f\) (in some norm). The second method is not as intuitive: we find \(u\) such that the error \(u-f\) is orthogonal to the space where we seek \(u\). This is known as projection, or we may also call it a Galerkin method. When approximating vectors and functions, the two methods are equivalent, but this is no longer the case when working with differential equations.

Approximation of planar vectors¶

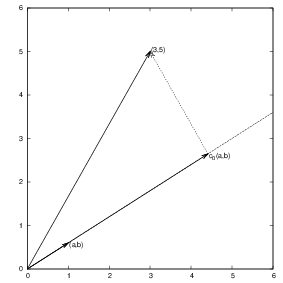

Suppose we have given a vector \(\pmb{f} = (3,5)\) in the \(xy\) plane and that we want to approximate this vector by a vector aligned in the direction of the vector \((a,b)\). Figure Approximation of a two-dimensional vector by a one-dimensional vector depicts the situation.

Approximation of a two-dimensional vector by a one-dimensional vector

We introduce the vector space \(V\) spanned by the vector \(\pmb{\varphi}_0=(a,b)\):

We say that \(\pmb{\varphi}_0\) is a basis vector in the space \(V\). Our aim is to find the vector \(\pmb{u} = c_0\pmb{\varphi}_0\in V\) which best approximates the given vector \(\pmb{f} = (3,5)\). A reasonable criterion for a best approximation could be to minimize the length of the difference between the approximate \(\pmb{u}\) and the given \(\pmb{f}\). The difference, or error, \(\pmb{e} = \pmb{f} -\pmb{u}\) has its length given by the norm

where \((\pmb{e},\pmb{e})\) is the inner product of \(\pmb{e}\) and itself. The inner product, also called scalar product or dot product, of two vectors \(\pmb{u}=(u_0,u_1)\) and \(\pmb{v} =(v_0,v_1)\) is defined as

Remark 1. We should point out that we use the notation \((\cdot,\cdot)\) for two different things: \((a,b)\) for scalar quantities \(a\) and \(b\) means the vector starting in the origin and ending in the point \((a,b)\), while \((\pmb{u},\pmb{v})\) with vectors \(\pmb{u}\) and \(\pmb{v}\) means the inner product of these vectors. Since vectors are here written in boldface font there should be no confusion. Note that the norm associated with this inner product is the usual Eucledian length of a vector.

Remark 2. It might be wise to refresh some basic linear algebra by consulting a textbook. Exercise 1: Linear algebra refresher I and Exercise 2: Linear algebra refresher II suggest specific tasks to regain familiarity with fundamental operations on inner product vector spaces.

The least squares method (1)¶

We now want to find \(c_0\) such that it minimizes \(||\pmb{e}||\). The algebra is simplified if we minimize the square of the norm, \(||\pmb{e}||^2 = (\pmb{e}, \pmb{e})\). Define

We can rewrite the expressions of the right-hand side to a more convenient form for further work:

The rewrite results from using the following fundamental rules for inner product spaces:

Minimizing \(E(c_0)\) implies finding \(c_0\) such that

Differentiating (2) with respect to \(c_0\) gives

Setting the above expression equal to zero and solving for \(c_0\) gives

which in the present case with \(\pmb{\varphi}_0=(a,b)\) results in

For later, it is worth mentioning that setting the key equation (3) to zero can be rewritten as

or

The Galerkin or projection method (1)¶

Minimizing \(||\pmb{e}||^2\) implies that \(\pmb{e}\) is orthogonal to any vector \(\pmb{v}\) in the space \(V\). This result is visually quite clear from Figure Approximation of a two-dimensional vector by a one-dimensional vector (think of other vectors along the line \((a,b)\): all of them will lead to a larger distance between the approximation and \(\pmb{f}\)). To see this result mathematically, we express any \(\pmb{v}\in V\) as \(\pmb{v}=s\pmb{\varphi}_0\) for any scalar parameter \(s\), recall that two vectors are orthogonal when their inner product vanishes, and calculate the inner product

Therefore, instead of minimizing the square of the norm, we could demand that \(\pmb{e}\) is orthogonal to any vector in \(V\). This approach is known as projection, because we it is the same as projecting the vector onto the subspace. We may also use the term Galerkin’s method. Mathematically the approach is stated by the equation

Since an arbitrary \(\pmb{v}\in V\) can be expressed as \(s\pmb{\varphi}_0\), \(s\in\mathbb{R}\), (6) implies

which means that the error must be orthogonal to the basis vector in the space \(V\):

The latter equation gives (4) for \(c_0\). Furthermore, the latter equation also arose from least squares computations in (5).

Approximation of general vectors¶

Let us generalize the vector approximation from the previous section to vectors in spaces with arbitrary dimension. Given some vector \(\pmb{f}\), we want to find the best approximation to this vector in the space

We assume that the basis vectors \(\pmb{\varphi}_0,\ldots,\pmb{\varphi}_N\) are linearly independent so that none of them are redundant and the space has dimension \(N+1\). Any vector \(\pmb{u}\in V\) can be written as a linear combination of the basis vectors,

where \(c_j\in\mathbb{R}\) are scalar coefficients to be determined.

The least squares method (2)¶

Now we want to find \(c_0,\ldots,c_N\) such that \(\pmb{u}\) is the best approximation to \(\pmb{f}\) in the sense that the distance, or error, \(\pmb{e} = \pmb{f} - \pmb{u}\) is minimized. Again, we define the squared distance as a function of the free parameters \(c_0,\ldots,c_N\),

Minimizing this \(E\) with respect to the independent variables \(c_0,\ldots,c_N\) is obtained by setting

The second term in (7) is differentiated as follows:

since the expression to be differentiated is a sum and only one term, \(c_i(\pmb{f},\pmb{\varphi}_i)\), contains \(c_i\) and this term is linear in \(c_i\). To understand this differentiation in detail, write out the sum specifically for, e.g, \(N=3\) and \(i=1\).

The last term in (7) is more tedious to differentiate. We start with

Then

The last term can be included in the other two sums, resulting in

It then follows that setting

leads to a linear system for \(c_0,\ldots,c_N\):

where

(Note that we can change the order of the two vectors in the inner product as desired.)

The Galerkin or projection method (2)¶

In analogy with the “one-dimensional” example in the section Approximation of planar vectors, it holds also here in the general case that minimizing the distance (error) \(\pmb{e}\) is equivalent to demanding that \(\pmb{e}\) is orthogonal to all \(\pmb{v}\in V\):

Since any \(\pmb{v}\in V\) can be written as \(\pmb{v} =\sum_{i=0}^N c_i\pmb{\varphi}_i\), the statement (9) is equivalent to saying that

for any choice of coefficients \(c_0,\ldots,c_N\in\mathbb{R}\). The latter equation can be rewritten as

If this is to hold for arbitrary values of \(c_0,\ldots,c_N\), we must require that each term in the sum vanishes,

These \(N+1\) equations result in the same linear system as (8):

and hence

So, instead of differentiating the \(E(c_0,\ldots,c_N)\) function, we could simply use (9) as the principle for determining \(c_0,\ldots,c_N\), resulting in the \(N+1\) equations (10).

The names least squares method or least squares approximation are natural since the calculations consists of minimizing \(||\pmb{e}||^2\), and \(||\pmb{e}||^2\) is a sum of squares of differences between the components in \(\pmb{f}\) and \(\pmb{u}\). We find \(\pmb{u}\) such that this sum of squares is minimized.

The principle (9), or the equivalent form (10), is known as projection. Almost the same mathematical idea was used by the Russian mathematician Boris Galerkin to solve differential equations, resulting in what is widely known as Galerkin’s method.

Approximation of functions¶

Let \(V\) be a function space spanned by a set of basis functions \({\varphi}_0,\ldots,{\varphi}_N\),

such that any function \(u\in V\) can be written as a linear combination of the basis functions:

For now, in this introduction, we shall look at functions of a single variable \(x\): \(u=u(x)\), \({\varphi}_i={\varphi}_i(x)\), \(i=0,\ldots,N\). Later, we will extend the scope to functions of two- or three-dimensional physical spaces. The approximation (11) is typically used to discretize a problem in space. Other methods, most notably finite differences, are common for time discretization (although the form (11) can be used in time too).

The least squares method (3)¶

Given a function \(f(x)\), how can we determine its best approximation \(u(x)\in V\)? A natural starting point is to apply the same reasoning as we did for vectors in the section Approximation of general vectors. That is, we minimize the distance between \(u\) and \(f\). However, this requires a norm for measuring distances, and a norm is most conveniently defined through an inner product. Viewing a function as a vector of infinitely many point values, one for each value of \(x\), the inner product could intuitively be defined as the usual summation of pairwise components, with summation replaced by integration:

To fix the integration domain, we let \(f(x)\) and \({\varphi}_i(x)\) be defined for a domain \(\Omega\subset\mathbb{R}\). The inner product of two functions \(f(x)\) and \(g(x)\) is then

The distance between \(f\) and any function \(u\in V\) is simply \(f-u\), and the squared norm of this distance is

Note the analogy with (7): the given function \(f\) plays the role of the given vector \(\pmb{f}\), and the basis function \({\varphi}_i\) plays the role of the basis vector \(\pmb{\varphi}_i\). We get can rewrite (13), through similar steps as used for the result (7), leading to

Minimizing this function of \(N+1\) scalar variables \(c_0,\ldots,c_N\) requires differentiation with respect to \(c_i\), for \(i=0,\ldots,N\). The resulting equations are very similar to those we had in the vector case, and we hence end up with a linear system of the form (8), with

The Galerkin or projection method (3)¶

As in the section Approximation of general vectors, the minimization of \((e,e)\) is equivalent to

This is known as a projection of a function \(f\) onto the subspace \(V\). We may also call it a Galerkin method for approximating functions. Using the same reasoning as in (9)-(10), it follows that (14) is equivalent to

Inserting \(e=f-u\) in this equation and ordering terms, as in the multi-dimensional vector case, we end up with a linear system with a coefficient matrix (?) and right-hand side vector (?).

Whether we work with vectors in the plane, general vectors, or functions in function spaces, the least squares principle and the Galerkin or projection method are equivalent.

Example: linear approximation¶

Let us apply the theory in the previous section to a simple problem: given a parabola \(f(x)=10(x-1)^2-1\) for \(x\in\Omega=[1,2]\), find the best approximation \(u(x)\) in the space of all linear functions:

That is, \({\varphi}_0(x)=1\), \({\varphi}_1(x)=x\), and \(N=1\). We seek

where \(c_0\) and \(c_1\) are found by solving a \(2\times 2\) the linear system. The coefficient matrix has elements

The corresponding right-hand side is

Solving the linear system results in

and consequently

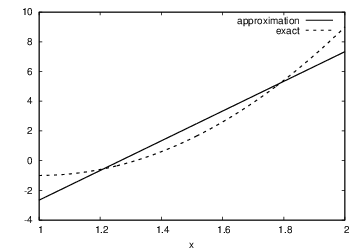

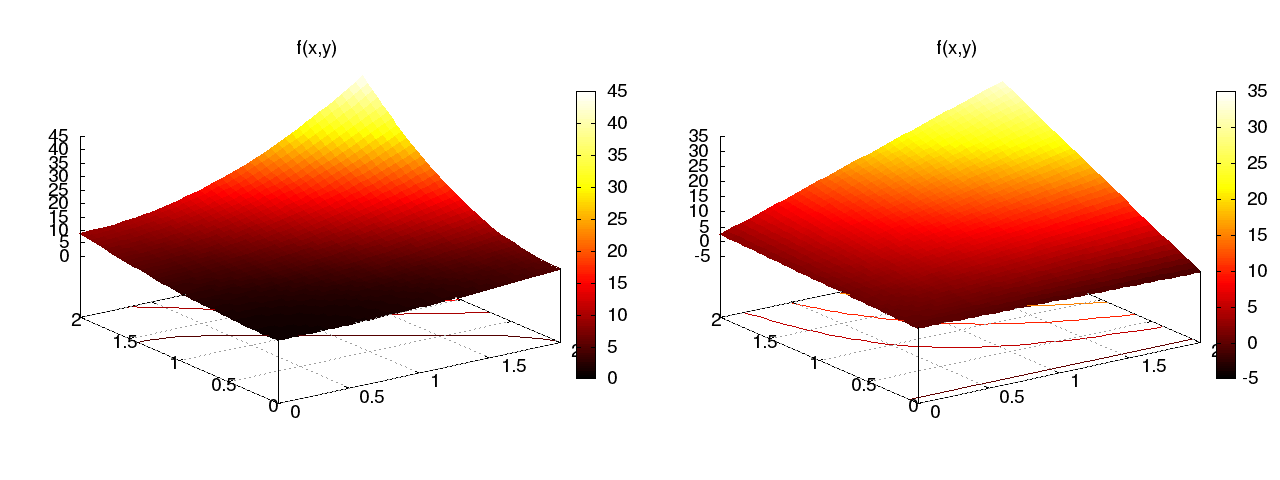

Figure Best approximation of a parabola by a straight line displays the parabola and its best approximation in the space of all linear functions.

Best approximation of a parabola by a straight line

Implementation of the least squares method¶

The linear system can be computed either symbolically or numerically (a numerical integration rule is needed in the latter case). Here is a function for symbolic computation of the linear system, where \(f(x)\) is given as a sympy expression f (involving the symbol x), phi is a list of \({\varphi}_0,\ldots,{\varphi}_N\), and Omega is a 2-tuple/list holding the domain \(\Omega\):

import sympy as sm

def least_squares(f, phi, Omega):

N = len(phi) - 1

A = sm.zeros((N+1, N+1))

b = sm.zeros((N+1, 1))

x = sm.Symbol('x')

for i in range(N+1):

for j in range(i, N+1):

A[i,j] = sm.integrate(phi[i]*phi[j],

(x, Omega[0], Omega[1]))

A[j,i] = A[i,j]

b[i,0] = sm.integrate(phi[i]*f, (x, Omega[0], Omega[1]))

c = A.LUsolve(b)

u = 0

for i in range(len(phi)):

u += c[i,0]*phi[i]

return u

Observe that we exploit the symmetry of the coefficient matrix: only the upper triangular part is computed. Symbolic integration in sympy is often time consuming, and (roughly) halving the work has noticeable effect on the waiting time for the function to finish execution.

Comparing the given \(f(x)\) and the approximate \(u(x)\) visually is done by the following function, which with the aid of sympy‘s lambdify tool converts a sympy functional expression to a Python function for numerical computations:

def comparison_plot(f, u, Omega, filename='tmp.pdf'):

x = sm.Symbol('x')

f = sm.lambdify([x], f, modules="numpy")

u = sm.lambdify([x], u, modules="numpy")

resolution = 401 # no of points in plot

xcoor = linspace(Omega[0], Omega[1], resolution)

exact = f(xcoor)

approx = u(xcoor)

plot(xcoor, approx)

hold('on')

plot(xcoor, exact)

legend(['approximation', 'exact'])

savefig(filename)

The modules='numpy' argument to lambdify is important if there are mathematical functions, such as sin or exp in the symbolic expressions in f or u, and these mathematical functions are to be used with vector arguments, like xcoor above.

Both the least_squares and comparison_plot are found and coded in the file approx1D.py. The forthcoming examples on their use appear in ex_approx1D.py.

Perfect approximation¶

Let us use the code above to recompute the problem from the section Example: linear approximation where we want to approximate a parabola. What happens if we add an element \(x^2\) to the basis and test what the best approximation is if \(V\) is the space of all parabolic functions? The answer is quickly found by running

>>> from approx1D import *

>>> x = sm.Symbol('x')

>>> f = 10*(x-1)**2-1

>>> u = least_squares(f=f, phi=[1, x, x**2], Omega=[1, 2])

>>> print u

10*x**2 - 20*x + 9

>>> print sm.expand(f)

10*x**2 - 20*x + 9

Now, what if we use \(\phi_i(x)=x^i\) for \(i=0,\ldots,N=40\)? The output from least_squares gives \(c_i=0\) for \(i>2\). In fact, we have a general result that if \(f\in V\), the least squares and Galerkin/projection methods compute the exact solution \(u=f\).

The proof is straightforward: if \(f\in V\), \(f\) can be expanded in terms of the basis functions, \(f=\sum_{j=0}^Nd_j{\varphi}_j\), for some coefficients \(d_0,\ldots,d_N\), and the right-hand side then has entries

The linear system \(\sum_jA_{i,j}c_j = b_i\), \(i=0,\ldots,N\), is then

which implies that \(c_i=d_i\) for \(i=0,\ldots,N\).

Ill-conditioning¶

The computational example in the section Perfect approximation applies the least_squares function which invokes symbolic methods to calculate and solve the linear system. The correct solution \(c_0=9, c_1=-20, c_2=10, c_i=0\) for \(i\geq 3\) is perfectly recovered.

Suppose we convert the matrix and right-hand side to floating-point arrays and then solve the system using finite-precision arithmetics, which is what one will (almost) always do in real life. This time we get astonishing results! Up to about \(N=7\) we get a solution that is reasonably close to the exact one. Increasing \(N\) shows that seriously wrong coefficients are computed. Below is a table showing the solution of the linear system arising from approximating a parabola by functions on the form \(u(x)=\sum_{j=0}^Nc_jx^j\), \(N=10\). Analytically, we know that \(c_j=0\) for \(j>2\), but ill-conditioning may produce \(c_j\neq 0\) for \(j>2\).

| exact | sympy | numpy32 | numpy64 |

|---|---|---|---|

| 9 | 9.62 | 5.57 | 8.98 |

| -20 | -23.39 | -7.65 | -19.93 |

| 10 | 17.74 | -4.50 | 9.96 |

| 0 | -9.19 | 4.13 | -0.26 |

| 0 | 5.25 | 2.99 | 0.72 |

| 0 | 0.18 | -1.21 | -0.93 |

| 0 | -2.48 | -0.41 | 0.73 |

| 0 | 1.81 | -0.013 | -0.36 |

| 0 | -0.66 | 0.08 | 0.11 |

| 0 | 0.12 | 0.04 | -0.02 |

| 0 | -0.001 | -0.02 | 0.002 |

The exact value of \(c_j\), \(j=0,\ldots,10\), appears in the first column while the other columns correspond to results obtained by three different methods:

- Column 2: The matrix and vector are converted to the data structure sympy.mpmath.fp.matrix and the sympy.mpmath.fp.lu_solve function is used to solve the system.

- Column 3: The matrix and vector are converted to numpy arrays with data type numpy.float32 (single precision floating-point number) and solved by the numpy.linalg.solve function.

- Column 4: As column 3, but the data type is numpy.float64 (double precision floating-point number).

We see from the numbers in the table that double precision performs much better than single precision. Nevertheless, when plotting all these solutions the curves cannot be visually distinguished (!). This means that the approximations look perfect, despite the partially wrong values of the coefficients.

Increasing \(N\) to 12 makes the numerical solver in sympy report abort with the message: “matrix is numerically singular”. A matrix has to be non-singular to be invertible, which is a requirement when solving a linear system. Already when the matrix is close to singular, it is ill-conditioned, which here implies that the numerical solution algorithms are sensitive to round-off errors and may produce (very) inaccurate results.

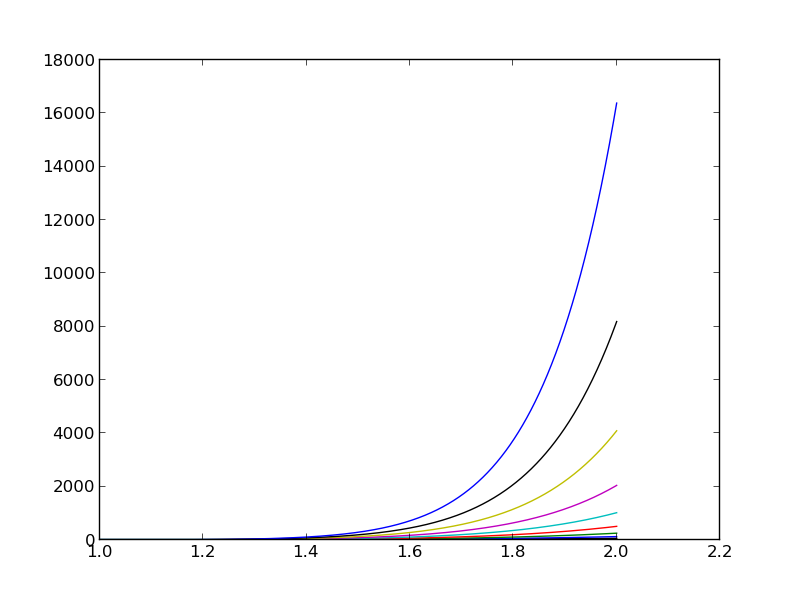

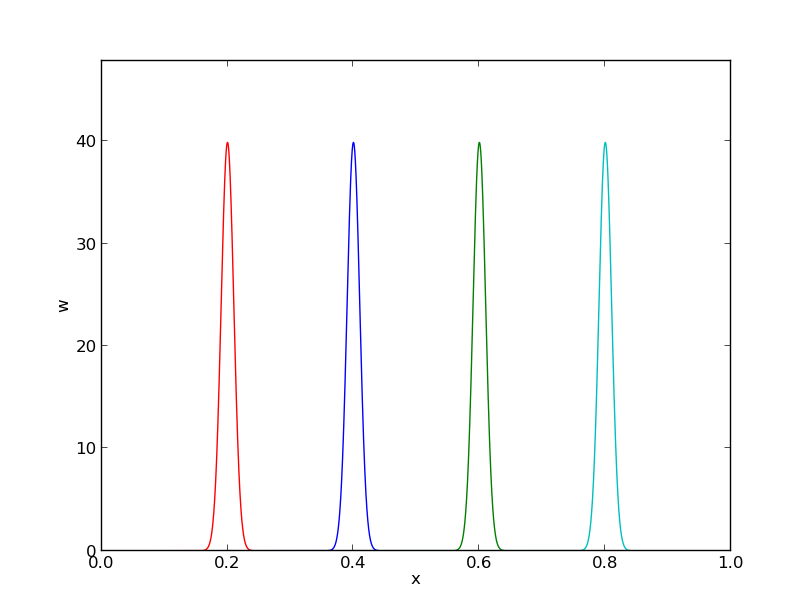

The reason why the coefficient matrix is nearly singular and ill-conditioned is that our basis functions \({\varphi}_i(x)=x^i\) are nearly linearly dependent for large \(i\). That is, \(x^i\) and \(x^{i+1}\) are very close for \(i\) not very small. This phenomenon is illustrated in Figure The 15 first basis functions , . There are 15 lines in this figure, but only half of them are visually distinguishable. Almost linearly dependent basis functions give rise to an ill-conditioned and almost singular matrix. This fact can be illustrated by computing the determinant, which is indeed very close to zero (recall that a zero determinant implies a singular and non-invertible matrix): \(10^{-65}\) for \(N=10\) and \(10^{-92}\) for \(N=12\). Already for \(N=28\) the numerical determinant computation returns a plain zero.

The 15 first basis functions \(x^i\), \(i=0,\ldots,14\)

On the other hand, the double precision numpy solver do run for \(N=100\), resulting in answers that are not significantly worse than those in the table above, and large powers are associated with small coefficients (e.g., \(c_j<10^{-2}\) for \(10\leq j\leq 20\) and \(c<10^{-5}\) for \(j>20\)). Even for \(N=100\) the approximation lies on top of the exact curve in a plot (!).

The conclusion is that visual inspection of the quality of the approximation may not uncover fundamental numerical problems with the computations. However, numerical analysts have studied approximations and ill-conditioning for decades, and it is well known that the basis \(\{1,x,x^2,x^3,\ldots,\}\) is a bad basis. The best basis from a matrix conditioning point of view is to have orthogonal functions such that \((\phi_i,\phi_j)=0\) for \(i\neq j\). There are many known sets of orthogonal polynomials. The functions used in the finite element methods are almost orthogonal, and this property helps to avoid problems with solving matrix systems. Almost orthogonal is helpful, but not enough when it comes to partial differential equations, and ill-conditioning of the coefficient matrix is a theme when solving large-scale finite element systems.

Fourier series¶

A set of sine functions is widely used for approximating functions. Let us take

That is,

An approximation to the \(f(x)\) function from the section Example: linear approximation can then be computed by the least_squares function from the section Implementation of the least squares method:

N = 3

from sympy import sin, pi

x = sm.Symbol('x')

phi = [sin(pi*(i+1)*x) for i in range(N+1)]

f = 10*(x-1)**2 - 1

Omega = [0, 1]

u = least_squares(f, phi, Omega)

comparison_plot(f, u, Omega)

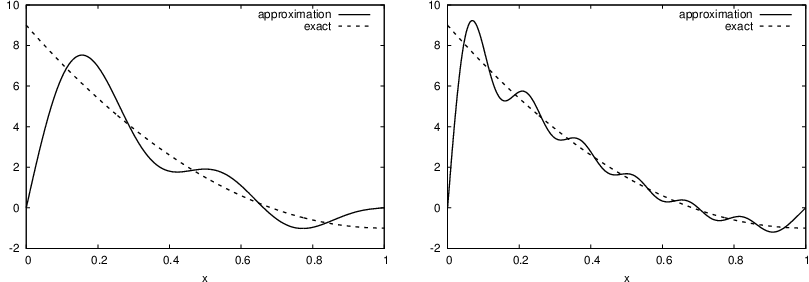

Figure Best approximation of a parabola by a sum of 3 (left) and 11 (right) sine functions (left) shows the oscillatory approximation of \(\sum_{j=0}^{N}c_j\sin ((j+1)\pi x)\) when \(N=3\). Changing \(N\) to 11 improves the approximation considerably, see Figure Best approximation of a parabola by a sum of 3 (left) and 11 (right) sine functions (right).

Best approximation of a parabola by a sum of 3 (left) and 11 (right) sine functions

There is an error \(f(0)-u(0)=9\) at \(x=0\) in Figure Best approximation of a parabola by a sum of 3 (left) and 11 (right) sine functions regardless of how large \(N\) is, because all \({\varphi}_i(0)=0\) and hence \(u(0)=0\). We may help the approximation to be correct at \(x=0\) by seeking

However, this adjustments introduces a new problem at \(x=1\) since we now get an error \(f(1)-u(1)=f(1)-0=-1\) at this point. A more clever adjustment is to replace the \(f(0)\) term by a term that is \(f(0)\) at \(x=0\) and \(f(1)\) at \(x=1\). A simple linear combination \(f(0)(1-x) + xf(1)\) does the job:

This adjustment of \(u\) alters the linear system slightly as we get an extra term \(-(f(0)(1-x) + xf(1),{\varphi}_i)\) on the right-hand side. Figure Best approximation of a parabola by a sum of 3 (left) and 11 (right) sine functions with a boundary term shows the result of ensuring right boundary values: even 3 sines can now adjust the \(f(0)(1-x) + xf(1)\) term such that \(u\) approximates the parabola really well, at least visually.

Best approximation of a parabola by a sum of 3 (left) and 11 (right) sine functions with a boundary term

Orthogonal basis functions¶

The choice of sine functions \({\varphi}_i(x)=\sin ((i+1)\pi x)\) has a great computational advantage: on \(\Omega=[0,1]\) these basis functions are orthogonal, implying that \(A_{i,j}=0\) if \(i\neq j\). This result is realized by trying

integrate(sin(j*pi*x)*sin(k*pi*x), x, 0, 1)

in WolframAlpha (avoid i in the integrand as this symbol means the imaginary unit \(\sqrt{-1}\)). Also by asking WolframAlpha about \(\int_0^1\sin^2 (j\pi x) dx\), we find it to equal 1/2. With a diagonal matrix we can easily solve for the coefficients by hand:

which is nothing but the classical formula for the coefficients of the Fourier sine series of \(f(x)\) on \([0,1]\). In fact, when \(V\) contains the basic functions used in a Fourier series expansion, the approximation method derived in the section Approximation of functions results in the classical Fourier series for \(f(x)\) (see Exercise 6: Fourier series as a least squares approximation for details).

For orthogonal basis functions we can make the least_squares function (much) more efficient since we know that the matrix is diagonal and only the diagonal elements need to be computed:

def least_squares_orth(f, phi, Omega):

N = len(phi) - 1

A = [0]*(N+1)

b = [0]*(N+1)

x = sm.Symbol('x')

for i in range(N+1):

A[i] = sm.integrate(phi[i]**2, (x, Omega[0], Omega[1]))

b[i] = sm.integrate(phi[i]*f, (x, Omega[0], Omega[1]))

c = [b[i]/A[i] for i in range(len(b))]

u = 0

for i in range(len(phi)):

u += c[i]*phi[i]

return u

This function is found in the file approx1D.py.

The collocation (interpolation) method¶

The principle of minimizing the distance between \(u\) and \(f\) is an intuitive way of computing a best approximation \(u\in V\) to \(f\). However, there are other attractive approaches as well. One is to demand that \(u(x_{i}) = f(x_{i})\) at some selected points \(x_{i}\), \(i=0,\ldots,N\):

This criterion also gives a linear system with \(N+1\) unknown coefficients \(c_0,\ldots,c_N\):

with

This time the coefficient matrix is not symmetric because \({\varphi}_j(x_{i})\neq {\varphi}_i(x_{j})\) in general. The method is often referred to as a collocation method and the \(x_{i}\) points are known as collocation points. Others view the approach as an interpolation method since some point values of \(f\) are given (\(f(x_{i})\)) and we fit a continuous function \(u\) that goes through the \(f(x_{i})\) points. In that case the \(x_{i}\) points are called interpolation points.

Given \(f\) as a sympy symbolic expression f, \({\varphi}_0,\ldots,{\varphi}_N\) as a list phi, and a set of points \(x_0,\ldots,x_N\) as a list or array points, the following Python function sets up and solves the matrix system for the coefficients \(c_0,\ldots,c_N\):

def interpolation(f, phi, points):

N = len(phi) - 1

A = sm.zeros((N+1, N+1))

b = sm.zeros((N+1, 1))

x = sm.Symbol('x')

# Turn phi and f into Python functions

phi = [sm.lambdify([x], phi[i]) for i in range(N+1)]

f = sm.lambdify([x], f)

for i in range(N+1):

for j in range(N+1):

A[i,j] = phi[j](points[i])

b[i,0] = f(points[i])

c = A.LUsolve(b)

u = 0

for i in range(len(phi)):

u += c[i,0]*phi[i](x)

return u

Note that it is convenient to turn the expressions f and phi into Python functions which can be called with elements of points as arguments when building the matrix and the right-hand side. The interpolation function is a part of the approx1D module.

A nice feature of the interpolation or collocation method is that it avoids computing integrals. However, one has to decide on the location of the \(x_{i}\) points. A simple, yet common choice, is to distribute them uniformly throughout \(\Omega\).

Example (1)¶

Let us illustrate the interpolation or collocation method by approximating our parabola \(f(x)=10(x-1)^2-1\) by a linear function on \(\Omega=[1,2]\), using two collocation points \(x_0=1+1/3\) and \(x_1=1+2/3\):

f = 10*(x-1)**2 - 1

phi = [1, x]

Omega = [1, 2]

points = [1 + sm.Rational(1,3), 1 + sm.Rational(2,3)]

u = interpolation(f, phi, points)

comparison_plot(f, u, Omega)

The resulting linear system becomes

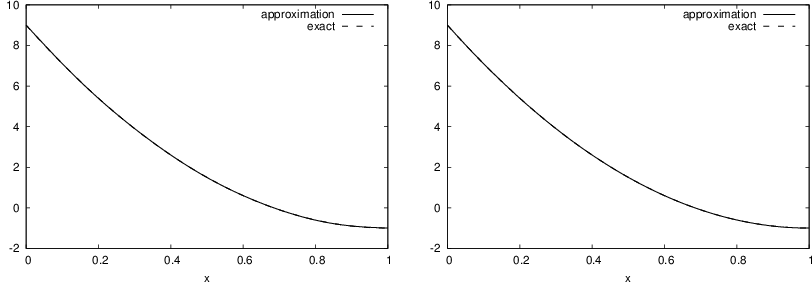

with solution \(c_0=-119/9\) and \(c_1=10\). Figure Approximation of a parabola by linear functions computed by two interpolation points: 4/3 and 5/3 (left) versus 1 and 2 (right) (left) shows the resulting approximation \(u=-119/9 + 10x\). We can easily test other interpolation points, say \(x_0=1\) and \(x_1=2\). This changes the line quite significantly, see Figure Approximation of a parabola by linear functions computed by two interpolation points: 4/3 and 5/3 (left) versus 1 and 2 (right) (right).

Approximation of a parabola by linear functions computed by two interpolation points: 4/3 and 5/3 (left) versus 1 and 2 (right)

Lagrange polynomials¶

In the section Fourier series we explain the advantage with having a diagonal matrix: formulas for the coefficients \(c_0,\ldots,c_N\) can then be derived by hand. For an interpolation/collocation method a diagonal matrix implies that \({\varphi}_j(x_{i}) = 0\) if \(i\neq j\). One set of basis functions \({\varphi}_i(x)\) with this property is the Lagrange interpolating polynomials, or just Lagrange polynomials. (Although the functions are named after Lagrange, they were first discovered by Waring in 1779, rediscovered by Euler in 1783, and published by Lagrange in 1795.) The Lagrange polynomials have the form

for \(i=0,\ldots,N\). We see from (16) that all the \({\varphi}_i\) functions are polynomials of degree \(N\) which have the property

when \(x_s\) is an interpolation/collocation point. This property implies that \(A_{i,j}=0\) for \(i\neq j\) and \(A_{i,j}=1\) when \(i=j\). The solution of the linear system is them simply

and

The following function computes the Lagrange interpolating polynomial \({\varphi}_i(x)\), given the interpolation points \(x_{0},\ldots,x_{N}\) in the list or array points:

def Lagrange_polynomial(x, i, points):

p = 1

for k in range(len(points)):

if k != i:

p *= (x - points[k])/(points[i] - points[k])

return p

The next function computes a complete basis using equidistant points throughout \(\Omega\):

def Lagrange_polynomials_01(x, N):

if isinstance(x, sm.Symbol):

h = sm.Rational(1, N-1)

else:

h = 1.0/(N-1)

points = [i*h for i in range(N)]

phi = [Lagrange_polynomial(x, i, points) for i in range(N)]

return phi, points

When x is an sm.Symbol object, we let the spacing between the interpolation points, h, be a sympy rational number for nice end results in the formulas for \({\varphi}_i\). The other case, when x is a plain Python float, signifies numerical computing, and then we let h be a floating-point number. Observe that the Lagrange_polynomial function works equally well in the symbolic and numerical case (think of x being an sm.Symbol object or a Python float). A little interactive session illustrates the difference between symbolic and numerical computing of the basis functions and points:

>>> import sympy as sm

>>> x = sm.Symbol('x')

>>> phi, points = Lagrange_polynomials_01(x, N=3)

>>> points

[0, 1/2, 1]

>>> phi

[(1 - x)*(1 - 2*x), 2*x*(2 - 2*x), -x*(1 - 2*x)]

>>> x = 0.5 # numerical computing

>>> phi, points = Lagrange_polynomials_01(x, N=3, symbolic=True)

>>> points

[0.0, 0.5, 1.0]

>>> phi

[-0.0, 1.0, 0.0]

The Lagrange polynomials are very much used in finite element methods because of their property (17).

Successful example¶

Trying out the Lagrange polynomial basis for approximating \(f(x)=\sin 2\pi x\) on \(\Omega =[0,1]\) with the least squares and the interpolation techniques can be done by

x = sm.Symbol('x')

f = sm.sin(2*sm.pi*x)

phi, points = Lagrange_polynomials_01(x, N)

Omega=[0, 1]

u = least_squares(f, phi, Omega)

comparison_plot(f, u, Omega)

u = interpolation(f, phi, points)

comparison_plot(f, u, Omega)

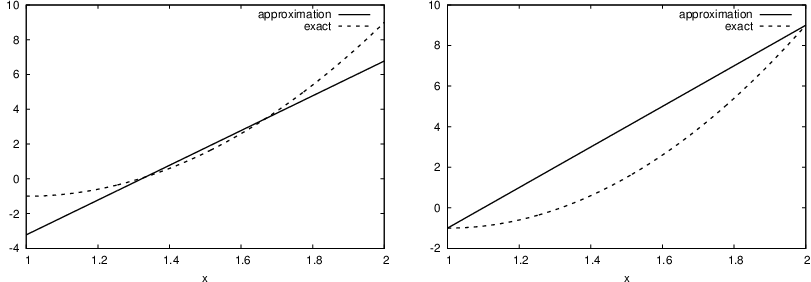

Figure Approximation via least squares (left) and interpolation (right) of a sine function by Lagrange interpolating polynomials of degree 4 shows the results. There is little difference between the least squares and the interpolation technique. Increasing \(N\) gives visually better approximations.

Approximation via least squares (left) and interpolation (right) of a sine function by Lagrange interpolating polynomials of degree 4

Less successful example¶

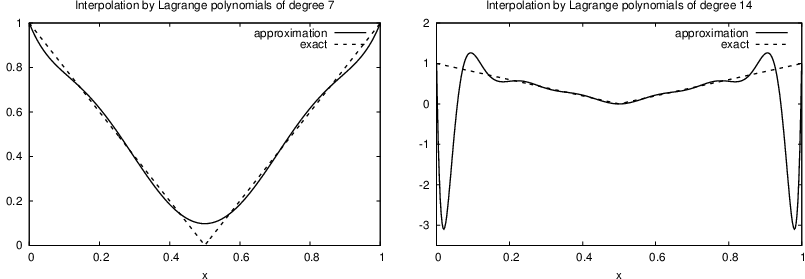

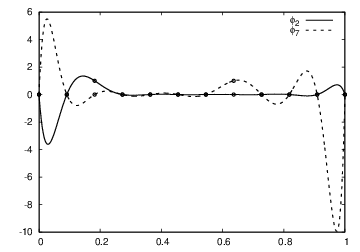

The next example concerns interpolating \(f(x)=|1-2x|\) on \(\Omega =[0,1]\) using Lagrange polynomials. Figure Interpolation of an absolute value function by Lagrange polynomials and uniformly distributed interpolation points: degree 7 (left) and 14 (right) shows a peculiar effect: the approximation starts to oscillate more and more as \(N\) grows. This numerical artifact is not surprising when looking at the individual Lagrange polynomials: Figure Illustration of the oscillatory behavior of two Lagrange polynomials for 12 uniformly spaced points (marked by circles) shows two such polynomials of degree 11, and it is clear that the basis functions oscillate significantly. The reason is simple, since we force the functions to be 1 at one point and 0 at many other points. A polynomial of high degree is then forced to oscillate between these points. The oscillations are particularly severe at the boundary. The phenomenon is named Runge’s phenomenon and you can read

a more detailed explanation on Wikipedia.

Remedy for strong oscillations¶

The oscillations can be reduced by a more clever choice of interpolation points, called the Chebyshev nodes:

on the interval \(\Omega = [a,b]\). Here is a flexible version of the Lagrange_polynomials_01 function above, valid for any interval \(\Omega =[a,b]\) and with the possibility to generate both uniformly distributed points and Chebyshev nodes:

def Lagrange_polynomials(x, N, Omega, point_distribution='uniform'):

if point_distribution == 'uniform':

if isinstance(x, sm.Symbol):

h = sm.Rational(Omega[1] - Omega[0], N)

else:

h = (Omega[1] - Omega[0])/float(N)

points = [Omega[0] + i*h for i in range(N+1)]

elif point_distribution == 'Chebyshev':

points = Chebyshev_nodes(Omega[0], Omega[1], N)

phi = [Lagrange_polynomial(x, i, points) for i in range(N+1)]

return phi, points

def Chebyshev_nodes(a, b, N):

from math import cos, pi

return [0.5*(a+b) + 0.5*(b-a)*cos(float(2*i+1)/(2*(N+1))*pi) \

for i in range(N+1)]

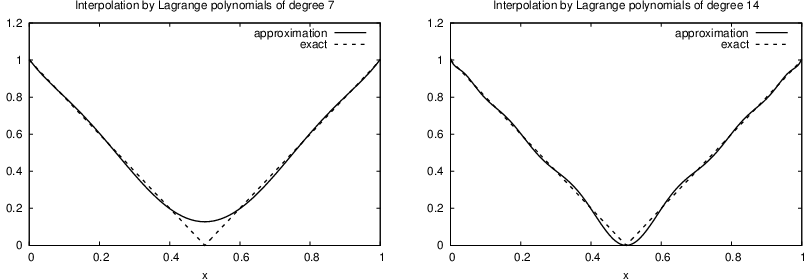

All the functions computing Lagrange polynomials listed above are found in the module file Lagrange.py. Figure Interpolation of an absolute value function by Lagrange polynomials and Chebyshev nodes as interpolation points: degree 7 (left) and 14 (right) shows the improvement of using Chebyshev nodes (compared with Figure Interpolation of an absolute value function by Lagrange polynomials and uniformly distributed interpolation points: degree 7 (left) and 14 (right)).

Another cure for undesired oscillation of higher-degree interpolating polynomials is to use lower-degree Lagrange polynomials on many small patches of the domain, which is the idea pursued in the finite element method. For instance, linear Lagrange polynomials on \([0,1/2]\) and \([1/2,1]\) would yield a perfect approximation to \(f(x)=|1-2x|\) on \(\Omega = [0,1]\) since \(f\) is piecewise linear.

Interpolation of an absolute value function by Lagrange polynomials and uniformly distributed interpolation points: degree 7 (left) and 14 (right)

Illustration of the oscillatory behavior of two Lagrange polynomials for 12 uniformly spaced points (marked by circles)

Interpolation of an absolute value function by Lagrange polynomials and Chebyshev nodes as interpolation points: degree 7 (left) and 14 (right)

Unfortunately, sympy has problems integrating the \(f(x)=|1-2x|\) function times a polynomial. Other choices of \(f(x)\) can also make the symbolic integration fail. Therefore, we should extend the least_squares function such that it falls back on numerical integration if the symbolic integration is unsuccessful. In the latter case, the returned value from sympy‘s integrate function is an object of type Integral. We can test on this type and utilize the mpmath module in sympy to perform numerical integration of high precision. Here is the code:

def least_squares(f, phi, Omega):

N = len(phi) - 1

A = sm.zeros((N+1, N+1))

b = sm.zeros((N+1, 1))

x = sm.Symbol('x')

for i in range(N+1):

for j in range(i, N+1):

integrand = phi[i]*phi[j]

I = sm.integrate(integrand, (x, Omega[0], Omega[1]))

if isinstance(I, sm.Integral):

# Could not integrate symbolically, fallback

# on numerical integration with mpmath.quad

integrand = sm.lambdify([x], integrand)

I = sm.mpmath.quad(integrand, [Omega[0], Omega[1]])

A[i,j] = A[j,i] = I

integrand = phi[i]*f

I = sm.integrate(integrand, (x, Omega[0], Omega[1]))

if isinstance(I, sm.Integral):

integrand = sm.lambdify([x], integrand)

I = sm.mpmath.quad(integrand, [Omega[0], Omega[1]])

b[i,0] = I

c = A.LUsolve(b)

u = 0

for i in range(len(phi)):

u += c[i,0]*phi[i]

return u

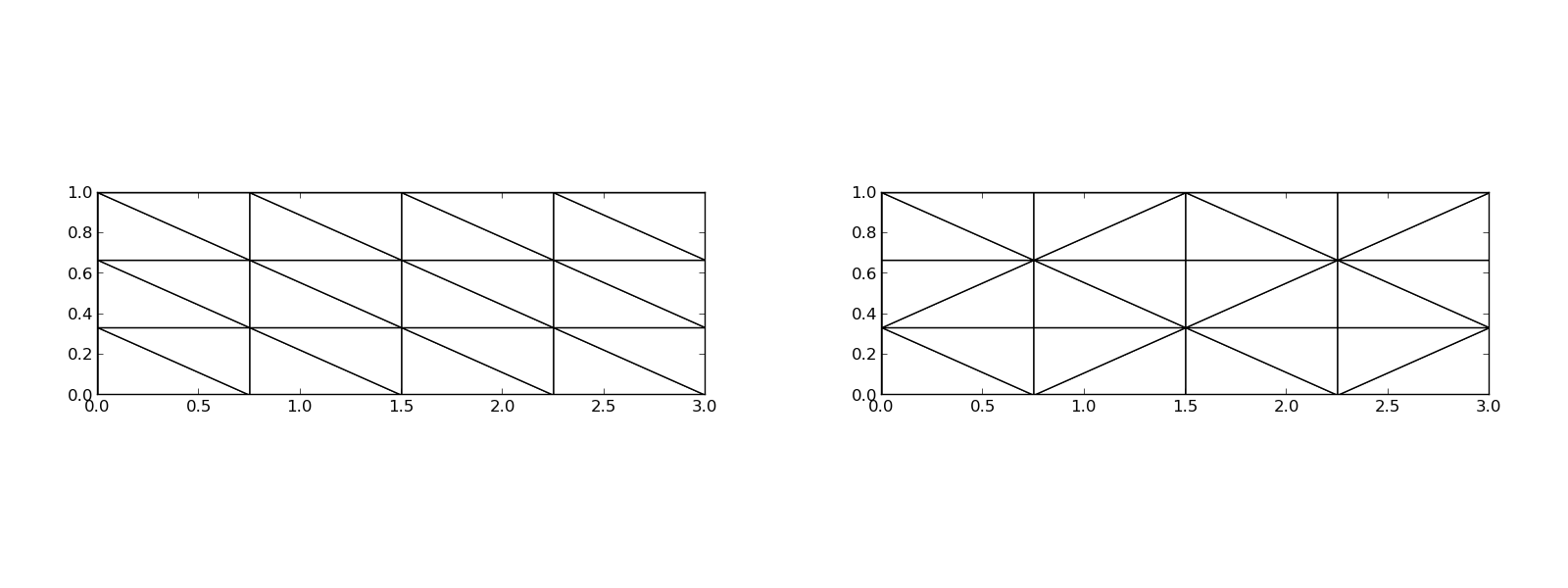

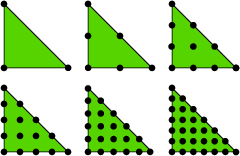

Finite element basis functions¶

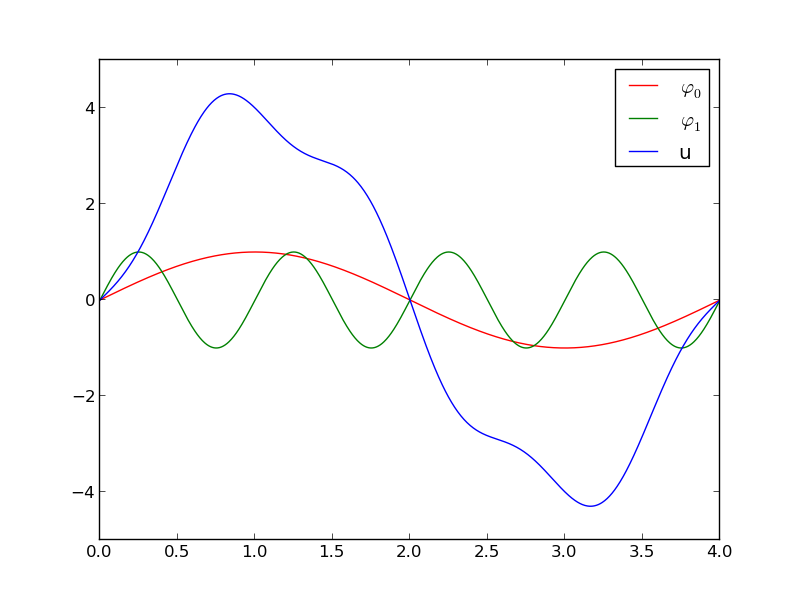

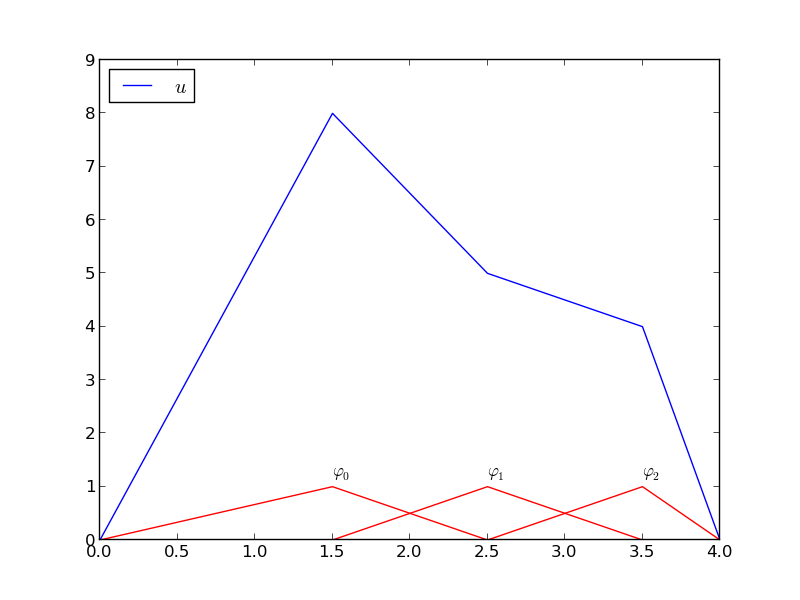

The specific basis functions exemplified in the section Approximation of functions are in general nonzero on the entire domain \(\Omega\), see Figure Approximation based on sine basis functions for an example. We shall now turn the attention to basis functions that have compact support, meaning that they are nonzero on only a small portion of \(\Omega\). Moreover, we shall restrict the functions to be piecewise polynomials. This means that the domain is split into subdomains and the function is a polynomial on one or more subdomains, see Figure Approximation based on local piecewise linear (hat) functions for a sketch involving locally defined hat functions that make \(u=\sum_jc_j{\varphi}_j\) piecewise linear. At the boundaries between subdomains one normally forces continuity of the function only so that when connecting two polynomials from two subdomains, the derivative usually becomes discontinuous. These type of basis functions are fundamental in the finite element method.

Approximation based on sine basis functions

Approximation based on local piecewise linear (hat) functions

We first introduce the concepts of elements and nodes in a simplistic fashion as often met in the literature. Later, we shall generalize the concept of an element, which is a necessary step to treat a wider class of approximations within the family of finite element methods. The generalization is also compatible with the concepts used in the FEniCS finite element software.

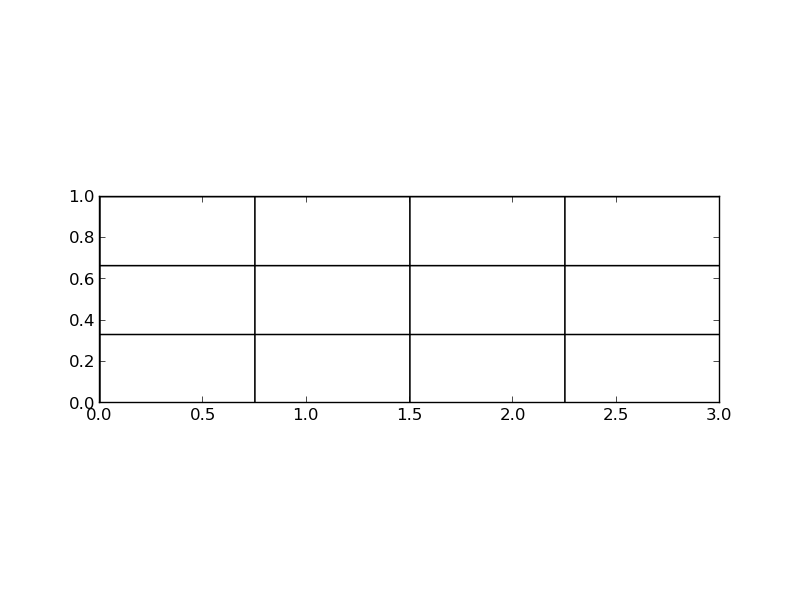

Elements and nodes¶

Let us divide the interval \(\Omega\) on which \(f\) and \(u\) are defined into non-overlapping subintervals \(\Omega^{(e)}\), \(e=0,\ldots,n_e\):

We shall for now refer to \(\Omega^{(e)}\) as an element, having number \(e\). On each element we introduce a set of points called nodes. For now we assume that the nodes are uniformly spaced throughout the element and that the boundary points of the elements are also nodes. The nodes are given numbers both within an element and in the global domain. These are referred to as local and global node numbers, respectively.

Nodes and elements uniquely define a finite element mesh, which is our discrete representation of the domain in the computations. .. index:: finite element mesh

A common special case is that of a uniformly partitioned mesh where each element has the same length and the distance between nodes is constant.

Example (2)¶

On \(\Omega =[0,1]\) we may introduce two elements, \(\Omega^{(0)}=[0,0.4]\) and \(\Omega^{(1)}=[0.4,1]\). Furthermore, let us introduce three nodes per element, equally spaced within each element. The three nodes in element number 0 are \(x_0=0\), \(x_1=0.2\), and \(x_2=0.4\). The local and global node numbers are here equal. In element number 1, we have the local nodes \(x_0=0.4\), \(x_1=0.7\), and \(x_2=1\) and the corresponding global nodes \(x_2=0.4\), \(x_3=0.7\), and \(x_4=1\). Note that the global node \(x_2=0.4\) is shared by the two elements.

For the purpose of implementation, we introduce two lists or arrays: nodes for storing the coordinates of the nodes, with the global node numbers as indices, and elements for holding the global node numbers in each element, with the local node numbers as indices. The nodes and elements lists for the sample mesh above take the form

nodes = [0, 0.2, 0.4, 0.7, 1]

elements = [[0, 1, 2], [2, 3, 4]]

Looking up the coordinate of local node number 2 in element 1 is here done by nodes[elements[1][2]] (recall that nodes and elements start their numbering at 0).

The basis functions¶

Construction principles¶

Standard finite element basis functions are now defined as follows. Let \(i\) be the global node number corresponding to local node \(r\) in element number \(e\).

- If local node number \(r\) is not on the boundary of the element, take \({\varphi}_i(x)\) to be the Lagrange polynomial that is 1 at the local node number \(r\) and zero at all other nodes in the element. On all other elements, \({\varphi}_i=0\).

- If local node number \(r\) is on the boundary of the element, let \({\varphi}_i\) be made up of the Lagrange polynomial that is 1 at this node in element number \(e\) and its neighboring element. On all other elements, \({\varphi}_i=0\).

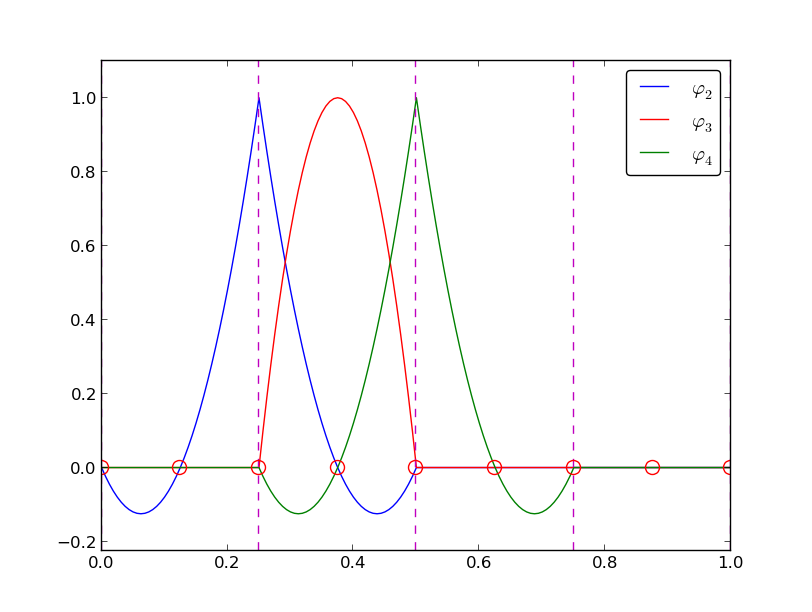

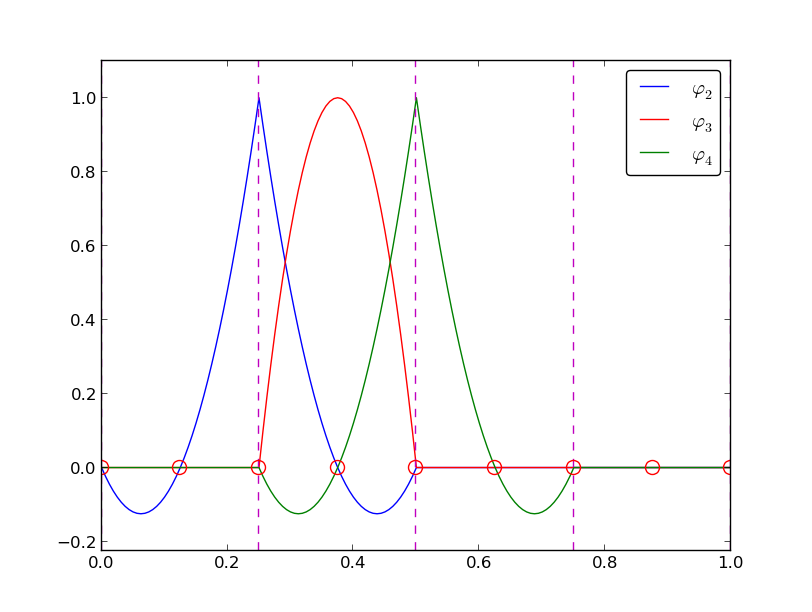

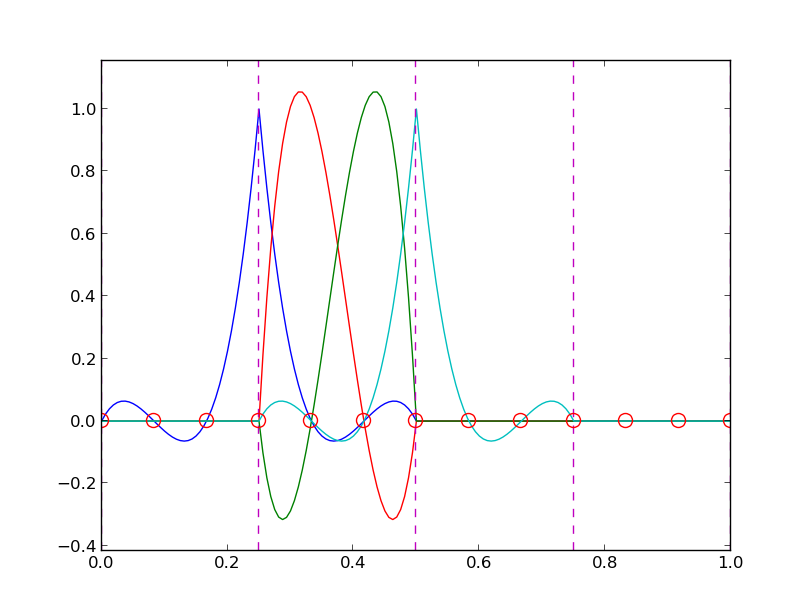

A visual impression of three such basis functions are given in Figure fem:approx:fe:fig:P2. Sometimes we refer to a Lagrange polynomial on an element \(e\), which means the basis function \({\varphi}_i(x)\) when \(x\in\Omega^{(e)}\), and \({\varphi}_i(x)=0\) when \(x\notin\Omega^{(e)}\).

Illustration of the piecewise quadratic basis functions associated with nodes in element 1

Properties of \({\varphi}_i\)¶

The construction of basis functions according to the principles above lead to two important properties of \({\varphi}_i(x)\). First,

when \(x_{j}\) is a node in the mesh with global node number \(j\), because the Lagrange polynomials are constructed to have this property. The property also implies a convenient interpretation of \(c_i\) as the value of \(u\) at node \(i\):

Because of this interpretation, the coefficient \(c_i\) is by many named \(u_i\) or \(U_i\).

Second, \({\varphi}_i(x)\) is mostly zero throughout the domain:

- \({\varphi}_i(x) \neq 0\) only on those elements that contain global node \(i\),

- \({\varphi}_i(x){\varphi}_j(x) \neq 0\) if and only if \(i\) and \(j\) are global node numbers in the same element.

Since \(A_{i,j}\) is the integral of \({\varphi}_i{\varphi}_j\) it means that most of the elements in the coefficient matrix will be zero. We will come back to these properties and use them actively in computations to save memory and CPU time.

We let each element have \(d+1\) nodes, resulting in local Lagrange polynomials of degree \(d\). It is not a requirement to have the same \(d\) value in each element, but for now we will assume so.

Example on quadratic \({\varphi}_i\)¶

Figure fem:approx:fe:fig:P2 illustrates how piecewise quadratic basis functions can look like (\(d=2\)). We work with the domain \(\Omega = [0,1]\) divided into four equal-sized elements, each having three nodes. The nodes and elements lists in this particular example become

nodes = [0, 0.125, 0.25, 0.375, 0.5, 0.625, 0.75, 0.875, 1.0]

elements = [[0, 1, 2], [2, 3, 4], [4, 5, 6], [6, 7, 8]]

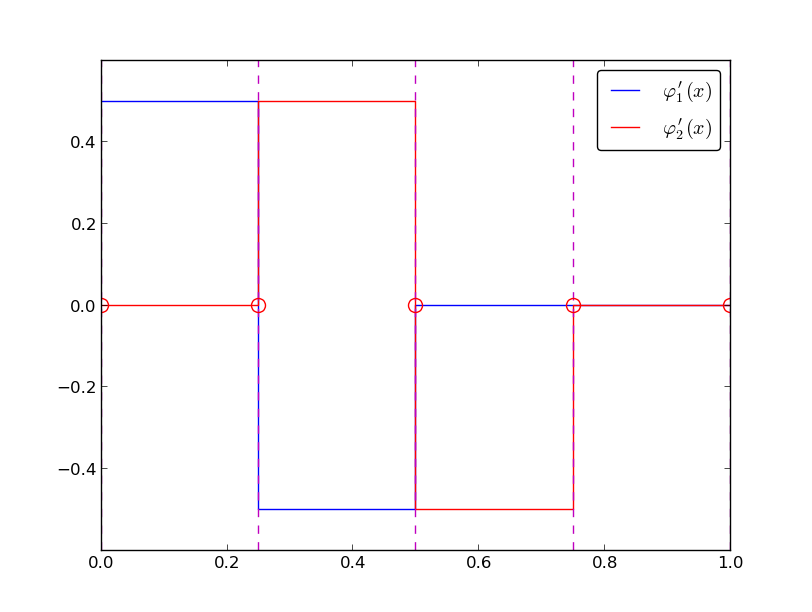

Nodes are marked with circles on the \(x\) axis in the figure, and element boundaries are marked with vertical dashed lines.

Illustration of the piecewise quadratic basis functions associated with nodes in element 1

Let us explain in detail how the basis functions are constructed according to the principles. Consider element number 1 in Figure fem:approx:fe:fig:P2, \(\Omega^{(1)}=[0.25, 0.5]\), with local nodes 0, 1, and 2 corresponding to global nodes 2, 3, and 4. The coordinates of these nodes are \(0.25\), \(0.375\), and \(0.5\), respectively. We define three Lagrange polynomials on this element:

- The polynomial that is 1 at local node 1 (\(x=0.375\), global node 3) makes up the basis function \({\varphi}_3(x)\) over this element, with \({\varphi}_3(x)=0\) outside the element.

- The Lagrange polynomial that is 1 at local node 0 is the “right part” of the global basis function \({\varphi}_2(x)\). The “left part” of \({\varphi}_2(x)\) consists of a Lagrange polynomial associated with local node 2 in the neighboring element \(\Omega^{(0)}=[0, 0.25]\).

- Finally, the polynomial that is 1 at local node 2 (global node 4) is the “left part” of the global basis function \({\varphi}_4(x)\). The “right part” comes from the Lagrange polynomial that is 1 at local node 0 in the neighboring element \(\Omega^{(2)}=[0.5, 0.75]\).

As mentioned earlier, any global basis function \({\varphi}_i(x)\) is zero on elements that do not share the node with global node number \(i\).

The other global functions associated with internal nodes, \({\varphi}_1\), \({\varphi}_5\), and \({\varphi}_7\), are all of the same shape as the drawn \({\varphi}_3\), while the global basis functions associated with shared nodes also have the same shape, provided the elements are of the same length.

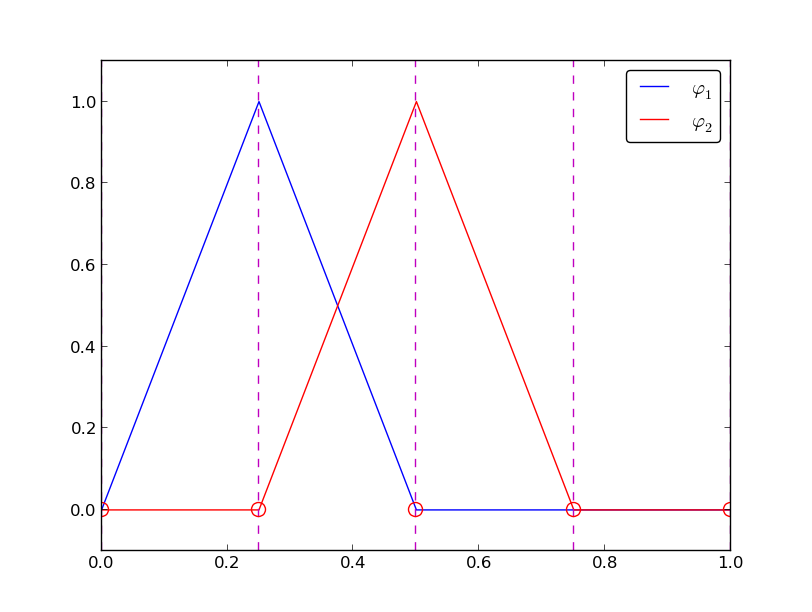

Illustration of the piecewise linear basis functions associated with nodes in element 1

Example on linear \({\varphi}_i\)¶

Figure Illustration of the piecewise linear basis functions associated with nodes in element 1 shows piecewise linear basis functions (\(d=1\)). Also here we have four elements on \(\Omega = [0,1]\). Consider the element \(\Omega^{(1)}=[0.25,0.5]\). Now there are no internal nodes in the elements so that all basis functions are associated with nodes at the element boundaries and hence made up of two Lagrange polynomials from neighboring elements. For example, \({\varphi}_1(x)\) results from the Lagrange polynomial in element 0 that is 1 at local node 1 and 0 at local node 0, combined with the Lagrange polynomial in element 1 that is 1 at local node 0 and 0 at local node 1. The other basis functions are constructed similarly.

Explicit mathematical formulas are needed for \({\varphi}_i(x)\) in computations. In the piecewise linear case, one can show that

Here, \(x_{j}\), \(j=i-1,i,i+1\), denotes the coordinate of node \(j\). For elements of equal length \(h\) the formulas can be simplified to

Example on cubic \({\varphi}_i\)¶

Piecewise cubic basis functions can be defined by introducing four nodes per element. Figure Illustration of the piecewise cubic basis functions associated with nodes in element 1 shows examples on \({\varphi}_i(x)\), \(i=3,4,5,6\), associated with element number 1. Note that \({\varphi}_4\) and \({\varphi}_5\) are nonzero on element number 1, while \({\varphi}_3\) and \({\varphi}_6\) are made up of Lagrange polynomials on two neighboring elements.

Illustration of the piecewise cubic basis functions associated with nodes in element 1

We see that all the piecewise linear basis functions have the same “hat” shape. They are naturally referred to as hat functions, also called chapeau functions. The piecewise quadratic functions in Figure fem:approx:fe:fig:P2 are seen to be of two types. “Rounded hats” associated with internal nodes in the elements and some more “sombrero” shaped hats associated with element boundary nodes. Higher-order basis functions also have hat-like shapes, but the functions have pronounced oscillations in addition, as illustrated in Figure Illustration of the piecewise cubic basis functions associated with nodes in element 1.

A common terminology is to speak about linear elements as elements with two local nodes and where the basis functions are piecewise linear. Similarly, quadratic elements and cubic elements refer to piecewise quadratic or cubic functions over elements with three or four local nodes, respectively. Alternative names, frequently used later, are P1 elements for linear elements, P2 for quadratic elements, and so forth (P$d$ signifies degree \(d\) of the polynomial basis functions).

Calculating the linear system¶

The elements in the coefficient matrix and right-hand side, given by the formulas (?) and (?), will now be calculated for piecewise polynomial basis functions. Consider P1 (piecewise linear) elements. Nodes and elements numbered consecutively from left to right imply the nodes \(x_i=i h\) and the elements

We have in this case \(N\) elements and \(N+1\) nodes, and \(\Omega=[x_{0},x_{N}]\). The formula for \({\varphi}_i(x)\) is given by (20) and a graphical illustration is provided in Figure Illustration of the piecewise linear basis functions associated with nodes in element 1. First we clearly see from Figure Illustration of the piecewise linear basis functions associated with nodes in element 1 that the important property \({\varphi}_i(x){\varphi}_j(x)\neq 0\) if and only if \(j=i-1\), \(j=i\), or \(j=i+1\), or alternatively expressed, if and only if \(i\) and \(j\) are nodes in the same element. Otherwise, \({\varphi}_i\) and \({\varphi}_j\) are too distant to have an overlap and consequently a nonzero product.

The element \(A_{i,i-1}\) in the coefficient matrix can be calculated as

It turns out that \(A_{i,i+1} =h/6\) as well and that \(A_{i,i}=2h/3\). The numbers are modified for \(i=0\) and \(i=N\): \(A_{0,0}=h/3\) and \(A_{N,N}=h/3\). The general formula for the right-hand side becomes

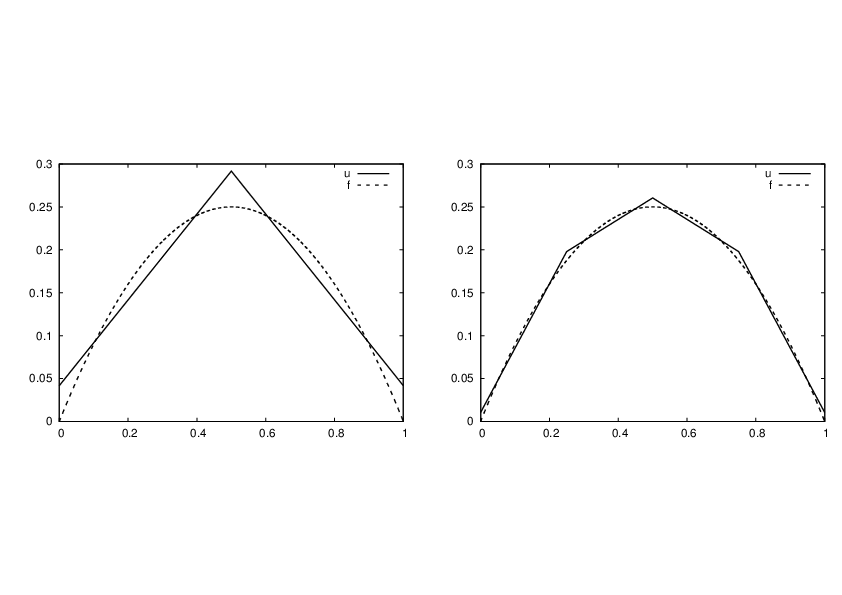

With two equal-sized elements in \(\Omega=[0,1]\) and \(f(x)=x(1-x)\), one gets

The solution becomes

The resulting function

is displayed in Figure Least squares approximation using 2 (left) and 4 (right) P1 elements (left). Doubling the number of elements to four leads to the improved approximation in the right part of Figure Least squares approximation using 2 (left) and 4 (right) P1 elements.

Least squares approximation using 2 (left) and 4 (right) P1 elements

Assembly of elementwise computations¶

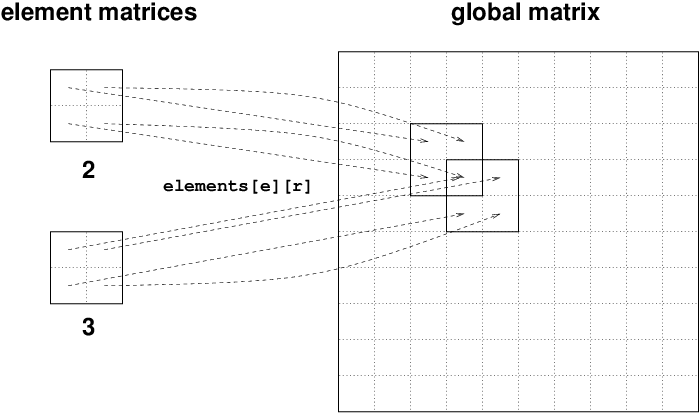

The integrals are naturally split into integrals over individual elements since the formulas change with the elements. This idea of splitting the integral is fundamental in all practical implementations of the finite element method.

Let us split the integral over \(\Omega\) into a sum of contributions from each element:

Now, \(A^{(e)}_{i,j}\neq 0\) if and only if \(i\) and \(j\) are nodes in element \(e\). Introduce \(i=q(e,r)\) as the mapping of local node number \(r\) in element \(e\) to the global node number \(i\). This is just a short mathematical notation for the expression i=elements[e][r] in a program. Let \(r\) and \(s\) be the local node numbers corresponding to the global node numbers \(i=q(e,r)\) and \(j=q(e,s)\). With \(d\) nodes per element, all the nonzero elements in \(A^{(e)}_{i,j}\) arise from the integrals involving basis functions with indices corresponding to the global node numbers in element number \(e\):

These contributions can be collected in a \((d+1)\times (d+1)\) matrix known as the element matrix. We introduce the notation

for the element matrix. For the case \(d=2\) we have

Given the numbers \(\tilde A^{(e)}_{r,s}\), we should according to (22) add the contributions to the global coefficient matrix by

This process of adding in elementwise contributions to the global matrix is called finite element assembly or simply assembly. .. index:: assembly

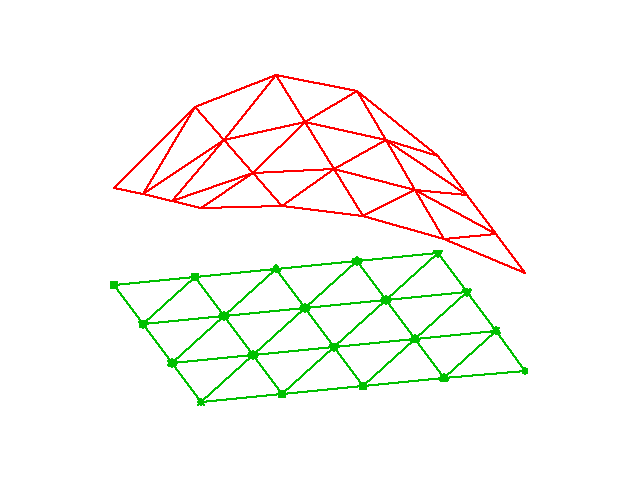

Figure Illustration of matrix assembly illustrates how element matrices for elements with two nodes are added into the global matrix. More specifically, the figure shows how the element matrix associated with elements 2 and 3 assembled, assuming that global nodes are numbered from left to right in the domain.

Illustration of matrix assembly

The right-hand side of the linear system is also computed elementwise:

We observe that \(b_i^{(e)}\neq 0\) if and only if global node \(i\) is a node in element \(e\). With \(d\) nodes per element we can collect the \(d+1\) nonzero contributions \(b_i^{(e)}\), for \(i=q(e,r)\), \(r=0,\ldots,d\), in an element vector

These contributions are added to the global right-hand side by an assembly process similar to that for the element matrices:

Mapping to a reference element¶

Instead of computing the integrals

over some element \(\Omega^{(e)} = [x_L, x_R]\), it is convenient to map the element domain \([x_L, x_R]\) to a standardized reference element domain \([-1,1]\). (We have now introduced \(x_L\) and \(x_R\) as the left and right boundary points of an arbitrary element. With a natural numbering of nodes and elements from left to right through the domain, \(x_L=x_{e}\) and \(x_R=x_{e+1}\).) Let \(X\) be the coordinate in the reference element. A linear or affine mapping from \(X\) to \(x\) reads

This relation can alternatively be expressed by

where we have introduced the element midpoint \(x_m=(x_L+x_R)/2\) and the element length \(h=x_R-x_L\).

Integrating on the reference element is a matter of just changing the integration variable from \(x\) to \(X\). Let

be the basis function associated with local node number \(r\) in the reference element. The integral transformation reads

The stretch factor \(dx/dX\) between the \(x\) and \(X\) coordinates becomes the determinant of the Jacobian matrix of the mapping between the coordinate systems in 2D and 3D. To obtain a uniform notation for 1D, 2D, and 3D problems we therefore replace \(dx/dX\) by \(\det J\) already now. In 1D, \(\det J = dx/dX = h/2\). The integration over the reference element is then written as

The corresponding formula for the element vector entries becomes

Since we from now on will work in the reference element, we need explicit mathematical formulas for the basis functions \({\varphi}_i(x)\) in the reference element only, i.e., we only need to specify formulas for \({\tilde{\varphi}}_r(X)\). This is a very convenient simplification compared to specifying piecewise polynomials in the physical domain.

The \({\tilde{\varphi}}_r(x)\) functions are simply the Lagrange polynomials defined through the local nodes in the reference element. For \(d=1\) and two nodes per element, we have the linear Lagrange polynomials

Quadratic polynomials, \(d=2\), have the formulas

In general,

where \(X_{(0)},\ldots,X_{(d)}\) are the coordinates of the local nodes in the reference element. These are normally uniformly spaced: \(X_{(r)} = -1 + 2r/d\), \(r=0,\ldots,d\).

Integration over a reference element¶

To illustrate the concepts from the previous section in a specific example, we now consider calculation of the element matrix and vector for a specific choice of \(d\) and \(f(x)\). A simple choice is \(d=1\) and \(f(x)=x(1-x)\) on \(\Omega =[0,1]\). We have the general expressions (25) and (26) for \(\tilde A^{(e)}_{r,s}\) and \(\tilde b^{(e)}_{r}\). Writing these out for the choices (?) and (?), and using that \(\det J = h/2\), we get

In the last two expressions we have used the element midpoint \(x_m\).

Integration of lower-degree polynomials above is tedious, and higher-degree polynomials that very much more algebra, but sympy may help. For example,

>>> import sympy as sm

>>> x, x_m, h, X = sm.symbols('x x_m h X')

>>> sm.integrate(h/8*(1-X)**2, (X, -1, 1))

h/3

>>> sm.integrate(h/8*(1+X)*(1-X), (X, -1, 1))

h/6

>>> x = x_m + h/2*X

>>> b_0 = sm.integrate(h/4*x*(1-x)*(1-X), (X, -1, 1))

>>> print b_0

-h**3/24 + h**2*x_m/6 - h**2/12 - h*x_m**2/2 + h*x_m/2

For inclusion of formulas in documents (like the present one), sympy can print expressions in LaTeX format:

>>> print sm.latex(b_0, mode='plain')

- \frac{1}{24} h^{3} + \frac{1}{6} h^{2} x_{m}

- \frac{1}{12} h^{2} - \frac{1}{2} h x_{m}^{2}

+ \frac{1}{2} h x_{m}

Implementation (1)¶

Based on the experience from the previous example, it makes sense to write some code to automate the integration process for any choice of finite element basis functions. In addition, we can automate the assembly process and linear system solution. Appropriate functions for this purpose document all details of all steps in the finite element computations and can found in the module file fe_approx1D.py. Some of the functions are explained below.

Integration¶

First we need a Python function for defining \({\tilde{\varphi}}_r(X)\) in terms of a Lagrange polynomial of degree d:

import sympy as sm

import numpy as np

def phi_r(r, X, d):

if isinstance(X, sm.Symbol):

h = sm.Rational(1, d) # node spacing

nodes = [2*i*h - 1 for i in range(d+1)]

else:

# assume X is numeric: use floats for nodes

nodes = np.linspace(-1, 1, d+1)

return Lagrange_polynomial(X, r, nodes)

def Lagrange_polynomial(x, i, points):

p = 1

for k in range(len(points)):

if k != i:

p *= (x - points[k])/(points[i] - points[k])

return p

Observe how we construct the phi_r function to be a symbolic expression for \({\tilde{\varphi}}_r(X)\) if X is a Symbol object from sympy. Otherwise, we assume that X is a float object and compute the corresponding floating-point value of \({\tilde{\varphi}}_r(X)\). The Lagrange_polynomial function, copied here from the section Fourier series, works with both symbolic and numeric x and points variables.

The complete basis \({\tilde{\varphi}}_0(X),\ldots,{\tilde{\varphi}}_d(X)\) on the reference element is constructed by

def basis(d=1):

X = sm.Symbol('X')

phi = [phi_r(r, X, d) for r in range(d+1)]

return phi

Now we are in a position to write the function for computing the element matrix:

def element_matrix(phi, Omega_e, symbolic=True):

n = len(phi)

A_e = sm.zeros((n, n))

X = sm.Symbol('X')

if symbolic:

h = sm.Symbol('h')

else:

h = Omega_e[1] - Omega_e[0]

detJ = h/2 # dx/dX

for r in range(n):

for s in range(r, n):

A_e[r,s] = sm.integrate(phi[r]*phi[s]*detJ, (X, -1, 1))

A_e[s,r] = A_e[r,s]

return A_e

In the symbolic case (symbolic is True), we introduce the element length as a symbol h in the computations. Otherwise, the real numerical value of the element interval Omega_e is used and the final matrix elements are numbers, not symbols. This functionality can be demonstrated:

>>> from fe_approx1D import *

>>> phi = basis(d=1)

>>> phi

[1/2 - X/2, 1/2 + X/2]

>>> element_matrix(phi, Omega_e=[0.1, 0.2], symbolic=True)

[h/3, h/6]

[h/6, h/3]

>>> element_matrix(phi, Omega_e=[0.1, 0.2], symbolic=False)

[0.0333333333333333, 0.0166666666666667]

[0.0166666666666667, 0.0333333333333333]

The computation of the element vector is done by a similar procedure:

def element_vector(f, phi, Omega_e, symbolic=True):

n = len(phi)

b_e = sm.zeros((n, 1))

# Make f a function of X

X = sm.Symbol('X')

if symbolic:

h = sm.Symbol('h')

else:

h = Omega_e[1] - Omega_e[0]

x = (Omega_e[0] + Omega_e[1])/2 + h/2*X # mapping

f = f.subs('x', x) # substitute mapping formula for x

detJ = h/2 # dx/dX

for r in range(n):

b_e[r] = sm.integrate(f*phi[r]*detJ, (X, -1, 1))

return b_e

Here we need to replace the symbol x in the expression for f by the mapping formula such that f contains the variable X.

The integration in the element matrix function involves only products of polynomials, which sympy can easily deal with, but for the right-hand side sympy may face difficulties with certain types of expressions f. The result of the integral is then an Integral object and not a number as when symbolic integration is successful. It may therefore be wise to introduce a fallback on numerical integration. The symbolic integration can also take much time before an unsuccessful conclusion so we may introduce a parameter symbolic and set it to False to avoid symbolic integration:

def element_vector(f, phi, Omega_e, symbolic=True):

...

if symbolic:

I = sm.integrate(f*phi[r]*detJ, (X, -1, 1))

if not symbolic or isinstance(I, sm.Integral):

h = Omega_e[1] - Omega_e[0] # Ensure h is numerical

detJ = h/2

integrand = sm.lambdify([X], f*phi[r]*detJ)

I = sm.mpmath.quad(integrand, [-1, 1])

b_e[r] = I

...

Successful numerical integration requires that the symbolic integrand is converted to a plain Python function (integrand) and that the element length h is a real number.

Linear system assembly and solution¶

The complete algorithm for computing and assembling the elementwise contributions takes the following form

def assemble(nodes, elements, phi, f, symbolic=True):

n_n, n_e = len(nodes), len(elements)

if symbolic:

A = sm.zeros((n_n, n_n))

b = sm.zeros((n_n, 1)) # note: (n_n, 1) matrix

else:

A = np.zeros((n_n, n_n))

b = np.zeros(n_n)

for e in range(n_e):

Omega_e = [nodes[elements[e][0]], nodes[elements[e][-1]]]

A_e = element_matrix(phi, Omega_e, symbolic)

b_e = element_vector(f, phi, Omega_e, symbolic)

for r in range(len(elements[e])):

for s in range(len(elements[e])):

A[elements[e][r],elements[e][s]] += A_e[r,s]

b[elements[e][r]] += b_e[r]

return A, b

The nodes and elements variables represent the finite element mesh as explained earlier.

Given the coefficient matrix A and the right-hand side b, we can compute the coefficients \(c_0,\ldots,c_N\) in the expansion \(u(x)=\sum_jc_j{\varphi}_j\) as the solution vector c of the linear system:

if symbolic:

c = A.LUsolve(b)

else:

c = np.linalg.solve(A, b)

When A and b are sympy arrays, solution procedure implied by A.LUsolve is symbolic, otherwise, when A and b are numpy arrays, a standard numerical solver is called. The symbolic version is suited for small problems only (small \(N\) values) since the calculation time becomes prohibitively large otherwise. Normally, the symbolic integration will be more time consuming in small problems than the symbolic solution of the linear system.

Example on computing approximations¶

We can exemplify the use of assemble on the computational case from the section Calculating the linear system with two P1 elements (linear basis functions) on the domain \(\Omega=[0,1]\). Let us first work with a symbolic element length:

>>> h, x = sm.symbols('h x')

>>> nodes = [0, h, 2*h]

>>> elements = [[0, 1], [1, 2]]

>>> phi = basis(d=1)

>>> f = x*(1-x)

>>> A, b = assemble(nodes, elements, phi, f, symbolic=True)

>>> A

[h/3, h/6, 0]

[h/6, 2*h/3, h/6]

[ 0, h/6, h/3]

>>> b

[ h**2/6 - h**3/12]

[ h**2 - 7*h**3/6]

[5*h**2/6 - 17*h**3/12]

>>> c = A.LUsolve(b)

>>> c

[ h**2/6]

[12*(7*h**2/12 - 35*h**3/72)/(7*h)]

[ 7*(4*h**2/7 - 23*h**3/21)/(2*h)]

We may, for comparison, compute the c vector for an interpolation/collocation method, taking the nodes as collocation points. This is carried out by evaluating f numerically at the nodes:

>>> fn = sm.lambdify([x], f)

>>> c = [fn(xc) for xc in nodes]

>>> c

[0, h*(1 - h), 2*h*(1 - 2*h)]

The corresponding numerical computations, as done by sympy and still based on symbolic integration, goes as follows:

>>> nodes = [0, 0.5, 1]

>>> elements = [[0, 1], [1, 2]]

>>> phi = basis(d=1)

>>> x = sm.Symbol('x')

>>> f = x*(1-x)

>>> A, b = assemble(nodes, elements, phi, f, symbolic=False)

>>> A

[ 0.166666666666667, 0.0833333333333333, 0]

[0.0833333333333333, 0.333333333333333, 0.0833333333333333]

[ 0, 0.0833333333333333, 0.166666666666667]

>>> b

[ 0.03125]

[0.104166666666667]

[ 0.03125]

>>> c = A.LUsolve(b)

>>> c

[0.0416666666666666]

[ 0.291666666666667]

[0.0416666666666666]

The fe_approx1D module contains functions for generating the nodes and elements lists for equal-sized elements with any number of nodes per element. The coordinates in nodes can be expressed either through the element length symbol h or by real numbers. There is also a function

def approximate(f, symbolic=False, d=1, n_e=4, filename='tmp.pdf'):

which computes a mesh with n_e elements, basis functions of degree d, and approximates a given symbolic expression f by a finite element expansion \(u(x) = \sum_jc_j{\varphi}_j(x)\). When symbolic is False, \(u(x)\) can be computed at a (large) number of points and plotted together with \(f(x)\). The construction of \(u\) points from the solution vector c is done elementwise by evaluating \(\sum_rc_r{\tilde{\varphi}}_r(X)\) at a (large) number of points in each element, and the discrete \((x,u)\) values on each elements are stored in arrays that are finally concatenated to form global arrays with the \(x\) and \(u\) coordinates for plotting. The details are found in the u_glob function in fe_approx1D.py.

The structure of the coefficient matrix¶

Let us first see how the global matrix looks like if we assemble symbolic element matrices, expressed in terms of h, from several elements:

>>> d=1; n_e=8; Omega=[0,1] # 8 linear elements on [0,1]

>>> phi = basis(d)

>>> f = x*(1-x)

>>> nodes, elements = mesh_symbolic(n_e, d, Omega)

>>> A, b = assemble(nodes, elements, phi, f, symbolic=True)

>>> A

[h/3, h/6, 0, 0, 0, 0, 0, 0, 0]

[h/6, 2*h/3, h/6, 0, 0, 0, 0, 0, 0]

[ 0, h/6, 2*h/3, h/6, 0, 0, 0, 0, 0]

[ 0, 0, h/6, 2*h/3, h/6, 0, 0, 0, 0]

[ 0, 0, 0, h/6, 2*h/3, h/6, 0, 0, 0]

[ 0, 0, 0, 0, h/6, 2*h/3, h/6, 0, 0]

[ 0, 0, 0, 0, 0, h/6, 2*h/3, h/6, 0]

[ 0, 0, 0, 0, 0, 0, h/6, 2*h/3, h/6]

[ 0, 0, 0, 0, 0, 0, 0, h/6, h/3]

(The reader is encouraged to assemble the element matrices by hand and verify this result, as this exercise will give a hands-on understanding of what the assembly is about.) In general we have a coefficient matrix that is tridiagonal:

The structure of the right-hand side is more difficult to reveal since it involves an assembly of elementwise integrals of \(f(x(X)){\tilde{\varphi}}_r(X)h/2\), which obviously depend on the particular choice of \(f(x)\). It is easier to look at the integration in \(x\) coordinates, which gives the general formula (21). For equal-sized elements of length \(h\), we can apply the Trapezoidal rule at the global node points to arrive at a somewhat more specific expression than (21):

The reason for this simple formula is simply that \(\phi_i\) is either 0 or 1 at the nodes and 0 at all but one of them.

Going to P2 elements (d=2) leads to the element matrix

and the following global assembled matrix from four elements:

In general, for \(i\) odd we have the nonzeroes

multiplied by \(h/30\), and for \(i\) even we have the nonzeros

multiplied by \(h/30\). The rows with odd numbers correspond to nodes at the element boundaries and get contributions from two neighboring elements in the assembly process, while the even numbered rows correspond to internal nodes in the elements where the only one element contributes to the values in the global matrix.

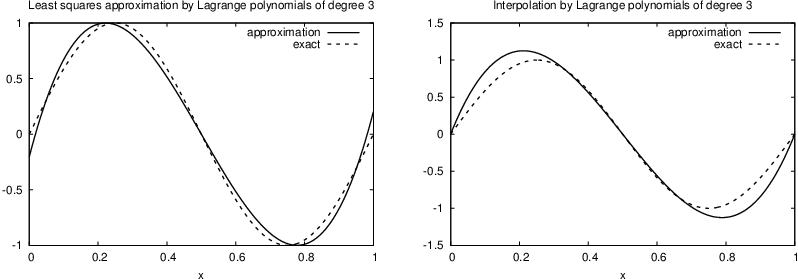

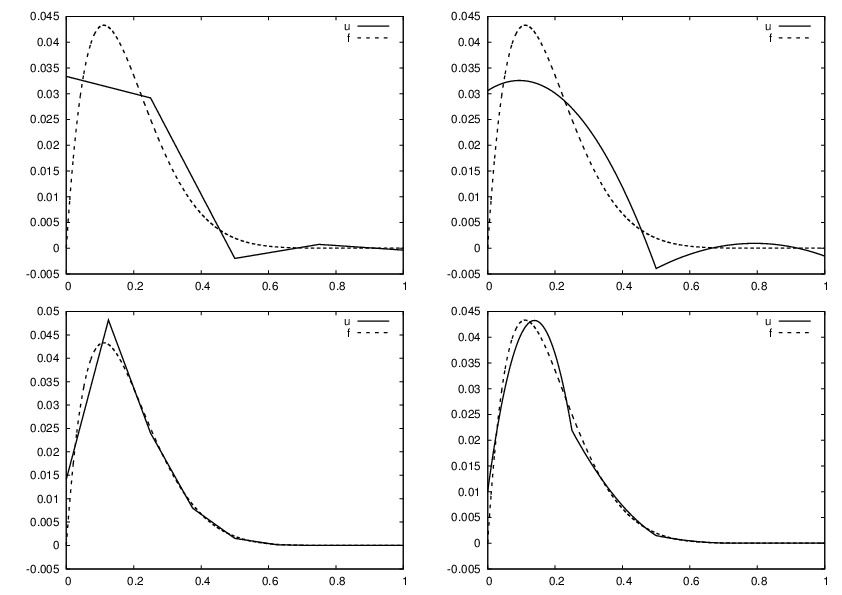

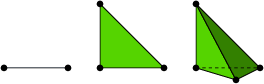

Applications¶

With the aid of the approximate function in the fe_approx1D module we can easily investigate the quality of various finite element approximations to some given functions. Figure Comparison of the finite element approximations: 4 P1 elements with 5 nodes (upper left), 2 P2 elements with 5 nodes (upper right), 8 P1 elements with 9 nodes (lower left), and 4 P2 elements with 9 nodes (lower right) shows how linear and quadratic elements approximates the polynomial \(f(x)=x(1-x)^8\) on \(\Omega =[0,1]\), using equal-sized elements. The results arise from the program

import sympy as sm

from fe_approx1D import approximate

x = sm.Symbol('x')

approximate(f=x*(1-x)**8, symbolic=False, d=1, n_e=4)

approximate(f=x*(1-x)**8, symbolic=False, d=2, n_e=2)

approximate(f=x*(1-x)**8, symbolic=False, d=1, n_e=8)

approximate(f=x*(1-x)**8, symbolic=False, d=2, n_e=4)

The quadratic functions are seen to be better than the linear ones for the same value of \(N\), as we increase \(N\). This observation has some generality: higher degree is not necessarily better on a coarse mesh, but it is as we refined the mesh.

Comparison of the finite element approximations: 4 P1 elements with 5 nodes (upper left), 2 P2 elements with 5 nodes (upper right), 8 P1 elements with 9 nodes (lower left), and 4 P2 elements with 9 nodes (lower right)

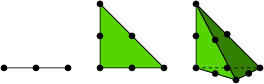

Sparse matrix storage and solution¶

Some of the examples in the preceding section took several minutes to compute, even on small meshes consisting of up to eight elements. The main explanation for slow computations is unsuccessful symbolic integration: sympy may use a lot of energy on integrals like \(\int f(x(X)){\tilde{\varphi}}_r(X)h/2 dx\) before giving up, and the program resorts to numerical integration. Codes that can deal with a large number of basis functions and accept flexible choices of \(f(x)\) should compute all integrals numerically and replace the matrix objects from sympy by the far more efficient array objects from numpy.

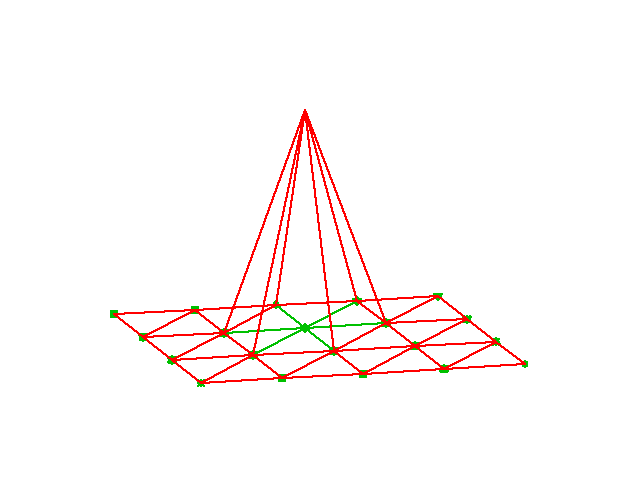

A matrix whose majority of entries are zeros, are known as a sparse matrix. We know beforehand that matrices from finite element approximations are sparse. The sparsity should be utilized in software as it dramatically decreases the storage demands and the CPU-time needed to compute the solution of the linear system. This optimization is not critical in 1D problems where modern computers can afford computing with all the zeros in the complete square matrix, but in 2D and especially in 3D, sparse matrices are fundamental for feasible finite element computations.

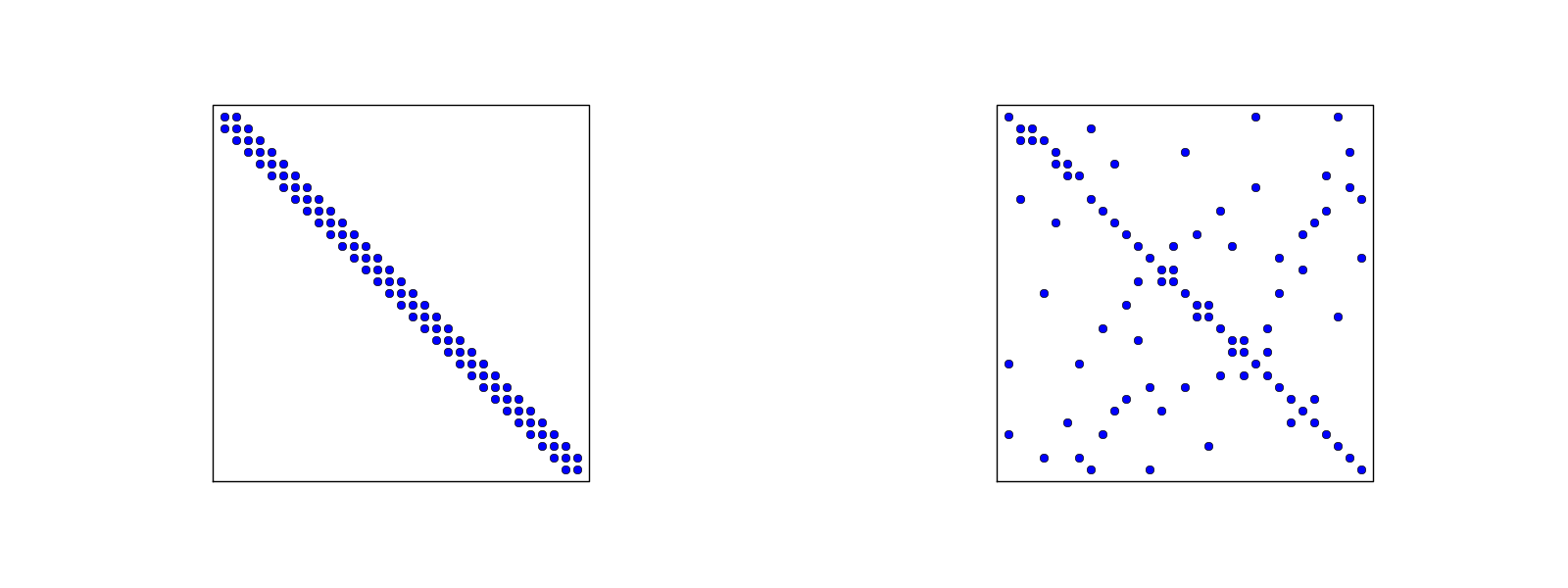

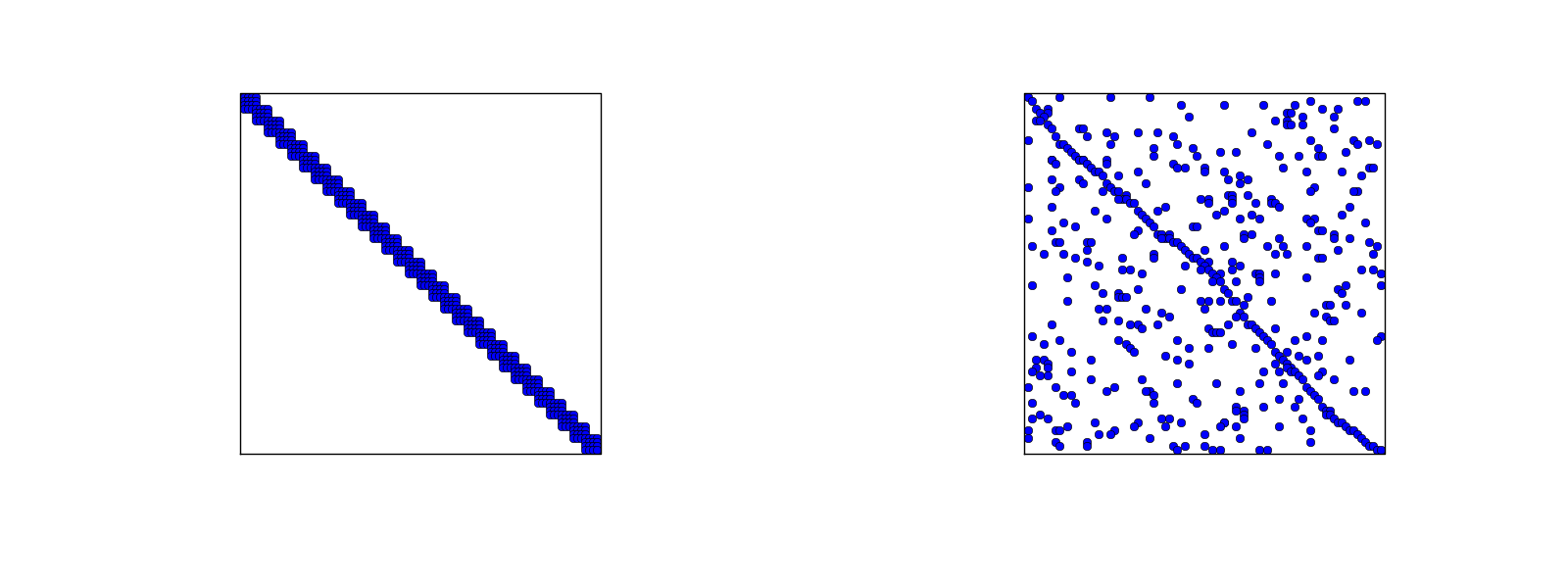

For one-dimensional finite element approximation problems, using a numbering of nodes and elements from left to right over the domain, the assembled coefficient matrix has only a few diagonals different from zero. More precisely, \(2d+1\) diagonals are different from zero. With a different numbering of global nodes, say a random ordering, the diagonal structure is lost, but the number of nonzero elements is unaltered. Figures Matrix sparsity pattern for left-to-right numbering (left) and random numbering (right) of nodes in P1 elements and Matrix sparsity pattern for left-to-right numbering (left) and random numbering (right) of nodes in P3 elements exemplifies sparsity patterns.

Matrix sparsity pattern for left-to-right numbering (left) and random numbering (right) of nodes in P1 elements

Matrix sparsity pattern for left-to-right numbering (left) and random numbering (right) of nodes in P3 elements

The scipy.sparse library supports creation of sparse matrices and linear system solution.

- scipy.sparse.diags for matrix defined via diagonals

- scipy.sparse.lil_matrix for creation via setting elements

- scipy.sparse.dok_matrix for creation via setting elements

Examples to come....

Comparison of finite element and finite difference approximation¶

The previous sections on approximating \(f\) by a finite element function \(u\) utilize the projection/Galerkin or least squares approaches to minimize the approximation error. We may, alternatively, use the collocation/interpolation method. Here we shall compare these three approaches with what one does in the finite difference method when representing a given function on a mesh.

Collocation or interpolation¶

Let \(x_{i}\), \(i=0,\ldots,N\), be the nodes in the mesh. Collocation means

which translates to

but \({\varphi}_j(x_{i})=0\) if \(i\neq j\) so the sum collapses to one term \(c_i{\varphi}_i(x_{i}) = c_i\), and we have the result

That is, \(u\) interpolates \(f\) at the node points (the values coincide at these points, but the variation between the points is dictated by the type of polynomials used in the expansion for \(u\)). The collocation/interpolation approach is obviously much simpler and faster to use than the least squares or projection/Galerkin approach.

Remark. When dealing with approximation of functions via finite elements, all the three methods are in use, while the least squares and collocation methods are used to only a small extend when solving differential equations.

Finite difference approximation of given functions¶

Approximating a given function \(f(x)\) on a mesh in a finite difference context will typically just sample \(f\) at the grid points. That is, the discrete version of \(f(x)\) is the set of point values \(f(x_i)\), \(i=0,\ldots,N\), where \(x_i\) denotes a mesh point. The collocation/interpolation method above gives exactly the same representation.

How does a finite element Galerkin or least squares approximation differ from this straightforward interpolation of \(f\)? This is the question to be addressed next.

Finite difference interpretation of a finite element approximation¶

We now limit the scope to P1 elements since this is the element type that gives formulas closest to what one gets from the finite difference method.

The linear system arising from a Galerkin or least squares approximation reads

For P1 elements and a uniform mesh of element length \(h\) we have calculated the matrix with entries \(({\varphi}_i,{\varphi}_j)\) in the section Calculating the linear system. Equation number \(i\) reads

The finite difference counterpart of this equation is just \(u_i=f_i\). (The first and last equation, corresponding to \(i=0\) and \(i=N\) are slightly different, see the section The structure of the coefficient matrix.)

The left-hand side of (27) can be manipulated to equal

Thinking in terms of finite differences, this is nothing but the standard discretization of

or written as

with difference operators.

Before interpreting the approximation procedure as solving a differential equation, we need to work out what the right-hand side is in the context of P1 elements. Since \({\varphi}_i\) is the linear function that is 1 at \(x_{i}\) and zero at all other nodes, only the interval \([x_{i-1},x_{i+1}]\) contributes to the integral on the right-hand side. This integral is naturally split into two parts according to (20):

However, if \(f\) is not known we cannot do much else with this expression. It is clear that many values of \(f\) around \(x_{i}\) contributes to the right-hand side, not just the single point value \(f(x_{i})\) as in the finite difference method.

To proceed with the right-hand side, we turn to numerical integration schemes. Let us say we use the Trapezoidal method for \((f,{\varphi}_i)\), based on the node points \(x_{i}=i h\):

Since \({\varphi}_i\) is zero at all these points, except at \(x_{i}\), the Trapezoidal rule collapses to one term:

for \(i=1,\ldots,N-1\), which is the same result as with collocation/interpolation, and of course the same result as in the finite difference method. For \(i=0\) and \(i=N\) we get contribution from only one element so

Turning to Simpson’s rule with sample points also in the middle of the elements, \(x_i=i h/2\), \(i=0,\ldots,2N\), it reads in general

where \(\tilde h = x_i - x_{i-1}= h/2\) is the spacing between the sample points. We see that the midpoints with odd numbers have the weight \(2h/3\) while the node points with even numbers have the weight \(h/3\). Since \({\varphi}_i=0\) at the even numbers, except for \(x_{2i}=x_{i}\), and \({\varphi}_i=0\) at all the midpoints, on the midpoints and \(4h/3\) on the node points. Since \({\varphi}_i\) vanishes at all the node points, except \(\xi{i}\), and except \(x_{2i-1}=\xi{i}-h/2\) and \(x_{2i+1}=\xi{i}+h/2\), where \({\varphi}_i=1/2\), we get

In a finite difference context we would typically express this formula as

This shows that, with Simpson’s rule, the finite element method operates with the average of \(f\) over three points, while the finite difference method just applies \(f\) at one point. We may interpret this as a “smearing” or smoothing of \(f\) by the finite element method.

We can now summarize our findings. With the approximation of \((f,{\varphi}_i)\) by the Trapezoidal rule, P1 elements give rise to equations that can be expressed as a finite difference discretization of

expressed with operator notation as

As \(h\rightarrow 0\), the extra term proportional to \(u''\) goes to zero, and the two methods are then equal.

With the Simpson’s rule, we may say that we solve

where \(\bar f_i\) means the average \(\frac{1}{3}(f_{i-1/2} + f_i + f_{i+1/2})\).